the Creative Commons Attribution 4.0 License.


Atmospheric particulate matter characterization by Fourier transform infrared spectroscopy: a review of statistical calibration strategies for carbonaceous aerosol quantification in US measurement networks

Satoshi Takahama

Ann M. Dillner

Andrew T. Weakley

Matteo Reggente

Charlotte Bürki

Mária Lbadaoui-Darvas

Bruno Debus

Adele Kuzmiakova

Anthony S. Wexler

Atmospheric particulate matter (PM) is a complex mixture of many different substances and requires a suite of instruments for chemical characterization. Fourier transform infrared (FT-IR) spectroscopy is a technique that can quantify multiple species, provided that accurate calibration models can be constructed to interpret the acquired spectra. In this capacity, FT-IR spectroscopy has enjoyed a long history in monitoring gas-phase constituents in the atmosphere and in stack emissions. However, application to PM poses a different set of challenges, because condensed-phase spectra have broad, overlapping absorption peaks and contain contributions from scattering. Past approaches have used laboratory standards to build calibration models for inorganic substances or organic functional groups and have predicted their concentrations in atmospheric PM mixtures by extrapolation.

In this work, we review recent studies pursuing an alternate strategy, which is to build statistical calibration models for mid-IR spectra of PM using collocated ambient measurements. This synthesis focuses on calibrations with organic carbon (OC) and elemental carbon (EC) reported from thermal–optical reflectance (TOR). It consolidates our knowledge of how FT-IR spectroscopy can be extended to provide TOR-equivalent OC and EC measurements for new PM samples when TOR measurements are not available. We summarize methods for model specification, calibration sample selection, and model evaluation for these substances at several sites in two US national monitoring networks: seven sites in the Interagency Monitoring of Protected Visual Environments (IMPROVE) network for the year 2011 and 10 sites in the Chemical Speciation Network (CSN) for the year 2013. We then describe application of the model in an operational context for the IMPROVE network for samples collected in 2013 at six of the same sites as in 2011 and 11 additional sites. In addition to extending the evaluation to samples from a different year and different sites, we describe strategies for anticipating errors arising from the imprecision and bias of the calibration model, so that model applicability to new spectra can be assessed a priori. We conclude with a discussion regarding past work and future strategies for recalibration. Beyond numerical accuracy, we encourage model interpretation: the mid-IR vibrational modes deemed most informative for calibration can shed light on the structural composition underlying the operationally defined TOR OC and EC. The paper is structured such that the life cycle of a statistical calibration model for FT-IR spectroscopy can be envisioned for any substance with IR-active vibrational modes, and more generally for instruments requiring ambient calibrations.


Airborne particles are made of inorganic salts, organic compounds, mineral dust, black carbon (BC), trace elements, and water (Seinfeld and Pandis, 2016). While regulatory limits on airborne particulate matter (PM) concentrations are set by gravimetric mass determination, analysis of chemical composition is desired as it provides insight into source contributions, facilitates evaluation of chemical simulations, and strengthens links between particle constituents and health and environmental impacts. However, the diversity of molecular constituents poses challenges for characterization as no single instrument can measure all relevant properties; an amalgam of analytical techniques is often required for comprehensive measurement (Hallquist et al., 2009; Kulkarni et al., 2011; Pratt and Prather, 2012; Nozière et al., 2015; Laskin et al., 2018). Fourier transform infrared (FT-IR) spectroscopy is one analytical technique that captures the signature of a multitude of PM constituents that give rise to feature-rich spectral patterns over the mid-infrared (mid-IR) wavelengths (Griffiths and Haseth, 2007). In the past decade, mid-IR spectra have been used for quantification of various substances in atmospheric PM and for apportionment of organic matter (OM) into source classes including biomass burning, biogenic aerosol, fossil fuel combustion, and marine aerosol (Russell et al., 2011). The quantitative information regarding the abundance of substances in each spectrum is limited only by the calibration models that can be built for it.

In principle, the extent of frequency-dependent absorption in the mid-IR range accompanying induced changes in the dipole moment of molecular bonds can be used to estimate the quantity of sample constituents in any medium (Griffiths and Haseth, 2007). Based on this principle, FT-IR spectroscopy has a long history in remote and ground-based measurement of chemical composition in the atmospheric vapor phase (Griffith and Jamie, 2006). For ground-based measurement, gases are measured by FT-IR spectroscopy in an open-path in situ configuration (Russwurm and Childers, 2006) or via extractive sampling into a closed multi-pass cell (Spellicy and Webb, 2006). These techniques have been used to sample the following sources:

- urban smog (Pitts et al., 1977; Tuazon et al., 1981; Hanst et al., 1982);
- smog chambers (Akimoto et al., 1980; Pitts et al., 1984; Ofner, 2011);
- biomass burning emissions (Hurst et al., 1994; Yokelson et al., 1997; Christian et al., 2004);
- volcanoes (Oppenheimer and Kyle, 2008);
- fugitive gases (Kirchgessner et al., 1993; Russwurm, 1999; U.S. EPA, 1998).

They have also been used to measure the following quantities:

- emission fluxes (Galle et al., 1994; Griffith and Galle, 2000; Griffith et al., 2002);
- greenhouse gases (Shao and Griffiths, 2010; Hammer et al., 2013; Schütze et al., 2013; Hase et al., 2015);
- isotopic composition (Meier and Notholt, 1996; Flores et al., 2017).

For these applications, quantitative analysis has been conducted using various regression algorithms with standard gases or synthetic calibration spectra, achieving absolute accuracies on the order of 1 %–5 %. Synthetic spectra for calibration are generated from a database of absorption line parameters together with simulation of pressure and Doppler broadening and instrumental effects (Griffith, 1996; Flores et al., 2013).
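The broadening step behind such synthetic gas-phase spectra can be sketched as follows. A Doppler (Gaussian) profile is convolved numerically with a pressure-broadened (Lorentzian) profile to give a Voigt line shape. The CO2-like line position, temperature, and pressure half-width `gamma` are hypothetical values chosen only for illustration. The function names are our own, and operational retrievals use line parameters from spectroscopic databases with far more careful numerics.

```python
import numpy as np

K_B = 1.380649e-23       # Boltzmann constant (J/K)
C_LIGHT = 2.99792458e8   # speed of light (m/s)
AMU = 1.66053906660e-27  # atomic mass unit (kg)


def doppler_sigma(nu0_cm, mass_amu, temp_k):
    """Standard deviation (cm^-1) of the Gaussian Doppler profile of a line at nu0_cm."""
    return nu0_cm * np.sqrt(K_B * temp_k / (mass_amu * AMU * C_LIGHT**2))


def voigt_on_grid(dx, half_width, sigma, gamma):
    """Voigt profile on a uniform grid centered on the line (all arguments in cm^-1).

    The Doppler Gaussian (standard deviation sigma) is convolved numerically with a
    pressure-broadened Lorentzian (half-width at half-maximum gamma).
    """
    x = np.arange(-half_width, half_width + dx / 2, dx)
    gauss = np.exp(-0.5 * (x / sigma) ** 2) / (sigma * np.sqrt(2.0 * np.pi))
    lorentz = (gamma / np.pi) / (x**2 + gamma**2)
    # Multiplying the discrete convolution by dx approximates the convolution integral.
    return x, np.convolve(gauss, lorentz, mode="same") * dx


# Hypothetical CO2-like line near 2350 cm^-1 at 296 K with gamma ~ 0.07 cm^-1 (roughly 1 atm).
dx = 5e-4
sigma = doppler_sigma(2350.0, 44.0, 296.0)
x, voigt = voigt_on_grid(dx, half_width=1.0, sigma=sigma, gamma=0.07)
area = voigt.sum() * dx  # a little below 1 because the Lorentzian wings are truncated at +/-1 cm^-1
```

A synthetic absorbance line would then be this area-normalized profile scaled by the line strength and the absorber column density. These lines are summed over the band and convolved with the instrument line shape.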

1.1 Limits of conventional approaches to calibration

Analysis of FT-IR spectra of condensed-phase systems is more challenging. PM can be found in crystalline solid, amorphous solid, liquid, and semisolid phase states (Virtanen et al., 2010; Koop et al., 2011; Li et al., 2017). Solid- and liquid-phase spectra lack the rotational line structure present in vapor-phase spectra. Instead, inhomogeneous broadening arises from a multitude of local interactions of bonds within the liquid or solid environment (Turrell, 2006; Griffiths and Haseth, 2007; Kelley, 2013). Line shapes are particularly broad in complex mixtures of atmospheric PM, since the resulting spectrum is the superposition of varying resonances for a given type of bond. FT-IR spectroscopy has enjoyed a long history of qualitative analysis of molecular characteristics in multicomponent PM based on discernible peaks in the spectrum (e.g., Mader et al., 1952; Presto et al., 2005; Kidd et al., 2014; Q. Chen et al., 2016). Study of relative composition, or of changes to composition under controlled conditions (e.g., humidification, oxidation), has provided insight into atmospherically relevant aerosol processes (e.g., Cziczo et al., 1997; Gibson et al., 2006; Hung et al., 2013; Zeng et al., 2013). Quantitative prediction of substances in collected PM represents a separate task. It is conventionally pursued by generating laboratory standards and relating observed features to known concentrations. This calibration approach has been predominantly used to characterize ambient and atmospherically relevant particles collected on filters or optical disks. The bulk of past work in aerosol studies has focused on using laboratory standards to build semiempirical calibration models for individual vibrational modes belonging to one of many functional groups present in the mixture. In this approach, the observed absorption is related to a reference measurement (typically gravimetric mass) of the compounds on the substrate.
In this way, calibration of nitrate and sulfate salts (Cunningham et al., 1974; Cunningham and Johnson, 1976; Bogard et al., 1982; McClenny et al., 1985; Krost and McClenny, 1992, 1994; Pollard et al., 1990; Tsai and Kuo, 2006; Reff et al., 2007), silica dust (Foster and Walker, 1984; Weakley et al., 2014; Wei et al., 2017), and organic functional groups (Allen and Palen, 1989; Paulson et al., 1990; Pickle et al., 1990; Mylonas et al., 1991; Palen et al., 1992, 1993; Holes et al., 1997; Blando et al., 1998; Maria et al., 2002, 2003; Sax et al., 2005; Gilardoni et al., 2007; Reff et al., 2007; Coury and Dillner, 2008; Day et al., 2010; Takahama et al., 2013; Faber et al., 2017) has been studied. The reconstructed organic carbon and organic aerosol mass has typically ranged between 70 % and 100 % of that reported by collocated evolved-gas analysis or mass spectrometry measurements (Russell et al., 2009; Corrigan et al., 2013), though several model uncertainties remain. First, unmeasured, nonfunctionalized skeletal carbon can lead to incomplete mass recovery. Second, the detectable fraction must be estimated, given the multiplicity of carbon atoms that can be associated with each type of functional group (Maria et al., 2003; Takahama and Ruggeri, 2017). The central challenge in this type of calibration is extrapolating from the reference composition, which is necessarily kept simple, to that of the chemically complex PM. Spectroscopically, this difference can lead to shifts in absorption intensity or peak locations. It can also cause a general broadening of absorption peaks, because the same functional group appears in many different molecules and in different condensed-phase environments.
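The extrapolation problem can be made concrete with a toy example. A hypothetical laboratory standard has a narrow Gaussian band. A hypothetical ambient-like sample of the same mass has the same integrated absorbance, but spread over a band three times broader, as if the same functional group occupied many chemical environments. A calibration based on peak height from the standard then underestimates the broad sample, whereas one based on integrated area does not. All band parameters are invented. Equal-area Gaussians also ignore the per-bond intensity changes and peak shifts that occur in real PM.

```python
import numpy as np

wn = np.linspace(2700.0, 3200.0, 2001)  # wavenumber grid (cm^-1), 0.25 cm^-1 spacing
step = wn[1] - wn[0]


def band(center, fwhm, area):
    """Gaussian absorption band with a given integrated area (absorbance x cm^-1)."""
    sigma = fwhm / (2.0 * np.sqrt(2.0 * np.log(2.0)))
    return area * np.exp(-0.5 * ((wn - center) / sigma) ** 2) / (sigma * np.sqrt(2.0 * np.pi))


# Hypothetical laboratory standard: narrow band, known mass of 100 (arbitrary units).
std_mass = 100.0
std = band(2920.0, 30.0, area=5.0)
height_per_mass = std.max() / std_mass
area_per_mass = std.sum() * step / std_mass

# Hypothetical ambient sample: same mass and integrated absorbance, three-times-broader band.
amb = band(2920.0, 90.0, area=5.0)
mass_from_height = amb.max() / height_per_mass      # about one third of the true mass
mass_from_area = amb.sum() * step / area_per_mass   # close to the true mass
```
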

Synthetic spectra for condensed-phase systems can be generated by mechanistic and statistical means but are not readily available for quantitative calibration. Absolute intensities are typically even more difficult to simulate accurately than peak frequencies (Gussoni et al., 2006). Some computational models predict the vibrational motion of molecules in isolation, either using quantum mechanical models (Barone et al., 2012) or by harmonic approximation for larger molecules (Weymuth et al., 2012). These models suffer from two shortcomings: poor treatment of anharmonicity and lack of solvent effects in liquid solutions (Thomas et al., 2013). Quantum mechanical simulations can parameterize interactions with an implicitly modeled solvent through a polarizable continuum model framework (Cappelli and Biczysko, 2011). However, they do not adequately represent specific interactions such as hydrogen bonding (Barone et al., 2014). Microsolvation can better describe the hydrogen bonding environment, but its high computational cost prevents application to large systems (Kulkarni et al., 2009). Gaussian dispersion analysis has provided accurate spectrum reconstruction for simple liquids (water–ethanol mixtures) from their calculated dielectric functions (MacDonald and Bureau, 2003) but has not been applied to more complex systems. Molecular dynamics (MD) provides a general framework for addressing interactions with the solvent, large-amplitude motions in flexible molecules, and anharmonicities (Ishiyama and Morita, 2011; Ivanov et al., 2013). Electronic structure calculations relevant for predicting vibrational spectra can be incorporated by ab initio MD (Car and Parrinello, 1985; Marx, 2009; Thomas et al., 2013). They can also be incorporated by path integral MD methods such as centroid or ring polymer MD (Witt et al., 2009; Ceriotti et al., 2016), which additionally consider nuclear quantum effects (at higher computational cost).
Ab initio MD is widely used for simulating the spectra of water and a range of small organic and biological molecules in isolation (Silvestrelli et al., 1997; Aida and Dupuis, 2003; Gaigeot et al., 2007; Gaigeot, 2008; Thomas et al., 2013; Fischer et al., 2016). Such calculations generally reproduce well the shape of experimental spectra measured at very high dilution. However, C–H stretching peaks are known to be shifted towards higher wavenumbers due to the lack of improper hydrogen bonding in vacuum simulations (Thomas et al., 2013). Bulk liquid-phase simulations are limited to a few tens of molecules (a few hundred atoms). They have been performed for liquids including methanol (Thomas et al., 2013), water (Silvestrelli et al., 1997), and aqueous solutions of biomolecules (Gaigeot and Sprik, 2003). These simulations reproduce peak positions and relative intensities sufficiently well compared with experimental spectra, albeit with lower accuracy in peak position at wavenumbers above 2000 cm−1. These methods have also been shown to reproduce the main features of vibrational spectra of solid systems (crystalline ice and naphthalene) (Bernasconi et al., 1998; Putrino and Parrinello, 2002; Pagliai et al., 2008; Rossi et al., 2014b). Nuclear quantum effects are not explicitly accounted for in ab initio MD. These effects become more important for hydrogen-containing systems and have been investigated in vibrational spectra simulations of liquid water and methane (Rossi et al., 2014a, b; Medders and Paesani, 2015; Marsalek and Markland, 2017). A recent approach improves upon the accuracy and speed of ab initio MD by combining a dipole moment model (Gastegger et al., 2017) and potentials (Behler and Parrinello, 2007), both derived from machine learning.
Trained on only several hundred reference electronic structure calculations, this approach simulated spectra of several alkanes and small peptides. The accuracy of these spectra reflected improved treatment of anharmonicities and proton transfer, and computational cost was reduced by 3 orders of magnitude (Gastegger et al., 2017). However, this machine-learned method still inherits some common limitations of the ab initio calculations upon which its models are trained. One example is the apparent blue shift of the C–H stretching peak. This shift is likely due to an insufficient treatment of improper hydrogen bonding or to deficiencies of the electron exchange functional (Thomas et al., 2013). Such methods may be useful in aiding interpretation of environmental spectra (Kubicki and Mueller, 2010; Pedone et al., 2010). However, they are not yet mature enough to reproduce spectra of suitable quality for quantitative calibration or (white-box) inverse modeling.
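The MD spectra cited above are commonly computed by Fourier transforming the time autocorrelation function of the system's dipole moment. The sketch below applies that step to a synthetic dipole time series rather than a real trajectory. The series is built from two cosines at hypothetical mode frequencies of 1700 and 2950 cm−1. The function names are our own. The frequency-dependent prefactors and quantum corrections applied in practice are deliberately omitted, so only peak positions, not absolute intensities, are meaningful here.

```python
import numpy as np

C_CM_PER_FS = 2.99792458e-5  # speed of light in cm per femtosecond


def dipole_autocorrelation(mu):
    """Normalized autocorrelation of a mean-removed dipole time series (via zero-padded FFT)."""
    x = mu - mu.mean()
    n = x.size
    f = np.fft.rfft(x, 2 * n)  # zero padding avoids circular wrap-around
    acf = np.fft.irfft(f * np.conj(f), 2 * n)[:n]
    return acf / acf[0]


def ir_lineshape(mu, dt_fs):
    """Wavenumbers (cm^-1) and classical line shape from the Fourier transform of the dipole ACF."""
    acf = dipole_autocorrelation(mu)
    window = np.hanning(2 * acf.size)[acf.size:]  # decaying half-window damps truncation ripples
    intensity = np.abs(np.fft.rfft(acf * window))
    wavenumber = np.fft.rfftfreq(acf.size, d=dt_fs) / C_CM_PER_FS
    return wavenumber, intensity


# Hypothetical "trajectory": a C=O-like mode at 1700 cm^-1 and a C-H-like mode at 2950 cm^-1.
rng = np.random.default_rng(0)
dt = 0.5  # time step (fs)
t = np.arange(2**14) * dt
mu = (np.cos(2 * np.pi * C_CM_PER_FS * 1700.0 * t)
      + 0.5 * np.cos(2 * np.pi * C_CM_PER_FS * 2950.0 * t)
      + 0.05 * rng.standard_normal(t.size))
wn, spec = ir_lineshape(mu, dt)
```

With 2^14 steps of 0.5 fs, the spectral resolution is about 4 cm−1. Longer trajectories sharpen the peaks.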

Early applications of artificial intelligence to mid-IR spectra interpretation also included efforts to generate synthetic spectra of individual compounds. Mid-IR spectra of new compounds were obtained in three ways. Some were simulated by neural networks trained on three-dimensional molecular descriptors (radial distribution functions) paired with corresponding mid-IR spectra. Others were matched by a similarity (nearest neighbor) search in a structural database, and still others were generated from spectra–structure correlation databases (Dubois et al., 1990; Weigel and Herges, 1996; Baumann and Clerc, 1997; Schuur and Gasteiger, 1997; Selzer et al., 2000; Yao et al., 2001; Gasteiger, 2006). Drawing upon internal or commercial libraries (Barth, 1993), these methods made predictions for condensed-phase compounds with a diverse set of substructures, including methanol, amino acids, ring-structured acids, and substituted benzene derivatives. Many structural features, including peak location, relative peak heights, and peak widths, were reproduced, provided that relevant training samples were available in the library. Much of the work was motivated by pattern matching and classification of spectra of unknown samples (Robb and Munk, 1990; Novic and Zupan, 1995). Another motivation was automating band assignment and identification of the underlying fragments, tasks typically performed by trained spectroscopists (Sasaki et al., 1968; Gribov and Elyashberg, 1970; Christie and Munk, 1988; Munk, 1998; Hemmer, 2007; Elyashberg et al., 2009). This approach has been able to generate spectra for more complex molecules than mechanistic modeling relying on ab initio calculations. However, the extent of evaluation has been limited, and extension to multicomponent mixtures and usefulness for quantitative calibration are currently unknown. While these research fields remain an active part of cheminformatics, we propose another approach for calibration model development that can be used for atmospheric PM analysis.
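The similarity-search variant can be sketched as a k-nearest-neighbor lookup. A new compound's descriptor vector is compared with those of library compounds, and the spectra of the closest matches are averaged. The descriptors and spectra below are random placeholders standing in for, e.g., radial distribution function codes and library spectra. The function name is hypothetical, and inverse-distance weighting is our own simple choice, not the specific scheme of the cited works.

```python
import numpy as np


def predict_spectrum_knn(query_desc, lib_desc, lib_spectra, k=3):
    """Estimate a spectrum as the inverse-distance-weighted mean of the spectra of the
    k library compounds nearest to query_desc in descriptor space (Euclidean distance).
    Returns the estimated spectrum and the indices of the neighbors used."""
    d = np.linalg.norm(lib_desc - query_desc, axis=1)
    idx = np.argsort(d)[:k]
    w = 1.0 / (d[idx] + 1e-12)  # closer neighbors count more; epsilon avoids division by zero
    return (w[:, None] * lib_spectra[idx]).sum(axis=0) / w.sum(), idx


rng = np.random.default_rng(1)
lib_desc = rng.normal(size=(50, 8))       # placeholder structural descriptors
lib_spectra = rng.random(size=(50, 300))  # placeholder absorbance spectra
pred, neighbors = predict_spectrum_knn(lib_desc[2], lib_desc, lib_spectra)
```

As the text notes for the real methods, such a lookup can only reproduce features already represented in the library.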

1.2 Use of collocated measurements

As an alternative to laboratory-generated mixtures and simulated spectra, collocated measurements of substances with IR-active vibrational modes can be used as reference values for calibration (also referred to as in situ calibration). This data-driven approach allows a calibration model to include the full complexity of atmospheric PM spectra, with overlapping absorbances from both analytes and interferences. For instance, Allen et al. (1994) demonstrated the use of collocated ammonium sulfate measurements by ion chromatography to quantify the abundance of this substance from FT-IR spectra. Some uncertainties arose from differences in time resolution among the sampling instruments.

The benefit of building data-driven calibration models to reproduce concentrations reported by available measurements is twofold. The first is to provide equivalent measurements when the reference measurements are expensive or difficult to obtain. For example, FT-IR spectra can be acquired rapidly, nondestructively, and at low cost from polytetrafluoroethylene (PTFE) filters commonly used for gravimetric mass analysis in compliance monitoring and health studies. Vibrational spectra contain many signatures of chemical constituents of PM. This richness gives rise to challenges in spectroscopic interpretation, but it also provides the basis for quantitative calibration of a multitude of substances. This capability for multi-analyte analysis is beneficial when a single filter may be relied upon during short-term campaigns, or at network sites where installing the full suite of instruments is prohibitive. The second benefit is a better understanding of atmospheric constituents measured by other techniques. This understanding comes from associating those constituents with important vibrational modes and structural elements of molecules identified in the FT-IR calibration model. Such an application can be enlightening for studying aggregated metrics (e.g., carbon content or functional group composition) in atmospheric PM. These metrics are quantified by techniques requiring more sample mass and user labor, such as ultraviolet–visible spectrometry or nuclear magnetic resonance spectroscopy (Decesari et al., 2003; Ranney and Ziemann, 2016).

In this paper, we demonstrate an extensive application of this approach in the statistical calibration of FT-IR spectra to collocated measurements of carbonaceous aerosol content – organic carbon (OC) and elemental carbon (EC) – characterized by a particular type of evolved gas analysis (EGA). EGA includes thermal–optical reflectance (TOR) and thermal–optical transmittance (TOT). Both apportion total carbon into OC and EC fractions according to different criteria applied to the changing optical properties of the filter under stepwise heating (Chow et al., 2007a). EGA OC and EC are widely measured in monitoring networks (Chow et al., 2007a; Brown et al., 2017) and have historical significance in regulatory monitoring, source apportionment, and epidemiological studies. EC is formally defined as sp2-bonded carbon bonded only to other carbon atoms. EC measured by EGA, however, is an operationally defined quantity that is likely associated with low-volatility organic compounds (Chow et al., 2004; Petzold et al., 2013; Lack et al., 2014). EGA OC comprises a larger fraction of the total carbon and is therefore less influenced by the pyrolysis artifacts that affect quantification of EGA EC. OC estimates can already be constructed independently from laboratory calibrations of functional groups. Prediction of EGA OC and EC from FT-IR spectra will additionally provide values with strong precedent in atmospheric studies. Thus, use of collocated measurements complements conventional approaches in expanding the capabilities of FT-IR spectroscopy to extract useful information contained in vibrational spectra.

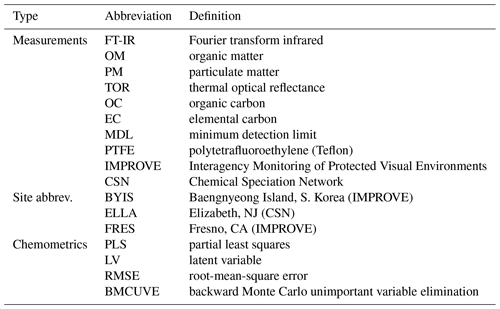

We review the current state of the art for quantitative prediction of OC and EC as reported by TOR using FT-IR spectroscopy at selected sites of the Interagency Monitoring of Protected Visual Environments (IMPROVE) monitoring network (Malm and Hand, 2007; Solomon et al., 2014) and the Chemical Speciation Network (CSN) (Solomon et al., 2014). This work is placed within the context of overseeing the life cycle of a statistical calibration model more generally: reporting further developments in anticipating errors due to precision and bias in new samples and describing a road map for future work. While partial least squares (PLS) regression and its variants figure heavily in the calibration approach taken thus far, related developments in the fields of machine learning, chemometrics, and statistical process monitoring are mentioned to indicate the range of possibilities yet available to overcome future challenges in interpreting complex mid-IR spectra of PM. We expect that many concepts described here will also be relevant for the emerging field of statistical calibration and deployment of measurements in a broader environmental and atmospheric context (e.g., Cross et al., 2017; Kim et al., 2018; Zimmerman et al., 2018). In the following sections, we describe the experimental methods for collecting data (Sect. 2), the calibration process (Sect. 3), assessing suitability of existing models for new samples (Sect. 4.1), and maintaining calibration models (Sect. 4.2). Finally, we conclude with a summary and outlook (Sect. 5). A list of recurring abbreviations can be found in Appendix A.

First, we review the basic principles of FT-IR spectroscopy and how the measured absorbances can be related to underlying constituents, including carbonaceous species (Sect. 2.1). We then describe the samples used for calibration and evaluation (Sect. 2.2). We conclude the section with a discussion of quality assurance and quality control (QA/QC) of FT-IR hardware performance (Sect. 2.3). Assuming that these hardware QA/QC criteria are met, we dedicate the remainder of the paper to model evaluation. Throughout, we assume that differences in prediction performance can be attributed to differences in sample composition.

2.1 Fourier transform infrared spectroscopy

In this section, we cover the background necessary to understand FT-IR spectroscopy in the analysis of PM collected onto PTFE filter media. These media are optically thin and permit an absorbance spectrum to be obtained by transmission without additional sample preparation (McClenny et al., 1985; Maria et al., 2003). IR wavelengths are longer than those of visible light (400–800 nm). FT-IR spectroscopy here refers to a nondispersive analytical technique probing the mid-IR range. This range spans 2500 to 25 000 nm or, in the wavenumber units used by spectroscopists, 4000 to 400 cm−1. Molecular bonds absorb mid-IR radiation at the characteristic frequencies of their vibrational modes. Absorption occurs when the interaction between the molecular electric dipole and the electric field of the radiation induces transitions among vibrational energy states (Steele, 2006; Griffiths and Haseth, 2007). Based on this principle, the spectrum obtained by FT-IR spectroscopy represents the underlying composition of organic and inorganic functional groups containing molecular bonds whose vibrations change the dipole moment.
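Because the text switches between wavelength and wavenumber, a small conversion may help. Wavenumber in cm−1 equals 10^7 divided by wavelength in nm. The sketch below checks the range limits quoted above; the function name is our own.

```python
def nm_to_wavenumber(wavelength_nm):
    """Convert a wavelength in nanometers to a wavenumber in cm^-1 (1 cm = 1e7 nm)."""
    return 1.0e7 / wavelength_nm


mid_ir = (nm_to_wavenumber(2500.0), nm_to_wavenumber(25000.0))  # mid-IR limits
visible = (nm_to_wavenumber(400.0), nm_to_wavenumber(800.0))    # visible limits
```

The inverse relationship is why the mid-IR range reads "4000 to 400 cm−1" in descending order.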

In transmission-mode analysis, the IR beam is directed through the sample. Absorbance (A) is obtained by ratioing the intensity of radiation transmitted through the sample (I) to a reference intensity (I0), also called the “background”, and taking the negative of the decadic logarithm of this ratio (first relation of Eq. 1):

$$A = -\log_{10}\left(\frac{I}{I_0}\right) = \varepsilon\, n^{(a)} \tag{1}$$

The sample is the PTFE filter (with or without PM), and the background is a scan of the empty sample compartment. The quality of the absorbance spectrum depends on how accurately the background reflects the conditions of the sample scan. The background is therefore acquired regularly, as discussed in Sect. 2.3.
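A minimal sketch of the first relation of Eq. (1) uses hypothetical single-beam intensities at three wavenumbers. It also shows why background freshness matters: a multiplicative drift in source output between the background and sample scans appears as a constant offset in A. The function name and values are illustrative assumptions.

```python
import numpy as np


def absorbance(sample_single_beam, background_single_beam):
    """Decadic absorbance A = -log10(I / I0) from sample (I) and background (I0) single-beam spectra."""
    return -np.log10(np.asarray(sample_single_beam) / np.asarray(background_single_beam))


# Hypothetical single-beam intensities at three wavenumbers (arbitrary units).
i_background = np.array([1.00, 0.80, 0.50])
i_sample = np.array([0.90, 0.60, 0.45])
a_fresh = absorbance(i_sample, i_background)

# If the source output rises by 1 % after the background was recorded, every sample
# intensity is 1 % higher and A shifts uniformly by -log10(1.01), about -0.0043.
a_drift = absorbance(1.01 * i_sample, i_background)
offset = a_drift - a_fresh
```
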

When absorption is the dominant mode of extinction, the measured absorbance (A) is proportional to the areal density of absorbing molecules (n(a)) in the portion of the sample illuminated by the beam (Eq. 1) (Duyckaerts, 1959; Kortüm, 1969; Nordlund, 2011). The superscript “(a)” denotes an area-normalized quantity. The proportionality constant ε is called the molar absorption coefficient. Scattering from surfaces present in the sample can contribute significantly to the absorbance spectrum. Its effects can nevertheless be modeled as a sum of incremental absorbances by a linear calibration model, or minimized through spectral preprocessing (baseline correction), as discussed in Sect. 3.3.1.
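At its simplest, baseline correction fits a smooth function to spectral regions assumed to be free of absorption and subtracts it everywhere. The sketch below uses a quadratic fit over hypothetical anchor regions on an invented spectrum. It is an illustrative stand-in, not necessarily the procedure described in Sect. 3.3.1, and real scattering baselines are generally less well behaved than a quadratic.

```python
import numpy as np

wn = np.linspace(1500.0, 4000.0, 1251)        # wavenumber grid (cm^-1)
x = (wn - wn.mean()) / (wn.max() - wn.min())  # rescaled axis for a well-conditioned fit

# Hypothetical spectrum: one absorption band on a smooth, scattering-like curved baseline.
band = 0.05 * np.exp(-0.5 * ((wn - 2920.0) / 25.0) ** 2)
baseline = 0.03 + 0.02 * x + 0.01 * x**2
spectrum = band + baseline

# Fit a quadratic only where no absorption is assumed, then subtract it everywhere.
anchor = (wn < 2600.0) | (wn > 3300.0)
coefs = np.polynomial.polynomial.polyfit(x[anchor], spectrum[anchor], deg=2)
corrected = spectrum - np.polynomial.polynomial.polyval(x, coefs)
```

Choosing anchor regions is the main judgment call. If absorption extends into them, the fitted baseline absorbs part of the signal.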

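A composite metric of PM such as carbon content presumably results from contributions by a myriad of substances. The abundances of these underlying molecules concurrently give rise to two measured quantities (Ottaway et al., 2012). One is the apparent mass of carbon (mC) (Eq. 2) measured by evolved gas analysis. The other is the absorbance spectrum (A) at wavenumber ν̃ (Eq. 3) measured by FT-IR spectroscopy:

$$m_{\mathrm{C}} = 12.01 \sum_k f_{\mathrm{C},k}\, n_k^{(a)} \tag{2}$$

$$A(\tilde{\nu}) = \sum_k \varepsilon_k(\tilde{\nu})\, n_k^{(a)} + \sum_{k'} \varepsilon_{k'}(\tilde{\nu})\, n_{k'}^{(a)} + \{\ldots\} \tag{3}$$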

fC,k denotes the number of (organic or elemental) carbon atoms in molecule k, and 12.01 is the atomic mass of carbon. Non-carbonaceous substances (e.g., inorganic compounds) that give rise to additional (possibly interfering) absorbance are indexed by k′. “{…}” indicates contributions from instrumental noise, ambient background, and additional factors such as scattering. We use TOR measurements from collocated quartz fiber filters. Our objective is to develop a calibration model that estimates the abundance of carbonaceous material (mC) in the PTFE sample that may have led to the observed pattern of mid-IR absorbances (A). A common approach is to explore the relationship between response and absorbance spectra through a class of models that take on a multivariate linear form (Griffiths and Haseth, 2007):

$$m_{\mathrm{C},i} = \sum_j b_j\, A_i(\tilde{\nu}_j) + e_i \tag{4}$$

where i indexes the sample and j the wavenumber.

The set of wavelength-dependent regression coefficients bj constitutes a vector operator that extracts the information needed for calibration from the spectrum. These coefficients (bjs) presumably represent a weighted combination of the coefficients expressed in Eqs. (2) and (3), which also corrects for non-carbonaceous interferences. The remaining term, ei, characterizes the model residual (in regression fitting) or the prediction error (in application to new samples). The relationship with the underlying substances (k) that comprise OC and EC is implicit. Some efforts to interpret these constituents have been made by examining latent (or hidden) variables obtained from the calibration model (discussed in Sect. 3.4).
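To make Eqs. (2)–(4) concrete, the sketch below simulates spectra for a hypothetical mixture. The mixture has three carbon-bearing species and one non-carbonaceous interferent, each with an assumed Gaussian absorption profile. The sketch computes the corresponding carbon mass and fits the linear model of Eq. (4) with a textbook NIPALS implementation of PLS1 regression. Everything about the mixture is invented. This is a generic PLS1, not the calibration code used for the IMPROVE or CSN models. With real spectra, the number of latent variables has to be chosen by validation rather than set to a known number of components.

```python
import numpy as np

rng = np.random.default_rng(42)
wn = np.linspace(1500.0, 4000.0, 500)  # wavenumber grid (cm^-1)


def gaussian(center, width):
    return np.exp(-0.5 * ((wn - center) / width) ** 2)


# Hypothetical absorption profiles (Eq. 3): three carbonaceous species and one interferent.
eps_carbon = np.stack([gaussian(2920, 30), gaussian(1720, 20), gaussian(3400, 150)])
eps_interf = gaussian(3200, 60)[None, :]  # overlaps the broad band of species 3
f_c = np.array([10.0, 6.0, 4.0])          # hypothetical carbon atoms per molecule (f_C,k)

n_samples = 200
n_carbon = rng.lognormal(0.0, 0.5, size=(n_samples, 3))  # areal densities n_k^(a)
n_interf = rng.lognormal(0.0, 0.5, size=(n_samples, 1))  # areal densities n_k'^(a)
X = n_carbon @ eps_carbon + n_interf @ eps_interf         # Eq. (3), without baseline
X += 0.001 * rng.standard_normal(X.shape)                 # instrumental noise
y = 12.01 * n_carbon @ f_c                                # Eq. (2): carbon mass


def pls1_fit(X, y, n_components):
    """Textbook PLS1 (NIPALS). Returns regression vector b and intercept b0 for y ~ X b + b0."""
    x_mean, y_mean = X.mean(axis=0), y.mean()
    Xr, yr = X - x_mean, y - y_mean
    W, P, q = [], [], []
    for _ in range(n_components):
        w = Xr.T @ yr
        w /= np.linalg.norm(w)     # weight vector
        t = Xr @ w                 # scores (latent variable)
        tt = t @ t
        p = Xr.T @ t / tt          # X loadings
        c = (yr @ t) / tt          # y loading
        Xr = Xr - np.outer(t, p)   # deflate X
        yr = yr - c * t            # deflate y
        W.append(w)
        P.append(p)
        q.append(c)
    W, P, q = np.array(W).T, np.array(P).T, np.array(q)
    b = W @ np.linalg.solve(P.T @ W, q)
    return b, y_mean - x_mean @ b


# Alternate samples into calibration and test sets; evaluate only on the test set.
train, test = np.arange(0, n_samples, 2), np.arange(1, n_samples, 2)
b, b0 = pls1_fit(X[train], y[train], n_components=4)
y_hat = X[test] @ b + b0
r2 = 1 - np.sum((y[test] - y_hat) ** 2) / np.sum((y[test] - y[test].mean()) ** 2)
```

Because y here is exactly linear in the simulated abundances, the fitted b reaches near-perfect accuracy. The caveats in the next paragraph (scattering, matrix effects, site-specific correlations) are precisely what this idealized setup leaves out.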

Because complex, operationally defined TOR measurements are used as the reference for calibration, some caution in interpretation and application is warranted. For instance, the regression coefficients may not capture the true relationship expressed by Eqs. (2) and (3). They may instead rely on variables that are correlated with, rather than causally related to, the quantity of interest. Particles and the PTFE substrate itself can confer a large scattering contribution to the extinction spectrum (Eq. 1). Additional sample matrix interactions among analytes may also challenge the assumed linear relationship (Eq. 3) underlying the model for quantification (Eq. 4) (Geladi and Kowalski, 1986). Furthermore, the relationship between spectra and concentrations embodied by the regression coefficients is specific to the calibration samples. It depends on the chemical composition of PM at their sampling locations and on sampling artifacts arising from their composition and handling protocols. To address these concerns, model performance must be evaluated extensively in various extrapolation contexts to investigate the limits of our calibration models. Methods for anticipating prediction errors also provide some guidance on the models' general applicability in new domains. Regression coefficients and underlying model parameters are inspected to identify the important vibrational modes, and hence the infrared absorption bands, that drive the predictive capability of our regression models.

2.2 Sample collection (IMPROVE and CSN)

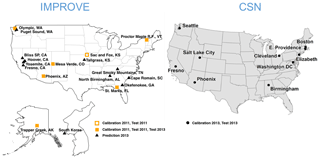

Figure 1. Map of IMPROVE and CSN sites used for this work. The Sac and Fox, KS, IMPROVE site was only operational for the first half of 2011. Samples from Fresno, CA, and South Korea were additionally used for a separate calibration.

The IMPROVE network consists of approximately 170 sites in rural and pristine locations in the United States, primarily national parks and wilderness areas (Malm and Hand, 2007). Data from the IMPROVE network are used to monitor trends in particulate matter concentrations and visibility. IMPROVE collects ambient samples from midnight to midnight every third day by pulling air through filters at 22.8 L min−1. PTFE filters (25 mm, Pall Corp.), more commonly referred to as Teflon filters, are routinely used for gravimetric, elemental, and light-absorption measurements and are used in this work for FT-IR analysis. Quartz filters are used for TOR measurements to obtain OC and EC. Nylon filters are used to measure inorganic ions, primarily sulfate and nitrate.

The CSN consists of about 140 sites located in urban and suburban areas, and its data are used to evaluate trends and sources of particulate matter (Solomon et al., 2014). Ambient samples are collected in the CSN on a midnight-to-midnight schedule once every third or once every sixth day. Quartz filters for TOR analysis are collected at a flow rate of 22.8 L min−1. PTFE filters and nylon filters are collected at a flow rate of 6.7 L min−1. The PTFE filters were Whatman PM2.5 membranes (47 mm) through late 2015 and Measurement Technology Laboratories (MTL) filters (47 mm) thereafter. All sites in the CSN have used TOR for carbon analysis since 2010.
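The flow rates quoted above translate directly into sampled air volume per 24 h sample, and hence into the mass collected on each filter. The sketch below works through that arithmetic. The ambient concentration of 2 µg m−3 is a hypothetical value, and the function name is our own.

```python
MINUTES_PER_DAY = 24 * 60  # midnight-to-midnight sample


def sampled_volume_m3(flow_l_per_min, minutes=MINUTES_PER_DAY):
    """Volume of air (m^3) drawn through a filter at a constant flow rate."""
    return flow_l_per_min * minutes / 1000.0  # 1 m^3 = 1000 L


improve_volume = sampled_volume_m3(22.8)  # IMPROVE channels (~32.8 m^3)
csn_ptfe_volume = sampled_volume_m3(6.7)  # CSN PTFE channel (~9.6 m^3)

# Mass collected for a hypothetical ambient concentration of 2 ug m^-3:
conc_ug_m3 = 2.0
improve_mass_ug = conc_ug_m3 * improve_volume    # ~65.7 ug
csn_ptfe_mass_ug = conc_ug_m3 * csn_ptfe_volume  # ~19.3 ug
```

For the same air, a CSN PTFE filter therefore collects under a third of the mass collected by an IMPROVE PTFE filter. The areal density that governs absorbance (Eq. 1) additionally depends on the deposit area, which differs between the 25 mm and 47 mm filters and is not modeled here.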

PTFE filters are used for gravimetric analysis on account of their low vapor absorption (especially water) and standardization in compliance monitoring. Quartz fiber filters are collected separately on account of their thermal stability (Chow, 1995; Chow et al., 2007b, 2015; Malm et al., 2011; Solomon et al., 2014). TOR analysis consists of heating a portion of the quartz filter with the IMPROVE_A temperature ramp and measuring the evolved carbon (Chow et al., 2007a). The initial heating is performed in an inert atmosphere, and the material removed is ascribed to OC. Oxygen is added at higher temperatures, and the material measured is ascribed to EC. Charring of ambient particulate carbon is corrected using a laser that reflects off the surface of the sample (hence reflectance) (Chow et al., 1993). The evolved carbon is converted to methane and measured with a flame ionization detector. Organic carbon data are corrected for gas-phase adsorption using a monthly median blank value specific to each network (Dillner, 2018).
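The reflectance-based OC/EC split can be sketched as follows. Carbon evolved before the laser reflectance returns to its initial value (after dropping during charring) is assigned to OC, and the remainder to EC. The step structure, reflectance values, and blank handling here are simplified hypotheticals, and the function name is our own. The actual IMPROVE_A protocol uses specific temperature plateaus and further details (Chow et al., 2007a) that are not modeled.

```python
def tor_split(carbon_ug, reflectance, oxygen_start, blank_oc_ug=0.0):
    """Split evolved carbon into OC and EC at a reflectance-based split point.

    carbon_ug[i]    carbon evolved during heating step i (ug C)
    reflectance[i]  laser reflectance at the start of step i (reflectance[0] is the initial value)
    oxygen_start    index of the first step with oxygen added

    The split is the first oxygen step at whose start the reflectance has returned to
    (or exceeded) its initial value. Carbon evolved before the split, including char
    burned off early in the oxygen steps, is OC; the rest is EC. A blank value
    (e.g., a network median) is subtracted from OC.
    """
    r0 = reflectance[0]
    split = next(
        (i for i in range(oxygen_start, len(reflectance)) if reflectance[i] >= r0),
        len(reflectance),
    )
    oc = sum(carbon_ug[:split]) - blank_oc_ug
    ec = sum(carbon_ug[split:])
    return oc, ec


# Hypothetical thermogram: four inert steps (charring lowers reflectance), then three oxygen steps.
carbon = [2.0, 3.0, 2.5, 1.5, 1.0, 1.5, 1.0]
refl = [1.00, 0.97, 0.92, 0.88, 0.85, 0.96, 1.03]
oc, ec = tor_split(carbon, refl, oxygen_start=4, blank_oc_ug=0.3)
```

In this example, the char burned off in the first two oxygen steps is counted as OC, which is how the reflectance correction keeps pyrolyzed organic carbon from inflating EC.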

For this work, we examine a subset of these sites at which PTFE filters were analyzed for FT-IR spectra (Fig. 1). For model building and evaluation (Sect. 3), we use seven IMPROVE sites comprising 794 samples from 2011 and 10 CSN sites comprising 1035 samples from 2013. Of the 2011 IMPROVE sites, two are collocated samplers at the same urban location in Phoenix, AZ, and one (Sac and Fox) was discontinued midyear. Additional IMPROVE samples from sample year 2013 were analyzed by FT-IR spectroscopy, covering six of the same sites and 11 additional sites. This data set is used for evaluation of the operational phase of the model (Sect. 4).

Because the networks differ in sampling protocols, which result in different spectroscopic interferences from PTFE (due to different filter types) and different ranges of mass loading (due to flow rates), and in expected chemical composition (due to site types), calibrations for the CSN and IMPROVE networks have been developed separately (Weakley et al., 2016). The advantages of building such specialized models over larger, all-inclusive models are discussed in Sect. 3.5. Accordingly, TOR-equivalent carbon predictions for 2011 and 2013 IMPROVE samples in this paper are made with a calibration model built from a subset of 2011 IMPROVE samples, and TOR-equivalent predictions for 2013 CSN samples are made with a calibration model built from a subset of 2013 CSN samples. One exception is a special model constructed to illustrate how new samples can improve model prediction (Sect. 4.2): a subset of 2013 IMPROVE samples from two sites, Fresno, CA (FRES), and Baengnyeong Island, S. Korea (BYIS), is used to make predictions for the remaining samples at those sites. In all cases, analytical figures of merit for model evaluation are calculated for samples not used in calibration.

2.3 Laboratory operations and quality control of analysis

IMPROVE and CSN PTFE sample and blank filters are analyzed without pretreatment on either Tensor 27 or Tensor II FT-IR spectroscopy instruments (Bruker Optics, Billerica, MA) equipped with a liquid nitrogen-cooled detector. Filters are placed in a small custom-built sample chamber that reliably positions each filter at the same distance from the source. IR-active water vapor and CO2 are purged from the sample compartment and instrument optics to minimize absorption bands of gas-phase compounds in the aerosol spectra. Samples are measured in transmission mode, and absorbance spectra, which are used for calibration and prediction, are calculated using the most recent empty-chamber spectrum (collected hourly) as the reference. The total measurement time for one filter is 5 min. Additional details on the FT-IR analysis are described by Ruthenburg et al. (2014) and Debus et al. (2018).
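The absorbance calculation against the most recent empty-chamber reference can be sketched as below, using the usual definition A = −log10(I/I0). This is not the instrument software: it assumes, for illustration, that timestamps are in hours, that reference times are sorted, and that sample and reference single-beam spectra share a common wavenumber grid. The function name is hypothetical.

```python
import bisect

import numpy as np


def absorbance(sample_intensity, sample_time, reference_times, reference_spectra):
    """Absorbance A = -log10(I / I0) using the latest empty-chamber reference.

    reference_times must be sorted ascending; reference_spectra[k] is the
    single-beam empty-chamber spectrum recorded at reference_times[k].
    """
    # Index of the last reference recorded at or before the sample time.
    k = bisect.bisect_right(reference_times, sample_time) - 1
    if k < 0:
        raise ValueError("no reference spectrum recorded before this sample")
    i0 = np.asarray(reference_spectra[k], dtype=float)
    i = np.asarray(sample_intensity, dtype=float)
    return -np.log10(i / i0)


ref_times = [0.0, 1.0, 2.0]  # hours since start of session (hypothetical)
refs = [np.full(3, 1.0), np.full(3, 2.0), np.full(3, 4.0)]
A = absorbance([2.0, 0.2, 0.02], 1.5, ref_times, refs)  # uses the 1.0 h reference
```

Here the sample at 1.5 h is ratioed against the reference collected at 1.0 h, giving absorbances of 0, 1, and 2.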

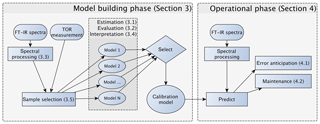

Figure 2. Diagram of the model building, evaluation, and monitoring process. Sections and subsections covering the illustrated topics are denoted in parentheses. Note that any of the calibrations can be a multilevel model (Sect. 3.5.3) consisting of an ensemble of models.

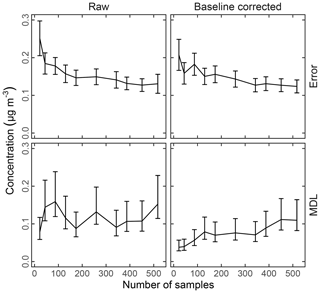

Daily and weekly quality control checks are performed to monitor the comparability, precision, and stability of the FT-IR spectroscopy instruments. Duplicate spectra are collected every 50 filters (once or twice per day) on each instrument to evaluate measurement precision. Measured precision values are low, smaller than the 95th percentile of the standard deviation of the blanks for both TOR OC and EC, indicating that instrument error has a relatively minor influence on the prediction of TOR OC and EC and is smaller than the variability observed among PTFE filters. Quality control filters (blank filters and ambient samples) are analyzed weekly to monitor instrument stability. Based on analyses of these quality control filters, Debus et al. (2018) conclude that predictions of TOR OC and EC remain relatively stable over a 2.5-year period and that observed changes are small. These data also enable us to track instrumental changes that would require recalibration (Sect. 4.2). A subset of ambient filters is analyzed on all FT-IR spectroscopy instruments to evaluate spectral dissimilarities and differences in prediction. These samples show that differences in spectral response among instruments are small and due mainly to variability in PTFE. They also indicate that careful control of laboratory conditions (detector temperature, sample position, relative humidity (RH), and CO2 levels in the FT-IR spectroscopy instrument) enables instrument-agnostic calibrations that predict accurate concentrations regardless of the instrument on which a spectrum is collected. Overall, the quality control data show that TOR OC and EC measurements obtained from multiple FT-IR spectroscopy instruments in one laboratory are precise, stable (over the 2.5-year period evaluated), and agnostic to the instrument used for analysis (Debus et al., 2018).
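A common way to turn duplicate measurements into a precision estimate is the pooled standard deviation from n pairs, s = sqrt(Σ d_i² / (2n)), where d_i is the difference within pair i. The sketch below applies it to predictions from duplicate spectra. It is an assumption that this matches the exact statistic used by Debus et al. (2018), and the blank-derived 95th-percentile threshold mentioned above is not reproduced here; the function name and values are hypothetical.

```python
import numpy as np


def duplicate_precision(first, second):
    """Pooled standard deviation from n duplicate pairs: sqrt(sum(d^2) / (2n))."""
    d = np.asarray(first, dtype=float) - np.asarray(second, dtype=float)
    return float(np.sqrt(np.sum(d ** 2) / (2 * d.size)))


# Hypothetical TOR-equivalent OC predictions from duplicate spectra of three filters.
first = [10.0, 5.0, 7.0]
second = [10.2, 4.8, 7.0]
s = duplicate_precision(first, second)
```

The resulting value would then be compared against a threshold derived from blank variability to judge whether instrument error is negligible.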

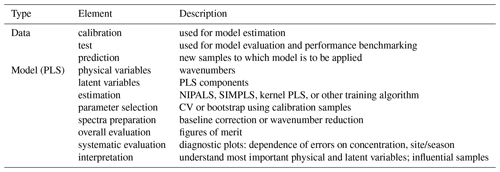

In this section, we describe the model-building process for quantitative calibration. The relationship between spectra and reference values exploited for prediction depends on the choice of algorithm, the method of spectral pretreatment, and the calibration set of samples used for model training and validation. As the best choices in each of these categories are not known a priori, the typical strategy is to generate a large set of candidate models and select one that scores well across a suite of performance criteria on a test set of samples reserved for independent evaluation. The process of building and evaluating a model, conceptualized in the framework of statistical process control, is depicted in Fig. 2. In the first stage, various pathways to model construction are evaluated, and expectations for model performance are determined. The second stage involves continued application and monitoring of model suitability for new samples (prediction set), which is discussed in Sect. 4.1. Where applicable, each data set should include several types of samples. For instance, the calibration set can include blank samples in which the analyte (but not necessarily interferents) is absent. Test and prediction set samples can include both analytical and field blank samples. Collocated measurements can provide replicates for calibration or a separate evaluation of precision. Below, we describe model specification, algorithms for parameter estimation, and model selection (Sect. 3.1), model evaluation (Sect. 3.2), spectral processing (Sect. 3.3), and sample selection (Sect. 3.5). In each section, the broader concept is introduced before its application to TOR is reviewed.

3.1 Model estimation

Many algorithms in the domains of statistical learning, machine learning, and chemometrics have demonstrated utility in building calibration models from spectral measurements: neural networks (Long et al., 1990; Walczak and Massart, 2000), Gaussian process regression (Chen et al., 2007), support vector regression (Thissen et al., 2004; Balabin and Smirnov, 2011), principal component regression (Hasegawa, 2006), ridge regression (Hoerl and Kennard, 1970; Tikhonov and Arsenin, 1977; Kalivas, 2012), wavelet regression (Brown et al., 2001; Zhao et al., 2012), functional regression (Saeys et al., 2008), and PLS (Rosipal and Krämer, 2006), among others. There is no lack of algorithms for supervised learning with continuous response variables that could potentially be adapted for such an application (Hastie et al., 2009). Each of these techniques maps relationships between spectral features and reference concentrations using different similarity measures, manifolds, and projections, largely in metric spaces where the notion of distance among real-valued data points is well defined (e.g., Zezula et al., 2006; Russolillo, 2012). The best mathematical representation for a new data set is difficult to ascertain a priori, but models can be compared by their fundamental assumptions and formulation: e.g., linear or nonlinear in form; globally parametric, locally parametric, or distribution free (e.g., random forest, nearest neighbor); feature transformations; objective function and constraints; and expected residual distributions. Approaches that incorporate randomized sampling can return slightly different numerical results, but any particular result can be reproduced by providing a seed value for the pseudo-random number generator.
A typical procedure for model development is to select candidate methods that have enjoyed success in similar applications and empirically investigate which techniques provide meaningful performance and interpretability for the current task, after which implementation measures are pursued (Kuhn and Johnson, 2013). Instead of selecting a single model, ensemble learning and Bayesian model averaging approaches combine predictions from multiple models (Murphy, 2012).

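For FT-IR calibration targeting prediction of TOR-equivalent concentrations, we focus on finding solutions to the linear model introduced in Sect. 2.1. Letting X denote the matrix of absorbance spectra (one row per sample, one column per wavenumber), y the vector of reference concentrations, b the vector of regression coefficients, and e the vector of errors, we re-express Eq. (4) in array notation to facilitate further discussion of linear operations:

$$\mathbf{y} = \mathbf{X}\mathbf{b} + \mathbf{e} \qquad (5)$$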

Equation (5) is an ill-posed inverse problem; therefore, it is desirable to introduce some form of regularization (method of introducing additional information or assumptions) to find suitable candidates for b (Zhou et al., 2005; Friedman et al., 2010; Takahama et al., 2016). In this paper, we summarize the application of PLS (Wold, 1966; Wold et al., 2001) for obtaining solutions to this equation, with which good results have been obtained for our application and FT-IR spectra more generally (Hasegawa, 2006; Griffiths and Haseth, 2007). This technique has been a classic workhorse of chemometrics for many decades and is particularly well-suited for characteristics of FT-IR analysis, for which data are collinear (neighboring absorbances are often related to one another) and high-dimensional (more variables than measurements in many scenarios). These issues are addressed by projection of spectra onto an orthogonal basis of latent variables (LVs) that take a combination of spectral features, and regularization by LV selection (Andries and Kalivas, 2013). Furthermore, PLS is agnostic with respect to assumption of residual structure (e.g., normality) for obtaining b, which circumvents the need to explicitly account for covariance or error distribution models to characterize the residuals (Aitken, 1936; Nelder and Wedderburn, 1972; Kariya and Kurata, 2004). PLS is also used as a preliminary dimension reduction technique prior to application of nonlinear methods (Walczak and Wegscheider, 1993). Therefore, it is sensible that PLS should be selected as a canonical approach for solving Eq. (5).

Mathematically, classical PLS represents a bilinear decomposition of a multivariate model in which both X and y are projected onto basis sets (“loadings”) P and q, respectively (Wold et al., 1983, 1984; Geladi and Kowalski, 1986; Mevik and Wehrens, 2007):

$$\mathbf{X} = \mathbf{T}\mathbf{P}^{\mathsf{T}} + \mathbf{E}_X, \qquad \mathbf{y} = \mathbf{T}\mathbf{q} + \mathbf{e}$$

T is the orthogonal score matrix, and $\mathbf{E}_X$ denotes the residuals in the reconstruction of the spectra matrix. Common solution methods search for a set of loading weight vectors (the columns of a matrix W) such that the covariance of the scores (T) with the response variable (y) is maximized. The weight matrix can be viewed as a linear operator that changes the basis between the feature space and the FT-IR measurement space. These weights and their relationship to the score matrix and regression vector are expressed below:

$$\mathbf{T} = \mathbf{X}\mathbf{W}\left(\mathbf{P}^{\mathsf{T}}\mathbf{W}\right)^{-1}, \qquad \mathbf{b} = \mathbf{W}\left(\mathbf{P}^{\mathsf{T}}\mathbf{W}\right)^{-1}\mathbf{q}$$

For univariate y as written in Eq. (5), a number of commonly used algorithms, including nonlinear iterative partial least squares (NIPALS; Wold et al., 1983), SIMPLS (deJong, 1993), and kernel PLS (with a linear kernel; Lindgren et al., 1993), arrive at the same solution while differing in numerical efficiency. Kernel PLS can be further extended to model nonlinear interactions by projecting the spectra onto a high-dimensional space and applying linear algebraic operations akin to classical PLS, with performance comparable to support vector regression and other commonly used nonlinear modeling approaches (Rosipal and Krämer, 2006). However, likely because of the linear nature of the underlying relationship (Eq. 4), linear PLS has typically performed better than nonlinear algorithms for FT-IR calibration (Griffiths and Haseth, 2007). In addition, the linearity of classical PLS regression has yielded more interpretable models than nonlinear ones (Luinge et al., 1995). Therefore, past applications of PLS to FT-IR calibration of atmospheric aerosol constituents have focused on its linear variants, which are also the focus of this paper.
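To make the PLS relations concrete, the sketch below is a textbook NIPALS implementation for univariate y (PLS1) written with NumPy. It is not the code used in the cited studies; it assumes X and y are already mean-centered, and the function name is illustrative. Each iteration picks the weight vector along Xᵀy (the direction of maximal covariance with y), computes scores and loadings, and deflates X and y; the regression vector is then assembled as b = W(PᵀW)⁻¹q.

```python
import numpy as np


def pls1_nipals(X, y, n_components):
    """PLS1 via NIPALS on mean-centered X (samples x wavenumbers) and y.

    Returns the regression vector b together with weights W, X loadings P,
    and y loadings q, such that b = W (P^T W)^{-1} q.
    """
    X = np.asarray(X, dtype=float).copy()
    y = np.asarray(y, dtype=float).copy()
    p = X.shape[1]
    W = np.zeros((p, n_components))
    P = np.zeros((p, n_components))
    q = np.zeros(n_components)
    for a in range(n_components):
        w = X.T @ y                    # direction of maximal covariance with y
        w /= np.linalg.norm(w)         # fails if y is already fully explained
        t = X @ w                      # scores for this latent variable
        tt = t @ t
        P[:, a] = X.T @ t / tt         # X loadings
        q[a] = y @ t / tt              # y loading
        X -= np.outer(t, P[:, a])      # deflate X
        y -= q[a] * t                  # deflate y
        W[:, a] = w
    b = W @ np.linalg.solve(P.T @ W, q)
    return b, W, P, q
```

Predictions for new (centered) spectra are then `X_new @ b`. With as many LVs as columns of a full-rank X, the result coincides with ordinary least squares; choosing fewer LVs is what provides the regularization discussed above.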

An optimal number of LVs must be selected to arrive at the best predictive model. Adding LVs allows more of the variation in the spectra to be captured, reducing model bias. However, some of the finer variations in the spectra are not part of the analyte signal that we wish to model; including LVs that model these terms increases the variance of the predictions. A universal problem in statistical modeling is to characterize model bias and variance such that the model with the lowest estimated prediction error can be chosen. There is no shortage of methods devised to capture this bias–variance tradeoff, and their implications for model selection continue to be an active area of development (Hastie et al., 2009). With no immediate consensus on the single best approach for all cases, the approach often taken is to select one based on prior experience and use it until it is found to be inadequate (as with model specification).

One class of methods characterizes the bias and variance using the information obtained from fitting of the data. For instance, the Akaike information criterion (AIC; Akaike, 1974) and Bayesian information criterion (BIC; Schwarz, 1978) consider the balance between model fidelity (fitting error, which monotonically decreases with number of parameters) and penalties incurred for increasing model complexity (which serves as a form of regularization). The fitting error may be characterized by residual sum of squares or maximum likelihood estimate (e.g., Li et al., 2002), and the penalty may be a scaled form of the number of parameters or norms of the regression coefficient vector. An effective degrees of freedom (EDF) or generalized EDF parameter aims to characterize the resolvable dimensionality as apparent from the model fit to data (Tibshirani, 2014), though the EDF may not always correspond to desired model complexity (Krämer and Sugiyama, 2011; Janson et al., 2015).
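As a worked illustration of the fit-versus-penalty balance, the sketch below uses the Gaussian-likelihood forms AIC = n ln(RSS/n) + 2k and BIC = n ln(RSS/n) + k ln n, with additive constants dropped. Treating k as simply the number of LVs is a simplification assumed here for illustration; as noted above, the effective degrees of freedom of a PLS model may differ. The residual sums of squares are hypothetical.

```python
import math


def information_criteria(rss, n, k):
    """Gaussian-likelihood AIC and BIC (additive constants dropped)."""
    fit = n * math.log(rss / n)  # decreases as the fit improves
    return fit + 2 * k, fit + k * math.log(n)


# Hypothetical residual sums of squares for models with 1-4 LVs (n = 100 samples).
rss_by_lvs = {1: 50.0, 2: 20.0, 3: 18.0, 4: 17.5}
n = 100
aic = {k: information_criteria(r, n, k)[0] for k, r in rss_by_lvs.items()}
bic = {k: information_criteria(r, n, k)[1] for k, r in rss_by_lvs.items()}
print(min(aic, key=aic.get), min(bic, key=bic.get))  # 4 3
```

Because ln n exceeds 2 once n is larger than about 7, BIC penalizes each added LV more heavily than AIC, so in this example it stops one component earlier.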

Another class of methods relies on assessment of the bias and variance contributions implicitly present in prediction errors. These errors are obtained by applying regression coefficients estimated from a training data set to a separate set of (“validation”) data withheld from model construction, and are then used to fix the model parameters. To maximize the data available for both training and validation, modern statistical algorithms such as cross-validation (CV) (Mosteller and Tukey, 1968; Stone, 1974; Geisser, 1975) and the bootstrap method (Efron and Tibshirani, 1997) allow the same samples to be used for both training and validation; together, these samples comprise what we refer to as the calibration set. The essential principle is to partition the calibration set multiple times such that the model is trained and then validated on different samples over a repeated number of trials. In this way, performance metrics for models built from different subsets of the data can be aggregated to determine a suitable estimate of a parameter (the number of LVs). The number and arrangement of partitions vary by method: CV uses each sample exactly once for validation, whereas the bootstrap resamples with replacement. Both have produced usable results (Molinaro et al., 2005; Arlot and Celisse, 2010). For smaller numbers of samples, leave-one-out (LOO) CV or the bootstrap may be favored because they reserve more samples for training each model, though LOO is generally recognized to yield suboptimal estimates of prediction error (Hastie et al., 2009). Evaluation metrics are calculated on samples that have not been involved in the model-building process (Esbensen and Geladi, 2010).
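The two partitioning schemes can be contrasted with a short sketch of the index bookkeeping, using NumPy's seeded random generator so that any particular split is reproducible, as noted in Sect. 3.1. The function names are hypothetical; in the bootstrap variant shown here, the out-of-bag samples (those never drawn) serve as the validation set, which is one common choice rather than the only one.

```python
import numpy as np


def vfold_indices(n, v, seed):
    """Random V-fold split: every sample is validated exactly once."""
    rng = np.random.default_rng(seed)
    perm = rng.permutation(n)
    return [np.sort(perm[k::v]) for k in range(v)]


def bootstrap_indices(n, seed):
    """One bootstrap replicate: train on a resample drawn with replacement,
    validate on the out-of-bag samples that were never drawn."""
    rng = np.random.default_rng(seed)
    train = rng.integers(0, n, size=n)
    oob = np.setdiff1d(np.arange(n), train)
    return train, oob


folds = vfold_indices(20, 5, seed=42)       # same seed -> identical folds
train, oob = bootstrap_indices(20, seed=7)  # duplicates allowed in `train`
```

Rerunning either function with the same seed returns identical indices, which is what allows a randomized CV result to be reproduced exactly.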
Examples of selection criteria include the minimum root-mean-square error of cross-validation (RMSECV) (one of the most widely used; Gowen et al., 2011), the one-standard-error rule, which selects the most parsimonious model whose RMSECV lies within 1 standard error of the minimum (Hastie et al., 2009), Wold's R criterion (Wold, 1978), the coefficient of determination (R2), and the randomization p value (van der Voet, 1994; Wiklund et al., 2007), among others. A suite of these metrics can also be considered simultaneously (Zhao et al., 2015). The final model is obtained by refitting the model to all samples in the calibration set using the number of parameters selected in the CV process. Other strategies and general discussions of performance metrics and statistical sampling are covered in many textbooks (e.g., Bishop, 2009; Hastie et al., 2009; Kuhn and Johnson, 2013).
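The sketch below shows how three of these criteria can pick different numbers of LVs from the same CV results. It is an illustration under stated assumptions: the input is a hypothetical array of per-fold mean squared errors (rows are folds, columns are models with 1, 2, ... LVs), folds are assumed equal in size so that summed fold MSEs are proportional to PRESS, the one-standard-error rule is applied on the MSE scale, and the Wold's R threshold of 0.95 is an assumed value (implementations vary).

```python
import numpy as np


def select_n_lvs(fold_mse, r_threshold=0.95):
    """Pick the number of LVs from an (n_folds x max_lvs) array of per-fold MSE.

    Column j corresponds to j + 1 latent variables.
    """
    fold_mse = np.asarray(fold_mse, dtype=float)
    n_folds = fold_mse.shape[0]
    mse = fold_mse.mean(axis=0)
    best = int(np.argmin(np.sqrt(mse)))  # minimum RMSECV
    # One-standard-error rule: fewest LVs within 1 SE of the minimum.
    se = fold_mse[:, best].std(ddof=1) / np.sqrt(n_folds)
    one_se = int(np.argmax(mse <= mse[best] + se))
    # Wold's R: stop adding LVs once PRESS(A + 1) / PRESS(A) reaches the threshold.
    press = fold_mse.sum(axis=0)  # proportional to PRESS for equal fold sizes
    wold = len(press) - 1
    for j in range(len(press) - 1):
        if press[j + 1] / press[j] >= r_threshold:
            wold = j
            break
    return {"min_rmsecv": best + 1, "one_se": one_se + 1, "wold_r": wold + 1}


# Hypothetical per-fold MSE for 3 folds and models with 1-5 LVs.
fold_mse = [[9.0, 3.5, 2.8, 2.3, 3.0],
            [10.0, 4.0, 3.0, 2.9, 3.1],
            [11.0, 4.5, 3.2, 3.5, 3.2]]
print(select_n_lvs(fold_mse))  # {'min_rmsecv': 4, 'one_se': 3, 'wold_r': 3}
```

In this example, the minimum RMSECV favors four LVs, whereas the more conservative rules settle on three because the fourth LV brings only a marginal, noisy improvement.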

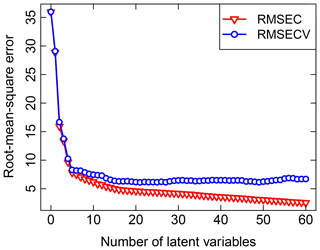

Past work on TOR and FT-IR spectroscopy measurements has used V-fold CV, with Dillner and Takahama (2015a, b) using minimum RMSECV and Weakley et al. (2016) using Wold's R criterion for performance evaluation. In V-fold CV, the data are partitioned into V groups, and V−1 subsets are used to train a model that is evaluated on the remaining subset (repeated for all V arrangements). Dillner and Takahama (2015a) found that V=2, 5, and 10 selected different numbers of LVs but led to similar overall performance. To keep the solution deterministic (i.e., no random sampling) and representative (i.e., the composition of training and validation sets is representative of the overall calibration set across permutations), samples in the calibration set are ordered according to a strategy amenable to stratification. For instance, samples are arranged by sampling site and date (used as a surrogate for source emissions, atmospheric processing, and composition, which often vary by geography and season) or by increasing target analyte concentration, and samples separated by interval V are assigned to the same partition, a method referred to as Venetian blinds (also interleaved or striped) CV. An illustration of RMSECV compared to the fitting errors represented by the root-mean-square error of calibration (RMSEC) for TOR OC is shown in Fig. 3. Other strategies for arranging CV folds include maximizing differences among samples in each fold to reduce the chance of overfitting (Kuhn and Johnson, 2013), but these have not been explored in this application.
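Venetian blinds fold assignment is simple to express once the samples have been ordered. In the minimal sketch below, the ordering is passed in as an index array, so the same hypothetical function serves both strategies mentioned above: `np.argsort(concentration, kind="stable")` for ordering by analyte concentration, or `np.lexsort((dates, site_codes))` for ordering by site and then date (`np.lexsort` uses its last key as the primary sort key). No random numbers are involved, so the folds are deterministic.

```python
import numpy as np


def venetian_blinds_folds(order, v):
    """Deterministic interleaved ("Venetian blinds") CV folds.

    `order` lists sample indices in the chosen stratification order; every
    v-th sample in that order is assigned to the same fold.
    """
    order = np.asarray(order)
    return [np.sort(order[k::v]) for k in range(v)]


# Hypothetical TOR OC loadings for six calibration samples.
conc = np.array([5.0, 1.0, 3.0, 2.0, 4.0, 6.0])
folds = venetian_blinds_folds(np.argsort(conc, kind="stable"), v=2)
print([f.tolist() for f in folds])  # [[0, 1, 2], [3, 4, 5]]
```

Each fold spans the concentration range (loadings 1, 3, 5 in one fold and 2, 4, 6 in the other), which is the representativeness property the stratified ordering is meant to provide.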

Even with the model specification and the approach for parameter selection fixed, spectral processing and sample selection can lead to differences in overall model performance. We first discuss how different models can be generated from the same set of samples according to these decisions before proceeding to protocols for model evaluation using the test set reserved for independent assessment (Sect. 3.2). The test set is used to compare the merits of models built in different ways and to establish control limits for the operational phase (Sect. 4).

3.2 Model evaluation

Statistical models can be evaluated using many of the same techniques used to evaluate mechanistic models (Olivieri, 2015; Seinfeld and Pandis, 2016). In this section, we describe methods for evaluating overall performance (Sect. 3.2.1) and the occurrence of systematic errors (Sect. 3.2.2).

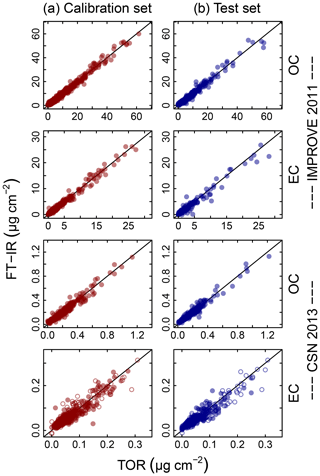

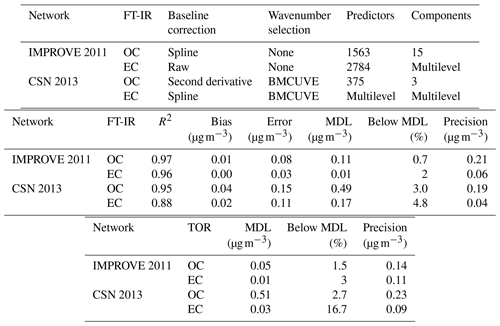

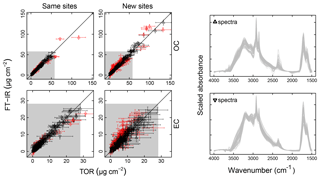

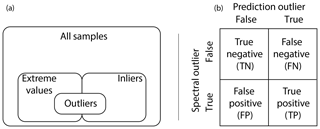

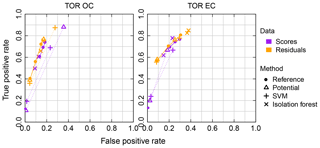

Figure 4. Illustration of model fits (“Calibration set”, column a) and predictions (“Test set”, column b) for the 2011 IMPROVE and 2013 CSN networks. Open circles for CSN EC indicate anomalous samples (discussed in Sect. 3.5.3). Note that units are areal mass densities on the filter.

3.2.1 Overall performance

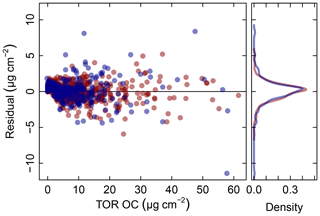

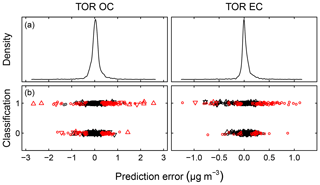

Predictions for a set of selected models for 2011 IMPROVE and 2013 CSN are shown in Fig. 4. Details of sample selection for calibration are provided in Sect. 3.5; here we present results for the “base case” models, which contain representations of all sites and seasons for each network. There are many aspects of each model that we wish to evaluate by comparing predictions against known reference values. These include the bias and magnitude of dispersion, but also our capability to distinguish ambient samples from blank samples at the low end of observed concentrations. Metrics that capture these effects can be derived from the term e in the multivariate regression equation (Eq. 5) when predictions and observations are compared for the test set spectra. e is referred to as the residual when it describes deviations of fitted values from observations, and as the prediction error when it describes deviations of predicted values from observations when the model is applied to new samples. By convention, however, we often report the negative of e, so that the deviation is expressed as prediction minus observation rather than the other way around. Example distributions of residuals and prediction errors for TOR OC in 2011 IMPROVE are shown in Fig. 5.

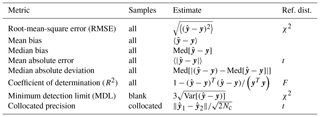

While the use of the minimum root-mean-square error (RMSE) is pervasive in chemometrics and machine learning as a formal parameter tuning or model selection criterion, another family of metrics is more commonly used in the air quality community (Table 1). For instance, the mean bias and mean error and their normalized quantities are often used for model–measurement evaluation of mechanistic (chemical transport) models (Seinfeld and Pandis, 2016). R2 is commonly used in intercomparisons of analytical techniques. Many of the statistical estimators in Table 1 converge to a known distribution from which confidence intervals can be calculated, or otherwise estimated numerically (e.g., by bootstrap). In addition to conventional metrics, alternatives drawing upon robust statistics (Huber and Ronchetti, 2009) are also useful when undue influence from a few extreme values may lead to misrepresentation of overall model performance (Barnett and Lewis, 1994). For instance, the mean bias is replaced by the median bias, and mean absolute error is replaced by median absolute deviation. Even if a robust estimator is unbiased, it may not have the same variance properties as its non-robust counterpart (Venables and Ripley, 2003); therefore, comparison against a reference distribution for statistical inference may be less straightforward.
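The conventional and robust figures of merit can be computed side by side, as in the sketch below. The deviation is taken as ŷ − y, the negative of e in Eq. (5), following the convention described above. The exact definitions in Table 1 are not reproduced here: the normalized forms (dividing by the sum of observations), the median absolute error used as the robust counterpart of the mean error, and R2 taken as the squared Pearson correlation are assumptions chosen because they are common in air quality evaluations. The data are hypothetical.

```python
import numpy as np


def figures_of_merit(y_obs, y_pred):
    """Conventional and robust summaries of prediction minus observation."""
    y_obs = np.asarray(y_obs, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    d = y_pred - y_obs  # deviation: negative of e in Eq. (5)
    return {
        "mean_bias": d.mean(),
        "mean_error": np.abs(d).mean(),
        "normalized_mean_bias": d.sum() / y_obs.sum(),
        "normalized_mean_error": np.abs(d).sum() / y_obs.sum(),
        "median_bias": np.median(d),
        "median_abs_error": np.median(np.abs(d)),
        "r2": np.corrcoef(y_obs, y_pred)[0, 1] ** 2,
    }


# Hypothetical test set with one badly predicted sample.
m = figures_of_merit([1, 2, 3, 4, 100], [1.1, 1.9, 3.2, 4.1, 60])
```

Here a single extreme sample drives the mean bias to about −7.9, while the median bias (about 0.1) still reflects the typical sample, which is the motivation for the robust alternatives.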

For TOR-equivalent values predicted by FT-IR spectroscopy, the median bias and median error have typically been preferred for characterizing overall model performance, together with R2 and the minimum detection limit (MDL). Mean errors have been examined primarily for specific comparisons among models. We place these metrics in context by comparing them to those reported for the reference (TOR) measurement, which include collocated measurement precision and the percentage of samples below MDL (Table 2).

Figure 5. Residuals (red symbols) and prediction errors (blue symbols) from 2011 IMPROVE OC (baseline corrected, base case) predictions. The corresponding kernel density estimate of the distribution is shown on the right.

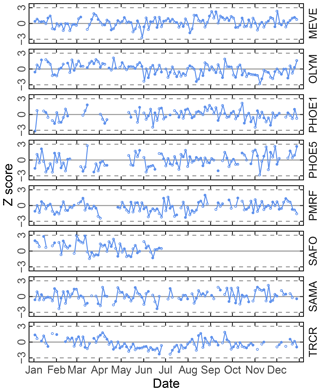

Figure 6. Time series chart of TOR-equivalent OC residuals (for calibration samples) and prediction errors (for test set samples) separated by site. Each value (residual: open circle; prediction error: filled circle) is mapped through a median-centered inverse hyperbolic sine function whose distribution parameters are derived from 175 values (approximately 20 % of the 2011 IMPROVE set) with neighboring TOR OC concentrations, so that the transformed values are consistent with a normal distribution (p value > 0.2). Dotted horizontal lines indicate ±3 standard deviations of the standard normal variate (Z score).

Table 1. Definitions of figures of merit for overall assessment of prediction error, the samples to which they are applied, and their reference distribution (if available) used for significance testing. y is the (mean-centered) response vector (i.e., TOR OC or EC mass loadings), and ŷ is the predicted response (Eq. 5). 〈⋅〉 is the sample mean, Med[⋅] is the sample median, and Var[⋅] is the unbiased sample variance. Nc is the number of paired collocated samples.

3.2.2 Systematic errors

In addition to the aggregate metrics discussed above, we evaluate whether essential effects appear to be accounted for in the regression by examining errors across different classes of samples. Systematic patterns or lack of randomness can be evaluated by examining the independence of individual prediction errors with respect to composition, or with respect to time and location of sample collection as surrogates for composition. For instance, high prediction errors persisting over multiple days may be associated with aerosols of unusual composition transported under synoptic-scale meteorology that is not well represented in the calibration samples. Concentration is a special case, as errors can be heteroscedastic (i.e., have nonconstant variance) on account of the wide range of atmospheric concentrations that a single calibration model may need to address. This heteroscedasticity leads to an error distribution that is leptokurtic (i.e., heavy tailed) compared to a normal distribution, as shown in Fig. 5. As PLS solution algorithms are agnostic with respect to such residual structure, PLS is well suited to this type of problem.

Given the propensity of prediction error distributions to be long tailed, error and residual values are transformed to standard normal variates using inverse hyperbolic sine (IHS) functions (Johnson, 1949; Burbidge et al., 1988; Tsai et al., 2017) with parameters derived from samples with similar analyte (TOR) concentrations. Such a transformation aids identification of systematic errors in prediction related to sample collection time and location; a control chart for TOR-equivalent OC is displayed in Fig. 6. Each prediction error is then characterized by its Z score, which gives an immediate indication of its relation to other prediction errors for samples with similar concentrations. Because of the IHS transformation, the magnitude of an error does not scale linearly with vertical distance on the chart, but the chart conveys the centrality, sign, and bounds of the error (e.g., ±3 units from the mean encompass about 99.7 % of errors in samples of similar concentration). In this data set, prediction errors for Sac and Fox (SAFO) in each concentration regime are positively biased during the winter but trend systematically toward the mean approaching the summer months. Other high-error samples near the ±3 probit limits occur in the urban environment of Phoenix, where TOR OC concentrations are also highest. However, the prevalence of higher errors at only one of the two Phoenix samplers (PHOE5) may indicate sampler differences rather than unusual atmospheric composition. Errors are negatively biased during the summer months at Trapper Creek, when TOR OC concentrations are typically low.
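The idea of converting errors to concentration-conditioned Z scores can be sketched as below. This is a deliberately simplified stand-in, not the published procedure: instead of fitting Johnson-type IHS distribution parameters, it uses the median and median absolute deviation of the errors of the k samples with the closest reference concentrations as the center and scale of an inverse hyperbolic sine map, then standardizes the mapped value against that neighborhood (the Fig. 6 chart used 175 neighbors). The function name and synthetic data are hypothetical.

```python
import numpy as np


def ihs_zscores(errors, reference, k):
    """Approximate Z scores of prediction errors via a median-centered IHS map.

    For each sample, the k samples with the closest reference concentrations
    (including the sample itself) set the center (median) and scale (median
    absolute deviation) of an inverse hyperbolic sine transform; the mapped
    value is then standardized against its neighborhood.
    """
    errors = np.asarray(errors, dtype=float)
    reference = np.asarray(reference, dtype=float)
    z = np.empty_like(errors)
    for i in range(errors.size):
        nbrs = np.argsort(np.abs(reference - reference[i]), kind="stable")[:k]
        e = errors[nbrs]
        center = np.median(e)
        scale = np.median(np.abs(e - center))
        if scale == 0:  # degenerate neighborhood: fall back to unit scale
            scale = 1.0
        u = np.arcsinh((e - center) / scale)
        u_i = np.arcsinh((errors[i] - center) / scale)
        sd = u.std(ddof=1)
        z[i] = (u_i - u.mean()) / sd if sd > 0 else 0.0
    return z


# Synthetic heavy-tailed errors with one gross outlier (hypothetical data).
rng = np.random.default_rng(1)
errs = rng.standard_t(3, size=400)
conc = rng.uniform(0.0, 10.0, size=400)
errs[0] = 1e4
z = ihs_zscores(errs, conc, k=100)
flagged = np.flatnonzero(np.abs(z) > 3)  # candidates for the +/-3 control limits
```

The arcsinh map grows only logarithmically for large arguments, so heavy tails are compressed enough for the ±3 limits to be meaningful, while a gross outlier such as the first sample still lands well outside them.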

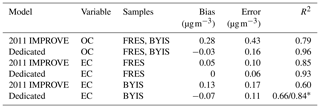

Table 2. Description and figures of merit for “base case” models. “Predictors” describes the number of wavenumbers and “Components” describes the number of LVs. Bias and errors are estimated by ensemble medians.

Systematic errors arising from underrepresentation of the concentration or composition range in the IMPROVE calibration set were investigated by Dillner and Takahama (2015a, b) through deliberate permutations of calibration and test set samples. This study is discussed together with model interpretation (Sect. 3.5.1). Weakley et al. (2018b) found systematic errors with respect to OC ∕ EC ratios when predicting TOR-equivalent EC concentrations in the CSN network. These samples originated from Elizabeth, NJ (ELLA), which differed from the nine other examined sites on account of high contributions from diesel PM and the extent of reduced charring compared to other samples. The solution was to build a separate calibration model (Sect. 3.5.3).

3.3 Spectral preparation

Mid-IR spectra can be processed in many different ways for use in calibration. The primary reasons for spectral processing are to remove influences from scattering, so that calibration models follow the linear relation outlined in Eq. (4), and to remove unnecessary wavenumbers or spectral regions that degrade prediction quality or interpretability. Scattering by particles manifests as a broad contribution to the spectrum measured by FT-IR spectroscopy and is addressed by a class of statistical methods referred to as baseline correction (Sect. 3.3.1). It is even possible to model nonlinear relationships, such as the scattering contribution to the signal, using a linear model with additional LVs, but these phenomena may then become mixed with the noise in the model (Borggaard and Thodberg, 1992; Despagne and Luc Massart, 1998). Eliminating unnecessary wavenumbers can reduce noise in the predictions and aid interpretation of the important absorption bands used for prediction; the class of procedures used for this purpose is referred to as variable selection or uninformative variable elimination, among other names (Sect. 3.3.2). Some algorithms can separate the influence of the background and select variables in the process of finding the optimal set of coefficients b in Eq. (5). In each of the following sections, each topic in spectral processing is introduced before its applications to TOR calibrations are described.

3.3.1 Baseline correction

Baseline correction can be fundamental to the way spectra are analyzed quantitatively. Significant challenges exist in separating the analyte signal from the baseline of mid-IR spectra, including the superposition of broad analyte absorption bands (O–H stretches in particular) on the broadly varying background contributions from scattering. The algorithm for baseline correction may therefore depend on the type of analyte and the broadness of its profile; optimization of the correction becomes more important as concentrations decrease to levels at which the analyte signal is difficult to distinguish from the baseline. Approaches can be categorized as reference dependent or reference independent (Rinnan et al., 2009) and can be handled within or outside of the regression step. Reference-dependent methods define the baseline with respect to an external measurement, which may be a reference spectrum (Afseth and Kohler, 2012) or concentrations of an analyte. For instance, orthogonal signal correction (OSC) (Wold et al., 1998) isolates contributions to the spectrum that are uncorrelated with the analyte, which can be conceptualized as containing baseline effects. OSC can be incorporated into PLS, in which case the orthogonal contribution is represented by underlying LVs (Trygg, 2002). Even without explicit specification of orthogonal components, the influence of baseline effects is accounted for by multiple LVs in the standard PLS model (Dillner and Takahama, 2015a). Reference-independent baseline correction methods remove baseline contributions based on the structure of the signal without invoking reference values. Two examples described below are interpolation and derivative correction methods. A more comprehensive discussion of this topic is provided by Rinnan et al. (2009).

While theories for absorption peak profiles are abundant, the lack of corresponding theories for baselines (Dodd and DeNoyer, 2006) leads to semiempirical approaches for modeling their effects. If we conceptualize the broad baseline as an Nth-order polynomial, we can approximate this expression with an analytical function or algorithm. Models can be considered to be (globally) parametric (e.g., polynomial, exponential) across a defined region of a spectrum, or nonparametric (e.g., spline or convex hull; Eilers, 2004), in which case local features of the spectrum are given more importance. These approaches typically determine the form of the curve by training a model on regions without significant analyte absorption and interpolating it through the analyte region. The modeled baseline is then subtracted from the raw spectrum such that the analyte contribution remains. Model parameters are selected such that processed spectra conform to physical expectations, namely that blank absorbances are close to zero and analyte absorbances are nonnegative. In general, these approaches aim to isolate the visually recognizable absorption contribution to the spectra and therefore most closely conform to traditional approaches for manual baseline removal used by spectroscopists. In addition to quantitative calibration and factor analytic applications (e.g., multivariate curve resolution; de Juan and Tauler, 2006), these spectra are more amenable to spectral matching.
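A minimal sketch of the interpolation approach with a globally parametric (polynomial) baseline follows: the polynomial is fit only where a mask marks analyte-free wavenumbers, evaluated across the whole spectrum, and subtracted. The mask, the polynomial order, and the synthetic spectrum are assumptions for illustration; in practice both the fitting regions and the order would be tuned so that blank absorbances come out near zero and analyte absorbances are nonnegative.

```python
import numpy as np
from numpy.polynomial import polynomial as poly


def polynomial_baseline_correct(wavenumber, absorbance, analyte_free_mask, order=2):
    """Fit a polynomial baseline to analyte-free regions and subtract it everywhere."""
    wn = np.asarray(wavenumber, dtype=float)
    a = np.asarray(absorbance, dtype=float)
    x = (wn - wn.mean()) / wn.std()  # rescale wavenumbers for numerical stability
    coefs = poly.polyfit(x[analyte_free_mask], a[analyte_free_mask], order)
    baseline = poly.polyval(x, coefs)  # interpolated through the analyte region
    return a - baseline, baseline


# Synthetic spectrum: one Gaussian band on a quadratic background (hypothetical).
wn = np.linspace(1500.0, 4000.0, 501)
band = 0.1 * np.exp(-0.5 * ((wn - 2900.0) / 50.0) ** 2)
background = 0.05 + 2e-5 * (wn - 1500.0) - 3e-9 * (wn - 1500.0) ** 2
mask = np.abs(wn - 2900.0) > 250.0  # assumed analyte-free regions
corrected, baseline = polynomial_baseline_correct(wn, band + background, mask)
```

Because the synthetic background is exactly quadratic, the corrected spectrum recovers the band almost perfectly; with real scattering baselines, the choice of order trades flexibility against the risk of absorbing part of a broad band into the baseline.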

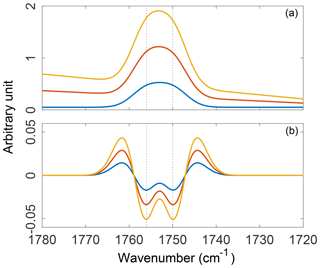

Alternatively, taking the nth derivative of the spectrum removes the first n terms (orders 0 through n − 1) of the Nth-order polynomial and transforms the rest of the signal (DeNoyer and Dodd, 2006). Since Gaussian (and most absorption) bands are not well approximated by low-order polynomials, they are not eliminated; i.e., their relative amplitudes and half-widths (ideally) remain unaffected by the transformation. This ensures that the information they carry is retained for multivariate FT-IR calibrations (Weakley et al., 2016). Moreover, derivative-based methods can improve the resolution of absorption bands (illustrated in Fig. 7). However, derivative transformations degrade the signal-to-noise (S∕N) ratio by inflating the relative contribution of small perturbations. Therefore, smoothed derivative methods such as the three-parameter Savitzky–Golay filter (Savitzky and Golay, 1964) are favored to minimize this effect, and in practice only first and second derivatives are generally used with vibrational spectra to maintain a reasonable S∕N ratio (Rinnan, 2014). In complex aerosol spectra, caution must be exercised when interpreting bands resolved by smoothed derivative filters, since the filter parameters (e.g., bandwidth, kernel) all influence the outcome of the transformation. A major disadvantage of derivative filtering, in addition to the reduced visual connection to the original spectrum, is the inadvertent removal of broad absorption bands (Griffiths, 2006). Tuning filter parameters by trial and error may limit this type of band suppression to some extent. As a rule of thumb, the broad O–H stretches of alcohols (3650–3200 cm−1) and carboxylic acids (3400–2400 cm−1) and the N–H stretches of amines (3500–3100 cm−1) are likely to be sacrificed by derivative filtering (Shurvell, 2006). In practice, this loss of information must be weighed against the simplicity and speed afforded by derivative methods.
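The smoothed-derivative idea can be sketched by computing Savitzky–Golay weights directly as a least-squares polynomial fit over a moving window. The sketch assumes evenly spaced points and simply drops the (window − 1)/2 edge points on each side; the function names are hypothetical, and `scipy.signal.savgol_filter` provides a tested implementation with edge handling. Mirroring Fig. 7, it uses a quadratic, five-point, second-derivative filter and checks that a linear baseline is removed while the band is retained.

```python
import math
import numpy as np

def savgol_coeffs(window, polyorder, deriv):
    """Savitzky-Golay weights for the deriv-th derivative at the window center."""
    half = window // 2
    z = np.arange(-half, half + 1)
    A = np.vander(z, polyorder + 1, increasing=True)  # columns z**0 ... z**polyorder
    # Row `deriv` of pinv(A) maps window values to the z**deriv coefficient;
    # the derivative at z = 0 is deriv! times that coefficient.
    return math.factorial(deriv) * np.linalg.pinv(A)[deriv]

def savgol_derivative(y, window=5, polyorder=2, deriv=2, delta=1.0):
    """Smoothed derivative of evenly spaced data; edge points are dropped."""
    h = savgol_coeffs(window, polyorder, deriv)
    # 'valid' correlation: out[i] is centered on y[i + window // 2]
    return np.correlate(y, h, mode="valid") / delta**deriv

x = np.arange(200, dtype=float)
peak = np.exp(-(((x - 100.0) / 8.0) ** 2))
linear_baseline = 0.5 + 0.01 * x
d2_total = savgol_derivative(peak + linear_baseline)
d2_peak = savgol_derivative(peak)
```

For a five-point quadratic window the second-derivative weights reduce to (2, −1, −2, −1, 2)/7, which sum to zero and are symmetric, so constant and linear baseline terms cancel exactly while the band appears as a negative lobe at its center.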

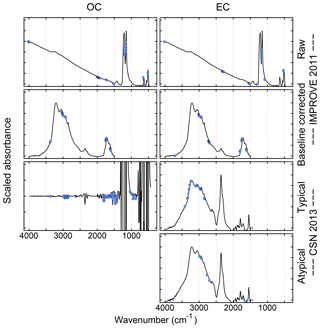

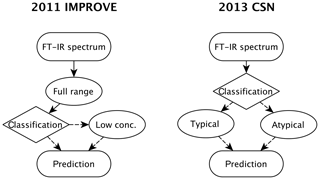

Different approaches have been used to process spectra for TOR calibration, including two interpolation methods and one derivative method. Spectral processing is useful for spectra of PM collected on PTFE filters because of the significant contribution of scattering from the PTFE (McClenny et al., 1985). Small differences in filter characteristics lead to high variation in the PTFE contribution to each spectrum; simple subtraction of a similar blank filter, or of the same filter prior to PM loading, is not adequate to obtain spectra amenable to calibration (Takahama et al., 2013). As the magnitude of this variability is typically greater than the analyte absorbances, baseline correction models trained on a set of blank filters typically do not perform adequately in isolating the nonnegative absorption profile of a new spectrum. Accurate predictions made by PLS without explicit baseline correction suggest that the calibration model is able to incorporate these interferences effectively within its feature space if trained on ambient and blank samples together, though visually interpretable spectra for general use are not necessarily retrievable from this model. For this purpose, models based on interpolation from the sample spectrum itself have been preferred. Takahama et al. (2013) described semiautomated polynomial and linear fitting to remove PTFE residuals remaining in blank-subtracted spectra, building on prior work on manual baseline correction by Maria et al. (2003) and Gilardoni et al. (2007). This correction method had previously been used for spectral peak fitting, cluster analysis, and factor analysis (Russell et al., 2009; Takahama et al., 2011), and was used for the 2011 IMPROVE TOR OC and EC calibrations shown in Table 2 (Dillner and Takahama, 2015a, b; Takahama et al., 2016). Kuzmiakova et al. (2016) introduced a smoothing spline method that produced baseline-corrected ambient spectra similar to those from the polynomial method (both visually and with respect to clustering and calibration) without the need for PTFE blank subtraction. While the non-analyte regions of the spectra must still be assumed implicitly, the flexibility of the local splines combined with an iterative method for readjusting the non-analyte region effectively reduced the number of tuning parameters from four (in the global polynomial approach) to one. The spline baseline method was used for TOR EC prediction in the 2013 CSN (Weakley et al., 2018b). The second-derivative baseline correction method was applied to the 2013 CSN TOR OC calibration (Weakley et al., 2016).

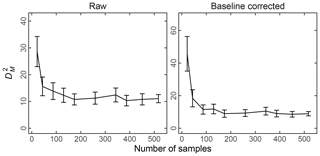

Overall, differences in TOR prediction performance between calibration models built from spline-corrected and raw spectra were minor for the samples evaluated in 2011 IMPROVE (results were comparable to the metrics in Table 2). However, the wavenumbers remaining after uninformative ones were eliminated (Sect. 3.3.2) differed between baseline-corrected and raw spectra, even though the two models maintained similar prediction performance. Weakley et al. (2016) and Weakley et al. (2018b) used the Savitzky–Golay method and the spline correction method for TOR OC and EC, respectively, in the 2013 CSN but did not systematically investigate the isolated effect of baseline correction on predictions without additional processing. A formal comparison of the derivative method against raw and spline-corrected spectra has not been performed and is an area warranting further investigation. Standardizing a spectral correction protocol according to the target analyte is a sensible strategy, as spectral derivatives enhance specific regions of the spectra. The selection of a baseline correction method may also take into account the areal density of the sample, since S∕N is reduced by derivative methods. However, the success of derivative methods demonstrated for TOR OC in CSN samples (with systematically lower areal loadings than IMPROVE samples) indicates that the reduction in S∕N is not likely to be a limiting factor for quantification in this application.

The derivative method appears to have a significant advantage in reducing the number of LVs, as demonstrated for TOR OC (Table 2). The derivative-corrected spectra model for 2013 CSN required only four components, in contrast to the 35 selected by the raw spectra model. While wavenumber selection and a different model selection criterion were simultaneously applied to the derivative-corrected model, the simplification is likely due in large part to the baseline correction. For reference, reduced-wavenumber raw spectra models for 2011 IMPROVE TOR OC and EC still required seven to nine components (the full-wavenumber models required 15–28, depending on spectral baseline correction) (Takahama et al., 2016). A parsimonious model is desirable because it facilitates physical interpretation of individual LVs, as further discussed in Sect. 3.4.

The effect of baseline correction in reducing scattering contributions is illustrated by revisiting the TOR-equivalent OC predictions for the 2013 IMPROVE data set. Reggente et al. (2016) found that the 2011 IMPROVE calibration model based on raw spectra performed poorly in extrapolation to two new sites in 2013, FRES and BYIS. When baseline-corrected spectra are used, the median bias and error for samples from these sites are reduced from 0.28 and 0.43 to 0.19 and 0.28 µg m−3, respectively, and R² increases from 0.79 to 0.91 (the figure for baseline-corrected predictions is shown in Sect. 4.1.1). As the filter type remained the same, this improvement in prediction accuracy is likely due to the removal of scattering contributions from PM2.5 particles in the new set that differ from those in the calibration set. Spectral signatures of nitrate and dust suggested the presence of coarse particles different from those in the 2011 calibration (and test) set samples (Sect. 4.1).
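The performance metrics quoted above can be computed as in the sketch below. For illustration it assumes that bias is the median of (prediction − reference), error is the median absolute difference, and R² is the squared Pearson correlation between predicted and reference values; these definitions and the input values are assumptions for this sketch, not taken from the cited studies.

```python
import numpy as np

def evaluation_metrics(predicted, reference):
    """Median bias, median absolute error, and squared Pearson correlation."""
    predicted = np.asarray(predicted, dtype=float)
    reference = np.asarray(reference, dtype=float)
    diff = predicted - reference
    bias = np.median(diff)            # assumed definition: median(prediction - reference)
    error = np.median(np.abs(diff))   # assumed definition: median |prediction - reference|
    r2 = np.corrcoef(predicted, reference)[0, 1] ** 2
    return bias, error, r2

# Hypothetical OC concentrations in µg m-3
bias, error, r2 = evaluation_metrics([1.1, 2.2, 2.9, 4.4], [1.0, 2.0, 3.0, 4.0])
```

Median-based statistics are less sensitive than means to a few poorly predicted samples, which matters when a small number of filters from new sites dominate the errors.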

Figure 7. Three synthetic absorption spectra constructed with varying contributions from a polynomial baseline and two unresolved Gaussian peaks (a) and their second-order, five-point, second-derivative Savitzky–Golay filter transformations (b). Absorption spectra were constructed such that the additive, linear, and polynomial components of the baseline scale with the amplitude of the absorption bands.

3.3.2 Wavenumber selection

Wavenumber or variable selection techniques aim to improve PLS calibrations by identifying and using only germane predictor variables (Balabin and Smirnov, 2011; Höskuldsson, 2001; Mehmood et al., 2012). Typically, such techniques remove variables deemed excessively redundant, enhance the precision of PLS calibration, reduce collinearity in the variables (and therefore model complexity) (Krämer and Sugiyama, 2011), and possibly improve interpretability of the regression. The simplest variable selection method, based on physical insight rather than algorithmic reduction, is truncation, in which spectral regions where absorbances are not expected, or are expected to be uninformative, are removed a priori. Algorithmic variable selection techniques fall into three categories: filter, wrapper, and embedded methods (Saeys et al., 2007; Mehmood et al., 2012).

Filter methods provide a one-time (single-pass) measure of variable importance, with important and redundant variables distinguished according to a reliability threshold. Variables above this threshold are retained and used for PLS calibration. Thresholds are often either arbitrary or heuristically determined (Chong and Jun, 2005; Gosselin et al., 2010). In general, filter methods are limited by the need to choose an appropriate threshold prior to calibration, potentially leading to a suboptimal subset of variables.
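As an example of a single-pass filter, the sketch below computes variable importance in projection (VIP) scores from a PLS1 model and retains variables with VIP > 1, a commonly used heuristic cutoff. The cutoff, the NIPALS implementation, the function names, and the synthetic data are assumptions for illustration rather than the procedure of any study cited here.

```python
import numpy as np

def pls1_nipals(X, y, n_comp):
    """Return loading weights W, scores T, and y-loadings q of a PLS1 model."""
    Xk, yk = X.copy(), y.copy()
    W, T, q = [], [], []
    for _ in range(n_comp):
        w = Xk.T @ yk
        w /= np.linalg.norm(w)
        t = Xk @ w
        tt = t @ t
        p = Xk.T @ t / tt
        c = (yk @ t) / tt
        Xk -= np.outer(t, p)   # deflate X and y
        yk -= c * t
        W.append(w); T.append(t); q.append(c)
    return np.column_stack(W), np.column_stack(T), np.array(q)

def vip_scores(X, y, n_comp):
    """Variable importance in projection for each column of centered X."""
    W, T, q = pls1_nipals(X, y, n_comp)
    ss = q**2 * np.sum(T**2, axis=0)          # y variance explained by each LV
    Wn = W / np.linalg.norm(W, axis=0)        # unit-norm weights per LV
    return np.sqrt(X.shape[1] * (Wn**2 @ ss) / ss.sum())

# Synthetic data: only the first three of 20 variables carry signal
rng = np.random.default_rng(1)
X = rng.normal(size=(100, 20))
y = X[:, :3] @ np.array([2.0, -2.0, 2.0]) + 0.1 * rng.normal(size=100)
X, y = X - X.mean(axis=0), y - y.mean()
vip = vip_scores(X, y, n_comp=3)
selected = np.flatnonzero(vip > 1.0)   # heuristic threshold (assumption)
```

Because the squared VIP scores average to exactly 1 across variables, a cutoff of 1 retains variables of above-average importance; it also illustrates the limitation noted above, since nothing guarantees that this fixed threshold yields the best subset.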

The essential principle of wrapper methods is to apply variable filters successively or iteratively to sample data until only a desirable subset of quintessential variables remains for PLS modeling (Leardi, 2000; Leardi and Nørgaard, 2004; Weakley et al., 2014). Wrappers operate under the implicit assumption that single-pass filters are inadequate, requiring a guided approach to comprehensively search for the optimal subset of modeling variables. Since searching all $2^{p}-1$ combinations of wavenumbers is not tractable for multivariate FT-IR calibration problems ($p>10^{3}$), model inputs (or importance weights) are generally randomized at each pass of the algorithm to develop importance criteria, forgoing an exhaustive variable search. Genetic algorithms and backward Monte Carlo unimportant variable elimination (BMCUVE) are two examples of randomized wrapper methods (Leardi, 2000; Leardi and Nørgaard, 2004). Wrapper methods generally perform better than simple filter methods and have the additional benefit of considering both variables and PLS components simultaneously during optimization. Their major drawback is a generally longer run time than filter methods (which may be on the order of hours for large-scale problems).
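The randomized-wrapper idea can be illustrated with a simplified backward elimination driven by Monte Carlo coefficient stability, in the spirit of (but not identical to) BMCUVE: PLS1 models are refit on random 80 % subsets of samples, each variable's stability is |mean(b_j)|/std(b_j), the least stable 20 % of variables are dropped at each pass, and the subset with the lowest cross-validated RMSE is returned. The subset fraction, drop fraction, fold assignment, function names, and synthetic data are all assumptions chosen for brevity.

```python
import numpy as np

def pls1_coef(X, y, n_comp):
    """PLS1 (NIPALS) regression vector b = W (P^T W)^-1 q for centered X, y."""
    Xk, yk = X.copy(), y.copy()
    W, P, q = [], [], []
    for _ in range(n_comp):
        w = Xk.T @ yk
        w /= np.linalg.norm(w)
        t = Xk @ w
        tt = t @ t
        p = Xk.T @ t / tt
        c = (yk @ t) / tt
        Xk -= np.outer(t, p)   # deflate X and y
        yk -= c * t
        W.append(w); P.append(p); q.append(c)
    W, P = np.column_stack(W), np.column_stack(P)
    return W @ np.linalg.solve(P.T @ W, np.array(q))

def cv_rmse(X, y, n_comp, n_folds=5):
    """Root-mean-square error from k-fold cross-validation (interleaved folds)."""
    folds = np.arange(len(y)) % n_folds
    sq_err = 0.0
    for k in range(n_folds):
        test = folds == k
        mx, my = X[~test].mean(axis=0), y[~test].mean()
        b = pls1_coef(X[~test] - mx, y[~test] - my, n_comp)
        sq_err += np.sum(((X[test] - mx) @ b + my - y[test]) ** 2)
    return np.sqrt(sq_err / len(y))

def mc_stability(X, y, n_comp, rng, n_runs=50, frac=0.8):
    """|mean(b_j)| / std(b_j) of PLS coefficients over random sample subsets."""
    n = len(y)
    B = np.empty((n_runs, X.shape[1]))
    for r in range(n_runs):
        idx = rng.choice(n, size=int(frac * n), replace=False)
        Xs, ys = X[idx], y[idx]
        B[r] = pls1_coef(Xs - Xs.mean(axis=0), ys - ys.mean(), n_comp)
    return np.abs(B.mean(axis=0)) / B.std(axis=0)

def backward_mc_elimination(X, y, n_comp, rng, drop_frac=0.2):
    """Iteratively drop the least stable variables; keep the best CV subset."""
    keep = np.arange(X.shape[1])
    best_rmse, best_keep = cv_rmse(X, y, n_comp), keep
    while len(keep) > n_comp:
        stability = mc_stability(X[:, keep], y, n_comp, rng)
        n_keep = max(n_comp, int(len(keep) * (1 - drop_frac)))
        keep = keep[np.sort(np.argsort(stability)[::-1][:n_keep])]
        rmse = cv_rmse(X[:, keep], y, n_comp)
        if rmse < best_rmse:
            best_rmse, best_keep = rmse, keep
    return best_keep

# Synthetic data: only the first four of 40 variables carry signal
rng = np.random.default_rng(0)
X = rng.normal(size=(80, 40))
y = X[:, :4] @ np.array([1.0, 2.0, -1.5, 1.0]) + 0.1 * rng.normal(size=80)
selected = backward_mc_elimination(X, y, n_comp=4, rng=rng)
```

The nested loops (Monte Carlo refits inside each elimination pass) make the cost of wrappers explicit: with thousands of wavenumbers and more components to screen, the number of PLS fits grows quickly, which is the run-time drawback noted above.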

As their name implies, embedded methods nest variable selection directly into the main body of the regression algorithm. For example, sparse PLS (SPLS) methods eliminate variables from the PLS loading weights (w), which reduces the number of nonzero regression coefficients (b) when they are reconstructed through Eq. (5) (Filzmoser et al., 2012). The zero-valued loading weights obtained for each LV may permit a component-specific interpretation of important wavenumbers but lead to a set of regression coefficients that is overall not as sparse as those from methods imposing sparsity directly on the regression coefficients (Takahama et al., 2016).
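The effect described for SPLS can be illustrated with a simplified PLS1 in which each loading-weight vector w is soft-thresholded before normalization, with the threshold set to a fraction `eta` of the largest |w|. This is a sketch of the general sparse-PLS idea under that assumption, not the specific algorithm of Filzmoser et al. (2012). Regression coefficients are rebuilt with the standard PLS1 relation $\mathbf{b} = \mathbf{W}(\mathbf{P}^{\mathsf{T}}\mathbf{W})^{-1}\mathbf{q}$, so an entry of b is zero only where every LV's weight is zero. Names and data are hypothetical.

```python
import numpy as np

def sparse_pls1(X, y, n_comp, eta=0.7):
    """PLS1 with soft-thresholded loading weights (simplified sparse PLS).

    X, y are mean-centered; 0 <= eta < 1 sets the threshold as eta * max|w|.
    Returns the loading-weight matrix W (one column per LV) and coefficients b.
    """
    Xk, yk = X.copy(), y.copy()
    W, P, q = [], [], []
    for _ in range(n_comp):
        w = Xk.T @ yk
        # soft-threshold: small weights become exactly zero
        w = np.sign(w) * np.maximum(np.abs(w) - eta * np.abs(w).max(), 0.0)
        w /= np.linalg.norm(w)
        t = Xk @ w
        tt = t @ t
        p = Xk.T @ t / tt
        c = (yk @ t) / tt
        Xk -= np.outer(t, p)   # deflate X and y
        yk -= c * t
        W.append(w); P.append(p); q.append(c)
    W, P = np.column_stack(W), np.column_stack(P)
    b = W @ np.linalg.solve(P.T @ W, np.array(q))
    return W, b

rng = np.random.default_rng(2)
X = rng.normal(size=(60, 50))
y = X[:, :5] @ np.array([1.0, -1.0, 2.0, 1.5, -0.5]) + 0.1 * rng.normal(size=60)
X, y = X - X.mean(axis=0), y - y.mean()
W, b = sparse_pls1(X, y, n_comp=3)
used = np.any(W != 0, axis=1)  # variables with a nonzero weight in any LV
```

Each column of W is sparse on its own, but b inherits the union of their supports, which is why per-LV sparsity yields regression vectors that are less sparse than those from penalties applied directly to b.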

Many methods select informative variables individually, but for spectroscopic applications it is often desirable to select a group of variables associated with the same absorption band. Elastic net (EN) regularization (Friedman et al., 2010) adds an L2 penalty to the regression coefficient vector in addition to the L1 penalty imposed by the least absolute shrinkage and selection operator (LASSO) (Tibshirani, 1996), thereby imparting a grouping effect in selection. Interval variable selection methods (Wang et al., 2017) draw upon methods discussed previously but employ additional constraints or windowing methods to target selection of contiguous variables (i.e., an algorithmic approach to truncation).
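The grouping effect can be demonstrated with a small coordinate-descent solver for the elastic-net objective in the form used by Friedman et al. (2010), $\frac{1}{2n}\lVert\mathbf{y}-\mathbf{X}\mathbf{b}\rVert_2^2 + \lambda\left[\alpha\lVert\mathbf{b}\rVert_1 + \frac{1-\alpha}{2}\lVert\mathbf{b}\rVert_2^2\right]$. Two identical columns stand in, as a hypothetical idealization, for adjacent wavenumbers within one absorption band: the pure LASSO ($\alpha = 1$) places the weight on only one of them, whereas the elastic net ($\alpha < 1$) splits it equally. The solver, its parameter values, and the data are illustrative; established implementations (e.g., glmnet or scikit-learn's `ElasticNet`) also handle standardization, intercepts, and regularization paths.

```python
import numpy as np

def soft_threshold(z, gamma):
    """Soft-thresholding operator S(z, gamma) = sign(z) * max(|z| - gamma, 0)."""
    return np.sign(z) * max(abs(z) - gamma, 0.0)

def elastic_net_cd(X, y, lam, alpha, n_iter=5000, tol=1e-12):
    """Cyclic coordinate descent for the elastic-net objective (no intercept).

    X and y are assumed mean-centered; alpha = 1 gives the LASSO.
    """
    n, p = X.shape
    b = np.zeros(p)
    r = y.copy()                          # residual y - X b for b = 0
    col_sq = (X ** 2).sum(axis=0) / n
    for _ in range(n_iter):
        max_change = 0.0
        for j in range(p):
            old = b[j]
            # correlation of column j with the partial residual (excluding b_j)
            rho = X[:, j] @ r / n + col_sq[j] * old
            new = soft_threshold(rho, lam * alpha) / (col_sq[j] + lam * (1 - alpha))
            if new != old:
                r -= X[:, j] * (new - old)
                b[j] = new
                max_change = max(max_change, abs(new - old))
        if max_change < tol:
            break
    return b

rng = np.random.default_rng(3)
n = 200
band = rng.normal(size=n)
X = np.column_stack([band, band, rng.normal(size=(n, 3))])  # columns 0 and 1 identical
X -= X.mean(axis=0)
y = 2.0 * X[:, 0] + 0.1 * rng.normal(size=n)
y -= y.mean()

b_lasso = elastic_net_cd(X, y, lam=0.1, alpha=1.0)
b_enet = elastic_net_cd(X, y, lam=0.1, alpha=0.5)
```

The L2 term makes the objective strictly convex, so its unique minimizer must treat identical columns symmetrically; the L1 term alone has no such preference, and the solver keeps whichever column it updates first.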