the Creative Commons Attribution 4.0 License.

SegCloud: a novel cloud image segmentation model using a deep convolutional neural network for ground-based all-sky-view camera observation

Wanyi Xie

Ming Yang

Shaoqing Chen

Benge Wang

Zhenzhu Wang

Yingwei Xia

Yong Liu

Yiren Wang

Chaofan Zhang

Cloud detection and cloud properties have substantial applications in weather forecasting, signal attenuation analysis, and other cloud-related fields. Cloud image segmentation is a fundamental step in deriving cloud cover. However, traditional segmentation methods rely on low-level visual features of clouds and often fail to achieve satisfactory performance. Deep convolutional neural networks (CNNs) can extract high-level feature information of objects and have achieved remarkable success in many image segmentation fields. On this basis, a novel deep CNN model named SegCloud is proposed and applied for accurate cloud segmentation based on ground-based observation. Architecturally, SegCloud possesses a symmetric encoder–decoder structure. The encoder network combines low-level cloud features to form high-level, low-resolution cloud feature maps, whereas the decoder network restores these high-level cloud feature maps to the resolution of the input images. A Softmax classifier then performs pixel-wise classification and outputs the segmentation results. SegCloud has powerful cloud discrimination capability and can automatically segment whole-sky images obtained by a ground-based all-sky-view camera. The performance of SegCloud is validated by extensive experiments, which show that SegCloud is effective and accurate for ground-based cloud segmentation and achieves better results than traditional methods do. The accuracy and practicability of SegCloud are further demonstrated by applying it to cloud cover estimation.

Clouds are among the most common and important meteorological phenomena, covering over 66 % of the global surface (Rossow and Schiffer, 1991; Carslaw, 2009; Stephens, 2005; Zhao et al., 2019; Wang and Zhao, 2017). The analysis of cloud condition and cloud cover plays a key role in various applications (Papin et al., 2002; Yang et al., 2014; Yuan et al., 2015; Li et al., 2018; Ma et al., 2018; Bao et al., 2019). Ground-based cloud observation provides localized, simultaneous cloud conditions with high temporal and spatial resolution. Many ground-based cloud measurement devices, such as radar and lidar, are used to detect clouds (Zhao et al., 2014; Garrett and Zhao, 2013; Huang et al., 2012; Wang and Zhao, 2017). In particular, ground-based all-sky-view imaging devices have been increasingly developed in recent decades (Long et al., 2001; Genkova et al., 2004; Feister and Shields, 2005; Tapakis and Charalambides, 2013) because of their large field of view and low cost. Accurate cloud segmentation is a primary precondition for cloud analysis with ground-based all-sky-view imaging equipment; it improves the precision of derived cloud cover information and helps meteorologists further understand climatic conditions. Therefore, accurate cloud segmentation has become a topic of interest, and many algorithms have recently been proposed for the cloud analysis of ground-based all-sky-view imaging instruments (Long et al., 2006; Kreuter et al., 2009; Heinle et al., 2010; Liu et al., 2014, 2015).

Traditional segmentation methods generally use color to distinguish clouds from clear sky: cloud particles scatter light with similar intensity in the blue and red bands because of Mie scattering, whereas air molecules scatter more strongly in the blue band than in the red band because of Rayleigh scattering. Thus, the blue and red channel values of a cloud image can serve as features for cloud segmentation. Long et al. (2006) and Kreuter et al. (2009) proposed a fixed-threshold algorithm that uses the ratio of red to blue channel values to identify clouds in whole-sky images. Specifically, pixels whose red-to-blue ratio is greater than the fixed threshold are identified as cloud, and all others as clear sky. Similarly, Heinle et al. (2010) used the difference between the red and blue channel values as the criterion for detecting clouds. In addition to the red and blue channel values, Souzaecher et al. (2004) selected saturation as a complementary characteristic for cloud identification. These fixed-threshold algorithms strongly depend on camera specifications and atmospheric conditions and therefore do not adapt to varied sky conditions (Long and Charles, 2010). The graph-cut method (Liu et al., 2015) and the superpixel segmentation algorithm (Liu et al., 2014) have also been applied to cloud segmentation to overcome this drawback. Although they bring some improvement, the performance of such algorithms remains unsatisfactory in real measurement applications. Therefore, accurate and robust cloud segmentation algorithms still need to be developed.
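The fixed red-to-blue ratio rule described above can be sketched in a few lines. This is only an illustrative sketch, not the implementation of any cited work: the function name `rb_ratio_mask`, the nested-list image layout, and the guard against a zero blue channel are assumptions made for the example, and the threshold is left as a parameter because it depends on the camera and atmosphere.

```python
def rb_ratio_mask(image, threshold):
    """Label each RGB pixel as cloud (True) or clear sky (False).

    image: list of rows, each row a list of (R, G, B) tuples (assumed layout).
    threshold: fixed red/blue ratio above which a pixel counts as cloud.
    """
    mask = []
    for row in image:
        mask_row = []
        for r, g, b in row:
            # Assumption: clamp the blue channel to 1 to avoid division by zero.
            ratio = r / max(b, 1)
            mask_row.append(ratio > threshold)
        mask.append(mask_row)
    return mask


# Toy example: one saturated blue-sky pixel and one whitish cloud pixel.
toy_image = [[(60, 120, 220), (230, 230, 235)]]
toy_mask = rb_ratio_mask(toy_image, threshold=0.77)
```

In the toy image the sky pixel has a ratio of about 0.27 and the cloud pixel about 0.98, so only the second is marked as cloud. The value 0.77 is the ratio the paper quotes in Sect. 4.2.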

Convolutional neural networks (CNNs) are powerful object recognition technologies that have been widely applied in many fields, such as computer vision and pattern recognition (LeCun and Bengio, 1998; Taigman et al., 2014). CNNs have also achieved breakthrough progress in cloud analysis (Xiao et al., 2019; Shi et al., 2016, 2017; Liang et al., 2017) due to their strong capability in cloud feature representation and advanced cloud feature extraction for accurate cloud identification (LeCun et al., 2015). Yuan et al. (2018) proposed an edge-aware CNN for satellite remote-sensing cloud image segmentation, which was shown to give superior detection results near cloud boundaries. Xiao et al. (2019) proposed an automatic classification model, namely, TL-ResNet152, to achieve accurate recognition of ice crystals in clouds. Zhang et al. (2018) proposed a CloudNet model for ground-based observed cloud categorization; the model outperformed traditional approaches. However, few studies have evaluated the accuracy of CNNs in segmenting cloud images from ground-based all-sky-view imaging instruments.

In this study, we present a CNN model named SegCloud for the accurate segmentation of cloud images based on ground-based observation. The architecture of the proposed SegCloud is straightforward; it comprises an encoder network, a corresponding decoder network, and a final Softmax classifier. SegCloud is characterized by powerful cloud discrimination and can automatically segment the obtained whole-sky images. It improves the accuracy of cloud segmentation and avoids the misrecognition caused by traditional color-based threshold methods. The SegCloud model is trained and tested on a database that consists of 400 whole-sky images and corresponding annotated labels. Extensive experimental results show that the proposed SegCloud model has effective and superior performance for cloud segmentation and has an advantage in recognizing the area near the sun. Moreover, the local cloud cover calculated by the SegCloud model has a high correlation with human observation, which not only further supports the accuracy of SegCloud but also provides a practical reference for future automatic cloud cover observation.

The remainder of this paper is organized as follows. Section 2 describes the cloud segmentation database used in the experiment. Section 3 introduces the SegCloud architecture. Section 4 presents the experimental details and results. Finally, the conclusion and future work are presented in Sect. 5.

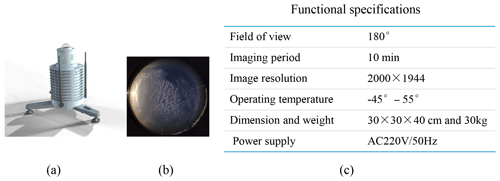

Figure 1. (a) Appearance of ASC100. (b) Whole-sky images captured by ASC100. (c) Functional specifications of ASC100.

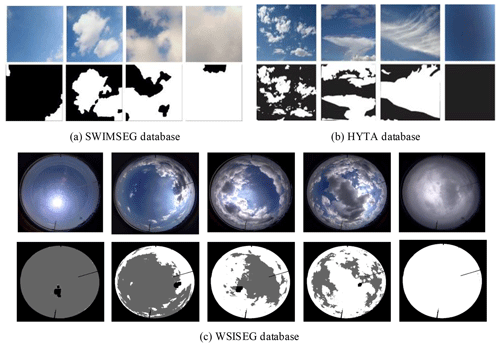

Figure 2. Representative sky images and their corresponding labels from the (a) SWIMSEG database, (b) HYTA (hybrid thresholding algorithm) database, and (c) WSISEG database. The labels of the SWIMSEG and HYTA databases are binary images, where zero represents clear sky and one represents cloud. The labels of the proposed WSISEG database, namely, clouds, sky, and undefined areas (including sun and backgrounds), are marked with gray values 255, 100, and 0, respectively. In comparison with the SWIMSEG and HYTA databases, the proposed WSISEG database better reflects the whole-sky condition.

Sufficient whole-sky images are necessary to evaluate cloud segmentation algorithms comprehensively. In this study, the database used by Tao et al. (2019) is applied to train and test the proposed SegCloud model. The database, called Whole-Sky Image SEGmentation (WSISEG), consists of 400 whole-sky images captured by a ground-based all-sky-view camera (ASC). The appearance and functional specifications of the ASC are shown in Fig. 1a and c, respectively. The basic imaging component of the ASC is a digital camera equipped with a fish-eye lens with a 180° field of view. The digital camera faces the sky directly to capture complete hemispheric sky images, and no traditional solar occulting devices, such as a sun tracker or black shading strip, are required. A high-dynamic-range technique is also applied to obtain clear whole-sky images by fusing 10 photos with different exposure times (from low to high) into one image. Figure 1b presents one whole-sky image sample, which is an RGB image with a resolution of 2000 pixels × 1944 pixels. The WSISEG database collects whole-sky images that cover various cloud covers, times of day, and azimuth and elevation angles of the sun. Details about the database are shown in Fig. 2c. The images are resized to 480×450 resolution to accommodate the input size of the CNN model, and their labels are manually created using photograph editing software. Existing public databases, such as the SWIMSEG (Dev et al., 2017) and HYTA (Li et al., 2011) databases shown in Fig. 2a and b, respectively, contain images that are manually cropped from whole-sky images and avoid areas near or including the sun and horizon. By contrast, the WSISEG database reflects the complete whole-sky condition. Thus, the whole-sky condition can be analyzed, and the performance of a segmentation algorithm in areas near the sun and horizon can be verified on this database. We divide the annotated images of the WSISEG database into a training set (340 annotated images) and a test set (60 annotated images) and ensure that no image appears in both. Images taken under complex air conditions, such as cloud images under heavy haze and fog, are not included in the WSISEG database.
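As a small illustration of the label encoding given in the Fig. 2 caption (gray values 255, 100, and 0 for clouds, sky, and undefined areas), the sketch below converts a gray-value label image into per-pixel class indices. The class indices 0, 1, and 2 and the name `decode_label` are assumptions made for this example; the database only fixes the gray values.

```python
# Gray values come from the Fig. 2 caption; the integer class indices are
# hypothetical and chosen only for this illustration.
GRAY_TO_CLASS = {
    255: 0,  # cloud
    100: 1,  # sky
    0: 2,    # undefined (sun, surrounding background)
}


def decode_label(gray_label):
    """Map a 2-D list of gray values to a 2-D list of class indices.

    Raises KeyError if the label contains a gray value other than 255, 100, or 0.
    """
    return [[GRAY_TO_CLASS[value] for value in row] for row in gray_label]


toy_label = [[255, 100], [0, 255]]
toy_classes = decode_label(toy_label)
```

Failing loudly on unexpected gray values is a deliberate choice here, since resizing a label image with interpolation can introduce intermediate values that would otherwise be silently misclassified.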

Detecting clouds from whole-sky images remains challenging for traditional cloud segmentation methods based on low-level visual information. CNNs can mine high-level cloud feature representations and are a natural choice for solving cloud segmentation problems. Thus, the SegCloud model is proposed and applied for whole-sky image segmentation. In this section, we first describe the overall layout of the SegCloud model (Sect. 3.1) and then elaborate on its training process (Sect. 3.2).

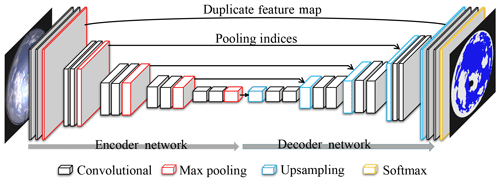

Figure 3. Illustration of the proposed SegCloud architecture. Overall, the network contains an encoder network, a corresponding decoder network, and a final Softmax classifier.

3.1 SegCloud architecture

SegCloud is an optimized CNN model designed for end-to-end cloud segmentation. Figure 3 shows that SegCloud consists of an encoder network, a corresponding decoder network, and a final Softmax classifier. SegCloud is derived from the VGG-16 network (Simonyan and Zisserman, 2015). The VGG-16 network is one of the best CNN architectures for image classification; it achieved great success in the ImageNet Challenge in 2014 and has been applied in many other fields (Wang et al., 2016). In this study, we modify the VGG-16 network and obtain our SegCloud model by replacing the fully connected layers of the original VGG-16 network with the decoder network to achieve end-to-end cloud image segmentation. SegCloud takes a batch of fixed-size whole-sky images as input. The encoder network transforms the input images into high-level cloud feature representations, whereas the decoder network enlarges the cloud feature maps extracted by the encoder network to the resolution of the input images. Finally, the outputs of the decoder network are fed to a Softmax classifier to classify each pixel and produce the segmentation prediction.

3.1.1 Encoder network

The encoder network of SegCloud consists of 10 convolutional layers and 5 max-pooling layers. Each convolutional layer contains three operations, namely, convolution, batch normalization (Ioffe and Szegedy, 2015), and rectified linear unit (ReLU) activation (Hinton, 2010). First, the convolution operation accepts the input feature maps and produces a set of output feature maps using a trainable filter bank with a 3×3 window size and a stride of 1. Batch normalization is then used to normalize these feature maps to accelerate the convergence of the SegCloud model and alleviate the vanishing gradient problem during training. The following ReLU activation applies a nonlinear transformation and increases the expressive capability of the SegCloud model. Generally, the shallow convolutional layers tend to capture fine details, such as shape and edge, whereas the deeper convolutional layers compute higher-level and more complex semantic features from these shallow-layer features (Liang et al., 2017). The max-pooling layers are also an essential component of the encoder network. They are placed after groups of convolutional layers and provide increased translation invariance for robust cloud image segmentation. Each max-pooling layer subsamples the input feature maps with a 2×2 window size and a stride of 2. Thus, the size of the output feature maps is halved in each dimension, and the predominant features are retained. After the 10 convolutional layers and 5 max-pooling layers, high-level, small-size cloud feature maps are formed for further pixel-wise cloud segmentation.
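To make the per-layer operations concrete, the following sketch applies batch normalization, ReLU, and 2×2 max pooling with a stride of 2 to toy data. It is a simplified illustration in plain Python rather than the TensorFlow code used for SegCloud: the convolution itself is omitted, `batch_norm` normalizes a flat list of activations from a single channel, and the `gamma`, `beta`, and `eps` parameters are the usual learnable scale, shift, and stabilizer with assumed default values.

```python
import math


def batch_norm(values, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize activations to zero mean and unit variance, then scale and shift."""
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    return [gamma * (v - mean) / math.sqrt(var + eps) + beta for v in values]


def relu(values):
    """Rectified linear unit: negative activations are set to zero."""
    return [max(0.0, v) for v in values]


def max_pool_2x2(fmap):
    """2x2 max pooling with stride 2 on a single-channel map (list of rows).

    A trailing odd row or column is dropped in this sketch.
    """
    height, width = len(fmap), len(fmap[0])
    pooled = []
    for i in range(0, height - 1, 2):
        row = []
        for j in range(0, width - 1, 2):
            row.append(max(fmap[i][j], fmap[i][j + 1],
                           fmap[i + 1][j], fmap[i + 1][j + 1]))
        pooled.append(row)
    return pooled


activations = relu(batch_norm([1.0, 2.0, 3.0, 4.0]))
toy_map = [[1, 2, 5, 6],
           [3, 4, 7, 8],
           [9, 10, 13, 14],
           [11, 12, 15, 16]]
pooled_map = max_pool_2x2(toy_map)  # 4x4 map becomes 2x2
```

Each pooling stage halves both spatial dimensions, so five stages reduce a feature map by a factor of 32 per side.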

3.1.2 Decoder network

The goal of the decoder network is to restore the obtained high-level cloud feature maps to the resolution of the input images and thereby achieve end-to-end cloud image segmentation. The decoder network consists of 5 upsampling layers and 10 convolutional layers. Each upsampling layer upsamples its input feature maps and produces sparse feature maps of double the size, whereas each convolutional layer densifies these sparse feature maps to produce dense feature maps. The upsampling layers use feature information from the encoder network to perform upsampling and preserve the segmentation accuracy of whole-sky images. As shown by the black arrows in Fig. 3, the first four upsampling layers use pooling indices from the corresponding max-pooling layers. The pooling indices are the locations of the maximum value in each max-pooling window of the feature maps; this approach was proposed by Badrinarayanan et al. (2017) to ensure accurate feature restoration with less computational cost during inference. Thus, we introduce pooling indices into our first four upsampling layers to ensure the effective restoration of high-level cloud feature maps.
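Upsampling with pooling indices can be illustrated on a single-channel toy map. The sketch below is an assumption-laden illustration of the idea from Badrinarayanan et al. (2017), not the SegCloud code: the function names are hypothetical, indices are stored as (row, column) positions, and ties inside a window resolve to the first maximum.

```python
def max_pool_with_indices(fmap):
    """2x2, stride-2 max pooling that also records where each maximum came from."""
    height, width = len(fmap), len(fmap[0])
    pooled, indices = [], []
    for i in range(0, height - 1, 2):
        pooled_row, index_row = [], []
        for j in range(0, width - 1, 2):
            window = [(fmap[i + di][j + dj], (i + di, j + dj))
                      for di in (0, 1) for dj in (0, 1)]
            value, position = max(window, key=lambda item: item[0])
            pooled_row.append(value)
            index_row.append(position)
        pooled.append(pooled_row)
        indices.append(index_row)
    return pooled, indices


def unpool(pooled, indices, out_height, out_width):
    """Place each pooled value back at its recorded position; all else stays zero."""
    out = [[0] * out_width for _ in range(out_height)]
    for pooled_row, index_row in zip(pooled, indices):
        for value, (r, c) in zip(pooled_row, index_row):
            out[r][c] = value
    return out


toy_map = [[1, 2, 5, 6],
           [3, 4, 7, 8],
           [9, 10, 13, 14],
           [11, 12, 15, 16]]
pooled, indices = max_pool_with_indices(toy_map)
restored = unpool(pooled, indices, 4, 4)
```

The restored map is sparse (three of every four entries are zero), which is why the decoder follows each upsampling layer with convolutions that densify it.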

Although pooling indices have an advantage in computational time, they may lead to a slight loss of cloud boundary details. Both high-level semantic information and edge properties play important roles in segmenting whole-sky images. Thus, the last upsampling layer directly uses the whole feature maps duplicated from the first max-pooling layer to perform upsampling and improve cloud boundary recognition. The operation is divided into three steps. (1) The upsampling layer uses bilinear interpolation to upsample the feature maps of the nearest convolutional layer and obtains output feature maps that are double in size. (2) The feature maps from the first max-pooling layer of the encoder network are duplicated. (3) The feature maps obtained from steps (1) and (2) are concatenated to produce the final upsampled feature maps. In summary, high-level cloud feature maps are restored step by step to the resolution of the input images through the five upsampling layers.
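The three steps of the last upsampling layer can be sketched as follows. This is a minimal illustration under stated assumptions: the bilinear interpolation uses the half-pixel-centre convention with edge clamping (the paper does not say which convention is used), feature maps are lists of single-channel 2-D lists, and `bilinear_upsample_2x` and `concat_channels` are hypothetical helper names.

```python
def bilinear_upsample_2x(fmap):
    """Step (1): double the size of one channel with bilinear interpolation."""
    height, width = len(fmap), len(fmap[0])
    out = []
    for i in range(2 * height):
        # Map the output pixel centre back into source coordinates and clamp.
        y = min(max((i + 0.5) / 2 - 0.5, 0.0), height - 1)
        y0 = int(y)
        y1 = min(y0 + 1, height - 1)
        fy = y - y0
        row = []
        for j in range(2 * width):
            x = min(max((j + 0.5) / 2 - 0.5, 0.0), width - 1)
            x0 = int(x)
            x1 = min(x0 + 1, width - 1)
            fx = x - x0
            top = fmap[y0][x0] * (1 - fx) + fmap[y0][x1] * fx
            bottom = fmap[y1][x0] * (1 - fx) + fmap[y1][x1] * fx
            row.append(top * (1 - fy) + bottom * fy)
        out.append(row)
    return out


def concat_channels(upsampled_channels, skip_channels):
    """Step (3): stack two lists of channels that share the same spatial size."""
    for channel in upsampled_channels + skip_channels:
        if (len(channel), len(channel[0])) != (len(upsampled_channels[0]),
                                               len(upsampled_channels[0][0])):
            raise ValueError("all channels must have the same height and width")
    return upsampled_channels + skip_channels


decoder_channel = [[0.0, 4.0]]                  # 1x2 toy decoder feature map
encoder_channel = [[1.0, 1.0, 2.0, 2.0],        # step (2): duplicated encoder
                   [1.0, 1.0, 2.0, 2.0]]        # feature map at 2x4 (toy values)
upsampled = bilinear_upsample_2x(decoder_channel)
fused = concat_channels([upsampled], [encoder_channel])
```

Concatenation keeps the encoder features intact next to the interpolated ones, leaving it to the following convolutions to combine boundary detail with semantic content.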

3.1.3 Softmax classifier

The Softmax classifier is located after the decoder network to achieve final pixel-wise cloud classification (i.e., cloud image segmentation). The Softmax classifier calculates the class probability of every pixel from the feature maps through the following classification formula:

$$ \mathrm{Softmax}(x_i) = \frac{e^{x_i}}{\sum_{j} e^{x_j}} \tag{1} $$

where $x_i$ and $x_j$ are the feature values of classes $i$ and $j$, respectively. The output of the Softmax classifier is a three-channel probability image, where three is the number of categories (cloud, sky, and background areas, such as the sun and surrounding buildings). The predicted class of each pixel is the class with the maximum probability. Thus, the final segmentation results are output.
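The pixel-wise Softmax classification described above can be sketched for a single pixel. The class order in `CLASS_NAMES` and the function names are assumptions for illustration; subtracting the maximum score before exponentiation is a standard numerical-stability step that does not change the result.

```python
import math

# Hypothetical channel order; the paper names the three categories but not
# the order in which the network outputs them.
CLASS_NAMES = ("cloud", "sky", "background")


def softmax(scores):
    """Turn per-class feature values into probabilities that sum to one."""
    shift = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def classify_pixel(scores):
    """Return the most probable class name and the full probability vector."""
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    return CLASS_NAMES[best], probs


label, probs = classify_pixel([2.0, 1.0, 0.1])
```

Applying `classify_pixel` to every pixel of the decoder output yields the segmentation map.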

3.2 Training details of SegCloud model

SegCloud must first be trained on the training set of the WSISEG database before it can be applied to cloud image segmentation. Before training, local contrast normalization is performed on the training set to further accelerate the training convergence of SegCloud. SegCloud is then trained on an NVIDIA GeForce GTX1080 GPU using the TensorFlow machine learning software package. Mini-batch gradient descent is used as the optimization algorithm to find appropriate model weights. During training, 10 whole-sky images are fed to the SegCloud model per batch, and momentum with a decay of 0.9 is used (Sutskever et al., 2013). SegCloud is trained for 26 000 epochs with a learning rate of 0.006. It uses the cross-entropy loss function defined in the following equation as the objective function:

$$ \mathrm{Loss} = -\frac{1}{N}\sum_{j=1}^{N} \hat{y}_j \log y_j \tag{2} $$

where $N$ is the batch size, $\hat{y}_j$ is the ground truth image (i.e., the corresponding label from the training set), and $y_j$ is the predicted segmentation of the input whole-sky image. The calculated cross-entropy loss values are continuously optimized using a backpropagation algorithm (LeCun et al., 2014) until the loss values converge. The final trained model and the best model parameters are saved for actual whole-sky image segmentation.
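A cross-entropy loss of the kind described above can be sketched as follows. This is an illustration under assumptions, not the paper's training code: samples are treated as individual pixels with integer ground-truth class indices, the loss is averaged over those samples, and `eps` is an assumed guard against taking the logarithm of zero.

```python
import math


def cross_entropy(pred_probs, true_classes, eps=1e-12):
    """Mean cross-entropy between predicted probabilities and true classes.

    pred_probs: list of per-class probability lists (e.g. Softmax outputs).
    true_classes: list of integer class indices, one per sample.
    """
    total = 0.0
    for probs, true_class in zip(pred_probs, true_classes):
        # Only the probability assigned to the true class contributes.
        total -= math.log(max(probs[true_class], eps))
    return total / len(true_classes)


confident = cross_entropy([[0.9, 0.05, 0.05]], [0])
uncertain = cross_entropy([[0.4, 0.3, 0.3]], [0])
```

The loss falls toward zero as the probability assigned to the correct class approaches one, which is the quantity that gradient descent with backpropagation drives down during training.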

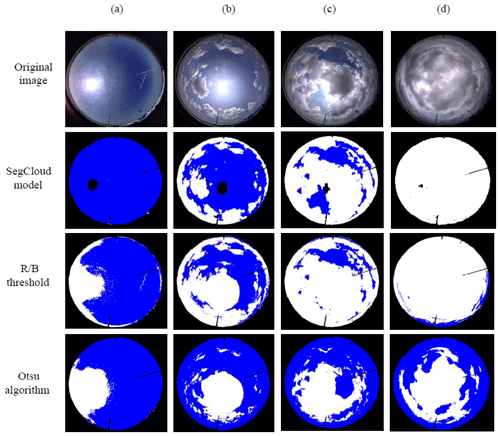

Figure 4. Examples of segmentation results with three algorithms. The original images are presented in the top row, and the segmentation results of the SegCloud model, R/B threshold approach, and Otsu algorithm are presented in the second, third, and last rows, respectively. Clouds, sky, and sun are colored white, blue, and black, respectively. Masks are applied to all result images to remove buildings around the circle for improved comparison of the sky and clouds.

Various experiments are designed and conducted to validate the performance of the proposed SegCloud model. First, extensive segmentation experiments are performed to evaluate the effectiveness of SegCloud. Second, the superiority of SegCloud is demonstrated by comparing it with traditional cloud segmentation methods. Finally, the accuracy and practicability of SegCloud are examined by applying it to cloud cover estimation.

4.1 Effectiveness of SegCloud model

The well-trained SegCloud model is evaluated using the test set of the WSISEG database to verify its effectiveness in segmenting whole-sky images. A series of whole-sky images are fed into SegCloud, and segmented images are output. Some representative segmentation results are illustrated in the second row of Fig. 4. SegCloud successfully segments the whole-sky images, as evidenced by the clouds, sky, and sun in Fig. 4, which are colored white, blue, and black, respectively.

Furthermore, the effectiveness of the proposed SegCloud model is objectively quantified by calculating segmentation accuracy. The labels of the test set are set to the ground truths, and segmentation accuracy of the SegCloud is calculated by comparing the segmentation result with the ground truth, as defined in Eq. (3). Average segmentation accuracy is also determined using Eq. (4).

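$$ \mathrm{Accuracy} = \frac{T}{M} = \frac{T_{\mathrm{cloud}} + T_{\mathrm{sky}}}{M} \tag{3} $$

$$ \mathrm{Average\ accuracy} = \frac{1}{n}\sum_{i=1}^{n} \mathrm{Accuracy}_i \tag{4} $$

where $T$ denotes the number of correctly classified pixels, which is the sum of true cloud pixels $T_{\mathrm{cloud}}$ and true clear-sky pixels $T_{\mathrm{sky}}$; $M$ denotes the total number of pixels (excluding background regions) in the corresponding cloud image; and $n$ is the number of test images. In this experiment, we have 60 test images: 10 clear-sky images, 10 overcast-sky images, and 40 partial-cloud images.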
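The accuracy definitions above (correctly classified cloud and sky pixels divided by all non-background pixels, then averaged over the test images) can be sketched as follows. The class index used for background and the decision to identify background pixels from the ground-truth label are assumptions of this example.

```python
BACKGROUND = 2  # hypothetical class index for sun and surrounding buildings


def segmentation_accuracy(pred, truth, ignore=BACKGROUND):
    """Fraction of non-background pixels whose predicted class matches the label.

    Raises ZeroDivisionError if the label contains only background pixels.
    """
    correct = 0
    total = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            if t == ignore:
                continue  # background pixels are excluded from M
            total += 1
            if p == t:
                correct += 1
    return correct / total


def average_accuracy(pairs):
    """Mean per-image accuracy over (prediction, ground truth) pairs."""
    accuracies = [segmentation_accuracy(pred, truth) for pred, truth in pairs]
    return sum(accuracies) / len(accuracies)


pred_a, truth_a = [[0, 1], [1, 2]], [[0, 1], [0, 2]]   # 2 of 3 valid pixels correct
pred_b, truth_b = [[0, 0], [1, 1]], [[0, 0], [1, 1]]   # all 4 pixels correct
mean_acc = average_accuracy([(pred_a, truth_a), (pred_b, truth_b)])
```

Averaging per image rather than pooling all pixels gives every test image equal weight, regardless of how much of it is masked as background.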

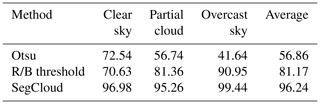

Table 1. Segmentation accuracy (%) of three methods for clear-sky, partial-cloud, and overcast-sky images.

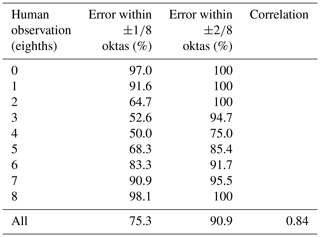

Table 2. Comparison of derived cloud cover between SegCloud and human observation. The cloud cover provided by human observation is set to ground truth, and the error is defined as the cloud cover estimated by SegCloud minus the ground truth. The table presents the percentages of the error within oktas and the error within oktas. The correlation coefficient between SegCloud and human observation is also calculated.

Table 1 reports that SegCloud achieves a high average accuracy of 96.24 %, which further objectively proves its effectiveness. Moreover, SegCloud performs well on whole-sky images with different cloud cover conditions and achieves 96.98 % accuracy on clear-sky images, 95.26 % accuracy on partial-cloud images, and 99.44 % near-perfect accuracy on overcast-sky images. These experimental results show that the SegCloud is effective and accurate for cloud segmentation and can provide a reference for future cloud segmentation research.

4.2 Comparison with other methods

To verify its superiority, SegCloud is compared with two other conventional methods using the test set of the WSISEG database.

- R/B threshold method. Given the camera parameters and atmospheric environment, the fixed ratio of red and blue channel values is set to 0.77 (Kreuter et al., 2009).

- Otsu algorithm (the adaptive threshold method). The threshold is automatically calculated according to the whole-sky images to be segmented (Otsu, 1979).
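For reference, the adaptive threshold of the Otsu algorithm can be sketched as below. This is a generic textbook implementation of Otsu's between-class variance criterion on integer gray values, not the exact code used in the comparison; the function name and the convention that values above the threshold form the brighter class are choices made for this example.

```python
def otsu_threshold(gray_values, levels=256):
    """Return the gray level t that maximizes between-class variance.

    Pixels with value <= t form one class and pixels with value > t the other.
    gray_values: flat list of integers in the range [0, levels).
    """
    hist = [0] * levels
    for value in gray_values:
        hist[value] += 1

    total = len(gray_values)
    sum_all = sum(level * count for level, count in enumerate(hist))

    best_t, best_variance = 0, -1.0
    weight_low, sum_low = 0, 0
    for t in range(levels):
        weight_low += hist[t]
        sum_low += t * hist[t]
        if weight_low == 0:
            continue  # no pixels at or below t yet
        weight_high = total - weight_low
        if weight_high == 0:
            break  # all pixels are at or below t
        mean_low = sum_low / weight_low
        mean_high = (sum_all - sum_low) / weight_high
        variance = weight_low * weight_high * (mean_low - mean_high) ** 2
        if variance > best_variance:
            best_t, best_variance = t, variance
    return best_t


toy_gray = [10, 12, 11, 200, 205, 198]
t = otsu_threshold(toy_gray)
bright = [value > t for value in toy_gray]
```

The method works well on the toy data because the two groups have clearly separated gray values; it degrades when pixels of one class span a wide range of gray values, which is the failure mode discussed for clouds.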

We use the proposed SegCloud model, R/B threshold method, and Otsu algorithm to segment the whole-sky images of the test dataset. Figure 4 shows some representative cloud segmentation results. The Otsu algorithm performs poorly in segmenting whole-sky images because it requires pixels of the same class to have similar gray values, which is not the case for clouds. The R/B threshold method gives more accurate segmentation results than the Otsu algorithm. However, its accuracy is poor, especially for circumsolar areas (Fig. 4a, b, and c), because circumsolar areas often have texture and intensity similar to those of clouds due to the forward scattering of visible light by aerosols/haze and the dynamic range limitation of the detectors of the sky imager (Long and Charles, 2010). In short, traditional methods utilize only low-level vision cues to segment images and tend to misclassify pixels when the boundary between clouds and sky is unclear. By contrast, SegCloud segments the images more accurately than the two conventional methods and shows a clear advantage in the area near the sun. SegCloud learns from the annotated database and mines deep cloud features. Thus, it has improved capability to identify circumsolar areas, although these pixels have textures and intensities that are similar to those of clouds.

The average segmentation accuracy of the three algorithms is also calculated on the test dataset to verify the performance of the SegCloud objectively. Table 1 shows that SegCloud obtains higher average accuracy than the two other methods do and achieves better segmentation performance for clear-sky, partial-cloud, or overcast-sky images. Although SegCloud shows advantages in whole-sky image segmentation, some misidentification remains due to decreased recognition for extremely thin clouds, which should be investigated in the future.

4.3 Application of SegCloud in cloud cover estimation

We apply the SegCloud model to cloud cover estimation and compare its derived cloud cover with human observation from 08:00 to 16:00 LT on 1–31 July 2018 to verify its accuracy and practicability. First, SegCloud derives cloud cover information by segmenting the corresponding whole-sky images acquired by ASC100. For comparison, cloud covers from simultaneous human observation are provided by the Anhui Air Traffic Management Bureau, Civil Aviation Administration of China, where the ASC is located. Well-trained human observers record cloud cover every hour during day and night by dividing the sky into oktas. Thus, the cloud cover estimated by SegCloud is multiplied by 8 to be consistent with human observation.
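The conversion from a segmentation map to cloud cover in oktas can be sketched as follows. This is an assumption-based illustration: the class indices are hypothetical, and the cloud fraction is computed over cloud and sky pixels only, leaving out sun and background pixels, which the paper does not spell out. The result is returned unrounded.

```python
def cloud_cover_oktas(class_map, cloud=0, sky=1):
    """Cloud fraction of the visible sky, scaled to the 0-8 okta range.

    class_map: 2-D list of per-pixel class indices (hypothetical encoding).
    Raises ZeroDivisionError if the map has no cloud or sky pixels.
    """
    n_cloud = sum(row.count(cloud) for row in class_map)
    n_sky = sum(row.count(sky) for row in class_map)
    fraction = n_cloud / (n_cloud + n_sky)
    return 8.0 * fraction


# Toy map: 1 cloud pixel, 3 sky pixels, 4 background pixels (class 2).
toy_map = [[0, 1, 1, 1],
           [2, 2, 2, 2]]
oktas = cloud_cover_oktas(toy_map)
```

In the toy map a quarter of the visible sky is cloud, which corresponds to 2 oktas.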

A total of 279 pairs of cloud cover data are available, and the comparison results are shown in Table 2. The correlation coefficient between SegCloud and human observation is high (0.84). Statistically, the error (i.e., cloud cover difference between SegCloud and human observation) within oktas is 75.3 %, and the error within oktas is 90.9 % for all cases. These results clearly show that the proposed SegCloud model provides accurate cloud image segmentation and reliable cloud cover estimation. Moreover, the cloud cover derived by SegCloud and human observation demonstrates consistency in cases of clear sky ( oktas), indicating that the SegCloud has better cloud segmentation performance for circumsolar areas compared with the other methods. These experimental results prove the accuracy and practicability of the SegCloud and show its practical significance for future automatic cloud cover observations.
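The two comparison statistics used above, a correlation coefficient and the share of cases whose error falls within a given okta tolerance, can be computed as in the sketch below. The Pearson form of the correlation coefficient is an assumption (the paper does not name the variant), the tolerance is left as a parameter, and the data are invented toy values, not the 279 observation pairs.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient of two equally long sequences.

    Raises ZeroDivisionError if either sequence is constant.
    """
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    norm_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    norm_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (norm_x * norm_y)


def fraction_within(estimated, observed, tolerance):
    """Share of pairs whose absolute difference is at most `tolerance` oktas."""
    hits = sum(abs(e - o) <= tolerance for e, o in zip(estimated, observed))
    return hits / len(estimated)


# Invented toy data for illustration only.
model_oktas = [0, 4, 8, 3]
human_oktas = [0, 5, 6, 3]
r = pearson(model_oktas, human_oktas)
share = fraction_within(model_oktas, human_oktas, tolerance=1)
```

In the toy data three of the four pairs differ by at most one okta, so `share` is 0.75.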

In this paper, a deep CNN model named SegCloud is proposed and applied for accurate cloud image segmentation. Extensive segmentation results demonstrate that the SegCloud model is effective and accurate. Given its strong capability in cloud feature representation and advanced cloud feature extraction, SegCloud significantly outperforms the traditional methods. In comparison with human observation, SegCloud provides reliable cloud cover information by calculating the percentage of cloud pixels among all pixels, which further supports its accuracy and practicability. Several challenges remain for future work. SegCloud must be further optimized to improve recognition accuracy for extremely thin clouds. Beyond cloud segmentation, other important cloud parameters, such as cloud base altitude, cloud phase, and cloud movement, are also crucial for meteorological applications and should be investigated in the future.

Our database examples are available at https://github.com/CV-Application/WSISEG-Database (last access: 24 January 2019).

WX and YW designed the cloud image segmentation method. MY, SC, and BW prepared and provided the cloud cover data of human observation. DL, YL, YX, ZW, and CZ provided useful suggestions for the experiment and support for the writing. WX prepared the article with contributions from all the coauthors.

The authors declare that they have no conflict of interest.

We would like to thank Jun Wang and three anonymous reviewers for their helpful comments.

This research has been supported by the Science and Technology Service Network Initiative of the Chinese Academy of Sciences (grant no. KFJ-STS-QYZD-022); the Youth Innovation Promotion Association, CAS (grant no. 2017482); and the Research on Key Technology of Short-Term Forecasting of Photovoltaic Power Generation Based on All-sky Cloud Parameters (grant no. 201904b11020031).

This paper was edited by Jun Wang and reviewed by three anonymous referees.

Badrinarayanan, V., Kendall, A., and Cipolla, R.: Segnet: a deep convolutional encoder-decoder architecture for scene segmentation, IEEE T. Pattern Anal., 39, 2481–2495, https://doi.org/10.1109/TPAMI.2016.2644615, 2017.

Bao, S., Letu, H., Zhao, C., Tana, G., Shang, H., Wang, T., Lige, B., Bao, Y., Purevjav, G., He, J., and Zhao, J.: Spatiotemporal Distributions of Cloud Parameters and the Temperature Response Over the Mongolian Plateau During 2006–2015 Based on MODIS Data, IEEE J. Sel. Top. Appl., 12, 549–558, https://doi.org/10.1109/JSTARS.2018.2857827, 2019.

Carslaw, K.: Atmospheric physics: cosmic rays, clouds and climate, Nature, 460, 332–333, 2009.

Dev, S., Lee, Y. H., and Winkler, S.: Color-based segmentation of sky/cloud images from ground-based cameras, IEEE J. Sel. Top. Appl., 10, 231–242, 2017.

Feister, U. and Shields, J.: Cloud and radiance measurements with the vis/nir daylight whole sky imager at lindenberg (germany), Meteorol. Z., 14, 627–639, 2005.

Garrett, T. J. and Zhao, C.: Ground-based remote sensing of thin clouds in the Arctic, Atmos. Meas. Tech., 6, 1227–1243, https://doi.org/10.5194/amt-6-1227-2013, 2013.

Genkova, I., Long, C., Besnard, T., and Gillotay, D.: Assessing cloud spatial and vertical distribution with cloud infrared radiometer cir-7, P. Soc. Photo.-Opt. Ins., 5571, 1–10, 2004.

Heinle, A., Macke, A., and Srivastav, A.: Automatic cloud classification of whole sky images, Atmos. Meas. Tech., 3, 557–567, https://doi.org/10.5194/amt-3-557-2010, 2010.

Hinton, G. E.: Rectified Linear Units Improve Restricted Boltzmann Machines Vinod Nair, International Conference on Machine Learning, 21–24 June 2010, Haifa, Israel, Omnipress, 2010.

Huang, D., Zhao, C., Dunn, M., Dong, X., Mace, G. G., Jensen, M. P., Xie, S., and Liu, Y.: An intercomparison of radar-based liquid cloud microphysics retrievals and implications for model evaluation studies, Atmos. Meas. Tech., 5, 1409–1424, https://doi.org/10.5194/amt-5-1409-2012, 2012.

Ioffe, S. and Szegedy, C.: Batch normalization: accelerating deep network training by reducing internal covariate shift, International Conference on Machine Learning, JMLR.org, July 2015, Lille, France, 2015.

Kreuter, A., Zangerl, M., Schwarzmann, M., and Blumthaler, M.: All-sky imaging: a simple, versatile system for atmospheric research, Appl. Optics, 48, 1091–1097, 2009.

LeCun, Y. and Bengio, Y.: Convolutional networks for images, speech, and time series, the handbook of brain theory and neural networks, MIT Press, 1998.

LeCun, Y., Boser, B., Denker, J. S., Henderson, D., Howard, R. E., Hubbard, W., and Jackel L. D.: Backpropagation applied to handwritten zip code recognition, Neural Comput., 1, 541–551, 2014.

LeCun, Y., Bengio, Y., and Hinton, G.: Deep learning, Nature, 521, 436–444, https://doi.org/10.1038/nature14539, 2015.

Li, J., Lv, Q., Jian, B., Zhang, M., Zhao, C., Fu, Q., Kawamoto, K., and Zhang, H.: The impact of atmospheric stability and wind shear on vertical cloud overlap over the Tibetan Plateau, Atmos. Chem. Phys., 18, 7329–7343, https://doi.org/10.5194/acp-18-7329-2018, 2018.

Li, Q., Lu, W., and Yang, J.: A hybrid thresholding algorithm for cloud detection on ground-based color images, J. Atmos. Ocean. Tech., 28, 1286–1296, 2011.

Liang, Y., Cao, Z., and Yang, X.: Deepcloud: ground-based cloud image categorization using deep convolutional features, IEEE T. Geosci. Remote, 55, 5729–5740, 2017.

Liu, S., Zhang, L., Zhang, Z., Wang, C., and Xiao, B.: Automatic cloud detection for all-sky images using superpixel segmentation, IEEE Geosci. Remote S., 12, 354–358, 2014.

Liu, S., Zhang, Z., Xiao, B., and Cao, X.: Ground-based cloud detection using automatic graph cut, IEEE Geosci. Remote S., 12, 1342–1346, 2015.

Long, C., Slater, D., and Tooman, T.: Total sky imager model 880 status and testing results, Office of Scientific and Technical Information Technical Reports, 2001.

Long, C. N. and Charles, N.: Correcting for circumsolar and near-horizon errors in sky cover retrievals from sky images, Open Atmos. Sci. J., 4, 45–52, 2010.

Long, C. N., Sabburg, J. M., Calbó, J., and Pagès, D.: Retrieving cloud characteristics from ground-based daytime color all-sky images, J. Atmos. Ocean. Tech., 23, 633–652, 2006.

Ma, Z., Liu, Q., Zhao, C., Shen, X., Wang, Y., Jiang, J. H., Li, Z., and Yung, Y.: Application and evaluation of an explicit prognostic cloud-cover scheme in GRAPES global forecast system, J. Adv. Model. Earth Sy., 10, 652–667, https://doi.org/10.1002/2017MS001234, 2018.

Otsu, N.: A threshold selection method from gray-level histograms, IEEE T. Syst. Man Cyb., 9, 62–66, 1979.

Papin, C., Bouthemy, P., and Rochard, G.: Unsupervised segmentation of low clouds from infrared meteosat images based on a contextual spatio-temporal labeling approach, IEEE T. Geosci. Remote, 40, 104–114, 2002.

Rossow, W. B. and Schiffer, R. A.: ISCCP Cloud Data Products, B. Am. Meteorol. Soc., 72, 2–20, 1991.

Shi, C., Wang, C., Yu, W., and Xiao, B.: Deep convolutional activations-based features for ground-based cloud classification, IEEE Geosci. Remote S., 14, 816–820, 2017.

Shi, M., Xie, F., Zi, Y., and Yin, J.: Cloud detection of remote sensing images by deep learning, IEEE International Geoscience and Remote Sensing Symposium, 10–15 July 2016, Beijing, China, 2016.

Simonyan, K. and Zisserman, A.: Very deep convolutional networks for large-scale image recognition, Proc. Int. Conf. Learn. Representat., 1–14, 2015.

Souza-Echer, M. P., Pereira, E. B., Bins, L. S., and Andrade, M. A. R.: A simple method for the assessment of the cloud cover state in high-latitude regions by a ground-based digital camera, J. Atmos. Ocean. Tech., 23, 437–447, https://doi.org/10.1175/jtech1833.1, 2004.

Stephens, G. L.: Cloud feedbacks in the climate system: a critical review, J. Climate, 18, 237–273, 2005.

Sutskever, I., Martens, J., Dahl, G., and Hinton, G.: On the importance of initialization and momentum in deep learning, International Conference on Machine Learning, JMLR.org, June 2013, Atlanta, GA, USA, 2013.

Taigman, Y., Yang, M., Ranzato, M., and Wolf, L.: DeepFace: Closing the Gap to Human-Level Performance in Face Verification, IEEE Conference on Computer Vision and Pattern Recognition, 23–28 June 2014, Columbus, OH, USA, 2014.

Tapakis, R. and Charalambides, A. G.: Equipment and methodologies for cloud detection and classification: a review, Sol. Energy, 95, 392–430, 2013.

Tao, F., Xie, W., Wang, Y., and Xia, Y.: Development of an all-sky imaging system for cloud cover assessment, Appl. Optics, 58, 5516–5524, 2019.

Wang, D., Khosla, A., Gargeya, R., Irshad, H., and Beck, A.: Deep Learning for Identifying Metastatic Breast Cancer, 2016.

Wang, Y. and Zhao, C.: Can MODIS cloud fraction fully represent the diurnal and seasonal variations at DOE ARM SGP and Manus sites?, J. Geophys. Res.-Atmos., 122, 329–343, 2017.

Xiao, H., Zhang, F., He, Q., Liu, P., Yan, F., Miao, L., and Yang, Z.: Classification of ice crystal habits observed from airborne Cloud Particle Imager by deep transfer learning, Earth Space Sci., 6, 1877–1886, https://doi.org/10.1029/2019EA000636, 2019.

Yang, H., Kurtz, B., Nguyen, D., Urquhart, B., Chow, C. W., Ghonima, M., and Kleissl, J.: Solar irradiance forecasting using a ground-based sky imager developed at UC San Diego, Sol. Energy, 103, 502–524, 2014.

Yuan, F., Lee, Y. H., and Meng, Y. S.: Comparison of cloud models for propagation studies in Ka-band satellite applications, International Symposium on Antennas and Propagation, 2–5 December 2014, Kaohsiung, Taiwan, https://doi.org/10.1109/ISANP.2014.7026691, 2015.

Yuan, K., Meng, G., Cheng, D., Bai, J., and Pan, X. C.: Efficient cloud detection in remote sensing images using edge-aware segmentation network and easy-to-hard training strategy, IEEE International Conference on Image Processing, 17–20 September 2017, Beijing, China, https://doi.org/10.1109/ICIP.2017.8296243, 2018.

Zhang, J. L., Liu, P., Zhang, F., and Song, Q. Q.: CloudNet: Ground-based cloud classification with deep convolutional neural network, Geophys. Res. Lett., 45, 8665–8672, https://doi.org/10.1029/2018GL077787, 2018.

Zhao, C., Wang, Y., Wang, Q., Li, Z., Wang, Z., and Liu, D.: A new cloud and aerosol layer detection method based on micropulse lidar measurements, J. Geophys. Res.-Atmos., 119, 6788–6802, https://doi.org/10.1002/2014JD021760, 2014.

Zhao, C., Chen, Y., Li, J., Letu, H., Su, Y., Chen, T., and Wu, X.: Fifteen-year statistical analysis of cloud characteristics over China using Terra and Aqua Moderate Resolution Imaging Spectroradiometer observations, Int. J. Climatol., 39, 2612–2629, https://doi.org/10.1002/joc.5975, 2019.