This work is distributed under the Creative Commons Attribution 4.0 License.

Noise filtering options for conically scanning Doppler lidar measurements with low pulse accumulation

Eileen Päschke

Carola Detring

Doppler lidar (DL) applications with a focus on turbulence measurements sometimes require measurement settings with a relatively small number of accumulated pulses per ray in order to achieve high sampling rates. Low pulse accumulation comes at the cost of the quality of DL radial velocity estimates and increases the probability of outliers, also referred to as “bad” estimates or noise. Careful filtering is therefore the first important step in a data processing chain that begins with radial velocity measurements as DL output variables and ends with turbulence variables as the target variable after applying an appropriate retrieval method. It is shown that commonly applied filtering techniques have weaknesses in distinguishing between “good” and “bad” estimates with the sensitivity needed for a turbulence retrieval. For that reason, new ways of noise filtering have been explored, taking into account that the DL background noise can differ from generally assumed white noise. It is shown that the introduction of a new coordinate frame for a graphical representation of DL radial velocities from conical scans offers a different perspective on the data when compared to the well-known velocity–azimuth display (VAD) and thus opens up new possibilities for data analysis and filtering. This new way of displaying DL radial velocities builds on the use of a phase-space perspective. Following the mathematical formalism used to explain a harmonic oscillator, the VAD’s sinusoidal representation of the DL radial velocities is transformed into a circular arrangement. Using this kind of representation of DL measurements, bad estimates can be identified in two different ways: either in a direct way by singular point detection in subsets of radial velocity data grouped in circular rings or indirectly by localizing circular rings with mostly good radial velocity estimates by means of the autocorrelation function. 
The improved performance of the new filter techniques compared to conventional approaches is demonstrated through both a direct comparison of unfiltered with filtered datasets and a comparison of retrieved turbulence variables with independent measurements.

Doppler lidars (DLs) are widely used to measure atmospheric wind and turbulence variables in different application areas, such as wind energy, aviation, and meteorological research (Liu et al., 2019; Sathe and Mann, 2013; Thobois et al., 2019; Krishnamurthy et al., 2013; Filioglou et al., 2022; Drew et al., 2013; O'Connor et al., 2010; Bodini et al., 2018; Sanchez Gomez et al., 2021; Beu and Landulfo, 2022). This wide range of applications has become possible because of the flexible configuration options of several modern systems that use all-sky scanner technology. This technical flexibility allows users to define their own scan patterns with respect to azimuth and elevation and to choose specific sampling frequencies, so that the data meet the requirements of particular application-oriented retrieval processes.

At the Meteorological Observatory Lindenberg – Richard Aßmann Observatory (MOL-RAO), the interest in long-term operational DL profile observations of both wind and turbulence variables is motivated by several applications. The data can help to analyze and interpret the kinematic properties of the vertical structure of atmospheric wind and turbulence under different weather conditions and states of the atmospheric boundary layer (ABL) during the course of the day (e.g., stable ABL, convective mixed ABL, transitions between different ABL states). In addition, the profile information can be used for the regular validation of numerical atmospheric models. This includes not only modeled wind profiles but also the performance of turbulence parameterizations (e.g., TKE closure) used to describe subgrid-scale processes. As model resolutions increase and the applicability and relative importance of parameterization schemes change accordingly, long-term DL-based turbulence measurements are also valuable for developing adapted parameterization approaches that meet these new requirements.

A variety of scanning techniques and retrieval methods for vertical profiles of wind and turbulence variables based on DL measurements have been developed (Smalikho, 2003; Päschke et al., 2015; Sathe et al., 2015; Newsom et al., 2017; Bonin et al., 2017; Steinheuer et al., 2022). Several of these methods rely on specific scanning configurations and are tailored towards a specific data product. Deriving different data products therefore requires either more than one DL system or cyclic configuration changes in a single DL. With respect to this limitation, the relatively new scanning and retrieval method introduced by Smalikho and Banakh (2017) stands out from other methods. Their approach is based on a carefully derived set of model equations describing functional relationships between radial velocity observations measured along a conical scan with high azimuthal and temporal resolution (Δθ∼1°, Δt∼0.2 s) and a set of meaningful wind turbulence variables such as turbulence kinetic energy (TKE), eddy dissipation rate (EDR), momentum fluxes, and the integral scale of turbulence. The essential benefit of this approach is therefore that it provides an internally consistent set of simultaneous wind and turbulence profile observations from just one scan strategy. A further outstanding feature is that the method provides correction terms that account for the typical underestimation of the TKE caused by averaging over the pulse volume of the DL. This issue has frequently been described as the most challenging task in DL-based turbulence measurements (Sathe and Mann, 2013; Liu et al., 2019).

Because of the strengths of the Smalikho and Banakh (2017) approach, the method has been implemented and tested for routine application at MOL-RAO. The first quasi-routine test measurements with a StreamLine DL from the manufacturer HALO Photonics (now HALO Photonics by Lumibird) revealed three things: (1) the radial velocity measurements show an increased level of noise, noticeable as an increased number of outliers (“bad” estimates), even at rather low heights in the ABL; (2) the reliability of the retrieved wind and, above all, turbulence variables strongly depends on the degree of noise contamination, i.e., the number and distribution of bad radial velocity estimates in the input data; and (3) if only the signal-to-noise ratio (SNR) thresholding technique is used to remove noise from the data, the final turbulence product availability is relatively low. The first finding can be attributed to short accumulation times, which are an inevitable consequence of the technical realization of a scanning strategy with high spatiotemporal resolution. The accumulation time determines how many spectra of backscattered light are available to estimate the frequency shift fd (Doppler frequency) and therewith the radial velocity, defined through $V_r = \lambda f_d / 2$ with λ denoting the laser wavelength. The longer a signal is sampled, the more accurate this estimate will be. For that reason it is common practice to accumulate the spectra of backscattered light from multiple pulses, with Na denoting the number of accumulated pulses (Frehlich, 1995; Rye and Hardesty, 1993; Banakh and Werner, 2005; Li et al., 2012). For the retrieval of wind profiles as proposed in Päschke et al. (2015), for instance, DL measurements were performed with a comparably high number of Na = 75 000 pulses. In this respect the method of Smalikho and Banakh (2017) requires a sensible compromise.
With a StreamLine DL, technical constraints mean that a conical scan with the required high azimuthal and temporal resolution can only be achieved with a rather low number of accumulated pulses per measurement ray, i.e., Na∼2000. As a consequence, bad estimates become more likely (Frehlich, 1995). Such outliers contain no wind information (Stephan et al., 2018) and, if not excluded from the measured dataset, may cause large errors in the retrieved meteorological variables (Dabas, 1999). This explains the second finding mentioned above and indirectly confirms the recommendation given in Banakh et al. (2021) that the method for determining wind turbulence parameters presented in Smalikho and Banakh (2017) is only applicable if the probability Pb of bad estimates of the radial velocity is close to zero. A closer examination of the third finding revealed that, with a proper choice of the threshold value, the SNR thresholding technique is indeed very effective in removing noisy data, but it also risks discarding many reliable measurements. This in turn reduces the overall product availability and would not justify a routine application of the retrieval method.
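The Doppler relation between frequency shift and radial velocity can be illustrated with a minimal Python sketch. It assumes the standard coherent-lidar form $V_r = \lambda f_d / 2$ without fixing a sign convention, and an illustrative wavelength of 1.5 µm chosen for this example rather than taken from Table 1; the function names are hypothetical.

```python
# Minimal sketch: conversion between Doppler shift f_d and radial velocity V_r,
# assuming V_r = lambda * f_d / 2 (sign convention not fixed here).
WAVELENGTH_M = 1.5e-6  # assumed lidar wavelength in metres (illustrative value)


def radial_velocity(doppler_shift_hz: float, wavelength_m: float = WAVELENGTH_M) -> float:
    """Return the radial velocity in m/s for a Doppler shift in Hz."""
    return wavelength_m * doppler_shift_hz / 2.0


def doppler_shift(radial_velocity_ms: float, wavelength_m: float = WAVELENGTH_M) -> float:
    """Inverse relation: Doppler shift in Hz for a radial velocity in m/s."""
    return 2.0 * radial_velocity_ms / wavelength_m


# Edge of the +-19 m/s velocity search band mentioned later in the text
print(f"{doppler_shift(19.0) / 1e6:.2f} MHz")  # prints 25.33 MHz
```

Under the assumed wavelength, the ±19 m s−1 search band therefore corresponds to Doppler shifts of roughly ±25 MHz; a different wavelength scales this proportionally.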

To overcome the issues described above, new filter methods were developed in the course of implementing the retrieval method by Smalikho and Banakh (2017) for routine applications at MOL-RAO. In particular, a filter method was sought that reliably removes all noise contributions while avoiding the unnecessary rejection of reliable data. A detailed presentation of these methods is the main objective of this work. In addition, their advantages over commonly used filtering techniques for routine turbulence measurement applications are presented. The article is organized as follows: Sect. 2 gives technical information on the measuring system used, its configuration, and characteristic features of the measured data due to short accumulation times. To motivate the need for improved filtering techniques, the pros and cons of common filter methods for detecting bad estimates are discussed in Sect. 3. Section 4 presents a new type of visualization for analyzing DL measurements from conical scans and, building on it, develops and discusses two new filter approaches. Section 5 gives an overview of how the new filter methods affect the quality of turbulence variables retrieved with the method by Smalikho and Banakh (2017).

The DL measurements on which this work is based were taken at the boundary-layer field site Falkenberg (in German: Grenzschichtmessfeld, GM, Falkenberg), an open field in a flat landscape about 5 km south of the MOL-RAO observatory site, with prevailing wind directions from WSW. The flat terrain meets the non-complex-terrain requirement for applying the turbulence measurement approach by Smalikho and Banakh (2017).

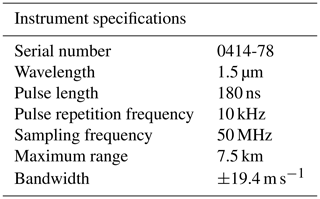

2.1 Technical system specifications and configuration

At GM Falkenberg a StreamLine DL from the manufacturer HALO Photonics with the specifications given in Table 1 was operated in a conical scan mode to apply the turbulence retrieval approach by Smalikho and Banakh (2017). This configuration is defined by three key parameters, namely the elevation angle (ϕ=35.3°), the azimuthal resolution (Δθ∼1°), and the duration of one single scan (Tscan=72 s). To realize this scanning strategy the DL was configured to be in continuous scan motion (CSM) while sampling data. A custom scan file (see Appendix A) was defined for the scanner configuration, including the angular rotation rate ωs, the start and end positions of the scanner, and the elevation angle ϕ. In analogy to the work of Smalikho and Banakh (2017) we set ωs=5° s−1 to approximately satisfy Δθ∼1°. Note that the latter implies measurements on an irregular azimuth grid, which for later analysis requires transferring the data to an equidistant grid with Δθ=1°. The specific value of ϕ goes back to earlier theoretical work by Kropfli (1986) and Eberhard et al. (1989), who focused on Doppler-radar-based turbulence measurements. In addition, Teschke and Lehmann (2017) have shown that for DL measurements this value is also an optimum beam elevation angle for a mean wind retrieval, yielding a minimum retrieval error. Given Δθ and ωs and the pulse repetition frequency fp=10 kHz (see Table 1), the number of pulses per ray had to be set to Na=2000 using the relation $N_a = f_p\,\Delta\theta / \omega_s$ (Banakh and Smalikho, 2013). This differs slightly from the value suggested in Smalikho and Banakh (2017), i.e., Na=3000, which results from the higher pulse repetition frequency of their DL system, i.e., fp=15 kHz. Note that for StreamLine DL systems the system-specific parameter fp cannot be changed by the user.
The low value of Na is unfavorable if high measurement quality is needed. For the best possible measurement quality in the lower ABL it is therefore important to use the focus setting to improve the signal intensities within a selected height range. For the DL used in our studies (DL78 hereafter) the focus was set to 500 m. With StreamLine DL systems, the range resolution ΔR along the line of sight (LOS) can also be adjusted. For compatibility with the pulse length of τp=180 ns, the range resolution was set according to $\Delta R = c\,\tau_p / 2$, where c denotes the speed of light.
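The configuration arithmetic above can be checked with a short Python sketch. It assumes the pulse-count relation in the form $N_a = f_p\,\Delta\theta/\omega_s$ (the pulses fired while the scanner sweeps one azimuth step), which reproduces the values quoted in the text, together with $T_\mathrm{scan} = 360°/\omega_s$ and $\Delta R = c\,\tau_p/2$. The function names are hypothetical, and the sketch evaluates only the pulse-length relation, not the range gate setting actually stored on the instrument.

```python
# Minimal sketch of the scan-configuration arithmetic (hypothetical helpers).
SPEED_OF_LIGHT = 299_792_458.0  # m/s


def pulses_per_ray(prf_hz: float, dtheta_deg: float, omega_deg_s: float) -> float:
    """Accumulated pulses per ray for a continuously moving scanner.

    dtheta_deg / omega_deg_s is the time needed to sweep one azimuth step.
    """
    return prf_hz * dtheta_deg / omega_deg_s


def scan_duration(omega_deg_s: float) -> float:
    """Duration of one full 360 deg conical scan in seconds."""
    return 360.0 / omega_deg_s


def pulse_range_resolution(tau_p_s: float) -> float:
    """Range extent c * tau_p / 2 associated with a pulse of length tau_p."""
    return SPEED_OF_LIGHT * tau_p_s / 2.0


print(pulses_per_ray(10e3, 1.0, 5.0))            # DL78, f_p = 10 kHz: 2000.0
print(pulses_per_ray(15e3, 1.0, 5.0))            # f_p = 15 kHz: 3000.0
print(scan_duration(5.0))                        # 72.0
print(round(pulse_range_resolution(180e-9), 1))  # 27.0 (metres)
```

The numbers reproduce Na = 2000 for DL78, Na = 3000 for the 15 kHz system of Smalikho and Banakh (2017), and Tscan = 72 s for ωs = 5° s−1.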

Note that with its StreamLine XR DL systems, HALO Photonics by Lumibird offers a further development of the StreamLine series. XR systems operate with a longer pulse in order to increase the measurement range, which, however, also depends on the presence of scattering particles in the atmosphere. The longer pulse reduces the spatial resolution of the measurements along the LOS, which is not an option for measurements in the ABL if the focus is on detecting and investigating small-scale structures.
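Sect. 2.1 notes that continuous scan motion yields radial velocities on an irregular azimuth grid that must be transferred to an equidistant grid with Δθ = 1°. A minimal Python sketch of such a transfer is given below. It assumes simple periodic linear interpolation with `numpy.interp`; the processing chain used for DL78 may use a different scheme (e.g., nearest neighbour or binning), and `regrid_azimuth` is a hypothetical name.

```python
import numpy as np


def regrid_azimuth(azimuth_deg, vr, step_deg=1.0):
    """Interpolate one conical scan onto an equidistant azimuth grid.

    Assumption: periodic linear interpolation; azimuths may be unsorted
    and are wrapped to [0, 360). Returns (grid_deg, vr_on_grid).
    """
    azimuth_deg = np.mod(np.asarray(azimuth_deg, dtype=float), 360.0)
    vr = np.asarray(vr, dtype=float)
    grid = np.arange(0.0, 360.0, step_deg)
    # period=360 lets np.interp wrap between 359 deg and 0 deg
    return grid, np.interp(grid, azimuth_deg, vr, period=360.0)


# Irregular azimuths from continuous scan motion (hypothetical step sizes 0.95-1.05 deg)
rng = np.random.default_rng(0)
az = np.cumsum(rng.uniform(0.95, 1.05, 360))
vr = 2.0 + 5.0 * np.cos(np.radians(az - 240.0))  # hypothetical VAD signal in m/s
grid, vr_grid = regrid_azimuth(az, vr)
print(grid[:3], np.round(vr_grid[:3], 3))
```

Linear interpolation is the simplest choice; for a smooth VAD signal sampled at about 1° it introduces errors far below typical radial velocity uncertainties.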

2.2 Typical measurement examples and their noise characteristics

For the measurements carried out in this work, the relevant DL output variables are the radial velocity estimates Vr along each single LOS of the conical scan and the associated SNR values. Vr is estimated by an onboard signal processor from the Doppler shift fd of the backscattered signal. A number of methods are available to determine fd (Frehlich, 1995), but DL manufacturers usually do not disclose the details of the implemented algorithm to customers. The performance of the estimation algorithms, and thus the quality of Vr, may vary. The performance of an estimation algorithm is generally assessed on the basis of the probability density function (PDF) of the velocity estimates. According to Frehlich (1995), the PDF of a well-performing velocity estimator is characterized by a localized distribution of “good” estimates centered around the true mean velocity and a fraction of uniformly distributed bad estimates. This leads to the following distinction, which is frequently made for DL radial wind measurements:

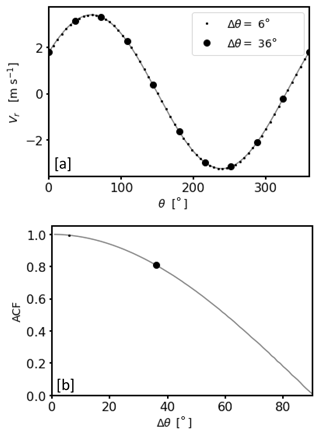

with Ve denoting a random instrumental error (Stephan et al., 2018). In the literature, bad estimates are mostly described as random outliers or noise uniformly distributed over the resolved velocity space (Frehlich, 1995; Dabas, 1999). It can be shown that the occurrence of noise in a series of radial velocity measurements from a conical scan can be detected by means of the autocorrelation function (ACF) evaluated at lag 1 (Appendix B). In particular, for a conical scan with a high azimuthal resolution of Δθ∼1° as used in this work, ACF = 1 indicates noise-free measurements, while ACF < 1 indicates the occurrence of noise.
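The two-component PDF model and the lag-1 ACF criterion can be illustrated with a synthetic example. The Python sketch below generates 25 consecutive conical scans at 1° steps: good estimates follow a sinusoidal VAD signal plus a Gaussian instrumental error, and a fraction `p_bad` of samples is replaced by values drawn uniformly from the ±19 m s−1 search band. The VAD parameters, the 0.3 m s−1 error, and the use of the standard sample autocorrelation estimator are assumptions for illustration; the exact ACF formulation in Appendix B may differ.

```python
import numpy as np


def lag1_acf(x):
    """Sample autocorrelation at lag 1 (standard estimator, assumed here)."""
    x = np.asarray(x, dtype=float)
    d = x - x.mean()
    return float(np.sum(d[:-1] * d[1:]) / np.sum(d * d))


def simulate_scans(n_scans=25, p_bad=0.0, v_band=19.0, seed=0):
    """Hypothetical conical-scan series at 1 deg azimuth steps.

    Good estimates: sinusoidal VAD signal plus Gaussian instrumental error V_e.
    Bad estimates: a fraction p_bad of samples replaced by values drawn
    uniformly from [-v_band, v_band] (white-noise assumption).
    """
    rng = np.random.default_rng(seed)
    azimuth = np.radians(np.tile(np.arange(360.0), n_scans))
    vr = 1.0 + 6.0 * np.cos(azimuth - np.radians(250.0))  # hypothetical wind signal
    vr += rng.normal(0.0, 0.3, vr.size)                    # instrumental error V_e
    bad = rng.random(vr.size) < p_bad
    vr[bad] = rng.uniform(-v_band, v_band, bad.sum())
    return vr


for p in (0.0, 0.1, 0.3):
    print(p, round(lag1_acf(simulate_scans(p_bad=p)), 2))
```

Because of the small Gaussian error, the lag-1 ACF of the outlier-free series is slightly below 1 rather than exactly 1, while even a modest fraction of uniformly distributed bad estimates lowers it markedly.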

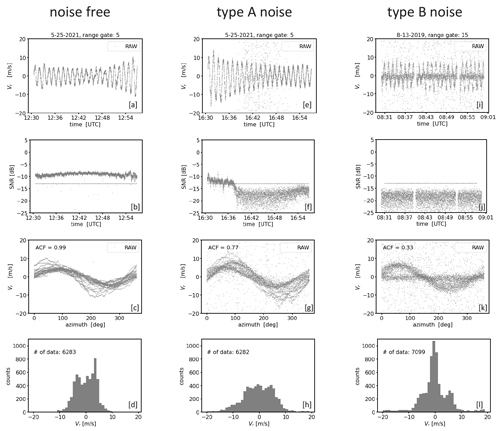

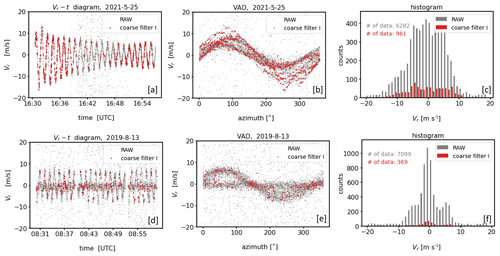

Figure 1. Examples of measurements from one and the same conically scanning Doppler lidar. Each column represents measurements during a 30 min interval at different times and range gates (i.e., measurement heights along the line of sight) characterized by different kinds of noise (left: noise-free, middle: type A noise, right: type B noise). Each row depicts the measurements from a different perspective. The first row (a, e, i) shows time series of the radial velocities (Vr). The second row (b, f, j) shows the corresponding signal intensities (SNR) of the measurements; the horizontal dotted line indicates an SNR threshold level calculated as proposed in Abdelazim et al. (2016) for Na=2000 (see Sect. 3.1). The third row (c, g, k) shows the DL measurements from a VAD perspective, i.e., the radial velocity as a function of the azimuth angle; the ACF value indicates the degree of noise contamination in the measured time series (see Appendix B). The fourth row (d, h, l) shows histograms of Vr.

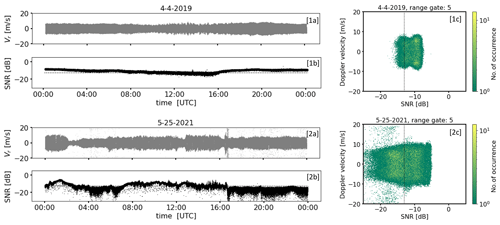

Typical examples of DL78 measurements that differ in their noise characteristics are shown in Fig. 1. Each example covers a different 30 min period and range gate height, and each column provides different analysis diagrams for one example. Noise-free measurements, indicated by ACF = 1 (Fig. 1c), are shown in the left column. Apart from superimposed small-scale fluctuations reflecting natural turbulence, the time series of radial velocities (Fig. 1a) and the corresponding velocity–azimuth display (VAD) plot (Fig. 1c) show a clean sinusoidal course without any random outliers. The sinusoidal course is typical of measurements with conically scanning DL systems and, provided the wind field is stationary, manifests itself in a U-shaped bimodal distribution of the radial velocities (Fig. 1d). In the middle and right columns the measurements are contaminated with noise, as indicated by ACF = 0.8 and ACF = 0.3, respectively (Fig. 1g, k). Here, the periodic signals are temporarily interrupted by bad estimates that randomly take “any” value in the velocity range ±19 m s−1 (Fig. 1e, i). Furthermore, the distributions of bad estimates differ. Whereas in the middle column the bad estimates appear quite uniformly distributed (type A noise hereafter), the right column shows an additional aggregation of bad estimates around zero (type B noise hereafter). This becomes particularly clear in the VAD diagrams (Fig. 1g, k) and the histograms of the radial velocities (Fig. 1h, l). Note that, in contrast to Fig. 1d, the characteristic U-shaped distribution can no longer be recognized in Fig. 1l because it is mixed with a Gaussian-like distribution of bad estimates. Finally, the level of the signal intensities differs clearly between the noise-free and the noisy examples (Fig. 1b, f, j).
With SNR values around −10 dB, the signals are strong in the noise-free case, whereas with values below −15 dB they are weak in the noisy cases. It is important to point out that the signal levels of the two noisy cases are mostly the same and thus give no indication of the type of noise distribution.
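The histogram features discussed for Fig. 1 can be mimicked with synthetic data. The Python sketch below is purely illustrative: the clean series is a sinusoid plus a small Gaussian error, type A noise replaces samples with uniform values over ±19 m s−1, and type B noise is modelled, as a hypothetical assumption, by drawing every second bad estimate from a Gaussian centered at zero. The actual statistics of type B noise are not characterized in this work.

```python
import numpy as np

V_BAND = 19.0  # half-width of the velocity search band (m/s)


def synthetic_vad(n_scans=25, p_bad=0.0, noise_type="A", seed=1):
    """Hypothetical conical-scan series: 6 m/s sinusoid + 0.3 m/s Gaussian error.

    Type A: bad estimates uniform over +-V_BAND.
    Type B (illustrative assumption): every second bad estimate is instead drawn
    from a Gaussian around zero (sd 2 m/s), i.e. an extra aggregation near zero.
    """
    rng = np.random.default_rng(seed)
    az = np.radians(np.tile(np.arange(360.0), n_scans))
    vr = 6.0 * np.cos(az - np.radians(250.0)) + rng.normal(0.0, 0.3, az.size)
    bad = np.flatnonzero(rng.random(az.size) < p_bad)
    vr[bad] = rng.uniform(-V_BAND, V_BAND, bad.size)
    if noise_type == "B":
        near_zero = bad[::2]
        vr[near_zero] = np.clip(rng.normal(0.0, 2.0, near_zero.size), -V_BAND, V_BAND)
    return vr


def fraction_between(v, lo, hi):
    """Fraction of samples with lo <= v < hi."""
    return float(np.mean((v >= lo) & (v < hi)))


vr_clean = synthetic_vad()
vr_a = synthetic_vad(p_bad=0.4, noise_type="A")
vr_b = synthetic_vad(p_bad=0.4, noise_type="B")
# U shape: the bin near the sinusoid's extreme is much fuller than the bin near zero
print(fraction_between(vr_clean, 5.0, 6.0), fraction_between(vr_clean, 0.0, 1.0))
# Type B puts noticeably more samples close to zero than type A
print(np.mean(np.abs(vr_a) < 1.0), np.mean(np.abs(vr_b) < 1.0))
```

The clean series reproduces the U-shaped distribution of Fig. 1d, and the assumed type B model fills the center of the histogram, qualitatively as in Fig. 1l.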

All three measurement examples have been taken with the same DL system under identical configuration (e.g., Na = 2000 pulse accumulations). Despite the low pulse accumulations there are measurement cases with and without noise. This can be explained by the natural variability in the atmospheric aerosol content over the course of a day and with altitude. Aerosols act as backscattering targets and their atmospheric loading influences the quality of the DL signals and therewith the amount of noise in the measurements. A sufficiently large amount of aerosol can contribute to noise-free DL measurements even for low pulse accumulations. Little aerosol combined with low pulse accumulation, however, represents an unfavorable constellation for achieving good data quality.

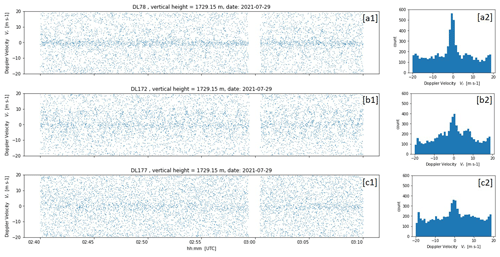

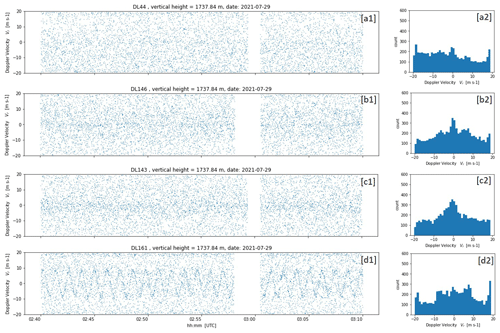

As for the differences in the distributions of bad estimates, to the authors' knowledge no user reports of nonuniform bad estimate distributions in DL measurements have been published so far. A uniform distribution of bad estimates indicates that the noise component of the spectrum of the lidar signal is white noise (Stephan et al., 2018). It is assumed that additional non-white DL noise sources such as shot noise, detector noise, relative intensity noise (RIN), and speckle (Hellhammer, 2018) cause the nonuniform type B noise characteristics. More in-depth investigations would be necessary here but are beyond the scope of this work. It is worth pointing out, however, that type B noise is not a problem specific to DL78. During the FESSTVaL (Field Experiment on Sub-mesoscale Spatio-Temporal Variability in Lindenberg) campaign (Hohenegger et al., 2023), there was the opportunity to compare measurements from three StreamLine and four StreamLine XR Doppler lidars (see Sect. 2.1) positioned side by side and configured identically using the scan mode outlined in Smalikho and Banakh (2017). The comparison revealed type-B-like noise contamination in the data of several systems, albeit to varying degrees (see Figs. C1 and C2 in Appendix C). This suggests that type B noise is typical at least of StreamLine DL systems.

In the previous section it has been shown that DL radial velocity measurements obtained using the measurement strategy proposed in Smalikho and Banakh (2017) can show a strikingly high proportion of noise. The successful application of the associated retrieval method to determine turbulence variables from DL measurements, however, requires a probability of bad estimates close to zero (Smalikho and Banakh, 2017; Banakh et al., 2021). Hence, a careful pre-processing of measurement data to detect and remove noise is necessary.

Different filtering techniques for separating reliable data from noisy measurements can be found in the literature. A closer look at the underlying principles of radial velocity quality assessment allows a rough subdivision into two categories: (1) methods that use additional parameters from the post-processing of Doppler spectra and (2) methods that apply statistical analysis tools to time series of DL radial velocity estimates. The method behind the first category is the well-known SNR thresholding technique. Examples of the second category are the median absolute deviation (MAD), originating from Gauss (1816), consensus averaging (CNS), introduced by Strauch et al. (1984), the filtered sine wave fit (FSWF) by Smalikho (2003), and the integrated iterative filter approach by Steinheuer et al. (2022). The last two methods are directly integrated into a retrieval method for wind or wind gusts. A more detailed review of these and further filtering methods of the second category is given in Beck and Kühn (2017). In this section the advantages and disadvantages of the two categories are examined using the SNR and CNS filters as examples.

3.1 SNR thresholding

The signal-to-noise ratio (SNR) is determined from the Doppler spectra and is defined as the ratio of the signal power to the noise power. The former carries the meaningful information of a measurement, whereas the latter is an unwanted contribution that blurs this information. The higher the signal power and the lower the noise power, the higher the SNR and thus the better the quality of the radial velocity estimate.

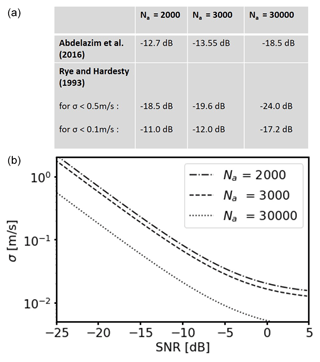

In practice, DL users often have to decide on a suitable SNR threshold value (SNRthresh hereafter) to separate good from bad estimates. Depending on how the measurement data are used later on, the expected uncertainty of the radial wind velocity also plays a role in this decision. Pearson et al. (2009) provide a guideline on this issue based on an experimental approach. Their results showed good agreement with theoretical results based on an approximate equation introduced by Rye and Hardesty (1993), reading

with and Np=M Na (SNR). Here, σ denotes the error estimate of the radial velocity in the weak-signal, multipulse-averaged regime, Na the number of accumulated pulses, B the bandwidth, Δν the signal spectral width, and M the gate length in points. For more details see Appendix D. Note that Eq. (2) can be used in two ways. On the one hand, it provides an estimate of the uncertainty of the radial velocity estimate as a function of SNR. On the other hand, it provides guidance for calculating SNRthresh for a prescribed acceptable uncertainty of the Doppler lidar estimate. Examples of Eq. (2) evaluated for different values of Na are given in Fig. 2. The curves show how the uncertainty of the measurements decreases with increasing SNR. In addition, the effect of pulse accumulation becomes visible. For the same uncertainty requirement, e.g., σ<0.5 m s−1 or σ<0.1 m s−1, the corresponding SNR threshold value for reliable data is lower for Doppler estimates based on higher pulse accumulations (e.g., SNRthresh = −24 dB or SNRthresh = −17 dB for Na = 30 000) than for Doppler estimates based on fewer accumulated pulses (e.g., SNRthresh = −18.5 dB or SNRthresh = −11 dB for Na=2000). Another approximate equation for determining SNRthresh is suggested in Abdelazim et al. (2016). Taking into account only the number of accumulated pulses Na, they propose the following equation for determining the SNR threshold:

Example results for SNRthresh derived from Eq. (3) for different Na are also given in Fig. 2.

Figure 2. (a) Examples of SNR threshold values calculated as a function of the number of accumulated pulses Na based on the approach by Abdelazim et al. (2016) and the approach by Rye and Hardesty (1993). (b) Examples of the change in the theoretical standard deviation σ of the Doppler velocity estimates as a function of the signal-to-noise ratio (SNR) following the approach by Rye and Hardesty (1993). The curves are valid for different Na and the following system-specific parameters: B=2 × 19 m s−1, M=10, Δν=1.3 m s−1.

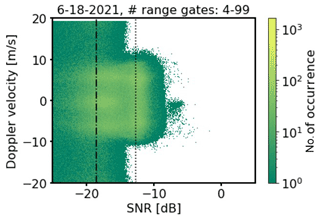

The turbulence retrieval proposed by Smalikho and Banakh (2017) requires measurements with a probability of bad estimates close to zero. The example shown in Fig. 3 clearly illustrates that SNRthresh = −12.7 dB, calculated by means of Eq. (3), provides a universally valid first-guess SNR threshold that satisfies this requirement across all measurement heights. Here, the Vr estimates from range gates 4 to 99 are plotted against their associated SNR values. While bad estimates randomly filling the entire search band ±19 m s−1 can be observed to the left of the threshold line, none can be found to the right of it. If this threshold were relaxed further, the probability of bad estimates would increase. From Eq. (2) it can additionally be inferred that with SNRthresh = −12.7 dB measurement uncertainties of less than 0.1 m s−1 can be expected. It is important to note, however, that applying SNRthresh = −12.7 dB to DL radial velocity measurements would discard a huge fraction of obviously good estimates, as shown by the examples in Fig. 1 as well as Fig. 5a, c, and e. The consequence would be reduced data availability, which often makes a representative derivation of wind and turbulence products difficult or even impossible. This is a limiting factor of this kind of approach to distinguishing between good and bad estimates (Dabas, 1999).
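A minimal Python sketch of SNR thresholding, as discussed above, is given below. It masks every radial velocity whose SNR falls below SNRthresh = −12.7 dB (the value from Eq. 3 quoted in the text) and reports the resulting data availability. The five Vr/SNR pairs are hypothetical values chosen to show how an estimate that looks consistent with its neighbours (2.8 m s−1 at −13.5 dB) is discarded together with the outliers; the function names are also hypothetical.

```python
import numpy as np


def db_to_linear(snr_db):
    """Convert SNR from dB to a linear power ratio."""
    return 10.0 ** (np.asarray(snr_db, dtype=float) / 10.0)


def snr_threshold_filter(vr, snr_db, snr_thresh_db=-12.7):
    """Set radial velocities with SNR below the threshold to NaN.

    Returns the filtered velocities and the data availability, i.e. the
    fraction of estimates that pass the threshold.
    """
    vr = np.asarray(vr, dtype=float)
    keep = np.asarray(snr_db, dtype=float) >= snr_thresh_db
    return np.where(keep, vr, np.nan), float(keep.mean())


# Hypothetical values for five range gates of one ray
vr = np.array([3.1, -12.4, 2.8, 0.2, 2.5])            # m/s
snr_db = np.array([-9.5, -19.0, -13.5, -21.0, -12.0])  # dB
filtered, availability = snr_threshold_filter(vr, snr_db)
print(filtered, f"availability: {availability:.0%}")   # availability: 40%
print(f"-12.7 dB = {float(db_to_linear(-12.7)):.4f} (linear ratio)")
```

The filter is a single comparison per estimate, which is what makes SNR thresholding attractive for routine use; its weakness is that the decision ignores whether the velocity itself is plausible.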

Figure 3. Doppler velocity vs. SNR from conically scanning Doppler lidar measurements with Na = 2000 accumulated pulses. The plot includes full-day measurements for all range gates between the 4th and the 99th. The vertical lines denote SNR thresholds based on different approaches, namely by Abdelazim et al. (2016) with a Doppler velocity uncertainty of σ<0.1 m s−1 (dotted) and by Rye and Hardesty (1993) with a Doppler velocity uncertainty of σ<0.5 m s−1 (dash–dotted). See Fig. 2 for exact SNR threshold values.

3.2 Consensus averaging

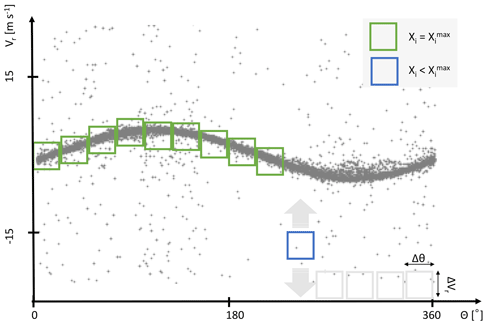

Methodically different from the SNR thresholding technique is the consensus averaging (CNS) method introduced by Strauch et al. (1984), which was originally developed to exclude outliers from radar wind profiler data. A schematic explaining the CNS approach is shown in Fig. 4. Here, noise-contaminated measurements of Vr from several single conical scans executed one after the other are displayed from the VAD perspective. The range of measurement directions (0 to 360°) is separated into equidistant intervals $I_i$ ($i = 1, \dots, n$ with $n \in \mathbb{N}$). The basic idea is to search within each $I_i$ along the Vr axis for the subset of data that both (i) lies within a prescribed interval ΔVr, which is assumed to be a typical value of the atmospheric wind variance, and (ii) contains the largest fraction of the data in $I_i$, which must not fall below a prescribed value Xthresh. As with the SNR thresholding technique, the difficulty lies in a meaningful choice of ΔVr and Xthresh, as will be shown in more detail below.

Figure 4. Schematic representation of a possible practical implementation of the consensus averaging (CNS) method based on an approach by Strauch et al. (1984) (see Sect. 3.2). The data in the green boxes have already been identified as reliable. The blue box illustrates the search for reliable data, and the gray boxes stand for azimuthal intervals that still need to be analyzed.
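The CNS search described above can be sketched in Python as follows. This is one possible implementation under stated assumptions, not the code used for Fig. 5: within each azimuth interval $I_i$ it finds the window of width ΔVr on the Vr axis that holds the most samples and accepts those samples only if they make up at least Xthresh of the interval's data. The defaults ΔVr = 3 m s−1 and Xthresh = 60 % follow the values used in the text; `consensus_filter` is a hypothetical name, and the input is assumed to contain no NaNs.

```python
import numpy as np


def consensus_filter(azimuth_deg, vr, bin_width_deg=1.0, delta_vr=3.0, x_thresh=0.6):
    """Return a boolean mask of radial velocities accepted by a CNS-type search."""
    azimuth_deg = np.mod(np.asarray(azimuth_deg, dtype=float), 360.0)
    vr = np.asarray(vr, dtype=float)
    accepted = np.zeros(vr.size, dtype=bool)
    bin_index = np.floor(azimuth_deg / bin_width_deg).astype(int)
    for b in np.unique(bin_index):
        idx = np.flatnonzero(bin_index == b)  # samples in interval I_i
        values = np.sort(vr[idx])
        # number of samples in [values[j], values[j] + delta_vr] for every j
        counts = np.searchsorted(values, values + delta_vr, side="right") - np.arange(values.size)
        best = int(np.argmax(counts))
        if counts[best] / idx.size >= x_thresh:  # consensus reached in this interval
            lower = values[best]
            in_window = (vr[idx] >= lower) & (vr[idx] <= lower + delta_vr)
            accepted[idx[in_window]] = True
    return accepted


# Hypothetical test data: 25 scans at 1 deg steps with 20 % uniformly distributed bad estimates
rng = np.random.default_rng(3)
az = np.tile(np.arange(360.0), 25)
vr = 6.0 * np.cos(np.radians(az - 250.0)) + rng.normal(0.0, 0.5, az.size)
bad = rng.random(az.size) < 0.2
vr[bad] = rng.uniform(-19.0, 19.0, bad.sum())
ok = consensus_filter(az, vr)
print(f"good kept: {ok[~bad].mean():.2f}, bad kept: {ok[bad].mean():.2f}")
```

With uniformly distributed bad estimates the sketch keeps nearly all good estimates; bad estimates that happen to fall inside the ΔVr window are accepted as well, which is inherent to the CNS concept.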

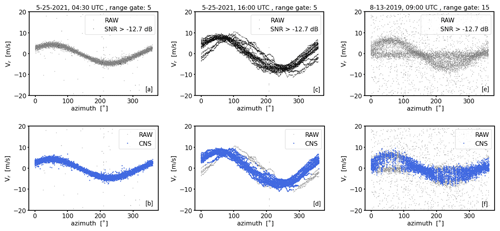

Figure 5. VAD plot examples from conical DL measurements with Na = 2000 pulse accumulations illustrating the application of the SNR thresholding (a, c, e) and the CNS (b, d, f) noise filtering methods. The examples represent three different 30 min measuring intervals at different range gates and with different levels of noise contamination. The SNR threshold value of −12.7 dB was calculated using the approach by Abdelazim et al. (2016). Δθ=1° and ΔVr = 3 m s−1 were used for the application of the CNS.

If the focus is on the derivation of turbulence variables, including variances caused by eddies in turbulent flow, the problem of the CNS approach is that it requires an a priori estimate of precisely the variance that is to be derived. If ΔVr does not correspond to the true atmospheric situation, i.e., if the assumed value of ΔVr is too small or too large, either measurements bearing relevant wind information are rejected or bad estimates remain in the dataset. Examples are given in Fig. 5b, d, and f, assuming ΔVr=3 m s−1 and Xthresh=60 %. The early-morning 30 min example from 25 May 2021 with the timestamp 04:30 UTC (Fig. 5b) shows noise-contaminated DL measurements during weak wind and turbulence conditions. At this time the actual wind variance was obviously lower than assumed by ΔVr, so the prescribed interval ΔVr left room for bad estimates, which the CNS concept then had to accept as reliable. The afternoon example from 25 May 2021 with the timestamp 16:00 UTC (Fig. 5d) shows DL measurements during stronger wind and turbulence conditions than in the morning. Additionally, a change in wind direction at some point during the 30 min interval causes a phase shift in the sine signal of some of the scan circles. Mainly because of this nonstationarity, the variability of the Doppler velocity measurements in some azimuth sectors is obviously larger than assumed by ΔVr=3 m s−1, so relevant information characterizing the nonstationarity falls outside the interval ΔVr and is discarded by the CNS. Note that at this point the focus is on the performance of the CNS and not on the reconstructed wind and turbulence variables.
Hence, this non-applicability of the CNS method during nonstationary measuring intervals needs to be considered apart from the question of whether a derivation of wind and turbulence variables is meaningful if nonstationarity occurs. In Sect. 5 it will be shown that a wrong inclusion of bad estimates or a false exclusion of good estimates because of a non-compatible ΔVr compared to the actual atmospheric situation has the consequence that turbulence products (e.g., TKE) calculated based on improperly pre-filtered measurement data may be either overestimated or underestimated.

Another limitation of the CNS filtering technique is that its successful application assumes a uniform distribution of bad estimates. This becomes evident from the CNS filtering results for the measurement example shown in Fig. 5f, which represents the type B noise case shown in Fig. 1. Here, the subsets of Doppler velocities found by the CNS for some of the azimuthal sectors often do not represent the desired good estimates, because the high density of bad estimates around zero erroneously shifts the ΔVr window with the maximum data availability towards zero. In this case, the nonuniform distribution of the bad estimates makes a successful application of the CNS impossible. Note that the disadvantages identified here generalize to all statistical outlier detection methods that require additional assumptions about the distribution and the variance of the quantity of interest.
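The window-selection argument can be illustrated with a short Python/NumPy sketch of a consensus-type selection within one azimuth sector. It is a simplified stand-in for the CNS described in Sect. 3, not a reproduction of it: we assume that, within a sector, the CNS keeps the values in the ΔVr-wide velocity window containing the most data, provided this window holds at least a fraction Xthresh of the sector's values. The function name `consensus_sector` and its defaults (ΔVr = 3 m s−1, Xthresh = 0.6, matching the values quoted above) are hypothetical.

```python
import numpy as np


def consensus_sector(vr, dv=3.0, x_thresh=0.6):
    """Consensus-type selection for one azimuth sector (illustrative sketch).

    Returns a boolean mask marking the values inside the velocity window of
    width dv that contains the most data, provided that window holds at least
    a fraction x_thresh of all values; otherwise nothing is kept.
    """
    v = np.asarray(vr, dtype=float)
    order = np.argsort(v)
    vs = v[order]
    # A window with maximal count can always start at a data value, so count
    # the values in [vs[j], vs[j] + dv] for every sorted start value vs[j].
    ends = np.searchsorted(vs, vs + dv, side="right")
    counts = ends - np.arange(vs.size)
    j = int(np.argmax(counts))
    keep = np.zeros(v.size, dtype=bool)
    if counts[j] >= x_thresh * v.size:
        keep[order[j:ends[j]]] = True
    return keep


# Type-B-like sector: 20 good estimates near 6 m/s, 35 bad estimates near zero.
good = np.linspace(5.0, 7.0, 20)
bad = np.linspace(-1.0, 1.0, 35)
mask = consensus_sector(np.concatenate([good, bad]))
print(mask[:20].any(), mask[20:].all())  # False True: the window locks onto the noise
```

With only sparse, widely spread noise (e.g., two isolated values at −10 and 12 m s−1 added to the same good cluster), the window locks onto the good estimates instead; it is the dense band of type B noise near zero that captures it.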

The filtering techniques discussed in the previous section are not efficient enough if DL measurements with both the highest possible data availability and a probability of bad estimates close to zero are required. This section presents new filtering techniques that perform better with respect to this requirement. Depending on the noise characteristics of the measurements, two different approaches (referred to as approach I and approach II hereafter) are discussed. Each approach consists of two separate filtering steps carried out one after the other, a coarse filter followed by a filter for post-processing, i.e., approach I = coarse filter I + filter for post-processing and approach II = coarse filter II + filter for post-processing; the two steps use different perspectives of data representation to analyze the occurrence of bad estimates in the DL measurements. Both coarse filters make use of the VV90D perspective, which will be introduced in Sect. 4.1, whereas the post-processing filter requires the well-known VAD perspective.

4.1 Framework of the VV90D perspective

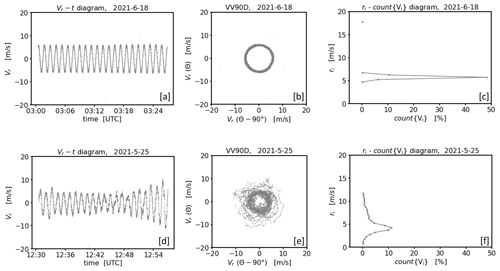

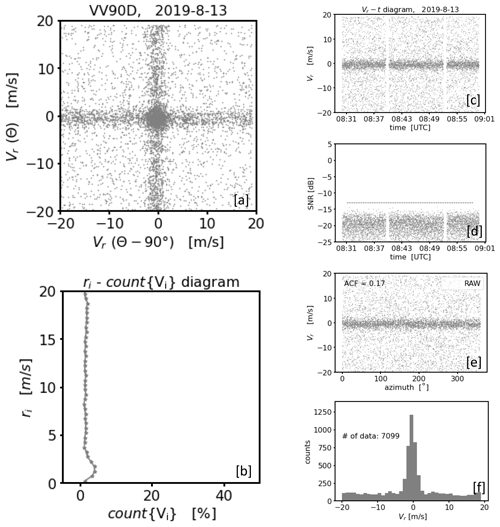

The VV90D perspective represents a diagram in a rectangular coordinate system in which each radial velocity value Vr obtained from a conically scanning DL is plotted versus its counterpart measured at an azimuthal shift of 90°. For a time series of radial velocity measurements, this means that $V_r(R,\theta,t)$ is plotted along the y axis and $V_r(R,\theta+90^\circ,t^*)$ along the x axis. Here, R denotes the range gate, θ the azimuth angle along the scan circle, and t the timestamp of the measurement; t∗ denotes the timestamp of the shifted counterpart value. The motivation for this graphic representation of DL measurements is explained in more detail using the two noise-free measurement examples shown in Fig. 6, where measurements from a conically scanning DL are visualized both in a Vr−t diagram (Fig. 6a and d) and in a VV90D plot (Fig. 6b and e). The plots in the upper row reflect a homogeneous and stationary measurement example (case 1), which can be clearly seen from the smooth sinusoidal course of the radial velocity, i.e., Vr ∼ sin(θ), with a nearly constant amplitude (Fig. 6a). The same measurement example visualized in a VV90D diagram shows clear circular patterns (Fig. 6b). This can be explained by the phase shift identity $\sin(\theta+90^\circ)=\cos(\theta)$, which yields $V_r(R,\theta+90^\circ,t^*) \sim \cos(\theta)$. Because $\sin^2\theta+\cos^2\theta=1$, the paired data points $(V_r(R,\theta+90^\circ,t^*),\,V_r(R,\theta,t))$ plotted in a rectangular coordinate system describe a circle whose radius equals the amplitude of the sine. Note that this way of looking at DL measurements shows analogies to the harmonic oscillator, where the time evolution of displacement and velocity is frequently visualized in a phase diagram to show the 90° phase relationship between velocity and position much more clearly (Vogel, 1997). The plots in the lower row of Fig. 6 represent a nonstationary measurement example (case 2). Because the sinusoidal course is superimposed with smaller fluctuations and has a varying amplitude (Fig. 6d), the corresponding VV90D diagram (Fig. 6e) shows a slightly wider and more blurred ring structure.
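The circular pattern follows directly from the pairing. The sketch below builds the VV90D pairs for a synthetic, noise-free conical scan. It assumes a constant azimuth step Δθ (here 1°), continuous scanning in the direction of increasing θ, and no missing rays, so that the counterpart at θ + 90° is found k = 90°/Δθ samples later in the series; the helper name `vv90d_pairs` is hypothetical.

```python
import numpy as np


def vv90d_pairs(vr, dtheta_deg=1.0):
    """Return (x, y) = (Vr(theta + 90 deg, t*), Vr(theta, t)) for a scan series.

    Assumes a constant azimuth step and no gaps, so the 90-deg counterpart of
    sample i is sample i + k with k = 90 / dtheta. The last k samples have no
    counterpart and are dropped.
    """
    v = np.asarray(vr, dtype=float)
    k = int(round(90.0 / dtheta_deg))
    return v[k:], v[:-k]


# Three stationary scan circles: Vr = a*sin(theta) + b*cos(theta), amplitude 5 m/s.
theta = np.deg2rad(np.arange(3 * 360) % 360)
vr = 4.0 * np.sin(theta) + 3.0 * np.cos(theta)
x, y = vv90d_pairs(vr)
radius = np.hypot(x, y)
print(round(float(radius.min()), 6), round(float(radius.max()), 6))  # 5.0 5.0
```

Replacing the constant amplitude with a slowly varying one (a nonstationary case) spreads the radii over a range of values, which corresponds to the wider, blurred rings described for case 2.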

Figure 6. Examples of a graphical visualization of radial velocity measurements from a conically scanning DL using the framework of the VV90D perspective for two cases with stationary (a–c) and nonstationary (d–f) winds. The panels in each row illustrate in sequence: a time series plot of the measured radial velocity over a 30 min time period (a, d), the same data plotted using the VV90D perspective (b, e), and the frequency distribution of data points in the VV90 plane binned by circular rings $[r_i-\Delta r/2,\, r_i+\Delta r/2)$, where $r_i = n\,\Delta r$ (n∈ℕ) and Δr=0.5 m s−1 (c, f).

A quantitative description of the VV90D ring structures is provided by the diagrams shown in Fig. 6c and f. These diagrams are referred to as ri−count{Vi} diagrams hereafter. Here, ri denotes the central radius of a pre-defined circular ring with origin at (0, 0) and width Δr in the VV90 plane. The quantity count{Vi} denotes the number of measurements found in this ring. Note that these data represent a circular-ring-related subset Vi of the whole measurement series, i.e., Vi⊂{V}. Taking the equation of a circle, $x^2 + y^2 = r^2$, into account, in practice both the subset Vi and count{Vi} can be determined by identifying the radial velocities of the measurement time series that satisfy the relation

$$V_r^2(R,\theta+90^\circ,t^*) + V_r^2(R,\theta,t) = r^2 \tag{4}$$

with the range of radii r defined through the boundaries of the circular ring, $r_i - \Delta r/2 \le r < r_i + \Delta r/2$. In order to generate the ri−count{Vi} diagrams shown in Fig. 6c and f, the full area of the VV90 plane has been subdivided into closely spaced circular rings of increasing radius ($r_i = n\,\Delta r$ with n∈ℕ) with a fixed step Δr=0.5 m s−1. Note that this value turned out to be a viable choice when using the ri−count{Vi} diagram as a tool in a filtering procedure (see Sect. 4.2). For case 1, the measured radial velocities are confined to only a few circular rings with ri between 5 and 6.5 m s−1, with the largest fraction of measurements in the circular ring with ri = 5.5 m s−1 (see Fig. 6c). Additionally, due to the stationary wind field conditions, the obtained availability distribution is strictly unimodal and symmetric. For case 2 the measurements are distributed over a broader range of circular rings with ri between 0.5 and 12.5 m s−1. The largest fraction of the measurements occurs within the circular ring with ri = 4.5 m s−1 (see Fig. 6f). The distribution of data availability is nearly unimodal but asymmetric. The examples shown in Fig. 6 represent just two specific situations; a great variety of VV90D and associated ri−count{Vi} diagrams may result for different atmospheric and lidar signal conditions, including multimodal distributions (not shown here). In the following we refer to the VV90D diagram and the associated ri−count{Vi} diagram as the framework of the VV90D perspective.
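A minimal sketch of how Eq. (4) can be turned into an ri−count{Vi} diagram, assuming the same simple pairing as for a gap-free series with constant azimuth step, and rings $[r_i-\Delta r/2,\, r_i+\Delta r/2)$ centred on $r_i = n\,\Delta r$. The function name `ring_counts` is hypothetical.

```python
import numpy as np


def ring_counts(vr, dtheta_deg=1.0, dr=0.5):
    """Bin VV90D pairs into circular rings and count the members of each ring.

    Returns (radii, counts, ring): radii[n] = n*dr is the central ring radius,
    counts[n] = count{V_i} for that ring, and ring[i] is the ring index of
    sample i (-1 for the last k samples, which have no 90-deg counterpart).
    """
    v = np.asarray(vr, dtype=float)
    k = int(round(90.0 / dtheta_deg))
    r = np.hypot(v[:-k], v[k:])                       # radius r from Eq. (4)
    ring = np.full(v.size, -1)
    ring[:-k] = np.floor(r / dr + 0.5).astype(int)    # r_i - dr/2 <= r < r_i + dr/2
    counts = np.bincount(ring[:-k])
    radii = np.arange(counts.size) * dr
    return radii, counts, ring


# Stationary example: all pairs fall into the ring centred on 5.5 m/s.
theta = np.deg2rad(np.arange(4 * 360) % 360)
vr = 5.5 * np.sin(theta)
radii, counts, ring = ring_counts(vr)
print(radii[np.argmax(counts)], counts.max())  # 5.5 1350
```

Plotting `counts` against `radii` as a bar chart gives the ri−count{Vi} diagram; the ring index array is what a filter needs to flag all samples outside a chosen ring.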

Compared to the commonly used VAD visualization technique, the framework of the VV90D perspective represents an alternative way of displaying radial velocity measurements from a conically scanning DL and at the same time opens up new possibilities for data analysis. In the next section it will be shown how this framework can be used to develop suitable techniques for filtering bad estimates from noisy DL data.

4.2 Coarse filtering techniques

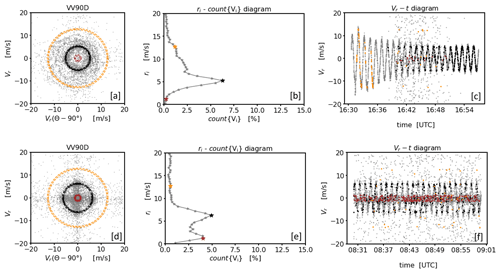

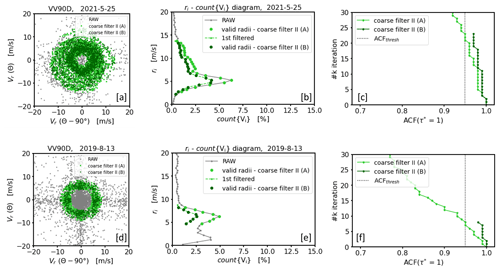

Two different coarse filtering techniques are presented next. The underlying ideas are motivated by characteristic features of good and bad estimates in the VV90D diagram, which are briefly explained here using the noise-contaminated measurement examples of type A and type B from Sect. 2.2. Compared to noise-free DL measurements, noticeable features of noise-contaminated DL measurements in the VV90D are the greater spread of paired DL data $(V_r(R,\theta+90^\circ,t^*),\,V_r(R,\theta,t))$ and a lack of clear circular patterns (Fig. 7a, d). In the associated ri−count{Vi} diagrams (Fig. 7b, e) this goes along with a broader distribution of data points over a larger range of circular rings. Furthermore, for the type B noise measurement example, the more densely distributed bad estimates around zero produce a visible cross-shaped region in the VV90D (Fig. 7d) and a pronounced secondary peak in the ri−count{Vi} diagram (Fig. 7e). A closer examination of the data in the three color-coded circular rings shows that circular rings with a high data number mostly contain reliable DL radial velocities, i.e., good estimates. This can be seen from the fact that the data in these rings mostly follow the expected sinusoidal course of the DL measurements (see the black dots in Fig. 7c, f), and that they do so as dense sequences of data points. For circular rings with progressively lower data numbers, the associated data subsets Vi contain more and more measurements that deviate from the sine, i.e., bad estimates that can take any value within the velocity space ±19 m s−1 (see the orange dots in Fig. 7c, f). Notably, bad estimates in such subsets Vi mostly occur as singular points with no further data points in their immediate neighborhood. Note again that the type B measurement example is an exception here: while the circular ring with the global peak in the ri−count{Vi} diagram contains mostly good data, the circular ring with the secondary peak contains mostly bad estimates (see the red dots in Fig. 7f).

Figure 7. Examples of noise-contaminated DL measurements over a 30 min time period analyzed using the framework of the VV90D perspective. The upper (a–c) and lower (d–f) rows show measurements contaminated with type A and type B noise, respectively (see Fig. 1). The plots in each row show, from left to right, the VV90D plot (a, d), the frequency distribution binned by circular rings $[r_i-\Delta r/2,\, r_i+\Delta r/2)$, where $r_i = n\,\Delta r$ (n∈ℕ) and Δr=0.5 m s−1 (b, e), and the time series plot (c, f). Additionally, for each measurement example three specific circular rings have been chosen to illustrate where the measurement data contained in the circular ring (highlighted in different colors) are located in the time series plot.

4.2.1 Filtering by single point analysis – coarse filter I

One specific property emerging from the analysis of noisy DL data above is that, if subsets Vi of data points binned by circular rings are analyzed individually, good estimates mostly occur in a dense sequence of points following the sinusoidal course of the measurements, while bad estimates mostly occur as singular points with no further data points in their immediate neighborhood and can take any value within the velocity space ±19 m s−1. These properties open a first way to develop a filter technique for bad estimates, namely by detecting and discarding singular points in circular-ring-related subsets Vi of measurements. In practice, this can be implemented as follows. Use the framework of the VV90D perspective and consider all circular rings spanning the VV90 plane individually. To select the ring-related data points, always start with the original time series and set all measurement points that do not satisfy Eq. (4) for the given ring to a non-numeric flag value (e.g., NaN). This gives for each circular ring a ring-specific time series that has the same length as the original one but in which only the measurement points falling into the respective ring retain a numerical value. Then, for each ring-specific time series, sequentially check each position for flagged predecessor and successor positions within a pre-defined azimuthal neighborhood. A position occupied by an unflagged (i.e., numerical) value whose predecessor and successor positions are flagged can be regarded as a singular point and discarded. Finally, the resulting ring-specific time series are merged back into one full time series, which then represents a filtered time series from which most of the bad estimates should have been excluded. This filtering technique is referred to as coarse filter I hereafter. Note, however, that not all bad estimates necessarily occur as singular points, so a minor portion of bad estimates may still remain in the measurement series. These can be discarded using classical outlier detection methods (e.g., the 3σ rule applied to differences between the radial velocities of two consecutive azimuthal measurement points). Such methods are only effective if the outliers are genuine outliers, i.e., a few unusual observations. In raw noise-contaminated measurements, the fraction of bad estimates is too high to treat them as outliers in this original sense.
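The coarse filter I steps described above can be sketched in Python/NumPy. The sketch assumes a gap-free series with a constant azimuth step (so the 90° counterpart of sample i is sample i + 90°/Δθ), interprets the “pre-defined azimuthal environment” as a hypothetical half-width `w` in samples, and treats a point as singular if no other point of the same ring lies within ±w positions. Since every pair falls into exactly one ring, merging the ring-specific series reduces to keeping the union of the points that survive in their own ring. The function name and defaults are illustrative, not the settings used for Fig. 8.

```python
import numpy as np


def coarse_filter_1(vr, dtheta_deg=1.0, dr=0.5, w=1):
    """Discard singular points in circular-ring-related subsets (sketch)."""
    v = np.asarray(vr, dtype=float)
    k = int(round(90.0 / dtheta_deg))
    ring = np.full(v.size, -1)                     # -1: no 90-deg counterpart
    ring[:-k] = np.floor(np.hypot(v[:-k], v[k:]) / dr + 0.5).astype(int)
    kernel = np.ones(2 * w + 1, dtype=int)
    keep = np.zeros(v.size, dtype=bool)
    for n in np.unique(ring[ring >= 0]):
        member = (ring == n).astype(int)           # ring-specific series, 0 = flagged
        # Number of unflagged neighbours within +-w positions (excluding itself).
        neighbours = np.convolve(member, kernel, mode="same") - member
        keep |= (member == 1) & (neighbours > 0)   # singular points are dropped
    return np.where(keep, v, np.nan)               # merged, filtered time series


# Synthetic example: 10 stationary scans with isolated bad estimates (15 m/s).
n = 10 * 360
theta = np.deg2rad(np.arange(n) % 360)
vr = 5.0 * np.sin(theta)
bad = np.arange(n) % 10 == 0
vr[bad] = 15.0
vf = coarse_filter_1(vr)
```

Because the synthetic bad estimates are isolated by construction, all of them are removed here; as noted above, real noise does not always occur as singular points.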

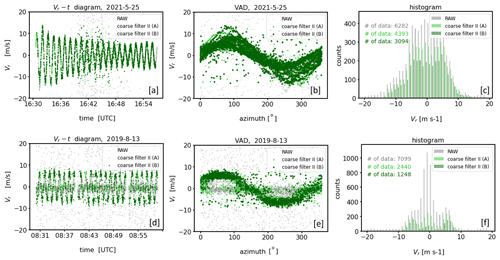

Figure 8. Examples of the outcome of coarse filter I. The upper (a–c) and lower (d–f) rows show measurements contaminated with type A and type B noise, respectively (see Fig. 1). The plots in each row show, from left to right, a comparison of the time series before (RAW) and after the application of coarse filter I using a Vr−t diagram (a, d), a comparison of the time series before and after filtering using the VAD perspective (b, e), and a comparison of the associated histograms of the radial velocities (c, f).

Results obtained by applying coarse filter I to the measurement examples of Fig. 7 are shown in Fig. 8. Bad estimates are removed best from the measurements with type A noise (Fig. 8a). During the sub-interval of enhanced noise in the radial velocity time series, however, a severe thinning of data points that represent good estimates is striking (Fig. 8b). This erroneous exclusion of good estimates happens when the number of measurements, i.e., count{Vi}, is comparatively low for a larger number of circular rings, because low values of count{Vi} increase the probability that good estimates also appear as singular points. Consequently, coarse filter I performs worse the more bad estimates the Doppler velocity time series contains (Fig. 8d, e), since an increasing number of bad estimates increases the number of sparsely filled circular rings extending over the whole VV90 plane. From the VAD perspective, however, it can be seen that despite the strong data thinning the remaining data points represent a suitable first guess for the good estimates of the time series, revealing the range of good estimates for each azimuthal direction (Fig. 8b). For the type B noise example, the performance of coarse filter I is not convincing: a large fraction of bad estimates of the type “noise around zero” remains in the dataset after filtering (Fig. 8e). We conclude that the specific distribution characteristics of type B noise measurements prevent a distinction between good and bad estimates by means of singular point detection.

4.2.2 Filtering based on ACF analysis – coarse filter II

The underlying idea of coarse filter II makes use of another specific property that can be derived from the analysis of noise-contaminated measurements using the framework of the VV90D perspective. As described above, circular rings with a comparatively high data number count{Vi} mostly include good estimates, and as the data number decreases, the occurrence of bad estimates increases. Thus, evaluating the ACF of circular-ring-related data subsets Vi with a high data number is expected to yield ACF ∼ 1, whereas for subsets Vi with a low data number this value would be comparably low (see Sect. 2.2 and Appendix B). This property opens up a second way to develop a filter technique for bad estimates, namely by selecting only those circular rings, and the associated subsets of the entire measurement time series, whose ACF value does not fall below a pre-defined threshold (ACFthresh hereafter). In practice, this can be implemented as follows. Use the framework of the VV90D perspective. First, find the circular ring in the ri−count{Vi} diagram with the absolute maximum data number (i.e., search for the ri with MAX(count{Vi})). Next, temporarily set all data points of the original measurement time series that do not satisfy Eq. (4) for the circular ring with the previously determined central radius ri to a non-numeric flag value (e.g., NaN). This gives an initial guess $V_f$ for the filtered time series. To check for low noise contamination by means of the ACF, replace the flagged positions of $V_f$ with values estimated from the respective unflagged predecessor and successor positions using linear interpolation, and calculate the ACF. Replacing the flagged positions is a necessary technical step to maintain the length of the time series and thus the azimuthal distances between the individual measurement points. This is important because consecutive measurements at different azimuthal distances would correlate differently with each other, which in turn would affect the ACF (see Appendix B). If the good quality of this filtered time series is reasonably assured, i.e., if ACF ≥ ACFthresh, the unflagged values of $V_f$ can be regarded as reliable. In further iteration steps, the number of reliable measurements can be gradually increased by repeating the procedure described above, taking into account not only the subset Vi of the circular ring with MAX(count{Vi}) but also the subsets of adjacent circular rings $r_{i\pm1}, r_{i\pm2}, \ldots$, with the data number determining the order in which they are added. This effectively corresponds to considering a wider circular ring with a correspondingly higher number of data, which constitute a newly filtered time series $V_f^{(k)}$ after the kth iteration. As long as the added subsets Vi±1 from adjacent circular rings include mostly good estimates, the ACF of the newly generated time series remains close to 1, i.e., ACF ≥ ACFthresh, and the iteration can be continued. The iteration is stopped as soon as the ACF of the newly generated time series falls below the pre-defined threshold, i.e., if ACF < ACFthresh. This happens when the recently added data are subsets of circular rings with an increased fraction of bad estimates. In this case, the result of the previous iteration step can be considered the best possible filtered time series, with the maximum possible data availability and at the same time a low proportion of bad estimates. More detailed information useful for the practical implementation of coarse filter II is given in Appendix F.
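A compact sketch of the coarse filter II iteration, under several assumptions that are ours and not taken from Appendix F: the ACF is evaluated at lag 1 (consecutive samples), the series is gap-free with a constant azimuth step, adjacent rings are added one at a time with ties resolved towards the smaller radius, and `np.interp` fills flagged positions by linear interpolation (holding the edge values constant before the first and after the last unflagged sample). The function names are hypothetical; ACFthresh = 0.95 follows the value used for Fig. 10.

```python
import numpy as np


def lag1_acf(x):
    """Autocorrelation between consecutive samples (lag 1)."""
    return float(np.corrcoef(x[:-1], x[1:])[0, 1])


def coarse_filter_2(vr, dtheta_deg=1.0, dr=0.5, acf_thresh=0.95):
    """Widen a band of rings around MAX(count{V_i}) while the ACF stays >= acf_thresh."""
    v = np.asarray(vr, dtype=float)
    k = int(round(90.0 / dtheta_deg))
    ring = np.full(v.size, -1)                          # -1: no 90-deg counterpart
    ring[:-k] = np.floor(np.hypot(v[:-k], v[k:]) / dr + 0.5).astype(int)
    counts = np.bincount(ring[:-k])
    lo = hi = int(np.argmax(counts))                    # start ring: MAX(count{V_i})
    pos = np.arange(v.size)
    best = np.full(v.size, np.nan)                      # V_f of the last accepted step
    while True:
        keep = (ring >= lo) & (ring <= hi)
        # Fill flagged positions by linear interpolation so that the azimuthal
        # spacing between samples (and hence the ACF) is preserved.
        filled = np.interp(pos, pos[keep], v[keep])
        if not lag1_acf(filled) >= acf_thresh:          # also stops on a NaN ACF
            break                                       # return the previous V_f
        best = np.where(keep, v, np.nan)
        # Candidate rings directly below and above the current band.
        candidates = [c for c in (lo - 1, hi + 1) if 0 <= c < counts.size]
        if not candidates:
            break
        nxt = max(candidates, key=lambda c: counts[c])  # more data first; ties -> lower
        lo, hi = min(lo, nxt), max(hi, nxt)
    return best


# Synthetic example: 10 stationary scans with isolated bad estimates (15 m/s).
n = 10 * 360
theta = np.deg2rad(np.arange(n) % 360)
vr = 5.0 * np.sin(theta)
bad = np.arange(n) % 10 == 0
vr[bad] = 15.0
vf = coarse_filter_2(vr)
```

In this synthetic case the empty rings between the ring of good estimates and the ring holding the bad estimates leave the ACF unchanged, so the iteration stops only when the bad-estimate ring is added, and the previous step is returned.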

Figure 9. Example of the treatment of noise-contaminated DL measurements over a 30 min time period illustrated using the framework of the VV90D perspective in combination with intermediate results when coarse filter II has been applied. The upper (a–c) and lower (d–f) rows show measurements contaminated with type A and type B noise, respectively (see Fig. 1). The plots in each row show, from left to right, the VV90D plot (a, d), the frequency distribution binned by circular rings $[r_i-\Delta r/2,\, r_i+\Delta r/2)$, where $r_i = n\,\Delta r$ (n∈ℕ) and Δr=0.5 m s−1 (b, e), and a graphic illustrating the change in the ACF with each iteration step (for more details see Sect. 4.2.2). Additionally, intermediate results from two consecutive applications of coarse filter II, indicated by A and B, are shown.

Figure 10. Examples of the outcome of coarse filter II. The upper (a–c) and lower (d–f) rows show measurements contaminated with type A and type B noise, respectively (see Fig. 1). The plots in each row show, from left to right, a comparison of the time series before (RAW) and after application of coarse filter II (a, d), a comparison of the time series before and after filtering using the VAD perspective (b, e), and a comparison of the associated histograms of the radial velocities (c, f).

For the measurement examples shown in Fig. 7, relevant technical details of coarse filter II are shown in Fig. 9. The change in ACF with each iteration step is illustrated in Fig. 9c and f. The circular rings included in each iteration step until the iteration was stopped, and the associated data points of the subsets Vi, are color-coded in Fig. 9b and e and in Fig. 9a and d, respectively. The final filter results obtained using ACFthresh=0.95 are shown in Fig. 10. The results of coarse filter II differ from those of coarse filter I (see Fig. 8) in two respects. One advantage of coarse filter II over coarse filter I is that fewer good estimates are incorrectly rejected, which is accompanied by a noticeably higher availability of good estimates. This can be verified from the histograms comparing the distributions of the original and the filtered time series, shown in Figs. 8c and f and 10c and f, respectively. One disadvantage of coarse filter II compared with coarse filter I is a lower hit rate with respect to the goal of rejecting bad estimates. For type A noise-contaminated measurements, this disadvantage is observed more frequently if conditions during the measurement interval are nonstationary (see Fig. 10a–b) than for stationary intervals (not shown here). In this case an increase in the threshold value (e.g., ACFthresh=0.99) would help to better remove bad estimates, but at the expense of removing more good estimates that describe the nonstationarity in the wind field. For type B noise-contaminated measurements, the same issue occurs during nonstationary measurement intervals (see Fig. 10d–e).

4.3 Post-processing filter for optimization

The coarse filter results presented in Sects. 4.2.1 and 4.2.2 are not yet satisfactory for the following reasons. Firstly, the frequent unjustified rejection of good estimates by coarse filter I results in an unnecessary reduction of reliable measurement data. Secondly, the number of bad estimates remaining after coarse filter II is still too high. Hence, additional efforts are required to further optimize the filter results, and the results of coarse filters I and II are treated as intermediate results only at this point. Possible further optimization steps are considered in more detail next; together, these steps form the filter for post-processing. Note that all analyses from now on are carried out using the VAD perspective.

4.3.1 Two-stage MAD filter

The median absolute deviation (MAD) is a well-known statistical tool for outlier detection in measured datasets with a unimodal and symmetric distribution (Iglewicz and Hoaglin, 1993; see Sect. 3). Here, the MAD is used as an additional filter step following coarse filter II. The term “outlier” refers to a few uncontrollable and abnormal observations that appear to lie outside the population under consideration. If $X = \{x_1, x_2, \ldots, x_n\}$, with n ∈ ℕ, is a given dataset of measurements that is normally distributed (i.e., 𝒩(μ, σ²) with mean μ and variance σ²), the MAD is defined through

$$\mathrm{MAD} = 1.4826 \cdot \operatorname{median}_i\left(\left|x_i - \operatorname{median}(X)\right|\right),$$

where the factor 1.4826 makes the MAD a consistent estimator of σ for normally distributed data. According to Iglewicz and Hoaglin (1993), values xi are regarded as outliers if they are not included in the interval

$$\operatorname{median}(X) - q \cdot \mathrm{MAD} \le x_i \le \operatorname{median}(X) + q \cdot \mathrm{MAD}.$$

The cut-off value q is mostly chosen arbitrarily; Iglewicz and Hoaglin (1993) suggest q=3.5. A modification of the MAD is the so-called double MAD, which can be used for nonsymmetric distributions (Rosenmai, 2013). The MAD outlier detection method works in analogy to the 3σ rule of thumb (Gränicher, 1996) but is considered more robust. Robust in this context means that the median and the MAD itself are less affected by outliers than the mean or the standard deviation σ. With this in mind, care has to be taken when applying the MAD method to DL radial velocity measurements containing a large fraction of bad estimates. In such a case bad estimates can no longer be considered a few unusual observations, and the median itself may well be influenced by them, so that the requirements for applying the MAD are no longer met. For this reason, the MAD is only used here as a post-processing filter for DL measurements that were previously filtered with coarse filter II.
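The robustness argument can be illustrated with a toy sample in which a single gross outlier inflates the mean and standard deviation enough to mask itself under the 3σ rule, while the median-based interval still flags it. The sketch assumes the scaled MAD (factor 1.4826) and q = 3.5 as above; the function names are hypothetical.

```python
import numpy as np


def mad_outliers(x, q=3.5):
    """Flag values outside median(X) +- q * MAD, with MAD scaled by 1.4826."""
    x = np.asarray(x, dtype=float)
    med = np.median(x)
    mad = 1.4826 * np.median(np.abs(x - med))
    return np.abs(x - med) > q * mad


def three_sigma_outliers(x):
    """Classical 3-sigma rule based on the mean and the standard deviation."""
    x = np.asarray(x, dtype=float)
    return np.abs(x - x.mean()) > 3.0 * x.std()


sample = [1.0, 2.0, 3.0, 4.0, 5.0, 100.0]
print(mad_outliers(sample).tolist())          # [False, False, False, False, False, True]
print(three_sigma_outliers(sample).tolist())  # [False, False, False, False, False, False]
```

Here the outlier raises the standard deviation to about 36, so the 3σ bound (about 108 around a mean of about 19) no longer excludes it, whereas median and MAD stay close to the bulk of the data.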

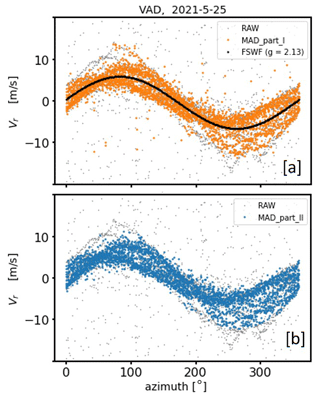

Looking at the pre-filtered DL measurements from the VAD perspective (Fig. 10b, e), the MAD outlier detection method is applied in two stages. In the first stage (MAD_part_I hereafter), we apply the MAD azimuth-wise, i.e., to datasets representing measurements from only one direction. In the second stage (MAD_part_II hereafter), we apply the MAD to a dataset of squared deviations of the radial velocity measurements Vr from the radial velocities of a sine wave fit. To determine the latter, the filtered sine wave fit (FSWF), a wind vector estimation technique introduced by Smalikho (2003), has been used. This technique requires knowledge of the standard deviation σ of the good estimates, which has been estimated from the filter results of MAD_part_I. Intermediate results of the two-stage MAD applied to the outcome of coarse filter II (Fig. 10b) are illustrated in Fig. 11. From experience we know that employing MAD_part_I, particularly by means of the double MAD filter technique (Rosenmai, 2013), can help to retain the azimuthal variability in Vr. This is important, especially when the wind field was inhomogeneous and nonstationary during the 30 min measurement interval. This can be seen in Fig. 11a, which illustrates the outcome of MAD_part_I: relevant measurements reflecting the nonstationarity of the wind field still remain in the filtered dataset even if they deviate substantially from the rest of the azimuthal dataset, for instance around the azimuth angles θ=80° and θ=250°. However, Fig. 11b also shows that MAD_part_I could not remove all bad estimates from the dataset. This can be explained by the fact that often not enough data per azimuth sector were available for a reliable calculation of the median and MAD, which makes MAD_part_II necessary to further improve the detection rate of bad estimates. The corresponding filter results are illustrated in Fig. 11b and clearly show that the fraction of remaining bad estimates could be substantially reduced. Unfortunately, MAD_part_II also cuts off a large fraction of the directional variability that MAD_part_I had preserved. This can be attributed to the choice of the cut-off value (here q=3.5) and clearly illustrates a fundamental issue of statistical filter methods: cut-off values have to be chosen carefully and cannot be generalized as would be required for a routine application.
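A sketch of the two-stage MAD under explicit simplifications: MAD_part_I applies the double MAD to the data of each whole-degree azimuth (only if at least three values are present, a hypothetical minimum), and MAD_part_II applies the MAD to squared residuals from a plain least-squares fit of Vr = a + b cos θ + c sin θ. This ordinary fit replaces the FSWF of Smalikho (2003), which is not reproduced here, so σ is not needed. The names and defaults are hypothetical.

```python
import numpy as np


def double_mad_outliers(x, q=3.5):
    """Double MAD (Rosenmai, 2013): separate scaled MADs below and above the median."""
    x = np.asarray(x, dtype=float)
    med = np.median(x)
    dev = np.abs(x - med)
    left = 1.4826 * np.median(dev[x <= med])
    right = 1.4826 * np.median(dev[x >= med])
    return dev > q * np.where(x <= med, left, right)


def mad_outliers(x, q=3.5):
    """Flag values outside median +- q * (1.4826 * raw MAD)."""
    x = np.asarray(x, dtype=float)
    med = np.median(x)
    return np.abs(x - med) > q * 1.4826 * np.median(np.abs(x - med))


def two_stage_mad(vr, az_deg, q=3.5, min_per_azimuth=3):
    """MAD_part_I per azimuth, then MAD_part_II on squared sine-fit residuals."""
    v = np.asarray(vr, dtype=float)
    az_deg = np.asarray(az_deg, dtype=float)
    az = np.mod(np.round(az_deg).astype(int), 360)
    ok = ~np.isnan(v)
    # MAD_part_I: double MAD applied azimuth-wise.
    for a in np.unique(az[ok]):
        idx = np.flatnonzero(ok & (az == a))
        if idx.size >= min_per_azimuth:
            ok[idx[double_mad_outliers(v[idx], q)]] = False
    # MAD_part_II: ordinary least-squares sine fit (stand-in for the FSWF).
    th = np.deg2rad(az_deg)
    design = np.column_stack([np.ones(v.size), np.cos(th), np.sin(th)])
    coef, *_ = np.linalg.lstsq(design[ok], v[ok], rcond=None)
    sq_dev = (v - design @ coef) ** 2
    idx = np.flatnonzero(ok)
    ok[idx[mad_outliers(sq_dev[idx], q)]] = False
    return np.where(ok, v, np.nan)


# Ten scans of a sine with a small deterministic wiggle and five spikes.
n = 10 * 360
az = np.arange(n) % 360
vr = 5.0 * np.sin(np.deg2rad(az)) + 0.3 * np.sin(2.1 * np.arange(n))
spikes = np.array([37, 500, 1234, 2222, 3333])
vr[spikes] = 15.0
vf = two_stage_mad(vr, az)
```

With only three values per azimuth, the double MAD of MAD_part_I can never flag a value (each side of the median then holds two values, so the larger deviation is only twice that side's median deviation), and any spikes must be caught by MAD_part_II; this mirrors the observation above that too few data per azimuth sector limit MAD_part_I.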

Figure 11. Intermediate results of the two-stage MAD filter applied to the outcome of coarse filter II for the type A measurement example shown in Fig. 10b. The outcomes of MAD_part_I and MAD_part_II are shown in panels (a) and (b), respectively. Panel (a) additionally shows the results of a filtered sine wave fit (FSWF), which has been calculated based on a standard deviation (here m s−1) obtained from the colored data reflecting the results of MAD_part_I.

4.3.2 Determination of the sinusoidal corridor of good estimates

The main advantage of coarse filter I over coarse filter II is its better performance in detecting bad estimates, which makes the two-stage MAD filter redundant as a follow-up step of coarse filter I at this point. The disadvantage of coarse filter I, however, lies in the strong rejection of many obviously good estimates (see Sect. 4.2.1). Next, we describe a way to reverse such wrong rejection decisions in order to increase the availability of reliable measurements again.

Figure 12. Results of the filter for post-processing applied to the outcome of coarse filter I (see Fig. 8) for two measurement examples characterized by a different type of noise distribution (top: type A noise, bottom: type B noise). The panels in each row show, from left to right, the identified borders of the area which defines the corridor of good estimates (a, d), the outcome of the re-activation step of previously discarded good estimates (b, e), and the associated histograms which provide an overview of the final data availability of Vr estimates identified as good data (c, f).

It has been shown in Sect. 4.2.1 that the outcome of coarse filter I for noise-contaminated measurements of type A (Fig. 8a–c) represents a suitable first guess for good estimates if visualized using the VAD perspective (Fig. 8b). Hence, the roughly filtered data can be used to narrow down the sinusoidal area in the VAD space where most of the good estimates can be found. This in turn makes it possible to re-activate, as good data, radial velocities within the area boundaries that were discarded by coarse filter I. The outcome of such a re-activation as a post-processing step of coarse filter I is shown in Fig. 12. The borders of the area that defines the corridor of good estimates (Fig. 12a and d) have been determined in two consecutive steps: first, by calculating the minimum and maximum radial velocity for each azimuthal direction, and second, by calculating the upper envelope of the maximum values and the lower envelope of the minimum values over the interval 0 to 360°. Falsely rejected good estimates are then re-activated by considering all measurement points within the corridor defined by the upper and lower envelopes to be good estimates. The corresponding results are shown in Fig. 12b and e. Note that this procedure for determining the area of good estimates is relatively simple and has its weaknesses, especially in the case of low data availability, when the small number of available min and max values complicates the determination of the envelopes. For such conditions a more sophisticated approach is needed. For the type A noise example, the re-activation results shown in Fig. 12b agree well with the data one would visually identify as good. Furthermore, the re-activation step is accompanied by a strong increase in reliable data compared to the outcome of coarse filter I (compare Figs. 8c and 12c). The higher data availability achieved in this way may therefore improve the variance statistics required for a turbulence product retrieval. In contrast, the re-activation step fails if applied to type B noise-contaminated measurements (Fig. 12e). This is due both to the poor first guess for good estimates after applying coarse filter I, which does not contain enough detail to correctly narrow down the sinusoidal area of good estimates, and to some remaining bad estimates that belong to the specific class of noise around zero. Note that we did not apply the two-stage MAD as a follow-up filter of coarse filter I to discard the latter, because we know from experience that the generally much lower availability of reliable data after coarse filter I, compared with coarse filter II, is unfavorable for a successful MAD application.
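The two-step corridor construction can be sketched as follows. How the envelopes are computed is not specified above, so the sketch assumes 1° azimuth bins, fills empty bins by periodic linear interpolation in azimuth (`np.interp` with `period=360`), and takes the envelopes as a circular running maximum (minimum) of the per-bin maxima (minima) over a hypothetical ±`half_width` bins. The function name is hypothetical.

```python
import numpy as np


def reactivate_in_corridor(vr_raw, vr_coarse, az_deg, half_width=2):
    """Re-activate raw Vr inside the corridor spanned by coarse-filtered data (sketch)."""
    raw = np.asarray(vr_raw, dtype=float)
    coarse = np.asarray(vr_coarse, dtype=float)
    b = np.mod(np.floor(np.asarray(az_deg, dtype=float)).astype(int), 360)
    ok = ~np.isnan(coarse)
    # Step 1: min and max of the coarse-filtered Vr per 1-deg azimuth bin.
    vmax = np.full(360, -np.inf)
    vmin = np.full(360, np.inf)
    np.maximum.at(vmax, b[ok], coarse[ok])
    np.minimum.at(vmin, b[ok], coarse[ok])
    # Fill bins without coarse data by periodic interpolation in azimuth.
    has = np.isfinite(vmax)
    centers = np.arange(360) + 0.5
    vmax = np.interp(centers, centers[has], vmax[has], period=360)
    vmin = np.interp(centers, centers[has], vmin[has], period=360)
    # Step 2: envelopes as circular running max/min over +-half_width bins.
    shifts = range(-half_width, half_width + 1)
    upper = np.max([np.roll(vmax, s) for s in shifts], axis=0)
    lower = np.min([np.roll(vmin, s) for s in shifts], axis=0)
    # Every raw value inside the corridor is (re-)accepted as a good estimate.
    inside = (raw >= lower[b]) & (raw <= upper[b])
    return np.where(inside, raw, np.nan)


# Raw data with bad estimates; the coarse result has also lost every other scan.
n = 10 * 360
az = np.arange(n) % 360
raw = 5.0 * np.sin(np.deg2rad(az))
bad = np.arange(n) % 10 == 0
raw[bad] = 15.0
coarse = raw.copy()
coarse[bad] = np.nan
coarse[(np.arange(n) // 360) % 2 == 0] = np.nan
out = reactivate_in_corridor(raw, coarse, az)
```

A wider `half_width` enlarges the corridor and re-activates more data, at the cost of a higher risk of also re-activating bad estimates that happen to fall inside it.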

Figure 13. Results of the post-processing filter applied to the outcome of coarse filter II in combination with a follow-up two-stage MAD filter step (see Figs. 10 and 11) for two measurement examples characterized by different types of noise distribution (top: type A noise, bottom: type B noise). The panels in each row show, from left to right, the identified borders of the area that defines the corridor of good estimates (a, d), the outcome of the re-activation of previously discarded good estimates (b, e), and the associated histograms, which provide an overview of the final data availability of good estimates (c, f).

So far, the re-activation of falsely rejected good estimates has been discussed as post-processing of the results of coarse filter I. Although the unjustified loss of good estimates after coarse filter II is less substantial, the re-activation step described above can also be applied to the outcome of coarse filter II. However, for the reasons given in Sect. 4.3.1, this requires a preceding two-stage MAD filter. The corresponding results are shown in Fig. 13, where the considerably better results for the type B noise example (Fig. 13d–f) are evident. The disadvantage, however, is that the re-activation step also assigns a substantial number of bad estimates in the region around the inflection points (zero crossings) of the sinusoidal corridor of good estimates to the set of good data. There, the corridor of good estimates overlaps the horizontal band with a higher concentration of noise around zero, and no clear distinction between good and bad estimates is possible. This can also be seen by comparing the histograms shown in Figs. 10f and 13f.
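The azimuth sectors in which the corridor overlaps the near-zero noise band can be located with a simple interval-intersection test on the envelopes. This is a minimal sketch, assuming per-bin upper and lower envelopes as input and a hypothetical half-width `band_halfwidth` of the noise band around zero; the paper does not define such a parameter.

```python
import math


def ambiguous_bins(upper, lower, band_halfwidth):
    """Indices of azimuth bins whose corridor [lower, upper] intersects
    the near-zero noise band [-band_halfwidth, band_halfwidth].

    In these bins the corridor alone cannot separate good from bad
    estimates. Empty bins (lower=+inf, upper=-inf) never match.
    """
    return [
        i
        for i, (u, l) in enumerate(zip(upper, lower))
        if l <= band_halfwidth and u >= -band_halfwidth
    ]


# Synthetic corridor: 8 m/s sinusoid +/- 1 m/s, 10 deg bins centered at 5, 15, ..., 355 deg.
centers = range(5, 360, 10)
upper = [8.0 * math.cos(math.radians(a)) + 1.0 for a in centers]
lower = [8.0 * math.cos(math.radians(a)) - 1.0 for a in centers]
print(ambiguous_bins(upper, lower, band_halfwidth=0.5))  # -> [8, 9, 26, 27]
```

The flagged bins lie next to 90° and 270°, i.e., at the zero crossings of the synthetic sinusoid, which is where the overlap described above occurs.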

Finally, it should be mentioned that re-activating initially discarded data in the identified corridor of good estimates always carries the risk of returning a certain number of bad estimates if the raw measurements were contaminated with noise. Since bad estimates can be distributed over the whole measurement range of ±19 m s−1, they can also occur within the corridor of good estimates. However, as long as the interest is only in mean wind and turbulence statistics, which are primarily obtained from the VAD perspective, the effect of such a small fraction of bad estimates is expected to be negligible.
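The size of this risk can be estimated with a back-of-the-envelope calculation. The sketch below assumes, purely for illustration, that discarded bad estimates are uniformly distributed over ±19 m s−1 and evenly spread over azimuth; real DL noise need not be white (type B noise, for example, clusters around zero, where this estimate would be too low). Under that assumption, the expected number of readmitted bad estimates is the number of discarded bad estimates times the mean corridor width divided by 38 m s−1. The function name is hypothetical.

```python
def expected_readmitted_bad(n_bad_discarded, corridor_widths, v_max=19.0):
    """Expected number of discarded bad estimates that fall inside the corridor.

    Assumes bad estimates are uniform on [-v_max, v_max] (m/s) and spread
    evenly over the azimuth bins whose corridor widths (m/s) are given.
    """
    span = 2.0 * v_max
    # A corridor cannot be wider than the measurement range itself.
    mean_width = sum(min(w, span) for w in corridor_widths) / len(corridor_widths)
    return n_bad_discarded * mean_width / span


# Example: 1000 discarded bad estimates, corridor 3.8 m/s wide in all 36 bins
print(expected_readmitted_bad(1000, [3.8] * 36))  # about 100, i.e., 10 %
```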

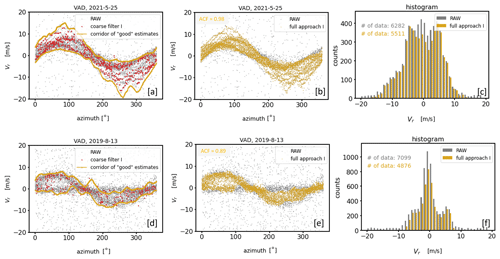

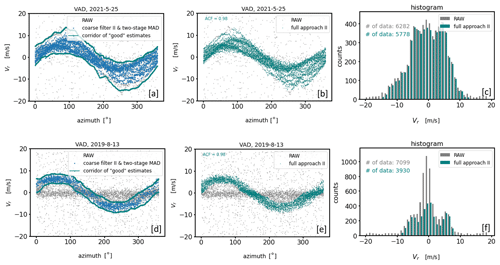

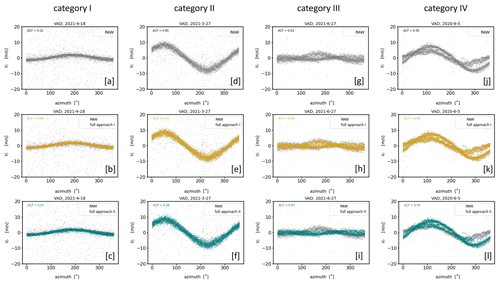

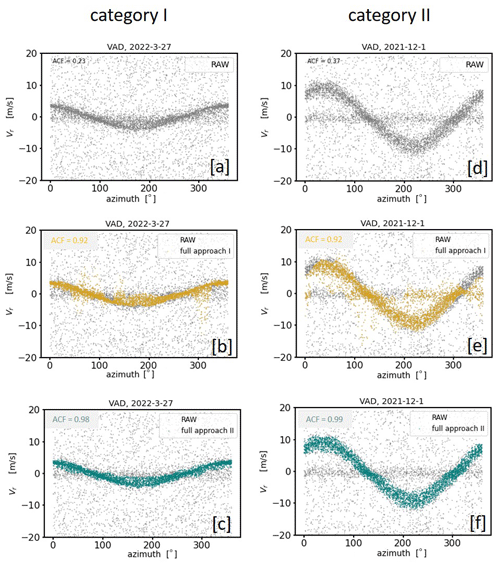

4.4 Intercomparison of approach I and approach II under different atmospheric wind conditions

In the previous subsections, the limits of the usability of approach I and approach II depending on the type of noise have been discussed. The type of noise, however, is not the only factor affecting the applicability of the two filtering techniques. Their success also depends on the strength and temporal evolution of the wind during the measurement period. This becomes evident when comparing the filter results of approach I and approach II for both type A and type B noise (Figs. 14 and 15) for the following categories: (I) weak and stationary wind, (II) strong and stationary wind, (III) weak and nonstationary wind, and (IV) strong and nonstationary wind.

Figure 14. Overview of the final filter results of approach I and approach II for DL measurement examples contaminated with type A noise. The four columns represent selected atmospheric conditions with respect to the wind situation: weak and stationary wind (category I), strong and stationary wind (category II), weak and nonstationary wind (category III), and strong and nonstationary wind (category IV). The panels in the first row show the time series of the respective RAW data of the DL radial velocity measurements over a measurement interval of 30 min. The panels in the second (third) row show both the RAW data and the filter results of approach I (approach II) in different colors.

Figure 15. Same as Fig. 14 except that DL measurements contaminated with type B noise are considered. Categories III and IV are not available.

For measurements with type A noise, the outcomes of approach I and approach II are equally good for categories I and II (Fig. 14b, e and c, f), but for categories III and IV the results of approach I (Fig. 14h, k) are better than those of approach II (Fig. 14i, l). The differences for categories III and IV are not due to bad estimates that approach II failed to detect but to the erroneous rejection of a substantial number of obviously good estimates. This error can be traced back to a bimodal distribution in the measurement-related $r_i$–count$\{V_i\}$ diagram (not shown) caused by the nonstationarity of the wind field during the 30 min measurement interval. Here, the secondary peak was incorrectly interpreted as noise around zero (see Sect. 4.2.2). Note that coarse filter II of approach II is not designed to make this distinction and thus fails when applied to situations belonging to categories III and IV. In contrast, for measurements with type B noise (Fig. 15), approach II performs better than approach I for categories I and II. This can be seen not only visually but also numerically by means of the ACF. While the ACF values of the unfiltered time series were between 0.23 and 0.37 (Fig. 15a, d), after filtering the ACF takes values between 0.98 and 0.99 for approach II (Fig. 15c, f) but only values around 0.92 for approach I (Fig. 15b, e). Finally, two more findings are worth mentioning: first, measurements during wind conditions belonging to category III are evidently difficult to handle for both approaches, as indicated by the comparatively poor ACF values of the fully filtered time series, ranging between 0.87 and 0.89 (Fig. 14h, i). Second, particularly during weak wind conditions (i.e., categories I and III), the bands of good estimates sometimes appear too narrow from a purely visual point of view.
This observation holds for both filter approaches (Fig. 14b, c and h, i). The reason lies in the method used to determine the envelope in connection with the final re-activation of previously discarded good estimates (see Sect. 4.3.2). As a result, some information on the actual variability is lost in the filtered dataset, which may in turn lead to an underestimation of variances, as will be shown in Sect. 5.1.
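The ACF values quoted above summarize how smoothly consecutive radial velocities follow each other: bad estimates scattered over the measurement range decorrelate neighboring samples and pull the ACF down. A minimal sketch of the sample autocorrelation at a given lag is shown below; which lag (and which ordering of the samples) underlies the reported values is not stated in this section, so lag 1 is an assumption, and the function name is hypothetical.

```python
def acf(x, lag=1):
    """Sample autocorrelation of the sequence x at the given lag
    (biased estimator: lagged covariance sum over total variance sum)."""
    n = len(x)
    if not 0 < lag < n:
        raise ValueError("lag must satisfy 0 < lag < len(x)")
    mean = sum(x) / n
    denom = sum((v - mean) ** 2 for v in x)
    num = sum((x[i] - mean) * (x[i + lag] - mean) for i in range(n - lag))
    return num / denom


smooth = [float(i) for i in range(1, 11)]  # smooth ramp, like a clean signal
spiky = [1.0, -1.0] * 5                    # alternating, like scattered outliers
print(round(acf(smooth), 3), round(acf(spiky), 3))  # -> 0.7 -0.9
```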

The knowledge and insights gained from the overview given in this section are important for developing a strategy to implement the filtering techniques for operational use. A strategy for implementing approach I and approach II is described in more detail in Appendix G.

Depending on the filtering technique used, the classification of radial velocities as good or bad estimates can differ considerably. Differently pre-filtered DL measurements can therefore be expected to yield different retrieved turbulence variables. This section aims to demonstrate that, owing to the higher sensitivity of the newly introduced filtering techniques with respect to both the rejection of bad estimates and the acceptance of good estimates, the quality and data availability of DL-based turbulence measurements (e.g., TKE retrieved following Smalikho and Banakh, 2017) can be improved. For DL measurements with a probability of bad estimates close to zero, this retrieval method delivers reasonable results (see Appendix H). Thus, if differently pre-filtered DL data are used as input for the retrieval, large errors in the retrieved TKE can be attributed either to faulty noise filtering that leaves bad estimates in the filtered dataset or to overfiltering that removes too many reliable data points.

5.1 Comparisons with sonic anemometer as an independent reference

To assess the quality of TKE values derived from differently pre-filtered DL measurements, sonic anemometer data from a 99 m tall meteorological mast are used as an independent reference. The measurements were performed with a USA-1 sonic anemometer (Metek GmbH) at a sampling rate of 20 Hz, and the raw data were processed with the EddyPro software (LiCor Inc.). The mast is operated at GM Falkenberg at a distance of about 80 m SSW of the DL system.
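For orientation, the reference quantity is the sonic TKE, i.e., half the sum of the velocity-component variances, e = ½(σu² + σv² + σw²), evaluated over 30 min blocks (36 000 samples at 20 Hz). The sketch below computes only this definition; the EddyPro processing chain (despiking, coordinate rotation, detrending, and other corrections) is not reproduced, and the function names are hypothetical.

```python
from statistics import pvariance


def tke(u, v, w):
    """Turbulence kinetic energy (m^2 s^-2) from wind component samples (m/s)."""
    return 0.5 * (pvariance(u) + pvariance(v) + pvariance(w))


def block_tke(u, v, w, fs_hz=20, block_s=1800):
    """TKE for consecutive, non-overlapping blocks (default: 30 min at 20 Hz).

    Trailing samples that do not fill a complete block are ignored.
    """
    n = fs_hz * block_s
    return [
        tke(u[i:i + n], v[i:i + n], w[i:i + n])
        for i in range(0, len(u) - n + 1, n)
    ]
```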

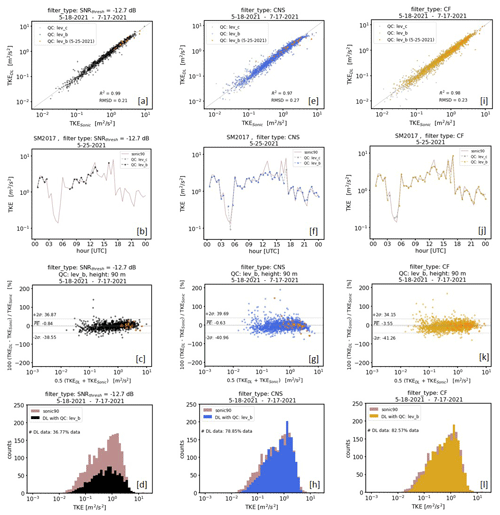

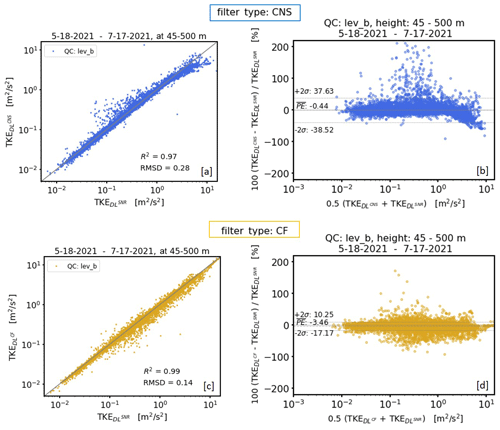

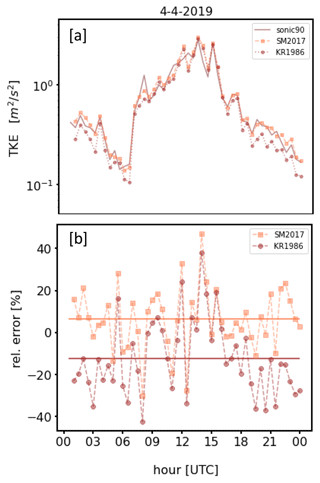

Figure 16. Comparison of Doppler-lidar-based TKE at 95 m height with data from a sonic anemometer mounted at 90 m height on a mast. The measurement period was from 18 May to 17 July 2021. Each column represents the comparison for a different TKE product based on differently pre-filtered input data: SNR-threshold-filtered data with SNRthresh = −12.7 dB (left column), consensus (CNS) filtered data (middle column), and data obtained with a combined filter (CF) application of approach I and approach II (right column). From top to bottom, the panels of each column compare DL and sonic data by means of scatterplots, time series plots (1 d only, 25 May 2021), Bland–Altman plots, and histograms. The abbreviation “QC: lev_x” in the scatterplots (a, e, i) refers to different levels of product quality control (see Sect. 5.1).
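The agreement statistics shown in the scatterplots and Bland–Altman panels of Fig. 16 can be computed along the following lines. This is a sketch under assumptions: R² is taken as the squared Pearson correlation, differences are DL minus sonic, the limits of agreement are bias ± 1.96 standard deviations (the usual Bland–Altman convention), 30 min intervals missing in either dataset are skipped, and the function name is hypothetical.

```python
import math
from statistics import fmean, stdev


def agreement_stats(tke_dl, tke_sonic):
    """R^2, RMSD and Bland-Altman bias/limits for paired 30 min TKE values.

    Pairs where either value is None (no retrieval or failed QC) are skipped.
    """
    pairs = [(d, s) for d, s in zip(tke_dl, tke_sonic) if d is not None and s is not None]
    if len(pairs) < 2:
        raise ValueError("need at least two valid pairs")
    d = [p[0] for p in pairs]
    s = [p[1] for p in pairs]
    md, ms = fmean(d), fmean(s)
    sxy = sum((a - md) * (b - ms) for a, b in pairs)
    sxx = sum((b - ms) ** 2 for b in s)
    syy = sum((a - md) ** 2 for a in d)
    diffs = [a - b for a, b in pairs]  # DL minus sonic
    bias, sd = fmean(diffs), stdev(diffs)
    return {
        "n": len(pairs),
        "r2": sxy ** 2 / (sxx * syy),
        "rmsd": math.sqrt(fmean([x ** 2 for x in diffs])),
        "bias": bias,
        "loa": (bias - 1.96 * sd, bias + 1.96 * sd),
    }
```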

Results of an intercomparison of TKE retrievals based on differently pre-filtered DL data with sonic TKE measurements are summarized in Fig. 16. Three cases are analyzed: TKE retrievals calculated from (i) SNR-threshold-filtered input data using SNRthresh = −12.7 dB (Fig. 16a–d), (ii) CNS-filtered data (Fig. 16e–h), and (iii) CF-filtered data (Fig. 16i–l). Here CF (combined filter) stands for a combined application of approach I and approach II, which is needed because both type A and type B noise occur in the DL78 measurements (see Sect. 2.1–2.2 and Appendix G). For each case, an additional distinction is made between TKE products subject to different quality control (QC) steps. The minimum requirement for a TKE value representing a 30 min mean is that its retrieval is based on more than 60 % reliable Vr measurements within the measurement interval (lev_c hereafter). Since the TKE reconstruction method relies on a variety of theoretical assumptions (e.g., see Eq. 22 in Smalikho and Banakh, 2017), a further QC step checks whether these assumptions are fulfilled (lev_b hereafter). To obtain meaningful results, the evaluations are based on a 2-month dataset of DL measurements at GM Falkenberg which were collected during () from 18 May to 17 July 2021.
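The lev_c criterion and the data availability reported in the histograms both reduce to simple fractions. A minimal sketch, assuming each 30 min interval is represented by a list of per-measurement good/bad flags from the noise filter; interpreting the reported availability as the percentage of 30 min intervals that yield a TKE value is an assumption, and the function names are hypothetical.

```python
def lev_c_flags(good_flags_per_interval, min_fraction=0.6):
    """True for intervals in which more than min_fraction of the Vr
    measurements were classified as good estimates (lev_c criterion)."""
    flags = []
    for good in good_flags_per_interval:
        fraction = sum(good) / len(good) if good else 0.0
        flags.append(fraction > min_fraction)
    return flags


def availability_percent(interval_flags):
    """Percentage of 30 min intervals that yield a TKE value."""
    return 100.0 * sum(interval_flags) / len(interval_flags)
```

Note the strict inequality: an interval with exactly 60 % good estimates does not pass, matching "more than 60 %" in the text.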

Considering the Doppler lidar TKE retrieval based on SNR-threshold pre-filtering, the problems that arise in connection with routine turbulence measurements (see Sect. 1) can be quantified here. Although good data quality is achieved compared to the sonic measurements (R² = 0.99 and RMSD = 0.21; Fig. 16a), data availability is very low (36.77 %; Fig. 16d). TKE values based on CF pre-filtered DL data have almost comparable data quality (R² = 0.98 and RMSD = 0.23; Fig. 16i) but additionally achieve a significantly higher data availability (82.57 %; Fig. 16l). TKE results based on CNS pre-filtered DL data are also characterized by a higher data availability (78.85 %; Fig. 16h) but have poorer data quality (R² = 0.97 and RMSD = 0.27; Fig. 16e). To explain the latter, the time series comparisons between DL and sonic data displayed in Fig. 16b and f for the example day 25 May 2021 are helpful. This day is typical, with noise-contaminated DL measurements throughout the day but a noticeably higher density of bad estimates in the early morning between 04:00 and 07:00 UTC and in the afternoon from 16:00 UTC onwards (see Fig. H1, 2 in Appendix H). Note that during these time intervals no reliable TKE values are available if SNR-threshold pre-filtering is used. In contrast, with CNS pre-filtered data, retrieved TKE values are available for these periods, but they are partly erroneous when compared with sonic data. For instance, the DL-based TKE values obtained at 04:30 and 16:00 UTC show a pronounced overestimation and underestimation, respectively, compared with the sonic-based TKE. A closer look at the DL radial velocity measurements from which these 30 min TKE values were retrieved readily explains this overestimation and underestimation (see Fig. 5b, d).
For the measurement period between 04:00 and 04:30 UTC, the prescribed search interval ΔVr = 3 m s−1 was too large, so that bad estimates remained in the dataset and introduced an additional variance contribution, yielding an overestimation of the TKE. For the measurement period between 15:30 and 16:00 UTC, the value of ΔVr was too small, so that relevant features of the wind field were not captured. As a consequence, the dataset and thus the derived variances are not representative of the 30 min measurement interval, yielding an underestimation of the TKE. These examples clearly show the weaknesses of the CNS filtering technique if the ΔVr search interval is inadequately selected. With a fixed ΔVr during routine measurements, such situations occur quite frequently, which explains why the TKE values retrieved from CNS pre-filtered DL data are of lower quality than those retrieved from SNR-threshold pre-filtered DL data. Note that the newly introduced CF filtering technique avoids the issues described above and thus yields more reliable TKE values (Fig. 16j, l).
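The sensitivity to ΔVr can be illustrated with a generic consensus sketch: among a set of radial velocities (for instance, from one azimuth direction), keep those that fall into the ΔVr-wide window containing the largest number of values. How the CNS filter used here groups the data is not described in this section, so the grouping, the function name, and the example values (4.0 to 7.0 m s−1 as good estimates of a changing wind, the rest as bad estimates) are hypothetical.

```python
def consensus(values, delta_vr):
    """Return the values inside the delta_vr-wide window holding the most values.

    Ties are resolved in favor of the window with the smallest lower bound.
    """
    s = sorted(values)
    if not s:
        return []
    best_i, best_j = 0, 0
    j = 0
    for i in range(len(s)):
        j = max(j, i)
        # Extend the window [s[i], s[i] + delta_vr] as far as possible.
        while j + 1 < len(s) and s[j + 1] - s[i] <= delta_vr:
            j += 1
        if j - i > best_j - best_i:
            best_i, best_j = i, j
    lo, hi = s[best_i], s[best_j]
    return [v for v in values if lo <= v <= hi]


vr = [4.0, -10.0, 4.8, 5.5, 1.5, 6.2, 7.0, 15.0]
for dv in (1.0, 3.0, 12.0):
    print(dv, consensus(vr, dv))
# 1.0 [4.0, 4.8]                        too small: variability is lost
# 3.0 [4.0, 4.8, 5.5, 6.2, 7.0]
# 12.0 [4.0, 4.8, 5.5, 1.5, 6.2, 7.0]   too large: a bad estimate is kept
```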

An additional note concerns the high sonic TKE value of ∼7 m² s−2 at 16:00 UTC (Fig. 16f). This value is likely caused by a nonstationary wind field during the 30 min measuring interval rather than by eddies in the turbulent flow, and it should therefore be flagged as unreliable. The problem, however, is that the CNS filtering technique would reject the wind information characterizing this nonstationarity, so that such nonstationarity could not be identified.
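One common way to flag such intervals, not used in this section and named here only as an illustration, is a steady-state test in the spirit of Foken and Wichura as used in eddy-covariance quality control: the variance over the full 30 min interval is compared with the mean of the variances over six 5 min subintervals, and the interval is considered nonstationary if the two differ by more than 30 %. Such a test needs the nonstationary signal to be present in the data, which is exactly what CNS filtering would remove. A minimal sketch for a single velocity component, with hypothetical function names:

```python
from statistics import fmean, pvariance


def nonstationarity_ratio(x, n_sub=6):
    """Relative difference between the mean subinterval variance and the
    full-interval variance (6 subintervals = 5 min each for 30 min)."""
    m = len(x) // n_sub
    if m < 2:
        raise ValueError("too few samples per subinterval")
    sub_var = [pvariance(x[k * m:(k + 1) * m]) for k in range(n_sub)]
    full_var = pvariance(x)
    if full_var == 0:
        return 0.0  # constant signal: treat as stationary
    return abs(fmean(sub_var) - full_var) / full_var


def is_nonstationary(x, n_sub=6, threshold=0.3):
    """Flag an interval whose variance ratio exceeds the threshold."""
    return nonstationarity_ratio(x, n_sub) > threshold
```

A step change in the mean wind within the interval, for example, inflates the full-interval variance while the subinterval variances stay small, so the ratio becomes large.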