This work is distributed under the Creative Commons Attribution 4.0 License.

Estimation of biogenic volatile organic compound (BVOC) emissions in forest ecosystems using drone-based lidar, photogrammetry, and image recognition technologies

Xianzhong Duan

Guotong Wu

Suping Situ

Shengjie Zhu

Qi Zhang

Yibo Huangfu

Weiwen Wang

Weihua Chen

Xuemei Wang

Biogenic volatile organic compounds (BVOCs) strongly affect atmospheric chemistry and mediate ecological interactions among organisms, and they therefore play a significant role in the atmosphere–ecosystem relationship. However, traditional field observation methods struggle to estimate BVOC emissions accurately in highly biodiverse forest ecosystems, which leads to significant uncertainty in quantifying these compounds. To address this issue, this research proposes a workflow that uses drone-mounted lidar and photogrammetry to identify plant species and thereby obtain accurate BVOC emission data. Applying this workflow to a typical subtropical forest plot yielded the following findings. The drone-mounted lidar and photogrammetry modules effectively segmented individual trees and acquired the structure and images of each tree. Image recognition identified tree species relatively accurately, with Euphorbiaceae being the most frequent family. In the study plot, the Myrtaceae family had the largest cumulative isoprene emissions, while the Rubiaceae family had the largest cumulative monoterpene emissions. To take full advantage of BVOC emission estimates made directly at the individual-tree level, the research community may need to establish more comprehensive tree species emission databases and models.

Biogenic volatile organic compounds (BVOCs) are the medium through which plants communicate and perform a wide range of ecological functions (Laothawornkitkul et al., 2009). BVOCs are involved in plant growth, reproduction, and defense (Peñuelas and Staudt, 2010). Plants respond to herbivore feeding by emitting BVOCs that attract the herbivores' potential predators or act as repellents (Kegge and Pierik, 2010). Communication between plants is also based on BVOCs (Šimpraga et al., 2016). For example, plants that are being chewed emit BVOCs that induce neighboring, unattacked plants to produce defensive substances (Dicke and Baldwin, 2010). In addition, plants use BVOC components to attract pollinators to their flowers (Loreto et al., 2014). For the plants themselves, under heat waves or high ozone concentrations, BVOCs appear to reduce oxidative stress and other stresses caused by the complex abiotic urban environment (Ghirardo et al., 2016; Chen et al., 2018).

BVOCs emitted into the atmosphere by vegetation also have significant impacts on other organisms and on atmospheric chemistry and physics (Peñuelas and Staudt, 2010). BVOCs account for about 90 % of VOC emissions and are considered the fuel that drives atmospheric chemical processes and a key component of the atmosphere (Heald and Kroll, 2020). BVOC species are highly reactive, with atmospheric lifetimes usually ranging from only a few minutes to a few hours (Mellouki et al., 2015; Canaval et al., 2020). BVOC emissions contribute about 90 % of global secondary organic aerosol (SOA) formation, making them the main source of global atmospheric SOA (Henze et al., 2008). In addition, BVOCs contribute about 10 %–30 % of surface ozone in urban areas (Ran et al., 2011; Tsimpidi et al., 2012; Wu et al., 2020; Chen et al., 2022).

However, BVOC emission estimates carry considerable uncertainty (about 90 %–120 %), which limits our understanding of the atmospheric and ecological effects of BVOCs (Situ et al., 2014; Wang et al., 2021). This is especially true for forests, the ecosystems with the highest biodiversity: forest vegetation is considered the main contributor to BVOC emissions, accounting for more than 70 % of the global total, yet the uncertainty in estimating its emissions is the largest (Hartley et al., 2017). This uncertainty has two sources: the lack of field observations and the simplifications in numerical simulations. BVOC emissions are measured with different methods at different scales. At the leaf and plant scale, researchers have used confined chambers and various improved chamber methods (open-top chamber, free-air concentration enrichment, etc.) to conduct many observational studies of BVOC emissions from leaves, branches, and whole trees, and have built databases of single-tree BVOC component emissions (Isidorov et al., 1990; Komenda and Koppmann, 2002; Baghi et al., 2012; Curtis et al., 2014). Existing databases of BVOC emission potentials include seBVOC (Steinbrecher et al., 2009), the tree BVOC index (Simpson and McPherson, 2011), MEGAN (Guenther et al., 2012), and other general inventories (e.g., http://itreetools.org/, last access: 27 June 2024; http://www.es.lancs.ac.uk/cnhgroup/, last access: 27 June 2024). These studies mainly quantify the BVOC emission rates of specific tree species, which helps in understanding the processes and factors that affect BVOC emissions. At the forest landscape and canopy scale, flux towers are generally established at specific forest sites to observe BVOC emissions from the entire vegetation canopy (Sarkar et al., 2020). This method is relatively reliable and widely used and can estimate canopy emission fluxes within a few hundred meters of the tower. Closed-chamber and flux tower observations can be used to calculate BVOC emission fluxes indirectly in ecosystems with low biodiversity, but in highly biodiverse ecosystems such as tropical rainforests, it is difficult to characterize all species this way.

To bypass detailed species surveys of ecosystems, researchers have used aerial surveys and satellite remote sensing to invert BVOC emission fluxes indirectly at ecosystem and regional scales (Batista et al., 2019). However, the accuracy of such inversions is relatively low, and significant errors remain. Likewise, because of the chemical diversity of BVOCs, the diversity of emitting tree species, and the many environmental factors that influence the emission process, accurately simulating BVOC emissions with numerical models is a significant challenge. Existing numerical models (for example, BEIS, the Biogenic Emission Inventory System; g95, the first global model used to estimate BVOC emissions; MEGAN; and BEM, boundary element method) mainly use land use, leaf biomass, emission factors, and meteorological variables to estimate BVOC emissions from vegetation (Wang et al., 2016; Chen et al., 2022). The key source of uncertainty in these estimates is the inaccurate parameterization and characterization of land use types, forest tree species composition, and leaf biomass in the models. Recent studies have found significant spatial heterogeneity of BVOCs at the sub-forest scale (e.g., over hundreds of meters on mountain slopes) (Li et al., 2021). Because of differences in the distribution of forest tree species, BVOC emissions are more complex than commonly assumed in biosphere emission models. Overall, accurately characterizing the spatial distribution of emission factors is a scientific challenge that must be overcome to quantify the spatial distribution of BVOC emissions accurately.

In recent years, consumer-grade unoccupied aerial vehicle (UAV) platforms, lidar measurement technology, and computer image recognition technology have developed rapidly. Rapid sampling with UAVs carrying measuring instruments has gradually matured, and UAV positioning accuracy can reach the centimeter level. Even in areas such as forest reserves, survey routes can be planned around suitable forest gaps. UAVs equipped with sensors that measure atmospheric components have also begun to emerge (Villa et al., 2016). Many researchers have installed sensors on drone platforms for low-cost, flexible measurement of VOCs, black carbon (BC), ozone, aerosol particles, etc. (Brosy et al., 2017; Rüdiger et al., 2018; Shakhatreh et al., 2019; Li et al., 2021; Wu et al., 2021). Drone-mounted cameras can also obtain very high-resolution and even multispectral images (Nebiker et al., 2008; Villa et al., 2016; Dash et al., 2017). At the same time, the miniaturization of lidar has made it possible for UAVs to carry these instruments (Zhao et al., 2016). Compared with other measurement methods, lidar offers very high accuracy and can characterize the canopy structure of each tree within its range by acquiring point clouds (Li et al., 2012; Jin et al., 2021). Combining laser scanning with imaging spectroscopy has greatly enriched the characterization of forest community structure and of morphological and physiological forest traits (Schneider et al., 2017).

Computer image recognition has driven rapid progress in plant species recognition (Fassnacht et al., 2016; Cheng et al., 2023). Typically, machine learning and deep learning methods draw on plant image libraries to train machine vision models, which are then applied to high-resolution multispectral remote sensing images and laser point clouds to obtain accurate plant population and species results (Sylvain et al., 2019). Several vegetation species classifiers are currently in use: logistic regression, linear discriminant analysis, random forest, support vector machine, k-nearest neighbor (kNN), and 2D or 3D convolutional neural networks (CNNs) (Michałowska and Rapiński, 2021). As these technologies and their training databases have matured, various communities have released open-source, shared, and API-callable (application programming interface) recognition apps and platforms for the public. Users need only upload photos to obtain a recognition result, and the reported accuracy is quite good. Open-source recognition tools for lidar data have also developed rapidly. Species classification based on lidar-derived structural features (height, intensity, or a combination of both) can reach accuracies of 87 %–92 % (You et al., 2020). Many publications have shown that combining lidar data with multispectral or hyperspectral images yields higher species classification accuracy than lidar data alone (Michałowska and Rapiński, 2021).
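As an illustration of the simplest classifier in this list, the sketch below implements k-nearest-neighbor voting in plain Python. It assumes that per-tree lidar features (here, hypothetical normalized height and mean intensity values) have already been extracted and scaled to comparable ranges; the species labels are placeholders, not results from this study.

```python
import math
from collections import Counter


def knn_predict(train, query, k=3):
    """Classify `query` by majority vote among its k nearest training samples.

    train: list of (feature_tuple, label) pairs; query: feature_tuple.
    Features are assumed to be pre-scaled to comparable ranges.
    """
    # Rank training samples by Euclidean distance to the query.
    nearest = sorted(train, key=lambda sample: math.dist(sample[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    # On a tie, most_common keeps first-seen order, i.e. favors the nearer label.
    return votes.most_common(1)[0][0]


# Hypothetical (normalized height, normalized intensity) features per tree.
train = [
    ((0.90, 0.20), "species_A"), ((0.85, 0.25), "species_A"), ((0.80, 0.30), "species_A"),
    ((0.30, 0.80), "species_B"), ((0.35, 0.75), "species_B"), ((0.25, 0.85), "species_B"),
]
print(knn_predict(train, (0.82, 0.28)))  # species_A
```

In practice, k and the feature set would need tuning against field-verified trees; kNN is shown only because it is the easiest of the listed classifiers to read.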

Therefore, this research aims to establish a technical framework that combines drone-borne lidar and photogrammetry with community-developed image recognition technologies to identify plant species and thereby obtain accurate BVOC emissions. We expect that combining accurate lidar characterization of the forest canopy, the emerging technology for accurate tree species identification, and tree species emission factor databases built from long-term surveys can open a new way to quantify biogenic emissions accurately.

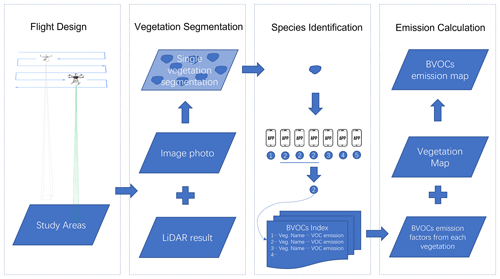

2.1 Description of the workflow

The workflow comprises four steps (Fig. 1): first, selecting drones equipped with lidar and high-resolution cameras; second, interpreting the photogrammetry results; third, submitting the image of each tree to API-callable plant species identification platforms and matching the identified species against a single-tree-species BVOC emission factor database; and fourth, calculating the BVOC emissions of the study area from the matching results and emission factors.

2.2 Study area

The study area is the coniferous–broadleaved mixed forest of the Dinghushan Forest Ecosystem Research Station of the Chinese Ecosystem Research Network (CERN). The Dinghushan station lies in the southern subtropical zone and has a subtropical–tropical monsoon climate with distinct winter and summer seasons. The average annual temperature is 20.9 °C, the average annual rainfall is 1900 mm, the annual solar radiation is about 4665 , the average annual sunshine duration is 1433 h, the average annual evaporation is 1115 mm, and the long-term average relative humidity is 82 %. The plot lies near the Tropic of Cancer at an elevation of 300–350 m, on a south-facing slope of about 25–30°. The soil is lateritic red soil, about 30 to 90 cm deep. Tree species in this plot have been surveyed on site over the long term, which facilitates comparison with our results. The Dinghushan forest contains 260 families, 864 genera, and 1740 species, as well as 349 species of cultivated plants. In addition, Li et al. (2021) used drones equipped with online mass spectrometers at the Dinghushan station to observe VOC composition; comparing their results with ours may help explore the influence of tree species on the spatial heterogeneity of VOCs.

2.3 Flight equipment and instruments

The main UAV platform in this technical framework is the DJI® Matrice 600 Pro, a general-purpose platform that can carry various sensors. We mounted the GreenValley® LiAir V lidar scanning system on this platform; the system includes an integrated navigation system composed of a global navigation satellite system (GNSS) receiver, an inertial measurement unit (IMU), and attitude calculation software.

At the same time, we used a DJI® Phantom 3 Professional UAV to acquire visible-light images. Its camera is an FC300X (RGB) with a 1/2.3 in. CMOS image sensor (6.16×4.62 mm) and 12.4 million effective pixels (12.76 million total pixels). According to the image metadata, the camera parameters used in this work were an aperture value of , maximum aperture of 2, exposure time of s, ISO speed of 100, and focal length of 4 mm.

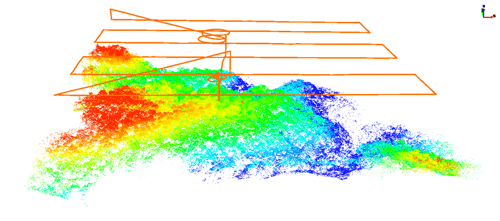

We used DJI® pilot software to design the flight routes and guide the UAVs. Both UAVs flew the same route so that they covered the same measurement area. In forest areas, dense tree layers make takeoff and landing risky, so a suitable landing site is usually needed. We typically chose a site in a forest gap, such as a tomb, a ridge, or other naturally bare ground. At the beginning of takeoff, we flew the UAV manually to avoid trees near the forest gap, reducing the risk of a crash while completing the inertial alignment of the IMU. Once the UAV reached the specified altitude, it switched to automatic flight (Fig. 2).

2.4 Lidar-based tree segmentation and canopy structure calculation

This part of the work uses GreenValley® LiDAR360 and Esri® ArcGIS software. First, the laser point clouds are georeferenced and stitched together, and points deviating by more than 5 times the standard deviation are removed as noise. Next, the improved progressive triangulated irregular network (TIN) densification (IPTD) algorithm is used to separate ground points (Zhao et al., 2016). On this basis, a digital elevation model is generated by the inverse distance weighting (IDW) method (Ismail et al., 2016).
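The denoising and interpolation steps can be sketched in plain Python as follows. LiDAR360's internal settings are not documented here beyond the 5-standard-deviation threshold, so this sketch assumes a common neighborhood-based statistical outlier filter (mean distance to k = 8 nearest neighbors, an assumed value) and IDW with power 2 (also an assumption). The brute-force neighbor search is O(n²) and only suitable for small illustrative clouds.

```python
import math
import statistics


def remove_outliers(points, k=8, n_sigma=5.0):
    """Drop points whose mean distance to their k nearest neighbors exceeds
    the cloud-wide mean of that statistic by more than n_sigma standard deviations."""
    mean_dists = []
    for i, p in enumerate(points):
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        nearest = dists[:k]
        mean_dists.append(sum(nearest) / len(nearest))
    limit = statistics.fmean(mean_dists) + n_sigma * statistics.pstdev(mean_dists)
    return [p for p, d in zip(points, mean_dists) if d <= limit]


def idw_elevation(ground, x, y, power=2.0, eps=1e-12):
    """Inverse-distance-weighted elevation at (x, y) from ground points (gx, gy, gz)."""
    num = den = 0.0
    for gx, gy, gz in ground:
        d = math.hypot(x - gx, y - gy)
        if d < eps:
            return gz  # the query lies on a ground point
        w = 1.0 / d ** power
        num += w * gz
        den += w
    return num / den


# Toy example: a flat 10 m x 10 m grid of returns plus one spurious point 50 m up.
cloud = [(float(x), float(y), 0.0) for x in range(10) for y in range(10)]
cloud.append((5.0, 5.0, 50.0))
clean = remove_outliers(cloud)
print(len(cloud), "->", len(clean))  # 101 -> 100
print(idw_elevation([(0.0, 0.0, 10.0), (2.0, 0.0, 20.0)], 1.0, 0.0))  # 15.0
```

A DEM would be produced by evaluating `idw_elevation` at every grid cell center using only the classified ground points; production tools use spatial indexes and search radii instead of looping over all points.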

Single-tree features are extracted from the lidar data with the layer-stacking algorithm (Ayrey et al., 2017). Seed points in the laser point cloud are located according to the layer heights of different trees, the cloud is segmented from these seeds, and the boundary of each tree is obtained. In principle, the algorithm first obtains a seed point for each tree and then finds its watershed (Li et al., 2012). On this basis, the default calculation module of LiDAR360 is used to obtain the structural parameters of each tree crown, such as canopy height and crown radius (Ma et al., 2017). In parallel, we mosaic the airborne visible-light images into a single raster. Using ArcGIS overlay analysis, this raster is clipped with the individual-tree boundaries to obtain a raster for each tree. The parameter settings for airborne image processing are listed in Table 1. The raster of each tree is then submitted to different apps to obtain plant species identification results.
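The seed-then-watershed principle can be illustrated on a rasterized canopy height model (CHM). The sketch below is not the layer-stacking algorithm used in LiDAR360; it is a simpler, swapped-in marker-controlled watershed in plain Python, assuming a CHM grid, a hypothetical 2 m minimum tree height, and strict 3×3 local maxima as seeds. Crown radius is taken as the radius of a circle with the same area as the segmented crown, which is an assumed definition.

```python
import heapq
import math


def find_seeds(chm, min_height=2.0):
    """Seed points: cells at least min_height tall and strictly higher than all 3x3 neighbors."""
    rows, cols = len(chm), len(chm[0])
    seeds = []
    for r in range(rows):
        for c in range(cols):
            h = chm[r][c]
            if h < min_height:
                continue
            neighbors = [chm[rr][cc]
                         for rr in range(max(r - 1, 0), min(r + 2, rows))
                         for cc in range(max(c - 1, 0), min(c + 2, cols))
                         if (rr, cc) != (r, c)]
            if all(h > n for n in neighbors):
                seeds.append((r, c))
    return seeds


def watershed_crowns(chm, seeds, min_height=2.0):
    """Flood outward from the seeds, highest cells first; each cell joins the crown that reaches it first."""
    rows, cols = len(chm), len(chm[0])
    labels = [[0] * cols for _ in range(rows)]
    heap = []
    for label, (r, c) in enumerate(seeds, start=1):
        labels[r][c] = label
        heapq.heappush(heap, (-chm[r][c], r, c))
    while heap:
        _, r, c = heapq.heappop(heap)
        for rr, cc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
            if (0 <= rr < rows and 0 <= cc < cols and labels[rr][cc] == 0
                    and chm[rr][cc] >= min_height):
                labels[rr][cc] = labels[r][c]
                heapq.heappush(heap, (-chm[rr][cc], rr, cc))
    return labels


def crown_metrics(chm, labels, cell_size=1.0):
    """Per-crown (tree height, equal-area crown radius) from the labeled CHM."""
    cells = {}
    for r, row in enumerate(labels):
        for c, label in enumerate(row):
            if label:
                cells.setdefault(label, []).append(chm[r][c])
    return {label: (max(hs), math.sqrt(len(hs) * cell_size ** 2 / math.pi))
            for label, hs in cells.items()}


chm = [
    [1, 4, 1, 1, 5, 1],
    [4, 9, 4, 3, 7, 5],
    [1, 4, 1, 1, 5, 1],
]
seeds = find_seeds(chm)
labels = watershed_crowns(chm, seeds)
print(seeds)      # [(1, 1), (1, 4)]
print(labels[1])  # [1, 1, 1, 2, 2, 2]
print(crown_metrics(chm, labels))
```

Each labeled region plays the role of an individual-tree boundary; clipping the visible-light raster with such a mask is what yields one image per tree.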

2.5 Vegetation identification

As new-generation plant recognition algorithms based on deep learning continue to improve, more and more plant recognition apps and platforms are appearing (Irimia et al., 2020; Otter et al., 2021). They accept plant images from mobile phones or through an application programming interface (API) open to researchers. There are also many open-source deep-learning models and datasets that allow researchers to submit visible-light images and obtain recognition results (Ma et al., 2019; Zhanhui et al., 2020).

The apps and platforms listed in Table 2 were used in this study to identify the visible-light image of each tree after point cloud segmentation. They are usually trained on a national or international database of plant classification images. For example, AiPlants® is based on the database of the Plant Photo Bank of China (PPBC) (Zhanhui et al., 2020). With the rise of cloud computing services, several platforms now offer their own calling interfaces, such as the Aliyun® general image recognition service (GIRS), the Amazon® Rekognition service, and the Baidu® PaddlePaddle platform, and their identification results can be obtained by submitting a simple script (Jin, 2017). However, because their training sets differ, plant recognition accuracy varies among apps. It is currently unclear whether these simple retrieval methods are reliable, accurate, and portable enough to support investigations of plant emissions. To safeguard recognition accuracy, we adopted a simple consensus rule: we compare the results from all platforms, and a recognition result for a given image is accepted if two or more platforms return the same result.
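A minimal sketch of this acceptance rule is shown below, assuming each platform's answer has already been normalized to an accepted scientific name. The platform keys are hypothetical placeholders, and rejecting images on which two different species tie for the top count is our assumption, since the rule above does not address that case.

```python
from collections import Counter


def consensus_species(results, min_agree=2):
    """Accept a species only if at least `min_agree` platforms return it.

    results maps a platform name to its predicted species name (None if
    the platform could not recognize the image). If two different species
    reach the same top count, the image is treated as unresolved.
    """
    counts = Counter(name for name in results.values() if name)
    if not counts:
        return None
    ranked = counts.most_common()
    top_name, top_count = ranked[0]
    if top_count < min_agree:
        return None
    if len(ranked) > 1 and ranked[1][1] == top_count:
        return None  # tie between two species (an assumed rule)
    return top_name


print(consensus_species({"platform_a": "Aidia canthioides",
                         "platform_b": "Aidia canthioides",
                         "platform_c": None}))  # Aidia canthioides
print(consensus_species({"platform_a": "Macaranga sampsonii",
                         "platform_b": "Blastus cochinchinensis",
                         "platform_c": None}))  # None
```

Trees for which the function returns `None` correspond to the unrecognized trees that remain a source of uncertainty in the emission estimate.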

Table 2. Plant identification apps and platforms used in this study (sources: Zhanhui et al., 2020; Jin, 2017; Ma et al., 2019; Kumar et al., 2012; Joly et al., 2016; Otter et al., 2021; Mäyrä et al., 2021).

2.6 BVOC emission factor and emission calculation

The calculations in this study are based on the database of detailed BVOC emission factors (EFs) for tree species provided by MEGAN3.2, which contains EF libraries for more than 40 000 tree species (Guenther et al., 2018). Once a tree's species has been identified as described in Sect. 2.5, its BVOC EF is obtained by table lookup.

For tree species not contained in the EF library, we obtain BVOC emission factors from the literature (Chen et al., 2022; Mu et al., 2022). For tree species that cannot be found even in the literature, we substitute plants from the same family. Although observational experiments have detected many types of BVOCs, emissions are generally dominated by isoprene and monoterpenes (Li et al., 2021). Our study therefore focuses on these two groups and characterizes the distribution of emissions using genus-specific average emission factors.
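The lookup-with-fallback logic described above can be sketched as follows, with one call per compound class (isoprene or monoterpenes). The table contents and species names are hypothetical placeholders, and averaging all same-family entries is an assumed way of choosing the substitute, since the paragraph above does not specify how a same-family plant is selected.

```python
from statistics import fmean


def lookup_ef(species, family, megan_ef, literature_ef, family_of):
    """Return (emission factor, source) for one compound class.

    Lookup order: the MEGAN table first, then literature values, then a
    same-family substitute (here, the mean of all same-family entries).
    """
    for table, source in ((megan_ef, "MEGAN"), (literature_ef, "literature")):
        if species in table:
            return table[species], source
    relatives = [ef for table in (megan_ef, literature_ef)
                 for name, ef in table.items() if family_of.get(name) == family]
    if relatives:
        return fmean(relatives), "family mean"
    return None, "missing"


# Hypothetical tables (placeholder names and values, not real emission factors).
megan_ef = {"Species A": 10.0}
literature_ef = {"Species B": 4.0, "Species C": 6.0}
family_of = {"Species A": "Family 1", "Species B": "Family 2", "Species C": "Family 2"}
print(lookup_ef("Species D", "Family 2", megan_ef, literature_ef, family_of))
# (5.0, 'family mean')
```

Keeping the `source` tag for every tree makes it easy to report how much of the plot-level estimate rests on substituted rather than species-specific factors.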

Because the images used to identify tree species were acquired at a single time, we only attempt to calculate the maximum and minimum emissions of the forest in the sample plot. Each tree's emission is calculated by multiplying the emission factor of its species by its biomass and by its crown area derived from the crown diameter.
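Read literally, this calculation multiplies the emission factor by biomass and by crown area, with crown area A = π(d/2)² for crown diameter d. The sketch below sums per-family minimum and maximum emissions under that reading; units, the biomass estimate, and the source of the minimum and maximum emission factors (here the hypothetical keys `ef_min` and `ef_max`) are assumptions, because they are not specified here.

```python
import math
from collections import defaultdict


def tree_emission(ef, biomass, crown_diameter):
    """Emission of one tree: EF x biomass x crown area, with crown area = pi * (d / 2) ** 2."""
    return ef * biomass * math.pi * (crown_diameter / 2) ** 2


def family_totals(trees):
    """Sum (minimum, maximum) emissions per family.

    Each tree is a dict with the hypothetical keys family, ef_min, ef_max,
    biomass and crown_diameter.
    """
    totals = defaultdict(lambda: [0.0, 0.0])
    for tree in trees:
        low = tree_emission(tree["ef_min"], tree["biomass"], tree["crown_diameter"])
        high = tree_emission(tree["ef_max"], tree["biomass"], tree["crown_diameter"])
        totals[tree["family"]][0] += low
        totals[tree["family"]][1] += high
    return {family: tuple(bounds) for family, bounds in totals.items()}


# Two placeholder trees of one family (illustrative numbers only).
trees = [
    {"family": "Family 1", "ef_min": 1.0, "ef_max": 2.0, "biomass": 1.0, "crown_diameter": 2.0},
    {"family": "Family 1", "ef_min": 1.0, "ef_max": 2.0, "biomass": 1.0, "crown_diameter": 2.0},
]
print(family_totals(trees))
```

Summing per family in this way mirrors the family-level maxima and minima reported in Table 4, although the exact aggregation used for that table is not described here.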

3.1 The morphological composition of the vegetation

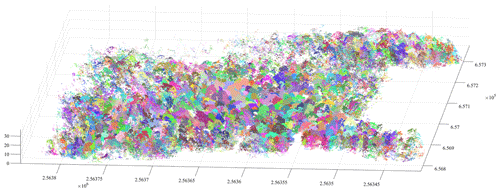

Lidar point clouds yield more accurate tree morphological characteristics. We then segmented individual trees; the point cloud of each tree is shown in Fig. 3. Owing to the terrain, point density at the edge of the plot is much lower than in the center, which may increase the uncertainty of individual-tree segmentation there.

Figure 3. Point cloud of each individual tree obtained with the layer-stacking algorithm, with topography removed.

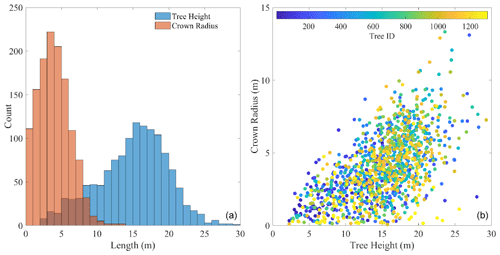

Single-tree segmentation identified 1291 trees in the sample plot. Figure 4 shows the overall distribution of the morphological parameters and the relationship between tree height and crown diameter for each tree. The measured tree heights follow a skewed GaussAmp distribution ranging from 2 to 30 m, with a mean of 14.9 m. Crown radius follows a lognormal distribution with a mean of about 4 m.
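Because the per-tree crown radii are not reproduced here, the sketch below uses toy values to show how a lognormal description like the one above could be checked: the maximum-likelihood parameters of a lognormal are the mean and the population standard deviation of the log-transformed radii, and the implied arithmetic mean is exp(μ + σ²/2).

```python
import math
import statistics


def fit_lognormal(values):
    """Maximum-likelihood lognormal parameters (mu, sigma) for positive samples."""
    logs = [math.log(v) for v in values]
    # The MLE of sigma uses the population (1/n) variance of the logs.
    return statistics.fmean(logs), statistics.pstdev(logs)


def lognormal_mean(mu, sigma):
    """Arithmetic mean implied by lognormal parameters."""
    return math.exp(mu + sigma ** 2 / 2)


radii = [2.1, 3.0, 3.4, 3.9, 4.2, 4.8, 5.5, 7.3]  # toy crown radii in m, not study data
mu, sigma = fit_lognormal(radii)
print(f"mu={mu:.3f}, sigma={sigma:.3f}, implied mean={lognormal_mean(mu, sigma):.2f} m")
```

Comparing the implied mean with the sample mean, or the fitted density with the histogram in Fig. 4, is a quick consistency check on the distributional claim.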

3.2 The composition of vegetation species

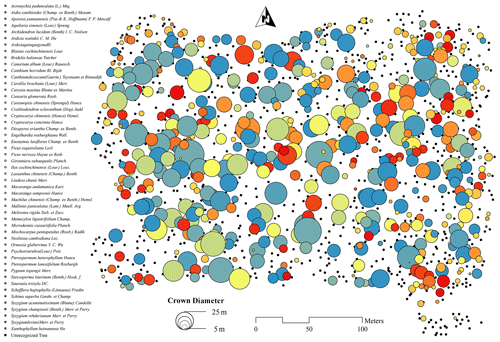

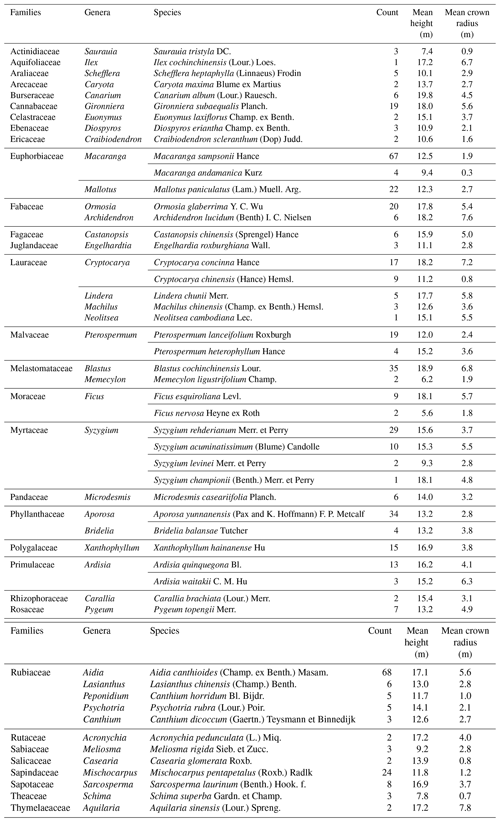

The plant identification apps were called to identify the tree species of the segmented trees. The spatial distribution and frequency of tree species are shown in Fig. 5 and Table 3. Some species are scattered across the plot, while others are clustered. The three most frequent species are Aidia canthioides (Champ. ex Benth.) Masam., Macaranga sampsonii Hance, and Blastus cochinchinensis Lour., with proportions of about 12 %, 11 %, and 6 %, respectively, while the most frequent family is Euphorbiaceae. Other identified species are also listed in Table 3. Together with the canopy morphology distribution, these results show that the plot has clear coniferous–broadleaved mixed-forest characteristics, with coniferous or broadleaved trees as the dominant species. Fig. 5 also shows that many trees could not be recognized.

3.3 The BVOC emission at the family and individual scale

The isoprene and monoterpene emissions obtained for each family are shown in Table 4. In the Dinghu Mountain study area, the Myrtaceae family had the largest cumulative isoprene emissions (maximum of 18.7 ), followed by the Salicaceae family (maximum of 3.8 ), while the Rubiaceae family had the largest cumulative monoterpene emissions (maximum of 3.9 ), followed by the Theaceae family (maximum of 2.8 ). Note, however, that because we cannot confirm the leaf type, leaf age, and phenological stage of each tree, we calculated only the maximum and minimum possible emissions based on standard emission factors and biomass.

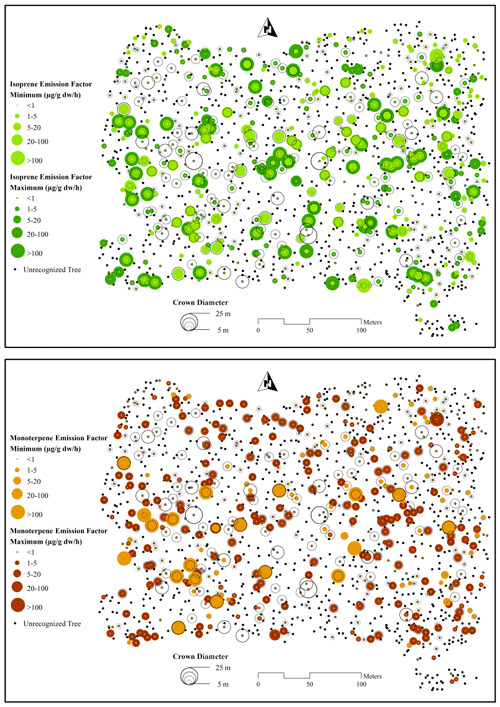

The spatial distribution of individual-plant emissions in Fig. 6 shows clusters of BVOC-emitting plants in the study area, which appear to reflect the aggregation of plants of the same family. The clusters of isoprene- and terpene-emitting plants are homogeneous, whereas some non-BVOC-emitting plants lie between clusters, which may be related to ecological-competition strategies (Fitzky et al., 2019). According to forest competition theory, a tree's BVOC emissions are related to its competitive pressure, relative size, and area overlap rate (Contreras et al., 2011). Moreover, different species adopt different strategies: intraspecific and interspecific competition act through different biopheromones, all of which are BVOCs (Šimpraga et al., 2019). Fig. 6 also shows that a large number of plants in the study area could not be identified, indicating that this is an important source of uncertainty in BVOC emissions estimated with this method.

4.1 The uncertainty sources of this method

4.1.1 Flight route design and acquisition process of aerial survey data

During field flights with this workflow, we found that flight altitude, and hence the number of pixels each tree occupies in the visible-light image, is decisive in determining whether image recognition tools can identify the plant species. In practice flights, we tested routes at 60, 120, and 200 m altitude to find a suitable flight altitude. For images acquired at 120 m or higher, each tree crown clipped with its lidar-derived single-tree boundary contained relatively few pixels (about 200×300 pixels). Leaf characteristics were then very unclear and appeared mosaic-like, which prevented the image recognition tools from accurately identifying the plant species and in turn hindered the calculation of BVOC emissions. Moreover, because the images obtained from drone surveys are all orthophotos, they do not capture the lateral morphology of the canopy.
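The link between altitude and pixels per tree follows from the ground sampling distance (GSD) of a nadir image, GSD = H × w_s / (f × N_px), where H is the flight altitude, w_s the sensor width, f the focal length, and N_px the image width in pixels. The sketch uses the sensor width (6.16 mm) and focal length (4 mm) given in Sect. 2.3; the 4000-pixel image width is an assumption (a 4000×3000 frame is typical for a 12 MP camera), and terrain relief and lens distortion are ignored.

```python
def ground_sampling_distance(altitude_m, sensor_width_mm=6.16, focal_length_mm=4.0,
                             image_width_px=4000):
    """Ground footprint of one pixel (m) for a nadir image over flat terrain."""
    return altitude_m * sensor_width_mm / (focal_length_mm * image_width_px)


def crown_width_px(crown_diameter_m, altitude_m):
    """Approximate number of pixels spanned by a crown of the given diameter."""
    return crown_diameter_m / ground_sampling_distance(altitude_m)


for altitude in (60, 120, 200):
    gsd_cm = ground_sampling_distance(altitude) * 100
    print(f"{altitude} m: {gsd_cm:.1f} cm/px, 8 m crown ~ {crown_width_px(8.0, altitude):.0f} px")
```

Under these assumptions, 120 m gives roughly 4.6 cm per pixel, so an 8 m crown (twice the mean crown radius of about 4 m reported in Sect. 3.1) spans roughly 170 pixels, the same order of magnitude as the ~200×300 pixel crops described above. Halving the altitude to 60 m roughly doubles the pixels across each crown.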

In addition, vegetation below the forest canopy also emits considerable amounts of BVOCs. Although airborne lidar can detect understory plants through gaps in the foliage, visible-light images cannot capture them because of canopy occlusion, which makes this an important source of underestimation of BVOC emissions in this method. Oblique aerial photography or airborne multi-band enhanced-penetration lidar may enable the detection, modeling, and recognition of understory plants.

4.1.2 Single-tree segmentation and recognition process of images

The single-tree segmentation technique used in this study is based on the layer-stacking algorithm. This single-tree segmentation process first obtains the seed points of each tree and then determines the boundaries between each individual tree through its watershed (Li et al., 2012). This method may result in undetected or incorrectly detected trees, depending on the density of the laser point cloud per unit area. In this study, the density of laser point clouds was 42.6 points m−2, which, although very high, may still result in some small saplings not being correctly identified. In future research, techniques such as ground-based lidar segmentation and coarse-to-fine algorithms can be combined to improve its accuracy (Zhao et al., 2023).
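To put the reported density in perspective, the sketch below converts 42.6 points m⁻² into a nominal point spacing and into the expected number of returns on small circular crowns. It assumes uniformly distributed returns and ignores occlusion by the upper canopy, which in reality further reduces the returns reaching understory saplings; the crown radii are illustrative.

```python
import math


def mean_point_spacing(density_per_m2):
    """Nominal spacing (m) between returns for a uniform point density."""
    return 1.0 / math.sqrt(density_per_m2)


def expected_returns(density_per_m2, crown_radius_m):
    """Expected returns on an unoccluded circular crown of the given radius."""
    return density_per_m2 * math.pi * crown_radius_m ** 2


density = 42.6  # points per m^2, as reported for this study
print(f"spacing ~ {mean_point_spacing(density):.2f} m")
for radius in (0.25, 0.5, 1.0):
    print(f"crown radius {radius} m: ~{expected_returns(density, radius):.0f} returns")
```

A sapling crown of 0.5 m radius would receive only about 33 returns even before occlusion, which is a thin basis for detecting a separate seed point and is consistent with the concern that small saplings may be missed.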

We also found that, for drone-acquired visible-light images, the recognition accuracy of these apps was lower than claimed. Among them, platforms trained on satellite images gave fairly accurate tree species recognition results. EasyDL returned “unrecognizable” for many individual-tree images, while AiPlants and Leafsnap returned incorrect classifications, such as succulents and garden plants. This may be because the general image datasets used to train these apps contained few images labeled with the correct tree species.

In general, for refined calculation of vegetation VOC emission factors, platforms trained with deep learning on remote sensing images can provide faster and more reliable tree species identification than traditional methods. However, these platforms still need larger and more accurately labeled training datasets to support their classification accuracy. Although various crowdsourcing-based apps are widely used, most of the tree species information uploaded by users concerns garden or common species, and more sample images of rare species are needed. Because the platforms differ in function and in their training datasets, the uncertainty range of this step is difficult to quantify. The development of open-source databases and training sets should help constrain this source of uncertainty.

Table sources: Guenther et al. (2012); Gao et al. (2011); Situ et al. (2013); Tang et al. (2007); Wu et al. (2016); Li et al. (2021).

4.1.3 The estimation process of BVOC emissions

Although this study used both MEGAN's tree species emission information and literature values as inputs, MEGAN mainly covers common tree species at the global scale. Therefore, most of the emission factors used in this study came from the database of measured results compiled by Mu et al. (2022). That database, the product of substantial effort to measure more than a hundred tree species in southern China, provided emission factors for most of the tree species in our sample plot. However, this situation reveals several technical issues in selecting a BVOC emission factor library when applying this framework. First, in a considerable number of cases in this study, the identified tree species is not covered by the MEGAN EF database. Second, BVOC emissions from trees depend on various photochemical and hydrothermal conditions, but current databases cannot characterize the impact of these environmental factors in detail at the tree species level. Third, different BVOC emission factor databases emphasize different emission parameters of BVOC components for the same tree species. These shortcomings limit further application and transfer of this method to other forests. Nevertheless, the method can quickly provide an independent set of upper and lower limits of BVOC emissions for a sample plot, which is helpful for plot-based model validation. We recommend that other researchers adapt our workflow and combine it with their local tree species emission factor libraries to improve BVOC emission estimates.

Meanwhile, the research community should recognize that existing BVOC emission models such as MEGAN already account relatively completely for meteorological conditions, leaf growth, and other factors. However, the vegetation description itself often depends on the land use types defined in the coupled regional models. For example, in the commonly used WRF-Chem (Weather Research and Forecasting model coupled with Chemistry), vegetation types are usually classified with the MODIS 20-category or USGS 24-category systems, which still classify forests only as combinations of coniferous, broadleaved, mixed, evergreen, and deciduous forests. The characterization of emissions from different tree species therefore needs further improvement, and future regional BVOC estimates could combine the framework developed in this study with BVOC emission models.

4.2 The differences in BVOC emission from other methods and potential impact

We compared the BVOC results obtained in this study with emission results obtained by other methods for the Dinghu Mountain sample plot (Table 5). Estimates of BVOC emissions from Dinghu Mountain vary greatly in magnitude across research methods (remote sensing inversion, model calculation, understory sampling observation, and UAV-mounted sensor observation). Our results suggest that a more accurate description of forest biodiversity brings calculated emissions closer to those obtained from direct observations in the forest canopy. The comparison also suggests that previous model estimates, which used simple plant functional type (PFT) methods to estimate biomass, may considerably underestimate BVOC emissions. This places new demands on the estimation and parameterization of BVOC emissions: simulations should account for the forest biodiversity of the region, not only for vegetation factors derived purely from physical canopy size indicators such as leaf area index (LAI) and crown diameter.

This study established a workflow that identifies tree species with drone-mounted lidar, photogrammetry, and image recognition technologies in order to obtain more accurate BVOC emission estimates. The aim was to couple a newly developed rapid plant species survey method with BVOC emission calculations and to discuss the main sources of uncertainty in the emissions obtained this way. One current limitation is that lidar can capture the multi-layer structure of tree crowns, but visible-light imagery can hardly identify the vegetation beneath the trees, such as shrubs and herbs. Part of the plot's BVOC emissions is therefore missed.

This study implies that advances in computer science, in particular large-scale image recognition, will gradually overcome tree species identification, which has long impeded the estimation of BVOC emissions. However, the lack of open-source, standardized image recognition techniques and of species-level BVOC emission factor libraries has become a new bottleneck. The research community therefore needs to consider how to share such data and technologies more openly.

The code and data in this study are available on request from Ming Chang (changming@email.jnu.edu.cn).

XD: data curation, writing (original draft preparation). MC: supervision, conceptualization, methodology, visualization, writing (original draft preparation). GW: data curation, software, validation, visualization. SS: formal analysis, investigation. SZ: resources, drone techniques. QZ: data curation. YH: writing (review and editing). WW: writing (review and editing). WC: writing (review and editing). BY: writing (review and editing). XW: project administration.

At least one of the (co-)authors is a member of the editorial board of Atmospheric Measurement Techniques. The peer-review process was guided by an independent editor, and the authors also have no other competing interests to declare.

Publisher's note: Copernicus Publications remains neutral with regard to jurisdictional claims made in the text, published maps, institutional affiliations, or any other geographical representation in this paper. While Copernicus Publications makes every effort to include appropriate place names, the final responsibility lies with the authors.

The authors thank the Dinghushan Forest Ecosystem Research Station for providing sample plots.

This work was supported by the National Key Research and Development Program of China (grant no. 2023YFC3706202), the National Natural Science Foundation of China (grant nos. 42275107, 42121004), the Marine Economic Development Special Program of Guangdong Province (grant no. GDNRC [2024]36), Science and Technology Projects in Guangzhou (grant no. 2023A04J0251), and the Special Fund Project for Science and Technology Innovation Strategy of Guangdong Province (grant no. 2019B121205004).

This paper was edited by Haichao Wang and reviewed by two anonymous referees.

Ayrey, E., Fraver, S., Kershaw Jr., J. A., Kenefic, L. S., Hayes, D., Weiskittel, A. R., and Roth, B. E.: Layer stacking: a novel algorithm for individual forest tree segmentation from LiDAR point clouds, Can. J. Remote Sens., 43, 16–27, 2017.

Baghi, R., Helmig, D., Guenther, A., Duhl, T., and Daly, R.: Contribution of flowering trees to urban atmospheric biogenic volatile organic compound emissions, Biogeosciences, 9, 3777–3785, https://doi.org/10.5194/bg-9-3777-2012, 2012.

Batista, C. E., Ye, J., Ribeiro, I. O., Guimarães, P. C., Medeiros, A. S., Barbosa, R. G., Oliveira, R. L., Duvoisin, S., Jardine, K. J., Gu, D., Guenther, A. B., McKinney, K. A., Martins, L. D., Souza, R. A. F., and Martin, S. T.: Intermediate-scale horizontal isoprene concentrations in the near-canopy forest atmosphere and implications for emission heterogeneity, P. Natl. Acad. Sci. USA, 116, 19318–19323, 2019.

Brosy, C., Krampf, K., Zeeman, M., Wolf, B., Junkermann, W., Schäfer, K., Emeis, S., and Kunstmann, H.: Simultaneous multicopter-based air sampling and sensing of meteorological variables, Atmos. Meas. Tech., 10, 2773–2784, https://doi.org/10.5194/amt-10-2773-2017, 2017.

Canaval, E., Millet, D. B., Zimmer, I., Nosenko, T., Georgii, E., Partoll, E. M., Fischer, L., Alwe, H. D., Kulmala, M., Karl, T., Schnitzler, J.-P., and Hansel, A.: Rapid conversion of isoprene photooxidation products in terrestrial plants, Communications Earth & Environment, 1, 1–9, 2020.

Chen, W., Guenther, A. B., Wang, X., Chen, Y., Gu, D., Chang, M., Zhou, S., Wu, L., and Zhang, Y.: Regional to global biogenic isoprene emission responses to changes in vegetation from 2000 to 2015, J. Geophys. Res.-Atmos., 123, 3757–3771, 2018.

Chen, W., Guenther, A. B., Jia, S., Mao, J., Yan, F., Wang, X., and Shao, M.: Synergistic effects of biogenic volatile organic compounds and soil nitric oxide emissions on summertime ozone formation in China, Sci. Total Environ., 828, 154218, https://doi.org/10.1016/j.scitotenv.2022.154218, 2022.

Cheng, K., Su, Y., Guan, H., Tao, S., Ren, Y., Hu, T., Ma, K., Tang, Y., and Guo, Q.: Mapping China's planted forests using high resolution imagery and massive amounts of crowdsourced samples, ISPRS J. Photogramm., 196, 356–371, 2023.

Contreras, M. A., Affleck, D., and Chung, W.: Evaluating tree competition indices as predictors of basal area increment in western Montana forests, Forest Ecol. Manag., 262, 1939–1949, 2011.

Curtis, A., Helmig, D., Baroch, C., Daly, R., and Davis, S.: Biogenic volatile organic compound emissions from nine tree species used in an urban tree-planting program, Atmos. Environ., 95, 634–643, 2014.

Dash, J. P., Watt, M. S., Pearse, G. D., Heaphy, M., and Dungey, H. S.: Assessing very high resolution UAV imagery for monitoring forest health during a simulated disease outbreak, ISPRS J. Photogramm., 131, 1–14, 2017.

Dicke, M. and Baldwin, I. T.: The evolutionary context for herbivore-induced plant volatiles: beyond the “cry for help”, Trends Plant Sci., 15, 167–175, 2010.

Fassnacht, F. E., Latifi, H., Stereńczak, K., Modzelewska, A., Lefsky, M., Waser, L. T., Straub, C., and Ghosh, A.: Review of studies on tree species classification from remotely sensed data, Remote Sens. Environ., 186, 64–87, 2016.

Fitzky, A. C., Sandén, H., Karl, T., Fares, S., Calfapietra, C., Grote, R., Saunier, A., and Rewald, B.: The interplay between ozone and urban vegetation–BVOC emissions, ozone deposition, and tree ecophysiology, Frontiers in Forests and Global Change, 2, 50, https://doi.org/10.3389/ffgc.2019.00050, 2019.

Gao, X., Zhang, H., Cai, X., Song, Y., and Kang, L.: VOCs fluxes analysis based on micrometeorological methods over litchi plantation in the Pearl River Delta, China, Acta Scientiarum Naturalium Universitatis Pekinensis, 47, 916–922, 2011.

Ghirardo, A., Xie, J., Zheng, X., Wang, Y., Grote, R., Block, K., Wildt, J., Mentel, T., Kiendler-Scharr, A., Hallquist, M., Butterbach-Bahl, K., and Schnitzler, J.-P.: Urban stress-induced biogenic VOC emissions and SOA-forming potentials in Beijing, Atmos. Chem. Phys., 16, 2901–2920, https://doi.org/10.5194/acp-16-2901-2016, 2016.

Guenther, A., Jiang, X., Shah, T., Huang, L., Kemball-Cook, S., and Yarwood, G.: Model of Emissions of Gases and Aerosol from Nature Version 3 (MEGAN3) for Estimating Biogenic Emissions, International Technical Meeting on Air Pollution Modelling and its Application, 187–192, 14–18 May 2018, Ottawa, ON, Canada, https://doi.org/10.1007/978-3-030-22055-6_29, 2018.

Guenther, A. B., Jiang, X., Heald, C. L., Sakulyanontvittaya, T., Duhl, T., Emmons, L. K., and Wang, X.: The Model of Emissions of Gases and Aerosols from Nature version 2.1 (MEGAN2.1): an extended and updated framework for modeling biogenic emissions, Geosci. Model Dev., 5, 1471–1492, https://doi.org/10.5194/gmd-5-1471-2012, 2012.

Hartley, A., MacBean, N., Georgievski, G., and Bontemps, S.: Uncertainty in plant functional type distributions and its impact on land surface models, Remote Sens. Environ., 203, 71–89, 2017.

Heald, C. L. and Kroll, J.: The fuel of atmospheric chemistry: Toward a complete description of reactive organic carbon, Science Advances, 6, eaay8967, https://doi.org/10.1126/sciadv.aay8967, 2020.

Henze, D. K., Seinfeld, J. H., Ng, N. L., Kroll, J. H., Fu, T.-M., Jacob, D. J., and Heald, C. L.: Global modeling of secondary organic aerosol formation from aromatic hydrocarbons: high- vs. low-yield pathways, Atmos. Chem. Phys., 8, 2405–2420, https://doi.org/10.5194/acp-8-2405-2008, 2008.

Irimia, C., Costandache, M., Matei, M., and Lipan, M.: Discover the Wonderful World of Plants with the Help of Smart Devices, in: RoCHI – International Conference on Human-Computer Interaction, 73, 22–23 October 2020, Sibiu, Romania, https://doi.org/10.37789/rochi.2020.1.1.12, 2020.

Isidorov, V., Zenkevich, I., and Ioffe, B.: Volatile organic compounds in solfataric gases, J. Atmos. Chem., 10, 329–340, 1990.

Ismail, Z., Abdul Khanan, M., Omar, F., Abdul Rahman, M., and Mohd Salleh, M.: Evaluating error of lidar derived dem interpolation for vegetation area, Int. Arch. Photogramm., 42, https://doi.org/10.5194/isprs-archives-XLII-4-W1-141-2016, 2016.

Jin, R.: Deep Learning at Alibaba, in: Twenty-Sixth International Joint Conference on Artificial Intelligence, 11–16, 19–25 August 2017, Melbourne, Australia, https://doi.org/10.24963/ijcai.2017/2, 2017.

Jin, S., Sun, X., Wu, F., Su, Y., Li, Y., Song, S., Xu, K., Ma, Q., Baret, F., Jiang, D., Yanfeng, D., and Qinghua, G.: Lidar sheds new light on plant phenomics for plant breeding and management: Recent advances and future prospects, ISPRS J. Photogramm., 171, 202–223, 2021.

Joly, A., Bonnet, P., Goëau, H., Barbe, J., Selmi, S., Champ, J., Dufour-Kowalski, S., Affouard, A., Carré, J., Molino, J.-F., Boujemaa, N., and Barthélémy, D.: A look inside the Pl@ntNet experience, Multimedia Syst., 22, 751–766, 2016.

Kegge, W. and Pierik, R.: Biogenic volatile organic compounds and plant competition, Trends Plant Sci., 15, 126–132, 2010.

Komenda, M. and Koppmann, R.: Monoterpene emissions from Scots pine (Pinus sylvestris): field studies of emission rate variabilities, J. Geophys. Res.-Atmos., 107, ACH–1, https://doi.org/10.1029/2001JD000691, 2002.

Kumar, N., Belhumeur, P. N., Biswas, A., Jacobs, D. W., Kress, W. J., Lopez, I., and Soares, J. V. B.: Leafsnap: A Computer Vision System for Automatic Plant Species Identification, in: The 12th European Conference on Computer Vision (ECCV), 7–13 October 2012, Florence, Italy, https://doi.org/10.1007/978-3-642-33709-3_36, 2012.

Laothawornkitkul, J., Taylor, J. E., Paul, N. D., and Hewitt, C. N.: Biogenic volatile organic compounds in the Earth system, New Phytol., 183, 27–51, 2009.

Li, W., Guo, Q., Jakubowski, M. K., and Kelly, M.: A new method for segmenting individual trees from the lidar point cloud, Photogramm. Eng. Rem. S., 78, 75–84, 2012.

Li, Y., Liu, B., Ye, J., Jia, T., Khuzestani, R. B., Sun, J. Y., Cheng, X., Zheng, Y., Li, X., Wu, C., Xin, J., Wu, Z., Tomoto, M. A., McKinney, K. A., Martin, S. T., Li, Y. J., and Chen, Q.: Unmanned Aerial Vehicle Measurements of Volatile Organic Compounds over a Subtropical Forest in China and Implications for Emission Heterogeneity, ACS Earth and Space Chemistry, 5, 247–256, 2021.

Loreto, F., Dicke, M., Schnitzler, J.-P., and Turlings, T. C.: Plant volatiles and the environment, Plant Cell Environ., 37, 1905–1908, 2014.

Ma, Q., Su, Y., and Guo, Q.: Comparison of canopy cover estimations from airborne LiDAR, aerial imagery, and satellite imagery, IEEE J. Sel. Top. Appl., 10, 4225–4236, 2017.

Ma, Y., Yu, D., Wu, T., and Wang, H.: PaddlePaddle: An open-source deep learning platform from industrial practice, Frontiers of Data and Computing, 1, 105–115, 2019.

Mäyrä, J., Keski-Saari, S., Kivinen, S., Tanhuanpää, T., Hurskainen, P., Kullberg, P., Poikolainen, L., Viinikka, A., Tuominen, S., Kumpula, T., and Vihervaara, P.: Tree species classification from airborne hyperspectral and LiDAR data using 3D convolutional neural networks, Remote Sens. Environ., 256, 112322, https://doi.org/10.1016/j.rse.2021.112322, 2021.

Mellouki, A., Wallington, T., and Chen, J.: Atmospheric chemistry of oxygenated volatile organic compounds: impacts on air quality and climate, Chem. Rev., 115, 3984–4014, 2015.

Michałowska, M. and Rapiński, J.: A Review of Tree Species Classification Based on Airborne LiDAR Data and Applied Classifiers, Remote Sens.-Basel, 13, 353, https://doi.org/10.3390/rs13030353, 2021.

Mu, Z., Llusià, J., Zeng, J., Zhang, Y., Asensio, D., Yang, K., Yi, Z., Wang, X., and Peñuelas, J.: An Overview of the Isoprenoid Emissions From Tropical Plant Species, Front. Plant Sci., 13, 1–14, 2022.

Nebiker, S., Annen, A., Scherrer, M., and Oesch, D.: A light-weight multispectral sensor for micro UAV–Opportunities for very high resolution airborne remote sensing, Int. Arch. Photogramm., 37, 1193–1200, 2008.

Otter, J., Mayer, S., and Tomaszewski, C. A.: Swipe Right: a Comparison of Accuracy of Plant Identification Apps for Toxic Plants, Journal of Medical Toxicology, 17, 42–47, 2021.

Peñuelas, J. and Staudt, M.: BVOCs and global change, Trends Plant Sci., 15, 133–144, 2010.

Ran, L., Zhao, C. S., Xu, W. Y., Lu, X. Q., Han, M., Lin, W. L., Yan, P., Xu, X. B., Deng, Z. Z., Ma, N., Liu, P. F., Yu, J., Liang, W. D., and Chen, L. L.: VOC reactivity and its effect on ozone production during the HaChi summer campaign, Atmos. Chem. Phys., 11, 4657–4667, https://doi.org/10.5194/acp-11-4657-2011, 2011.

Rüdiger, J., Tirpitz, J.-L., de Moor, J. M., Bobrowski, N., Gutmann, A., Liuzzo, M., Ibarra, M., and Hoffmann, T.: Implementation of electrochemical, optical and denuder-based sensors and sampling techniques on UAV for volcanic gas measurements: examples from Masaya, Turrialba and Stromboli volcanoes, Atmos. Meas. Tech., 11, 2441–2457, https://doi.org/10.5194/amt-11-2441-2018, 2018.

Sarkar, C., Guenther, A. B., Park, J.-H., Seco, R., Alves, E., Batalha, S., Santana, R., Kim, S., Smith, J., Tóta, J., and Vega, O.: PTR-TOF-MS eddy covariance measurements of isoprene and monoterpene fluxes from an eastern Amazonian rainforest, Atmos. Chem. Phys., 20, 7179–7191, https://doi.org/10.5194/acp-20-7179-2020, 2020.

Schneider, F. D., Morsdorf, F., Schmid, B., Petchey, O. L., Hueni, A., Schimel, D. S., and Schaepman, M. E.: Mapping functional diversity from remotely sensed morphological and physiological forest traits, Nat. Commun., 8, 1–12, 2017.

Shakhatreh, H., Sawalmeh, A. H., Al-Fuqaha, A., Dou, Z., Almaita, E., Khalil, I., Othman, N. S., Khreishah, A., and Guizani, M.: Unmanned aerial vehicles (UAVs): A survey on civil applications and key research challenges, IEEE Access, 7, 48572–48634, 2019.

Šimpraga, M., Takabayashi, J., and Holopainen, J. K.: Language of plants: where is the word?, J. Integr. Plant Biol., 58, 343–349, 2016.

Šimpraga, M., Ghimire, R. P., Van Der Straeten, D., Blande, J. D., Kasurinen, A., Sorvari, J., Holopainen, T., Adriaenssens, S., Holopainen, J. K., and Kivimäenpää, M.: Unravelling the functions of biogenic volatiles in boreal and temperate forest ecosystems, Eur. J. For. Res., 138, 763–787, 2019.

Simpson, J. and McPherson, E.: The tree BVOC index, Environ. Pollut., 159, 2088–2093, 2011.

Situ, S., Guenther, A., Wang, X., Jiang, X., Turnipseed, A., Wu, Z., Bai, J., and Wang, X.: Impacts of seasonal and regional variability in biogenic VOC emissions on surface ozone in the Pearl River delta region, China, Atmos. Chem. Phys., 13, 11803–11817, https://doi.org/10.5194/acp-13-11803-2013, 2013.

Situ, S., Wang, X., Guenther, A., Zhang, Y., Wang, X., Huang, M., Fan, Q., and Xiong, Z.: Uncertainties of isoprene emissions in the MEGAN model estimated for a coniferous and broad-leaved mixed forest in Southern China, Atmos. Environ., 98, 105–110, 2014.

Steinbrecher, R., Smiatek, G., Köble, R., Seufert, G., Theloke, J., Hauff, K., Ciccioli, P., Vautard, R., and Curci, G.: Intra- and inter-annual variability of VOC emissions from natural and semi-natural vegetation in Europe and neighbouring countries, Atmos. Environ., 43, 1380–1391, 2009.

Sylvain, J.-D., Drolet, G., and Brown, N.: Mapping dead forest cover using a deep convolutional neural network and digital aerial photography, ISPRS J. Photogramm., 156, 14–26, 2019.

Tang, J., Chan, L., Chan, C., Li, Y., Chang, C., Liu, S., Wu, D., and Li, Y.: Characteristics and diurnal variations of NMHCs at urban, suburban, and rural sites in the Pearl River Delta and a remote site in South China, Atmos. Environ., 41, 8620–8632, https://doi.org/10.1016/j.atmosenv.2007.07.029, 2007.

Tsimpidi, A. P., Trail, M., Hu, Y., Nenes, A., and Russell, A. G.: Modeling an air pollution episode in northwestern United States: Identifying the effect of nitrogen oxide and volatile organic compound emission changes on air pollutants formation using direct sensitivity analysis, J. Air Waste Manage., 62, 1150–1165, 2012.

Villa, T. F., Gonzalez, F., Miljievic, B., Ristovski, Z. D., and Morawska, L.: An overview of small unmanned aerial vehicles for air quality measurements: Present applications and future prospectives, Sensors, 16, 1072, https://doi.org/10.3390/s16071072, 2016.

Wang, H., Wu, Q., Guenther, A. B., Yang, X., Wang, L., Xiao, T., Li, J., Feng, J., Xu, Q., and Cheng, H.: A long-term estimation of biogenic volatile organic compound (BVOC) emission in China from 2001–2016: the roles of land cover change and climate variability, Atmos. Chem. Phys., 21, 4825–4848, https://doi.org/10.5194/acp-21-4825-2021, 2021.

Wang, X., Situ, S., Chen, W., Zheng, J., Guenther, A., Fan, Q., and Chang, M.: Numerical model to quantify biogenic volatile organic compound emissions: The Pearl River Delta region as a case study, J. Environ. Sci., 46, 72–82, 2016.

Wu, C., Liu, B., Wu, D., Yang, H., Mao, X., Tan, J., Liang, Y., Sun, J. Y., Xia, R., Sun, J., Guowen, H., Mei, L., Tao, D., Zhen, Z., and Yongjie, L.: Vertical profiling of black carbon and ozone using a multicopter unmanned aerial vehicle (UAV) in urban Shenzhen of South China, Sci. Total Environ., 801, 149689, https://doi.org/10.1016/j.scitotenv.2021.149689, 2021.

Wu, F., Yu, Y., Sun, J., Zhang, J., Wang, J., Tang, G., and Wang, Y.: Characteristics, source apportionment and reactivity of ambient volatile organic compounds at Dinghu Mountain in Guangdong Province, China, Sci. Total Environ., 548–549, 347–359, https://doi.org/10.1016/j.scitotenv.2015.11.069, 2016.

Wu, K., Yang, X., Chen, D., Gu, S., Lu, Y., Jiang, Q., Wang, K., Ou, Y., Qian, Y., Shao, P., and Lu, S.: Estimation of biogenic VOC emissions and their corresponding impact on ozone and secondary organic aerosol formation in China, Atmos. Res., 231, 104656, https://doi.org/10.1016/j.atmosres.2019.104656, 2020.

You, H., Lei, P., Li, M., and Ruan, F.: Forest Species Classification Based on Three-dimensional Coordinate and Intensity Information of Airborne LiDAR Data with Random Forest Method, Int. Arch. Photogramm., 42, 117–123, 2020.

Zhanhui, X., Shiyao, L., Ying, Z., Wenqin, T., Zhaofeng, C., Entao, Z., Jing, G., Di, Z., Jun, G., Gaoying, G., Chunpeng, G., Lulu, G., Jing, W., Chunyang, X., Chuan, P., Teng, Y., Mengqi, C., Weicheng, S., Jiantan, Z., Haotian, L., Chaoqun, B., Heqi, W., Jingchao, J., Jinzhou, W., Cui, X., and Keping, M.: Evaluation of the identification ability of eight commonly used plant identification application softwares in China, Biodiversity Science, 28, 524, https://doi.org/10.17520/biods.2019272, 2020.

Zhao, X., Guo, Q., Su, Y., and Xue, B.: Improved progressive TIN densification filtering algorithm for airborne LiDAR data in forested areas, ISPRS J. Photogramm., 117, 79–91, 2016.

Zhao, Y., Im, J., Zhen, Z., and Zhao, Y.: Towards accurate individual tree parameters estimation in dense forest: optimized coarse-to-fine algorithms for registering UAV and terrestrial LiDAR data, Gisci. Remote Sens., 60, 2197281, https://doi.org/10.1080/15481603.2023.2197281, 2023.