the Creative Commons Attribution 4.0 License.

the Creative Commons Attribution 4.0 License.

Benchmarking and improving algorithms for attributing satellite-observed contrails to flights

Vincent Meijer

Rémi Chevallier

Allie Duncan

Kyle McConnaughay

Scott Geraedts

Kevin McCloskey

Condensation trail (contrail) cirrus clouds cause a substantial fraction of aviation's climate impact. One proposed method for the mitigation of this impact involves modifying flight paths to avoid particular regions of the atmosphere that are conducive to the formation of persistent contrails, which can transform into contrail cirrus. Determining the success of such avoidance maneuvers can be achieved by ascertaining which flight formed each nearby contrail observed in satellite imagery. The same process can be used to assess the skill of contrail forecast models. The problem of contrail-to-flight attribution is complicated by several factors, such as the time required for a contrail to become visible in satellite imagery, high air traffic densities, and errors in wind data. Recent work has introduced automated algorithms for solving the attribution problem, but it lacks an evaluation against ground-truth data. In this work, we present a method for producing synthetic contrail detections with predetermined contrail-to-flight attributions that can be used to evaluate – or “benchmark” – and improve such attribution algorithms. The resulting performance metrics can be employed to understand the implications of using these observational data in downstream tasks, such as forecast model evaluation and the analysis of contrail avoidance trials, although the metrics do not directly quantify real-world performance. We also introduce a novel, highly scalable contrail-to-flight attribution algorithm that leverages the characteristic compounding of error induced by simulating contrail advection using numerical weather models. The benchmark shows an improvement of approximately 25 % in precision versus previous contrail-to-flight attribution algorithms, without compromising recall.

- Article

(6914 KB) - Full-text XML

- BibTeX

- EndNote

Condensation trails (contrails) are the ice clouds that trail behind an aircraft as a result of the warm, moist engine exhaust mixing with colder, drier ambient air (Schumann, 1996). When the ambient air is sufficiently humid (i.e., supersaturated with respect to ice), these contrails can persist for several hours (Minnis et al., 1998). They perturb the Earth's energy budget by reflecting incoming solar radiation and reducing outgoing longwave radiation (Meerkötter et al., 1999). The net effect of all persistent contrails is estimated to be warming and of a magnitude comparable to the warming impact of aviation CO2 emissions (Lee et al., 2021).

Several mitigation options for the climate impact of contrail cirrus exist, such as the use of alternative fuels (Voigt et al., 2021; Märkl et al., 2024) and trajectory modifications (Mannstein et al., 2005; Teoh et al., 2020; Martin Frias et al., 2024). Although the latter approach, referred to as contrail avoidance, may lead to additional fuel burn and concomitant climate impacts, several simulation studies (Teoh et al., 2020; Martin Frias et al., 2024; Borella et al., 2024) have assessed this trade-off and conclude that this is a cost-effective mitigation strategy. These studies do, however, make use of forecast and reanalysis data to quantify the climate impact of contrails. While corrections to the weather data inaccuracies are applied sufficiently to draw the conclusions of the studies, other studies have demonstrated that these corrections are insufficient for the accurate prediction of formation and persistence of individual contrails by specific flights (Gierens et al., 2020; Geraedts et al., 2024; Meijer, 2024). Real-world avoidance trials have established the operational feasibility of avoiding detectable contrail formation using existing forecast models (Sausen et al., 2024; Sonabend et al., 2024), but they have also demonstrated that the forecasts are imperfect and that larger-scale trials will be necessary in order to determine whether the cost-effectiveness concluded by the modeling studies is achievable in practice.

Contrail avoidance trials are generally evaluated using contrail observations, such as those acquired by satellite imagers. The automated recognition of contrails is possible in infrared satellite images captured by both low-Earth-orbit and geostationary satellites (Mannstein et al., 1999; McCloskey et al., 2021; Meijer et al., 2022; Ng et al., 2024). Detections of contrails in geostationary satellite images are particularly interesting for the monitoring of contrail avoidance due to their high temporal resolution and broad spatial coverage, which allow one to track individual contrails over part of their lifetime (Vazquez-Navarro et al., 2010; Chevallier et al., 2023). However, imaging instruments aboard geostationary satellites such as GOES-16 (Goodman et al., 2020) have coarser image resolutions of approximately 2 km at nadir. This affects the number of contrails that are observable in these images (Driver et al., 2025) at any given time. Specifically, contrails are not observable at the moment they form; moreover, those that do eventually become observable require some time before they have become sufficiently large and/or optically thick. Previous studies using GOES-16 Advanced Baseline Imager (ABI) data indicate that the time taken to become observable is highly variable, generally ranging from 5 min to 1 h (Chevallier et al., 2023; Geraedts et al., 2024; Gryspeerdt et al., 2024). As a consequence, the contrail advects away from where it formed before becoming observable, which complicates the process of attributing it to the flight that formed it. The lack of altitude information associated with the observed contrails, owing to the satellite's 2D view of the 3D space, further enhances the difficulty of the problem. Once an observed contrail is attributed to an aircraft, this information can be used to study the relation between observed contrail properties and aircraft parameters (Gryspeerdt et al., 2024), evaluate the performance of contrail prediction models (Geraedts et al., 2024), train machine learning algorithms for better predictions of contrails (Sonabend et al., 2024), and monitor contrail avoidance trials.

Two recent contrail avoidance trials, Sausen et al. (2024) and Sonabend et al. (2024), each demonstrated a statistically significant reduction in the number of observed contrails when avoidance was performed. Neither of them, however, relied on automated attribution of contrails to flights when evaluating the trial: Sausen et al. (2024) evaluated the presence of detectable contrails in the satellite imagery for an entire airspace region, whereas Sonabend et al. (2024) relied on the time-consuming manual review of satellite imagery by the study's authors. Both studies emphasized the need for improved evaluation methods that are more scalable than what was used, in order to progress to the size and format of trial that could inform the operational requirements and impact of fleet- or airspace-wide contrail avoidance.

There has additionally been recent interest in establishing monitoring, reporting, and verification (MRV) systems for contrail climate impact, at the airspace, national, or continental levels. One example is the proposal for an MRV system for non-CO2 effects of aviation in the European Union (Council of European Union, 2024). Among the goals of these systems are to monitor the contrail impact of each airline and encourage its reduction. For any such implementation, there will be a need for both an assessment of the quality of contrail forecasts and accurate and scalable methods that can retrospectively determine contrail formation on a per-flight basis.

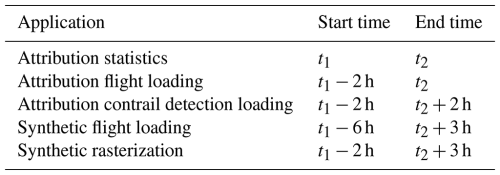

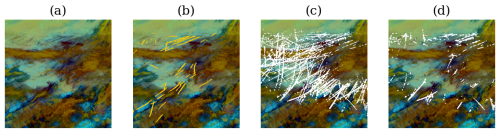

Figure 1A high-level visualization of a generic contrail-to-flight attribution process. All panes show a portion of a GOES-16 ABI image from 16:40 UTC (coordinated universal time) on 6 May 2019 over Ontario, Canada, rendered using the Ash color scheme to map infrared brightness temperatures to the visible spectrum. In panel (a), we see just the image, with some contrails visible in dark blue and some other clouds in yellow and brown partially obscuring some of the contrails. In panel (b), we show the result of running an automated contrail detector on the image, with the detected contrail pixels outlined in white and the results of linearizing the detector outputs as black line segments. Notably, some contrails appear segmented due to occlusion from other clouds. In panel (c), we take all flight paths that passed nearby in the preceding 2 h and simulate their advection to the capture time of the GOES image. This estimates the expected location of a hypothetical contrail that each flight formed. Each advected flight is shown using a unique color, while the contrails are still in black with white outlines (we render the satellite image in grayscale to improve visibility). Note that there is not a perfect alignment between observed contrails and flights; in some cases, there appear to be many candidate matches, whereas there appear to be none in other cases. In panel (d), we show the results of a contrail-to-flight attribution. Contrails that have been attributed are now color-coded to match the flight to which they were attributed, and only those flights are shown. Contrails in black were not attributed to a flight. The attributed flights are not always what appeared to be the best match in panel (c), as the attribution algorithm can take additional signals, like temporal dynamics, into account.

Several approaches have been developed to address the problem of automatically attributing contrails observed in satellite imagery to flights. All of them to some degree follow the approach visualized in Fig. 1: contrails visible in geostationary imagery (Fig. 1a) are detected and often then individually transformed into representative line segments (Fig. 1b); joined with flight tracks advected with weather model data (Fig. 1c); and, finally, attributed to flights using some form of optimization algorithm (Fig. 1d). Duda et al. (2004) apply this approach using the minimum average perpendicular distance between the advected flight track and the observed contrail in a single satellite frame for determining attribution. Geraedts et al. (2024) build on this approach by adding rotational and age-based components to the optimization. Gryspeerdt et al. (2024) first track contrail detections across frames using wind data at a fixed altitude, and they then use the resulting chains of detections to identify flights that passed through before the earliest detection and whose advected tracks are within distance and angle thresholds of the set of detections. Chevallier et al. (2023) replace the linearizations with contrail instance masks and then perform a discrete optimization that simultaneously tracks the contrail masks over successive satellite images and attributes them to the flight that formed them. We observe that, in all of these approaches, the advected flight that is closest to a given contrail detection, in some cases subject to additional temporal constraints, is presumed to have formed the contrail. In this study, we will show that the error in the simulated advection of the flight increases as the contrail ages, implying that the advected flight nearest to the contrail detection is often not the correct attribution.

We further observe that these previous studies carried out limited to no evaluation of the performance of these algorithms. Of the four studies mentioned, only Geraedts et al. (2024) provide any quantitative evaluation, using 1000 manual labels that indicated whether a flight segment formed a contrail or not. Ideally, such labels should also provide information on which flight segment formed which particular observed contrail. Benchmarking these attribution algorithms is complicated by the lack of ground-truth data. As discussed, the moment of formation of a particular contrail is not observed in geostationary satellite imagery. A ground-truth dataset for these attribution algorithms therefore requires observing the moment of formation using some higher-resolution instrument, possibly a ground-based camera, and following the contrail until it becomes observable in the satellite imagery of interest. While ground-based contrail observation datasets exist (Gourgue et al., 2025; Low et al., 2025; Schumann et al., 2013), including a small one that matches its observations to those of a geostationary satellite (Mannstein et al., 2010), no dataset of sufficient size and diversity to suit our needs is available at the time of writing. Even with such a dataset in hand, the metrics used to evaluate the performance of a contrail-to-flight attribution algorithm and their implications for downstream usage of the algorithm output data are relatively underexplored. For example, an attribution algorithm that is conservative with respect to the number of contrail-to-flight attributions that it assigns by prioritizing quality over quantity may be suitable for comparing the per-flight predictions of a contrail forecast model to satellite observations. However, such an algorithm would perhaps be less suitable for the evaluation of a large-scale contrail avoidance experiment using satellite imagery. Additionally, one attribution algorithm may outperform others only under certain circumstances (such as high air traffic density), which could further motivate choosing a particular approach over others.

We thus conclude that there are several relevant applications for attributing satellite-observed contrails to the flights that formed them, but this potential has not yet been fully realized, in part due to the combination of the inability to assess the performance of automated approaches and the limited scalability of the manual counterparts. This study, therefore, introduces a large-scale benchmark dataset of synthetic contrail detections with predetermined flight attributions, named “SynthOpenContrails”, and a new, scalable attribution algorithm, named “CoAtSaC” (short for “Contrail Attribution Sample Consensus”). In Sect. 2, we introduce SynthOpenContrails, how it is generated, and how to apply it to benchmarking attribution performance. In Sect. 3, we describe CoAtSaC and show how to use SynthOpenContrails to tune its performance. Section 4 shows that CoAtSaC provides substantial improvement when compared to existing approaches when evaluated on the new benchmark. It further shows how the size and diversity of SynthOpenContrails enables one to verify the scalability of a particular attribution algorithm and to study its performance under differing conditions, such as different contrail densities, contrail altitudes, seasons, and times of the day.

We start by addressing the question of how to determine the skill level of a given attribution algorithm. Ideally, we would use a dataset of ground-truth contrail attributions in geostationary imagery to tune and evaluate our attribution algorithm. Currently, no such dataset exists, as it is an extremely challenging task even for a skilled human to perform without additional evidence. In the absence of such a dataset, we propose a synthetic contrail dataset. Specifically, we aim to provide a set of synthetic contrail detections that can be directly input to an attribution algorithm. The synthetic contrail detections should be as statistically similar as possible to real detections, while specifying which flight created each contrail. While not a strict requirement, we choose to produce a dataset corresponding to the capture times and pixel grid of real satellite scans, as that allows for both quantitative and qualitative comparison with the real contrail detections from the corresponding scan.

Importantly, these synthetic contrail detections are simulating a particular detection algorithm run over imagery from a particular geostationary satellite, including the flaws of both. They are not attempting to model a physical reality or what an expert human labeler might produce for a given satellite image. It is not a goal of this dataset to have exactly the same flights that formed detectable contrails in reality also form contrails in this dataset, nor do the synthetic contrails need to end up being in exactly the same locations as the contrails that the detection algorithm finds in the same scene. Ultimately the critical element is that the dataset has statistics as similar as possible to the real detections, in terms of contrail density, dynamics, detectable lifetime, and advection error relative to the weather model data, so that we can measure the attribution algorithm's performance across all scenarios that it is likely to encounter with real contrail detections. An added benefit that the dataset provides is access to the physical properties of the synthetic contrails that allow one to study the attribution algorithm's performance as a function of these properties.

While the resulting dataset takes the form of contrail labels corresponding to satellite imagery, due to the aforementioned caveats, it is not suitable for training contrail detection models and is, thus, intended only for use in contrail attribution algorithms, where the labels need not align with actual satellite radiances.

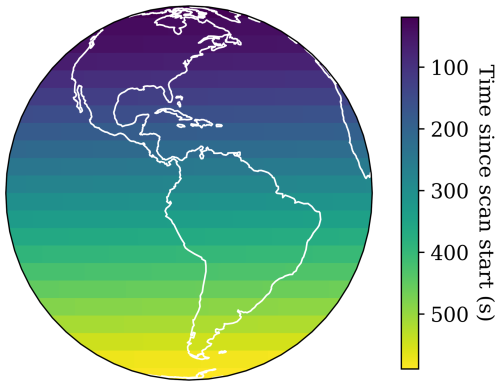

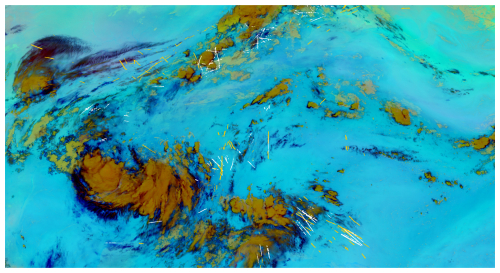

The dataset described here, which we name “SynthOpenContrails”, is tuned towards the performance of the contrail detection algorithm introduced along with the OpenContrails dataset in Ng et al. (2024), specifically when applied to the GOES-16 ABI Full Disk imagery (Goodman et al., 2020). The Full Disk imagery covers much of the Western Hemisphere, with approximately 2 km nadir spatial resolution and scans every 10 min. The Ng et al. (2024) algorithm uses a convolutional neural network to produce a prediction that each satellite pixel contains a contrail and thresholds the results to produce a binary mask. It then fits line segments to the individual contrails in the mask.

The approach presented here for generating the synthetic contrail detections should be adaptable to other detection algorithms and other satellites, but some details and parameter values may need to change. We also expect that attribution algorithms built around other detection methods should still be able to use SynthOpenContrails in its present form, and we demonstrate this in Sect. 4 by evaluating the Chevallier et al. (2023) algorithm with only minor modifications.

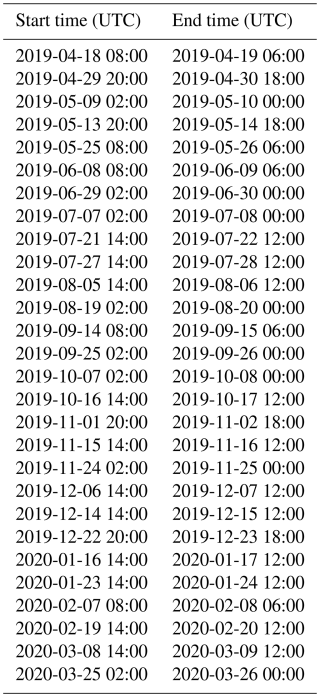

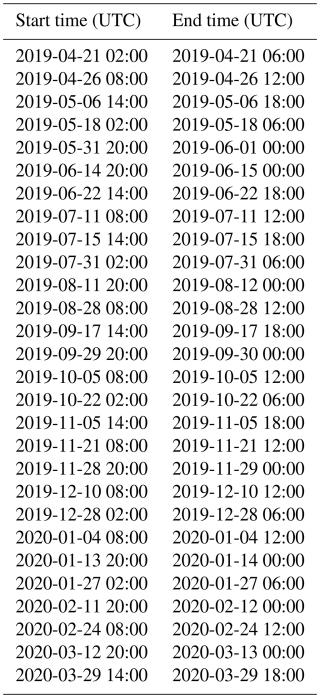

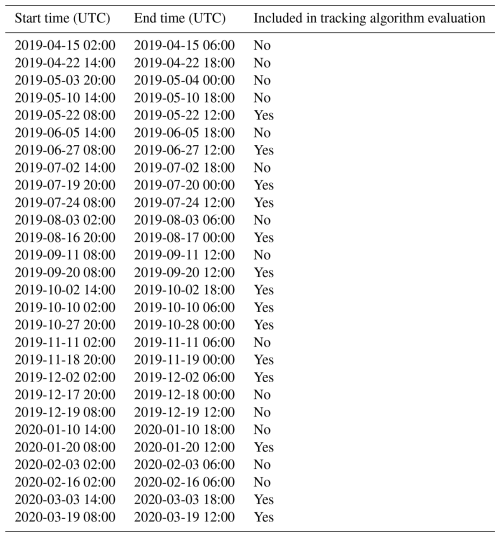

2.1 Data

The data used to produce the synthetic contrails consist of flight paths and historical weather data. We generate the dataset for the spatial region used in Geraedts et al. (2024), which covers roughly the contiguous United States, bounded by great-circle arcs joining 50.0783° N, 134.0295° W; 14.8865° N, 121.2314° W; 10.4495° N, 63.1501° W; and 44.0734° N, 46.0663° W. The dataset is designed to enable attribution algorithms to run over time spans that are sampled throughout the year between 4 April 2019 and 4 April 2020, divided into 84 time spans of between 4 and 22 h long, aiming to capture seasonal, day-of-week, and diurnal effects on contrail formation, requiring a minimum of 36 h of separation between time spans to ensure no overlap of flights or contrails between time spans. These time spans, specified in Appendix F, are almost identical to those used in Geraedts et al. (2024), but a few have been changed slightly to avoid GOES-16 ABI outages. To accommodate attribution algorithms that rely on temporal context, we also generate synthetic contrails for 2 h before the start and 3 h after the end of each time span, but we exclude these buffer periods from the benchmark metrics.

2.1.1 Flight trajectories

We use flight trajectories provided by FlightAware (https://flightaware.com, last access: 17 July 2025). This includes a mixture of Automatic Dependent Surveillance–Broadcast (ADS-B) data received by ground-based stations and Aireon satellites (Garcia et al., 2015). For the purposes of benchmarking contrail attribution, it is critical to recognize that these data are incomplete, as they may lack information on particular flights because operators may request their data to be obfuscated or excluded. The implication is that there may be detectable contrails formed by flights that are missing from the data, and the benchmark needs to assess whether the attribution algorithm can handle these contrails appropriately and avoid incorrectly attributing them to the best-matching flight that is in the data. We assume that it is unknown what fraction of flights are missing or whether they are in some way biased with respect to likelihood of persistent contrail formation. Our tuning and benchmarking protocols described in Sects. 2.3 and 3.5 take this into account.

In order to provide spatiotemporal context that an attribution algorithm might need in order to resolve the attributions for contrails at the borders of the space–time regions provided by the dataset, we consider all flight waypoints that were flown at any point between 6 h before the start of each time span and 3 h after it ends. We also dilate the spatial region by 720 km in each direction, to allow contrails formed by flights outside the region to advect in from all directions without presuming anything about the wind direction. We resample each flight to CTflight=5 s in between waypoints, such that there will end up being roughly two waypoints per GOES-16 ABI pixel at typical aircraft speeds.

2.1.2 Weather data

In selecting weather data that will be used to determine synthetic contrail formation, dynamics, and evolution from the candidate flights, it is important that we do not use the same weather data as are used for flight advection in the attribution algorithm itself, as that would result in having an unrealistically low advection error. As the majority of recent approaches use the nominal ERA5 reanalysis product (Hersbach et al., 2020) from the European Centre for Medium-Range Weather Forecasts (ECMWF) for attribution, we use the control run of the ERA5 Ensemble of Data Assimilations (EDA), which has a coarser resolution than the nominal ERA5 reanalysis product. The ensemble data are at 3 h intervals and a 0.5° spatial resolution, and they are vertically discretized to 37 pressure levels that are separated by roughly 25–50 hPa. We unintentionally excluded the levels between 450 and 975 hPa, which led to some minor weather interpolation artifacts at the low end of the contrail formation altitudes (see Sect. 4.2).

The EDA control run does share an underlying model with the nominal ERA5 reanalysis; as such, shared systematic biases may exist that would not exist when relating the nominal ERA5 reanalysis to real contrail observations. See Appendix B1 for a further discussion of the appropriateness of selecting this source of weather data. Future research is necessary to identify or generate a source of weather data that achieves all of the necessary error characteristics in a fully unbiased fashion.

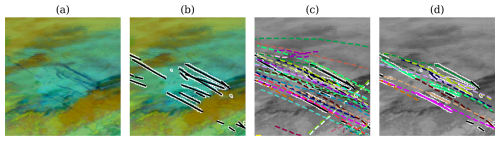

Figure 2A flow diagram of the process for generating synthetic contrails. The initial stages operate independently over each flight and determine the contributions of each flight to each relevant satellite scan. The later stages combine information from all flights that contribute to a given satellite scan and produce a contrail mask and a set of linear contrails for that scan.

2.2 Dataset generation

The process for generating the synthetic contrail detections is visualized in Fig. 2. We summarize each subroutine in the following, with further details found in Appendix A:

-

RunCoCiP. We simulate contrail formation and evolution using CoCiP (Schumann, 2012), which is a Lagrangian model simulating contrail formation and evolution, as implemented in the pycontrails library (Shapiro et al., 2024). We configure pycontrails as specified in Appendix A1. We need CoCiP to provide outputs for each flight at the times when the GOES-16 ABI Full Disk scan would have captured it. We note that the GOES-16 ABI does not capture the Full Disk scan instantaneously at the nominal scan time; rather, it captures it as 22 west-to-east swaths, starting in the north and moving south over the course of 10 min (see Appendix B2). This approach can be generalized to other geostationary satellites, as they have similar scan patterns (Okuyama et al., 2015). Each pixel then has a “scan-time offset”, based on when its location would be captured by the GOES-16 ABI relative to the nominal scan start time (Meijer et al., 2024). We do not know which pixels will capture a contrail formed by a given flight before running CoCiP. Furthermore, pycontrails can produce outputs only at fixed time intervals. Thus, in order to capture the outputs we need at the times corresponding to GOES-16 scans with the correct scan-time offsets, we configure pycontrails to produce outputs at 30 s intervals for the duration of the longest-lived contrail formed by the provided flight. If a flight does not form a contrail according to CoCiP, pycontrails will have no outputs, so we do not consider this flight any further. For flights that do form contrails, pycontrails outputs contrail properties for each contrail-forming input flight waypoint at each 30 s time step. We are, however, only interested in the properties that would manifest at the times that the GOES-16 ABI would capture the contrail. Therefore, we compute the scan-time offset corresponding to the location of each output and then select just the time step that is closest to each satellite scan plus scan-time offset for each waypoint. This results in a maximum of 15 s of error, which is negligible for our purposes (see Appendix B3). At this stage, we split up each flight's outputs according to the corresponding satellite scan and subsequent subroutines operate on them each independently.

-

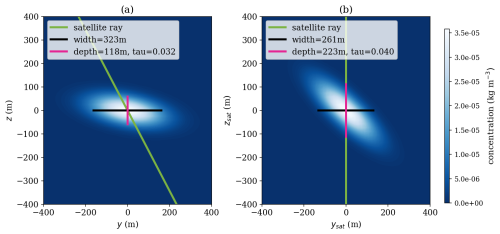

ReprojectGeostationary. The goal of this subroutine is to reproject CoCiP's outputs from its native frame of reference to the perspective of the geostationary imager. CoCiP computes the parameters of the contrail plume cross-section at each flight waypoint such that attributes like width and optical thickness are measured along a viewing ray that passes directly through the center of the contrail to the center of the Earth. In order to render off-nadir contrails in the perspective of a geostationary satellite, we need to recompute these values using the viewing ray of the instrument. The details of how this is accomplished are given in Appendix A2.

-

FilterUndetectable. This subroutine's purpose is filtering CoCiP's outputs to just those that the Ng et al. (2024) detector would be likely to find if a contrail with these physical parameters were captured by the GOES-16 ABI. This amounts to codifying whether the training data for the detector would have included a label for this contrail. It computes a per-waypoint detectability mask, considering a few criteria, as detailed in Appendix A3.

-

AdaptToDetector. Before actually rasterizing the CoCiP data, we apply some adaptations directly to CoCiP's outputs, in order to better reflect the behavior of the detector being emulated. These are specified in Appendix A4.

-

Rasterize. In this subroutine, we map the filtered and adapted CoCiP outputs to pixel values in the geostationary imager's native projection and resolution. The most important component is determining what quantity should be rasterized in order to best imitate the detector. As the Ng et al. (2024) detector exclusively operates on longwave infrared bands, when estimating detectability, we need not account for factors affecting shortwave bands such as solar insolation; the quantity that we can extract from CoCiP that will best reflect detectability is, therefore, opacity. According to the Beer–Lambert law (Beer, 1852), opacity can be expressed as , where τ is the contrail optical depth produced by CoCiP. Appendix B5 discusses the appropriateness of applying the Beer–Lambert law here. The actual rasterization process adapts the process described in Appendix A12 of Schumann (2012) to geostationary satellite imagery. This is detailed in Appendix A5. The output of this subroutine is an opacity value κras for each pixel in the geostationary image that a flight contributed to in a single frame, along with the relevant CoCiP metadata for each waypoint that contributed to the pixel.

-

CombineRasters. We can then combine the rasters for all flights at the same time step, keeping track of the per-flight contrail parameters contributing to each pixel for later analysis. For simplicity, we resolve different flights contributing to the same pixel in the final raster by taking the maximum. The more correct approach would be to sum the optical thicknesses before converting to opacity, but CoCiP does not model these inter-flight effects and, in practice, it does not matter much for our use case. In order to simulate some of the smoothing effect that the detector has over the relatively noisy satellite imagery, we apply a spatial Gaussian blur, with a standard deviation of 1 pixel, without allowing any zero-valued pixels to become nonzero. We produce a binary contrail mask by thresholding the raster by .

-

HandleOutbreaks. This subroutine addresses the mismatch between how CoCiP and the Ng et al. (2024) detector operate in regions of very high contrail density, which we refer to as “contrail outbreaks”. Generally CoCiP will cover the entire region in contrails, to the point where individual contrails cannot be identified, while the detector will only identify the few most optically thick contrails. Appendix A6 details how we adapt these regions to behave more like the detector.

-

Linearize. In this subroutine, we map the rasterized opacities, which include per-pixel attribution metadata, to linear contrail segments that can be used in a contrail-to-flight attribution algorithm. This process is a close analog to the processing that Ng et al. (2024) applied to real satellite imagery and the resulting detector outputs, although with some additional bookkeeping. The full process is described in Appendix A7.

The final dataset consists of a set of synthetic linear contrail detections, each labeled with the flight that formed it, as well as other potentially useful physical properties derived from CoCiP. The full rasterized contrail mask is also available for each satellite frame, although we only use the linearized outputs in this study.

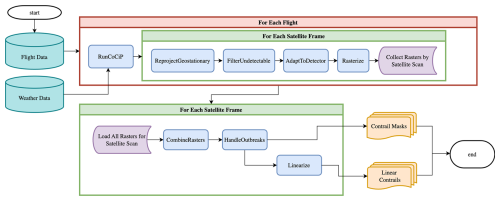

Figure 3An Ash-color-scheme false-color GOES-16 ABI image taken at 12:40 UTC on 11 July 2019 over the southeastern United States, showing the contrail mask produced by the Ng et al. (2024) detector (yellow) and the SynthOpenContrails mask (white). While the SynthOpenContrails contrails generally appear in the same regions as the detected contrails, there is far from perfect alignment, but that is unnecessary for the purposes of this dataset.

2.3 Tuning the synthetic dataset parameters

The pipeline that we have described for generating synthetic contrails includes a number of parameters whose values need to be determined. The intention here is to allow the same fundamental approach to be used to produce synthetic contrails that emulate different detection algorithms or different satellite imagers, just by setting different values for the parameters. As mentioned previously, for SynthOpenContrails, we produce synthetic contrails using the actual flights and weather model outputs corresponding to the capture times of real GOES-16 ABI Full Disk images. This allows us to tune towards matching the behavior of the Ng et al. (2024) detector on the real data.

Importantly, we divide the 84 time spans for which the dataset is generated into train, validation, and test splits, with 28 time spans each, as specified in Appendix F. This allows us to tune the dataset itself on one split, while using another split to verify that we have not “overfit” to the scenes in the split used for tuning. When the dataset is later used for tuning and benchmarking attribution algorithms, the same splits will again be useful to avoid overfitting.

We manually tune to quantitatively match the statistics for number of contrail pixels and number of linear contrails per frame. We can further qualitatively compare by overlaying the real and synthetic contrail masks on sequences of GOES-16 ABI imagery. We use the Ash color scheme, as used previously in Kulik (2019), Meijer et al. (2022), and Ng et al. (2024), to map infrared radiances to RGB imagery that makes optically thin ice clouds, like contrails, appear in dark blue. An example frame of this imagery with both real and synthetic detections overlaid is shown in Fig. 3. For tuning purposes, we compute the real and synthetic contrail detections for the full validation set of time spans and apply our comparisons over those. We note that there are likely multiple sets of parameters that match our real data equally well, and the parameters used for SynthOpenContrails are just a single instantiation of this. For example, there is likely a set of parameters that allow contrails to be detectable at an older age by increasing the width or age thresholds inside FilterUndetectable and AdaptToDetector but that compensate for the resulting increase in contrail density by having higher thresholds for rasterized contrail opacity. Therefore, we caution against attempting to extract physical insights from SynthOpenContrails, as it has been designed only for evaluating contrail-to-flight attribution and is, in essence, a filtering of CoCiP simulations. The tuned parameter values that we use for generating SynthOpenContrails are in Table 1.

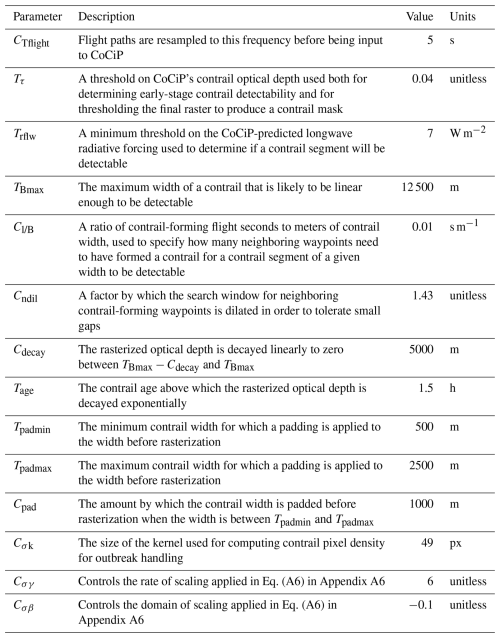

Table 1The parameter values used for generating SynthOpenContrails. Note that many of the parameters are introduced in Appendix A.

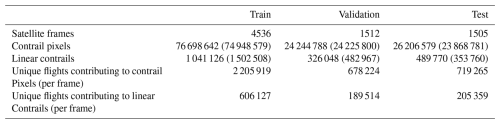

Table 2Statistics of the SynthOpenContrails splits. Values for the corresponding detector outputs on real satellite imagery are in parentheses, where applicable.

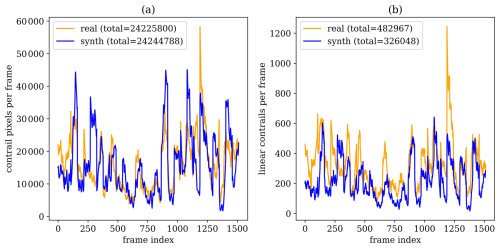

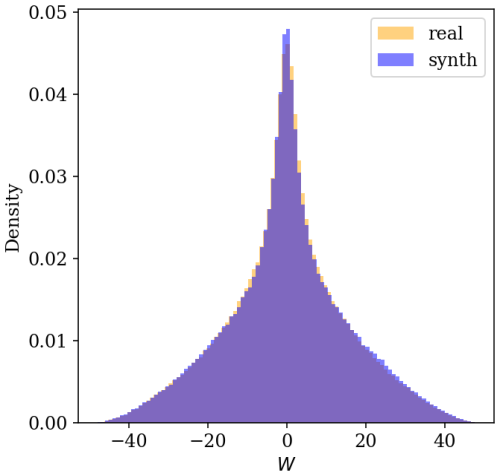

Figure 4Comparisons of contrail statistics between the outputs of the Ng et al. (2024) detector run on GOES-16 ABI imagery (in orange) and SynthOpenContrails (in blue), shown for satellite frames in the validation split. Panel (a) presents the number of contrail pixels per frame. Panel (b) shows the number of linear contrails per frame.

2.4 Properties of the SynthOpenContrails dataset

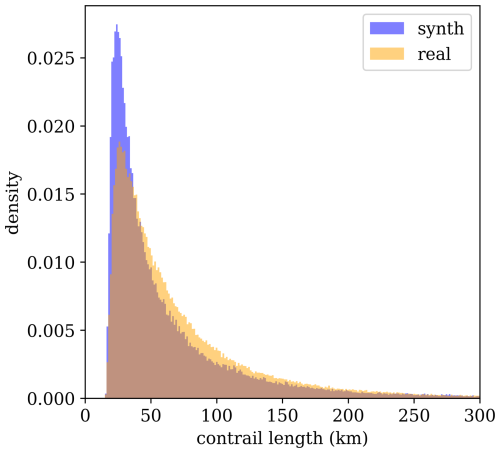

We show some top-level statistics comparing SynthOpenContrails to real detections for the same space–time regions, per dataset split in Table 2. We can also look at the per-frame contrail pixel and linear contrail counts, which are shown for the validation set in Fig. 4. The pixel counts in aggregate are very similar: there are only a few time spans during which SynthOpenContrails has meaningfully more contrail pixels and one notable span during which the real detection masks have many more pixels. On the whole, the peaks and valleys align very well. The linear contrail counts also match the overall trends, but the total counts are somewhat farther apart. The vast majority of the discrepancy comes from a single time span with a large outbreak, during which our adjustments to reduce the number of synthetic contrails in outbreaks seems to have overcompensated. We hope that future work can find a better approach to handling these cases. We can also compare the lengths of the linear contrails between real data and SynthOpenContrails, as shown in Fig. 5. The distributions match quite well, but SynthOpenContrails skews slightly shorter.

We also qualitatively evaluated the dataset with respect to how well it matches the Ng et al. (2024) detector outputs for the corresponding GOES-16 ABI scans, using visualizations like Fig. 3. We compared the geographic distribution of contrails, temporal dynamics, and the appearance of individual contrails in the mask. Of these characteristics, all appeared qualitatively similar, in the authors' opinion, with the exception of certain aspects of individual contrail appearance, as expanded upon below. We observe that the SynthOpenContrails contrail detections generally appear in the same regions as the real detections, but there is far-from-perfect alignment. While there are a few instances in which the SynthOpenContrails mask actually exposes contrails visible in the Ash-color-scheme imagery that the detector missed, the vast majority of the time the real detector better reflects what a skilled human would see in the satellite imagery. This is consistent with previous work (Gierens et al., 2020; Agarwal et al., 2022; Geraedts et al., 2024) which found that weather model data have difficulty predicting contrail formation at the per-flight level. The temporal dynamics from frame to frame do appear qualitatively similar to those of real detections. We reiterate that, for the purposes of our contrail-to-flight attribution system benchmark, it is not necessary that SynthOpenContrails be correct with respect to which flights actually formed contrails; it is only necessary that the distribution of properties of the synthetic data are similar to the real data. The individual synthetic contrails look qualitatively fairly similar to their detector-produced counterparts in overall form. The most noticeable difference is that the synthetic contrails have a slightly higher rate of appearing discontinuous. This likely arises from CoCiP evaluating each waypoint pair independently, in contrast with the smoothing tendencies of the detector. This could perhaps be rectified by a slight blurring of the CoCiP outputs across neighboring waypoints prior to rasterization. The fact that more discontinuous contrails are present in SynthOpenContrails masks does not affect CoAtSaC, as it only utilizes the linearizations of the contrail mask, which are for the most part unaffected by the discontinuities. Any attribution algorithm that directly uses the pixels within the contrail mask, however, may be affected, and this discrepancy should therefore be explored in greater detail for such approaches.

2.5 Benchmark metrics

Here, we define a set of metrics employed as the top-line results when SynthOpenContrails is used to benchmark attribution algorithm performance. The metrics are divided into per-contrail metrics and per-flight metrics. Generally the per-flight metrics will better assess the binary determination of whether a flight formed a contrail, while the per-contrail metrics will be more suitable for accounting for the number of contrails formed and how long they persisted.

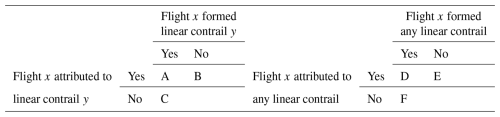

Each metric is composed of cell values from Table 3. The values in each per contrail cell, A, B, and C, are computed by joining each linear contrail in the benchmark dataset with any flight attributions that an algorithm made for that linear contrail. Each linear contrail will have zero or more attributions associated with it. If there are zero attributions, C is incremented. For each attribution, if the flight is the same as the true flight that formed the linear contrail, A is incremented. Otherwise B is incremented. The per-flight-cell values, D, E, and F, are similarly computed by grouping together all linear contrails in the benchmark dataset by the flight that formed them and similarly grouping all attributions by attributed flight. Each flight will then have zero or more linear contrails that it formed and zero or more linear contrails attributed to it. If both are zero, we ignore this flight. If the flight formed linear contrails and there are attributions to it, we increment D. If it formed linear contrails but there were no attributions, we increment F. If there were attributions but it did not form any linear contrails, we increment E.

Once the table is populated, we compute the following metrics. For each, we provide the formula and a prose definition:

-

Contrail precision, A/(A + B). The percentage of the attribution algorithm's attributions to linear contrails that are correct (note that the algorithm can choose not to attribute any flight to a linear contrail).

-

Contrail recall, A/(A + C). The percentage of linear contrails to which the algorithm has attributed the correct flight.

-

Flight precision, D/(D + E). The percentage of flights to which the attribution algorithm has attributed at least one linear contrail that also formed at least one linear contrail in SynthOpenContrails.

-

Flight recall, D/(D + F). The percentage of flights that formed at least one linear contrail in SynthOpenContrails to which the attribution algorithm has attributed at least one linear contrail (regardless of whether that specific attribution is correct).

As (1) there is substantial variation in the properties of the different time spans that might affect attribution performance (see Fig. 4) and (2) we want to avoid the statistics being dominated by the contrail- and flight-dense scenes, we do not recommend computing these metrics uniformly over all of the flights and synthetic contrail detections in the dataset. For the purposes of the benchmark, we compute a central estimate and confidence intervals of the metric value using block bootstrapping (Cameron et al., 2008). Specifically, in each of 1000 iterations, we sample, with replacement, 28 time spans (i.e., the number of time spans in each dataset split) and compute each metric from the union of those time spans. We can then compute the mean, 5th percentile, and 95th percentile from these 1000 measurements.

As the goal is to assess the performance of the attribution algorithms in isolation, these metrics are all computed relative to the filtered and adapted view of CoCiP provided by SynthOpenContrails, and they do not attempt to account for performance relative to the raw CoCiP outputs. This affects the case in which a given flight formed one or more contrails according to CoCiP, but, due to the dataset's post-processing steps, SynthOpenContrails contains no detections of its contrails. If an attribution algorithm were to attribute a synthetic detection to such a flight, it would hurt the per-flight precision and not increase its per-flight recall.

Critically, the flights used to generate SynthOpenContrails are from the same database as those that will be used for the attribution algorithm, but that database is known to be incomplete: at a minimum, military aircraft are unlikely to be fully present, which Lee et al. (2021) estimate to be 5 % of air traffic globally (although this may be higher over our region of study). In order to ensure that the attribution algorithms can handle contrails formed by flights that are missing from the database, we conservatively exclude a fixed random sample of 20 % of flights when tuning and benchmarking. The selection of this value imposes an upper bound on the metrics, which may not be realistic for an MRV system that is run by a government with access to its own military aircraft locations. Because of this, the metrics should not be interpreted directly as the performance of an attribution algorithm in the real world in an absolute sense. They should, however, provide a relative measure of performance between different attribution algorithms. We ran a sensitivity analysis on the impact of excluding different percentages of flights over the attribution algorithm from Geraedts et al. (2024), as well as the CoAtSaC algorithm introduced in Sect. 3. This showed that the recall metrics for both algorithms appear to improve linearly with the fraction of flights available. For the Geraedts et al. (2024) algorithm, the precision metrics were both unaffected by the fraction of flights excluded, whereas for CoAtSaC, the precision metrics improve linearly with the fraction of flights available. While it may be tempting to use the metrics with 100 % of flights available as an absolute measure of performance, this would only hold if the flights missing from the database are a representative sample with respect to contrail formation and attribution performance, which is unlikely to be the case. Therefore, we do not provide the metric values here.

In this study, we benchmark all attribution algorithms using the nominal ERA5 reanalysis weather data, and we recommend that future algorithms evaluating on this benchmark do the same. Using other weather data could result in the improvements over the results presented in Sect. 4 being primarily due to the weather data, rather than the algorithms themselves. As SynthOpenContrails is constructed using data from a weather model, such improvements would not necessarily even indicate the superiority of the weather data when applied to attributing real contrail detections. It is, therefore, also critical that a future attribution algorithm that uses SynthOpenContrails for tuning or benchmarking does not use the same weather data as were used to create the dataset, as specified in Sect. 2.1, because that would provide unrealistically low advection errors.

In this section, we present a novel algorithm for attributing contrails to the flights that created them and demonstrate how it can be tuned and benchmarked using SynthOpenContrails. We call this algorithm “CoAtSaC”, short for “Contrail Attribution Sample Consensus”.

3.1 Data

The inputs to our attribution algorithm consist of linear contrail detections, flight trajectories, and weather data, and they are the same as those used in Geraedts et al. (2024). The spatial regions and time spans used are the same as those for which we generated SynthOpenContrails, as specified in Sect. 2.1.

3.1.1 Contrail detections

When running on real data, we obtain our contrail detections by running the contrail detection algorithm used in Ng et al. (2024) on infrared imagery from the GOES-16 ABI Full Disk product (Goodman et al., 2020). We can alternatively consume the synthetic contrails from SynthOpenContrails as a drop-in replacement that has known ground-truth attribution.

3.1.2 Flight trajectories

We use the same database of flight trajectories provided by FlightAware (https://flightaware.com, last access: 17 July 2025) as was used for generating the synthetic dataset. As we discussed in Sect. 2.5, this dataset is incomplete; therefore, we elide a random sample of the flight data when tuning and benchmarking the dataset on synthetic contrails. We apply the same filtering and preprocessing of flight data as in Geraedts et al. (2024), to filter out erroneous waypoints and those that could not have formed contrails and to achieve a uniform frequency of waypoints across all flights. For each time span of contrail detections, we load flight data starting 2 h before the start of the span and ending at the end of the span, in order to account for the aforementioned delay between contrail formation and detection.

3.1.3 Weather data

The weather data that we use come from the European Centre for Medium-Range Weather Forecasts (ECMWF). For our attribution algorithm, we use the ARCO-ERA5 dataset (Carver and Merose, 2023), which is derived from the ERA5 nominal reanalysis product (Hersbach et al., 2020). This product comprises hourly data at a 0.25° resolution at 37 pressure levels.

3.2 Advection of flight tracks

For the purposes of our contrail attribution approach, we need to answer the following question for each flight waypoint: “Where would we expect a hypothetical contrail formed by the given flight waypoint to appear in a particular satellite scan?”. To answer this, we simulate the advection of each waypoint to each of the subsequent 11 GOES-16 ABI Full Disk images (roughly 2 h at 10 min intervals; see Appendix C1 for the implications of only advecting for 2 h). We again need to account for the GOES-16 ABI capture pattern (see Appendix B2) and compute the expected “scan-time offset” for each waypoint (Meijer et al., 2024). The set of target times for our advection is then the nominal scan times of the 11 scans, with the scan-time offset added. A small amount of error is introduced by the fact that the scan-time offset is not updated as the waypoint advects; if it advects across a capture swath boundary, the scan-time offset would jump by roughly 30 s. The advection itself is performed in exactly the same way as in Geraedts et al. (2024), which we detail in Appendix C2.

This approach to simulating flight advection is subject to a number of sources of error, including (but not limited to) inaccuracies in the interpolated weather data, approximations in sedimentation rate, and not accounting for all physical processes that can affect the vertical location of the contrail (e.g., radiative heating). We expect that these errors will compound over time. As a result, our estimation of where a hypothetical contrail would appear in a particular satellite image will be increasingly wrong as the hypothetical contrail ages, and the errors in successive satellite images will be highly correlated.

Once all flights are advected, we will have advected flights and detected contrails at each satellite frame starting 2 h before the start of a time span and ending 2 h after. This is to ensure that the attribution algorithm can consider flights and contrail detections that are near the beginning and end of the time span in the context of their temporal dynamics.

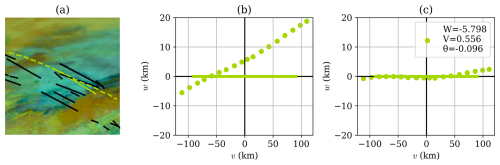

Figure 6A visualization of the single-frame matching process. This is the same scene as in Fig. 1 but focusing in on a single flight and a single contrail detection, rendered in green over a false-color GOES-16 ABI image in panel (a). In panel (b), we show the same data on the v–w plane, with the linear contrail defining the v axis and the flight waypoints projected accordingly to points (wi,vi). Panel (c) shows the results of applying the transformation in Eq. (1) after optimizing the parameters W, V, and θ in Eq. (2), producing points ().

3.3 Single-frame attribution algorithm from Geraedts et al. (2024)

CoAtSaC is an extension of the single-frame attribution algorithm from Geraedts et al. (2024). Here, we summarize just the portions of the Geraedts et al. (2024) algorithm that are critical for understanding CoAtSaC.

The algorithm defines a new 2D spatial coordinate system, which is an orthographic projection centered on a linearized detected contrail, with the v axis along the contrail and the w axis orthogonal to it (we adopt the axis names from Geraedts et al., 2024, but caution the reader not to confuse them with the conventional usage of these variables as directional wind speeds). Distances along each axis are specified in kilometers. Parallax-corrected advected waypoints of a single flight are projected onto this plane to coordinates (wi,vi). Waypoints are excluded if their vi values are outside the span of the contrail, with a small additional tolerance. An example is shown in Fig. 6b.

In this projection, the algorithm measures the advection error that would be implied if this flight formed this contrail, in terms of relative orientation and distance, which are combined into the following coordinate transformation:

The parameters W and V are translation distances along the respective axes and θ is a rotation angle. These parameters are optimized by minimizing the objective function:

which essentially tries to move the flight waypoints as close as possible to the contrail (i.e., v axis), subject to regularization terms. The coefficients Cfit, Cshift, Cangle, and Cage vary with age to allow for a higher tolerance for advection error for flights that have advected longer. The result of the optimization in Eq. (2) is visualized in Fig. 6c, showing both the transformed waypoints and the optimized parameter values. The flight is deemed to have formed the associated contrail if Sattr<3 after the optimization. Section 2.2 of Geraedts et al. (2024) includes some additional logic used to help resolve cases in which multiple flights are attributed to the same contrail detection.

This approach has a few shortcomings that we aim to improve upon. Firstly, an advected flight at a substantially different altitude than the contrail, subject to different wind speeds, could happen to align perfectly in the 2D projection in a single frame as one passes directly above the other at the moment that the satellite captured it. This flight would be erroneously attributed instead of the true flight, which likely incurred some advection error along the way. Secondly, the advection error for each flight segment is treated as independent between satellite frames, when in reality it is highly dependent. We aim to rectify these issues by leveraging the expected behavior of the advection error for the same flight segment as it advects over time.

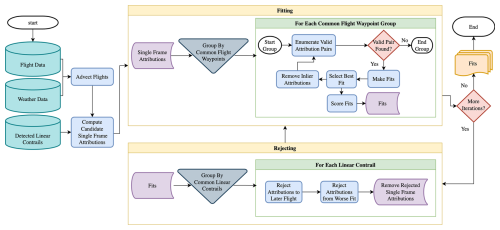

3.4 CoAtSaC attribution algorithm

CoAtSaC improves upon the single-frame algorithm by considering the temporal evolution of the transformation parameters V, W and θ from Eq. (1), with a particular focus on W. The algorithm is visualized in Fig. 7. The algorithm is composed of two stages that run alternately. The first stage, called “Fitting”, looks at all single-frame attributions to a single group of consecutive flight waypoints and leverages the expected temporal evolution of W in order to group together detections of the same physical contrail in different frames. The second stage, called “Rejecting”, combines the evidence from the first stage across multiple candidate flights for each contrail detection and uses that to determine a subset of the single-frame attributions which can be confidently rejected. “Fitting” is then run again but without the potential confounders that were eliminated in the second stage. The stages can then continue to be run for more iterations, if desired.

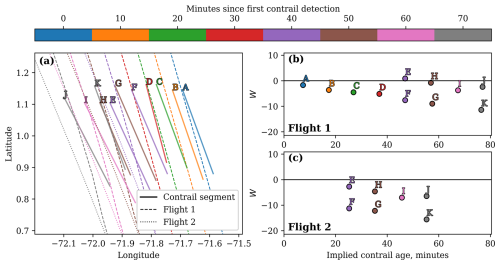

Figure 8Visualization of a contrail-to-flight attribution problem involving two flights that both formed a contrail. Panel (a) shows the detected linear contrails for a 70 min period (covering eight GOES-16 ABI Full Disk scans), accompanied by the flight tracks advected to the GOES-16 capture times. Each linear contrail and flight track is color-coded according to its corresponding satellite capture time. For Flight 1 and Flight 2, in panels (b) and (c), respectively, we show the value of the single-frame attribution parameter W, which approximately measures the advection error perpendicular to the contrail, as a function of the time between the passage of the flight and the moment of detection (i.e., the implied contrail age).

3.4.1 Case study

Before discussing the details of the algorithm, we first present a case study to provide some intuition. We consider the situation in Fig. 8a, which shows two contrails formed by two different flights over a period of 70 min. In Fig. 8a, Flight 1 passes through the domain approximately 20 min before Flight 2 and forms a contrail that is detected in seven consecutive GOES-16 ABI images (line segments A, B, C, D, F, G, and K). The contrail formed by Flight 2 (line segments E, H, I, and J) is first detected approximately 40 min after line segment A is detected. The Flight 1 and Flight 2 flight tracks, advected to the time of each relevant GOES-16 ABI image, are also shown in Fig. 8a as dashed and dotted lines, respectively. Figure 8b and c show the values of the transformation parameter W for each detected contrail for Flights 1 and 2, respectively. For the single-frame attribution algorithm, an ambiguous situation occurs 40 min after the first contrail detection, when line segment E (which is the first detection of the contrail formed by Flight 2) is close to the advected flight tracks of both flights. In fact, Sattr for line segment E is smaller for Flight 1 than for Flight 2 (which is the correct flight). Thus, a single-frame attribution algorithm may erroneously match Flight 1 to line segment E. If, however, we consider the temporal evolution of the value of W for both flights, as shown in Fig. 8b and c, we see that, for both flights, we can identify two sets of single-frame matches, each of which can be connected by a line. For Flight 1, we can imagine points A, B, C, D, F, G, and K forming such a line, while points E, H, I, and J form another line. To understand why this is the case, we note that, for a constant error in the wind data used for advection, we would expect a displacement error between the advected flight track and detected contrail that linearly increases with time, which roughly corresponds to W increasing linearly with time. Importantly, for a flight that formed a contrail, we expect the line connecting the detections to intersect the W axis near zero, implying that if the satellite could have observed this contrail forming, it would be exactly at the location of the flight waypoints before any advection. A contrail that is near an advected flight that did not form it will usually have a nonzero intercept. Considering Fig. 8b, this would lead us to attribute A, B, C, D, F, G, and K to Flight 1 (but not E, H, I, and J). Looking at Fig. 8c in isolation is somewhat more ambiguous, as E, H, I, and J, as well as F, G, and K, form lines with relatively small W intercepts for Flight 2. Only after we also see that Flight 1 forms a line that includes F, G, and K, in addition to A, B, C, and D – some of which formed before Flight 2 had even passed through the region – can we confidently conclude that Flight 2 did not form F, G, and K, but it is the best candidate to have formed E, H, I, and J.

3.4.2 Computing candidate single-frame attributions

The algorithm, based on this intuition, requires access to all single-frame attributions for each flight and the ability to analyze the temporal evolution of the W parameter (Appendix C3 discusses why we do not use V and θ also). For the time dimension of this analysis, as shown in Fig. 8b and c, we use the same “implied contrail age” as was used to set the coefficient values in Eq. (2). Specifically, this is the mean of the advection times of the included waypoints. This “implied contrail age” can vary dramatically for the same contrail detection when attributed to different flights, and the age is in no way inferred from the satellite data directly.

In order to gain access to W values that have a meaningful temporal evolution, we require slight modifications to the single-frame algorithm described in Sect. 3.3. We make the regularization coefficients Cfit, Cshift, and Cangle consistent regardless of contrail age, specifically fixing them at the values they would take on for a flight that had advected for 30 min. We also need to avoid W arbitrarily changing sign across satellite scans for the same flight and physical contrail. For the single-frame algorithm, the sign is unimportant, as the values are always squared in Eq. (2), so making the sign consistent has no negative effect on it. In order to impose consistency, we require that the advected flight be represented with v values increasing with the timestamp of the original waypoint and with positive w values being to the right with respect to the advected flight heading. Specifically, we start from the projected waypoints (wi,vi) described in Sect. 3.3. If the v value for the earliest waypoint is greater than for the latest waypoint, we multiply all of the wi and vi values by −1. For an advected flight segment that is monotonic in v as a function of time, this achieves the desired invariant. Occasionally there are advected flights that loop back on themselves, either due to unusual flight paths or unusual wind patterns, and these can result in inconsistent signs for the w values. We opt to tolerate failures in these cases, as contrails produced by these flight segments are anyway highly unlikely to be successfully attributed, or even detected, by an algorithm based on linearized detected contrails.

We ignore the score thresholds used by Geraedts et al. (2024) and, instead, keep all candidate single-frame attributions whose Sattr score is below a different, tunable threshold, TS, making them available to the “Fitting” stage.

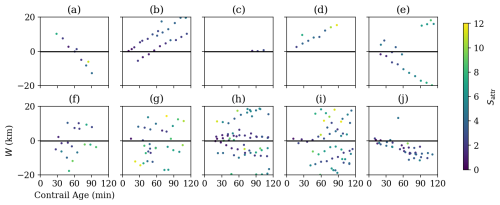

Figure 9Examples of single-frame attributions that share common waypoints of individual flights, plotted on the implied contrail age by W axes. Each single-frame attribution is color-coded according to its single-frame score Sattr. Panel (a) shows two contrails for which the detections at 60 min have a small W value and low Sattr. The single-frame algorithm would incorrectly attribute these detections to this flight; however, because of the large W intercept, we can be confident that they were formed by a different flight. Panel (b) shows three contrails, only one of which was likely actually caused by this flight. Panel (c) presents a contrail with a shallow slope and near-zero W intercept that is first detected long after this flight passed through. This was due to a later flight forming a contrail near the advection path of this flight, but such cases can also be caused by occlusion or small wind shear causing the contrail to remain undetectable for longer. Panel (d) shows a case in which the Sattr values move out of the match range for the single-frame algorithm as the contrail ages, leaving them available to incorrectly match to other flights. Panel (e) presents one long-lived contrail that is likely caused by this flight, with a few other nearby contrails that might make it tricky to fit lines correctly. Panel (f) shows a few short-lived contrails nearby that cause a danger of fitting spurious vertical lines across contrails, unless there is a prior to prefer shallow slopes. Panels (g)–(j) present examples of a higher contrail detection density that result in different degrees of difficulty in identifying the linear structures that track individual contrails.

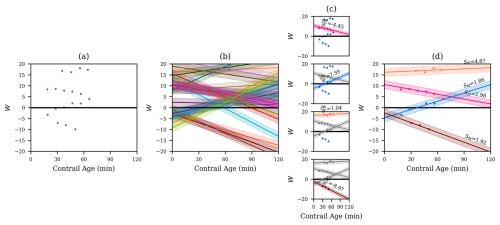

Figure 10A visual depiction of the “Fitting” stage of CoAtSaC for a set of waypoints from a single flight. Panel (a) shows the results of “Group by Common Flight Waypoints” and plots the resulting single-frame attributions in implied age by W space. In panel (b), we “Enumerate Valid Attribution Pairs” and “Make Fits”. In this example, there are 18 single-frame attributions, producing 29 pairs that satisfy the validity criteria. Each of them defines a line, which is plotted in an opaque distinct color, and a surrounding semitransparent region where other attributions would be considered inliers to this fit. Panel (c) shows the “Select Best Fit” and “Remove Inlier Attributions” processes, applied iteratively from top to bottom. In the top panel, we have all fits available, so we pick the best fit, shown in pink, with its slope above it. In the second from the top, we show the first selected fit and its inliers in gray, depicting that we have removed the inliers. We then repeat the process of generating fits from the remaining attributions and selecting the best one, shown in blue. One single-frame attribution would have been an inlier to this fit, but it was claimed by the previous fit, so it is excluded here. The process is repeated until no more candidate fits remain. In this example, four fits are produced. In panel (d), we show the four fits along with their Sfit values produced by “Score Fits”. Note that the orange fit has the highest Sfit score and the shallowest slope, meaning that we are confident that it represents a single physical contrail and also that it was not formed by this flight. Meanwhile, the pink fit has a large W intercept but a relatively low Sfit. The first round of “Fitting” generally has more of these types of fits that will then get removed in the “Rejecting” phase and will not appear in the subsequent rounds of “Fitting”.

3.4.3 Fitting

The intra-flight “Fitting” stage aims to identify groups of single-frame attributions of a given flight that are likely to be the same physical contrail. The stage as a whole is adapted from the Sequential Random Sample Consensus (RANSAC) algorithm (Torr, 1998), which similarly aims to find multiple linear structures among noisy data. An example of this stage is visualized in Fig. 10. The various subroutines of this stage are given a italicized name, for ease of reference to the flow diagram in Fig. 7, and are outlined in the following:

-

Group by Common Flight Waypoints. Having computed candidate single-frame attributions for all flights and all detected contrails, we can now group together candidate single-frame attributions that attribute detected contrails to overlapping sets of waypoints belonging to the same flight. No two resulting groups should contain attributions to the same flight waypoint. The remainder of the fitting stage operates over each of these groups independently.

Within these groups, we can then observe the temporal evolution of W for the single-frame attributions. As we saw in Fig. 8, there is a clear pattern where detections of the same contrail in nearby frames result in a W value that varies linearly in time, even when measured against a flight that did not form the contrail. We show a number of additional examples in Fig. 9, including some where identifying the linear structures is more challenging due to there being large numbers of nearby contrails.

-

Enumerate Valid Attribution Pairs. We enumerate all pairs from the set of attributions in a single group. From each pair, we can then produce a candidate line. We filter out some of these pairs if they do not satisfy the criteria of being temporally within Tt hours of each other, have an absolute slope , and have overlapping attributed waypoints. The slope term, in particular, is important for avoiding fitting lines that span multiple linear structures in the data. If the allowed slopes were unbounded, an example like Fig. 9f could end up with a near-vertical line that groups together what is likely five or six different contrails. This term, in effect, encodes an expected upper bound on the rate of W growth for a contrail. If no valid pairs are found, the Fitting stage is terminated for this group.

-

Make Fits. A pair that passes all of these conditions defines a line, with slope and W intercept Wt=0. The other attributions in the group are labeled as inliers or outliers to this line based on a residual threshold Tres. Specifically, an attribution with implied age ti and W value Wi is an inlier if . This threshold acts as a tolerance for measurement noise that is relatively independent across satellite frames, such as from contrail linearization and quantization of contrail location due to satellite image resolution. Another fairly common scenario that this helps with is if a contrail is detected as a single linear contrail in one frame but is split in two, lengthwise, in the subsequent frame. The attributions to the two smaller contrails would end up with slightly different implied ages than if they were merged, but they likely have the same W value, so the residual allowance enables them to still be inliers. This process of computing fit lines and inliers is shown in Fig. 10b. Hereafter, we refer to the fit line and its set of inliers as a “fit”, and we note that a single-frame attribution can be an inlier to more than one fit at this stage.

-

Select Best Fit. The goal of this subroutine is to identify the candidate fit that is most likely to represent a single physical contrail, irrespective of whether the contrail was formed by this flight. Multiple single-frame attributions attributing a single physical contrail to a flight that did not form it will still form a line, but the line will generally have a nonzero intercept, Wt=0. We do not prioritize finding fits with near-zero intercepts, as it is often easy to spuriously fit a line that spans multiple physical contrails and has a near-zero intercept. Given the set of candidate fits, we select the best fit to be the one with the most inliers. We break ties by selecting the fit with the smallest absolute slope, as steep slopes are more likely to join together different physical contrails, particularly in scenes with many short-lived contrails, like Fig. 9f. The best fit is then stored as an output of the “Fitting” stage.

-

Remove Inlier Attributions. We remove all of the best fit's inliers from the set of candidate attributions in the group. We then return to “Enumerate Valid Attribution Pairs” with the remaining candidate single-frame attributions, repeating until a valid pair cannot be found. This is shown in Fig. 10c.

-

Score Fits. At the end of “Fitting”, we have some number of fits for each group of flight waypoints. Unlike in “Select Best Fit”, where our goal was just to identify fits that most likely represent a single physical contrail, independent of whether it was formed by this flight, we can now make an initial determination of whether the contrail in each fit was likely to have been formed by this flight. To this end, we compute a score as

where is the absolute value of the slope of the fit line; is the absolute value of the W intercept of the fit line; and Cslope, Cintercept, and Csingle are tunable coefficients. This encodes the assumption that a small W intercept, combined with a low minimum Sattr (which primarily helps avoid substantial rotation error) are indicators that the contrail tracked by this fit was formed by this flight. The presence of the slope term is perhaps surprising, as information about the slope was already used in the “Make Fits” and “Select Best Fit” subroutines. The black-box optimizer described in Sect. 3.5 could have set Cslope to zero and did not, but we can only speculate as to why. We hypothesize that it may be due to “Select Best Fit” only considering slope in the context of ties in the number of inliers. In a scene with many short-lived contrails nearby (Fig. 9g, for example), this could produce fits with moderately steep slopes that cut across many physical contrails and, therefore, have more inliers than the fits that only contain a single contrail. The slope term here then allows such fits to have high Sfit values and to, thus, likely be handled by the “Rejecting” phase. The results of the scoring process can be seen in Fig. 10d.

The “Fitting” stage does not itself act on the Sfit score, but a subsequent “Rejecting” stage will consume these scores, and the final time “Fitting” is run, these scores will determine the final attribution decisions.

3.4.4 Rejecting

Whereas the “Fitting” stage uses evidence from one flight at a time to make assessments about which of its single-frame attributions are correct, the inter-flight “Rejecting” stage combines this evidence across flights to eliminate as many incorrect single-frame attributions as possible. Without this stage, there is a strong possibility that the “Fitting” stage would produce fits for multiple flights containing the same contrail detections, all with Sfit scores below the target threshold. This is not inherently problematic, as there can be errors in the contrail detection process that result in merging together distinct contrails. Even when that is not the case, we could express some of the uncertainty in the algorithm by dividing the attribution between multiple candidate flights with different confidences. However, there are cases in which looking across the different flights that have fits containing the same contrail can be used to refine our results.

The existence of the “Rejecting” stage also allows for “Score Fits” to be somewhat more permissive in allowing uncertain fits through to the next stage. For example, in Fig. 10d, the pink fit has an Sfit score just below the threshold that would result in a positive attribution decision, despite having a relatively large W intercept. In most cases, a fit like this is unlikely to result in a correct attribution. In cases of substantial linearization error, however, such a fit can produce correct attributions. Without a “Rejecting” stage the optimal strategy would be to score such a fit above the threshold and not attribute the correct cases. However, by considering further evidence from other flights, the vast majority of the incorrect cases can be ruled out and correct ones can be kept.

The subroutines of “Rejecting”, each given an italicized name to correspond to Fig. 7, work as follows:

-

Group By Common Linear Contrails. The mechanism for combining information across flights is to group together fits produced by the “Fitting” stage that contain attributions to the same detected linear contrail. As fits contain attributions to multiple detected linear contrails, the same fit can end up in multiple such groups.

-

Reject Attributions to Later Flights. The first case of interest is if any pair of fits share at least two contrail detections and if one of them also includes contrail detections that predate the other flight waypoints. In this case, we can assume that the later flight just flew very close to the existing contrail, and we reject the single-frame attributions between the common contrails and the later flight. An example of this can be seen in Fig. 8, where a fit to contrails F, G, and K for Flight 2 might have produced a low Sfit score. Only when we consider Flight 1's fit to A, B, C, D, F, G, and K do we notice that Flight 1's fit includes all of the contrail observations from Flight 2's fit as well as four earlier ones, some of which were observed before Flight 2 even passed through. With access to that information, we can confidently say that Flight 2 did not form this contrail.

-

Reject Attributions from Worse Fit. The second case relies on the quality of the fits produced in the “Fitting” stage. As we saw in Fig. 10, some fits that it produces have W intercepts far from zero, implying a low likelihood that the constituent single-frame attributions are correct. This and other measures of fit quality factor into the Sfit score. Therefore, we compare these values for each of the fits, and if any is more than a threshold Tb higher than the lowest value, we reject all of its single-frame attributions as well. In Fig. 10d, the orange and pink fits, as well as their constituent single-frame attributions, which have W intercepts far from zero, should be eliminated by this process, assuming that the algorithm has access to the flights that did form those contrails.

-

Remove Rejected Single Frame Attributions. The single-frame attributions that were rejected as a result of the two prior subroutines are then removed from the set of candidate single-frame attributions made available to the next iteration of “Fitting”. As more confidently incorrect single-frame attributions get removed, fitting lines to the messier cases – like Fig. 9g–j – becomes easier.

3.4.5 Final attribution decisions

In principle, one could iterate between “Fitting” and “Rejecting” arbitrarily many times, until the algorithm converges. Note that the “Fitting” stage should always be run last. In practice, with the tuned parameter values that we use, there are very few remaining contrails attributed to multiple flights after running just “Fitting–Rejecting–Fitting”. The resulting fits define the final attribution decision for their constituent detected contrails, which is determined by Sfit<3, with the value 3 being chosen for consistency with Geraedts et al. (2024).

3.4.6 Scalability

A critical benefit of CoAtSaC is that it, like the Geraedts et al. (2024) algorithm, is highly scalable. The “Fitting” stage can be parallelized over flights, and the “Rejecting” stage can be parallelized over contrail detections. This lends itself well to being implemented in the Dataflow Model (Akidau et al., 2015) using a framework like Apache Beam (Apache Software Foundation, 2024). In principle, this enables the algorithm to scale to all flights and all contrail detections globally, where the speed of the algorithm is proportional to the number of compute nodes provided to it. This is in contrast to approaches like Chevallier et al. (2023) that optimize over a full graph of flights and contrail detections, which requires holding the complete graph in the memory of a single computer.

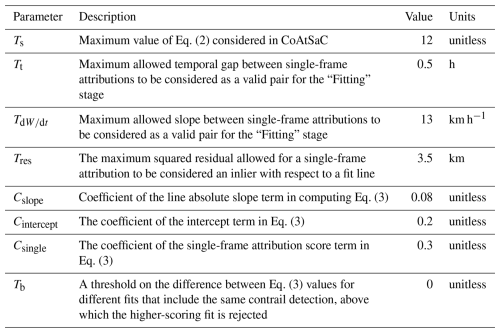

3.5 Tuning the attribution algorithm

Given a dataset of synthetic linear contrails labeled with the flight that formed them, divided by time span into train, validation, and test splits, we can then apply it both to tuning and to benchmarking an attribution algorithm. Specifically, we simply run the attribution algorithm using SynthOpenContrails's linear contrails instead of detector-produced contrails and then drop 20 % of flights (as discussed in Sect. 2.5) and compare the resulting attributions to the ground-truth labels that we have for each synthetic linear contrail. From that, we can compute the metrics of interest, as defined in Sect. 2.5.

Using this setup, we apply Google Vizier (Golovin et al., 2017) as a black-box optimization service to search through the space of parameters of CoAtSaC, aiming to find the optimal set producing the highest values for the four metrics of interest using the train split of SynthOpenContrails. We can simultaneously monitor performance on the validation split to ensure that the optimizer has not overfit. How one chooses to prioritize each of the metrics relative to each other – an increase in one often leads to a decrease in another – depends largely on the intended use case for the attributions. If the goal is an MRV system that aims to capture the largest possible fraction of contrail warming – while tolerating some inaccuracies in the specifics – contrail recall might be the most important metric. If one instead aims to generate training data for a contrail forecast model, where noise in the labels could impair the model, flight precision might be the better metric. Using the attributions to evaluate a contrail avoidance trial might require more of a balance between the metrics, depending on the size of the trial. For the purposes of this study, we slightly prioritized flight precision, while keeping the other metrics above reasonable performance thresholds. The parameters chosen by this tuning are given in Table 4.

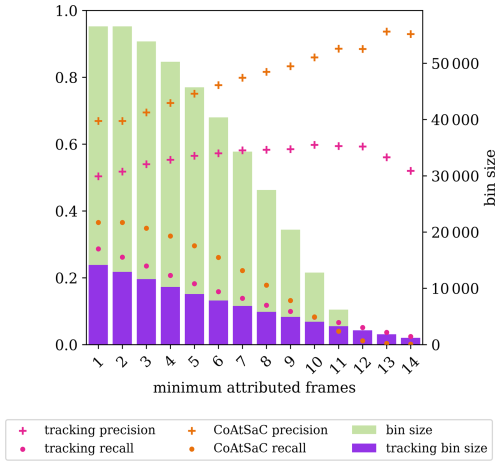

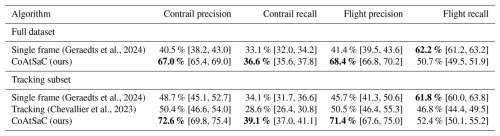

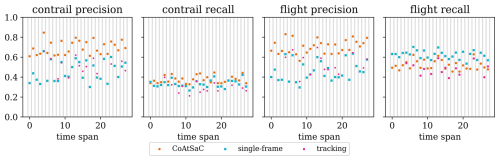

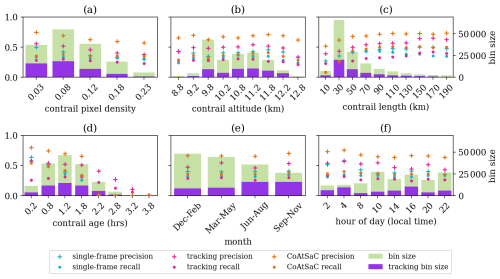

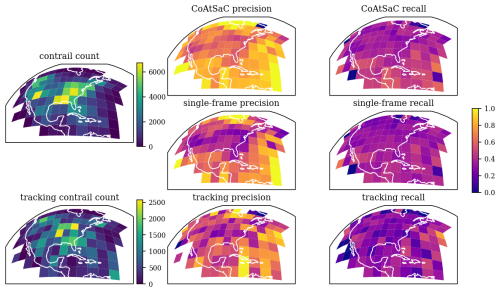

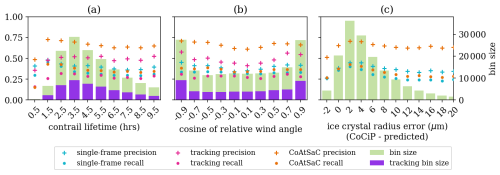

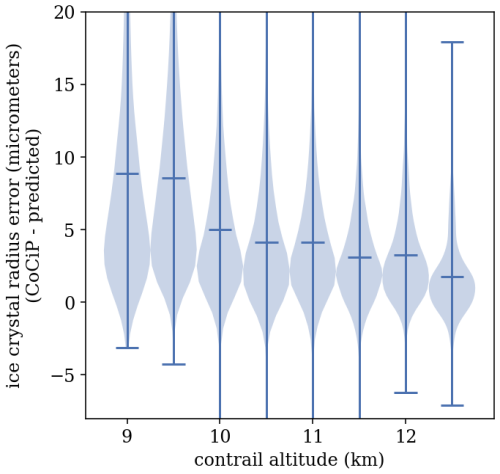

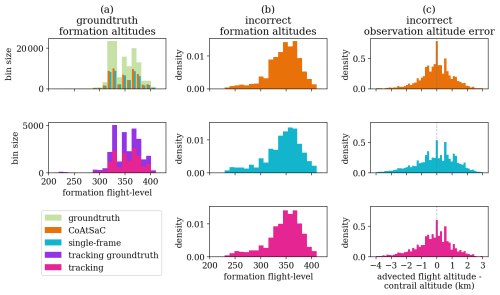

4.1 Benchmarking attribution algorithms on SynthOpenContrails

We compare the performance of CoAtSaC with the single-frame algorithm of Geraedts et al. (2024) and the tracking algorithm of Chevallier et al. (2023) on the metrics specified in Sect. 2.5 over the SynthOpenContrails test split. Both of the previously published algorithms were slightly modified, as detailed in Appendix D, in order to produce these results, but they were not retuned. Importantly, the tracking algorithm was adapted to operate on the linearized contrails, rather than the contrail instance masks for which it was designed, which may have negatively impacted its performance metrics presented here. Due to time and computational constraints, the tracking algorithm was only evaluated on half of the time spans in the test split, as detailed in Table F3 in Appendix F. This subset is hereafter referred to as the “tracking subset”.