the Creative Commons Attribution 4.0 License.

Testing the performance of field calibration techniques for low-cost gas sensors in new deployment locations: across a county line and across Colorado

Joanna Gordon Casey

Michael P. Hannigan

We assessed the performance of ambient ozone (O3) and carbon dioxide (CO2) sensor field calibration techniques when they were generated using data from one location and then applied to data collected at a new location. This was motivated by a previous study (Casey et al., 2018), which highlighted the importance of determining the extent to which field calibration regression models could be aided by relationships among atmospheric trace gases at a given training location, which may not hold if a model is applied to data collected in a new location. We also explored the sensitivity of these methods in response to the timing of field calibrations relative to deployment periods. Employing data from a number of field deployments in Colorado and New Mexico that spanned several years, we tested and compared the performance of field-calibrated sensors using both linear models (LMs) and artificial neural networks (ANNs) for regression. Sampling sites covered urban and rural–peri-urban areas and environments influenced by oil and gas production. We found that the best-performing model inputs and model type depended on circumstances associated with individual case studies, such as differing characteristics of local dominant emissions sources, relative timing of model training and application, and the extent of extrapolation outside of parameter space encompassed by model training. In agreement with findings from our previous study that was focused on data from a single location (Casey et al., 2018), ANNs remained more effective than LMs for a number of these case studies but there were some exceptions. For CO2 models, exceptions included case studies in which training data collection took place more than several months subsequent to the test data period. For O3 models, exceptions included case studies in which the characteristics of dominant local emissions sources (oil and gas vs. urban) were significantly different at model training and testing locations. 
Among models that were tailored to case studies on an individual basis, O3 ANNs performed better than O3 LMs in six out of seven case studies, while CO2 ANNs performed better than CO2 LMs in three out of five case studies. The performance of O3 models tended to be more sensitive to deployment location than to extrapolation in time, while the performance of CO2 models tended to be more sensitive to extrapolation in time than to deployment location. The performance of O3 ANN models benefited from the inclusion of several secondary metal-oxide-type sensors as inputs in five of seven case studies.

In places like the Denver-Julesburg (DJ) and San Juan (SJ) basins, along Colorado's Front Range and in the Four Corners region, oil and gas production activities have been increasing with the advent of horizontal drilling that can be effectively used in conjunction with hydraulic fracturing to produce hydrocarbons from unconventional geologic formations. Public health concerns have arisen about the increasing number of people living alongside these industrial activities and emissions (Adgate et al., 2014; McKenzie et al., 2014; McKenzie et al., 2012, 2017). We previously developed methods to quantify ozone (O3), carbon dioxide (CO2), methane (CH4), and carbon monoxide (CO) using low-cost gas sensors in an area where the ambient mole fractions of these species are influenced by oil and gas production activities (Casey et al., 2018). Such low-cost sensor measurements could enable greater understanding of air quality in oil and gas production basins, informing the spatial and temporal scales on which people live and work in a way that current technologies used by regulatory agencies cannot feasibly accomplish. In our previous work, we tested and compared the performance of direct and inverted linear models (LMs) as well as artificial neural networks (ANNs) as regression tools in the field calibration of low-cost sensor arrays to quantify these target gas species using month-long test datasets, training each model with approximately 1 month of data prior to and 1 month of data subsequent to this test period. ANNs are powerful pattern recognition tools. They were found to perform better than both inverted and direct LMs in our previous study, but concerns arose when findings suggested that the performance of ANNs was being augmented by the relationships among gas mole fractions in the atmosphere at a given location. Low-cost gas sensor systems have the potential to inform spatial and temporal variability in pollution. 
Calibration equations for each sensor system can be generated in one location based on co-located measurements with reference instruments, and then the sensor systems can be moved into a spatially distributed network. Since the relationships among gas mole fractions will differ at different sampling sites across a spatially distributed network, calibration models may not hold at new sampling sites. In this work, we test calibration model performance when extended to new locations.

1.1 Low-cost sensors for air quality measurements

The use of low-cost metal oxide, electrochemical, and nondispersive infrared sensors to characterize air quality is becoming increasingly common across the globe (Clements et al., 2017; Kumar et al., 2015). While low-cost sensors have been emerging on the market with sufficient sensitivity to resolve variations in ambient mole fractions of target gases of interest, they are also sensitive to temperature and humidity variations that occur in the ambient environment. Nondispersive infrared (NDIR) sensors, like the ELT S-300 CO2 sensor employed in this study, have good selectivity, but, since pressure and temperature are not controlled in the optical cavity of ELT S-300 CO2 sensors, the influence of temperature on sensor signals plays an important role. The influence of humidity is also important to address because changes in water vapor are known to influence NDIR measurements of CO2 in terms of spectral cross-sensitivity due to absorption band broadening (LI-COR, 2010).

Both metal-oxide- and electrochemical-type sensors operate on the principle of oxidizing or reducing reactions at sensor surfaces. For electrochemical sensors, like the Alphasense CO-B4 sensor employed in this study, oxidizing or reducing compounds react at the working electrode, resulting in the transfer of ions across an electrolyte solution from the working electrode to the counter electrode, balanced by the flow of electrons across the circuit connecting the working electrode to the counter electrode. A linear relationship is expected between this current and the target gas mole fraction. Electrochemical sensors can be tuned to respond more or less strongly to specific gases by adjusting the material properties of the working electrode. A membrane is located between the working electrode and the exterior of the sensor in order to control redox reaction rates. The rates at which gases diffuse through the membrane to reach the working electrode and the electron transfer rates have been shown to increase at higher temperatures (Xiong and Compton, 2014), and since chemical reaction rates are also influenced by temperature, electrochemical sensor responses can be influenced by sensor operating temperature. Changes in ambient humidity levels can cause sensors to lose or gain the electrolyte solution, by mass, also influencing electrochemical sensor response (Xiong and Compton, 2014).

For metal oxide sensors, and to a lesser extent for electrochemical sensors, resolving the response of a sensor attributable to the target gas species can also pose a challenge in the presence of interfering gas species. Metal oxide sensors, like those used in this study, have a resistive heater circuit that warms up the sensor surface, causing O2 molecules to adsorb to the sensor surface, which leads to increased resistance across the surface of the sensor. In the presence of an oxidizing compound, like O3, more oxygen molecules are adsorbed to the sensor surface and the resistance across the sensor surface is increased further. In the presence of a reducing compound, like CO, oxygen molecules are removed from the sensor surface, allowing electrons to flow more freely, resulting in decreased resistance across the sensor surface. For metal oxide sensors, the resistance across the sensor surface can then be used to determine the mole fraction of a given oxidizing or reducing compound, often according to a nonlinear relationship. Exposure to humidity has been shown to significantly lower the sensitivity of metal oxide gas sensors, making it an important parameter to address in a gas quantification model (Wang et al., 2010). Metal oxide sensor operating temperature has also been shown to strongly influence sensor sensitivity and selectivity to different gas species (Wang et al., 2010). Metal-oxide-type sensors can be tuned to respond differently from one another to oxidizing and reducing gas species by using different metal oxide materials and doping agents for the sensor surface, but selectivity is difficult to achieve.

1.2 Low-cost air quality sensor quantification

Because low-cost gas sensor signals are influenced, sometimes significantly, by interfering gas species and changing weather conditions in the ambient environment, field normalization methods to quantify atmospheric trace gases using low-cost sensors have been found to be more effective than lab calibration (Cross et al., 2017; Piedrahita et al., 2014; Sun et al., 2016). Our previous study and several others have compared the performance of field calibration models generated using LMs (simple and multiple linear regression) relative to supervised learning methods (including ANNs and random forests), all finding that ANNs (Casey et al., 2018; Spinelle et al., 2015, 2017) and random forests (Zimmerman et al., 2017) outperformed LMs in the ambient field calibration of low-cost sensors. Like earlier laboratory-based studies (Brudzewski, 1999; Gulbag and Temurtas, 2006; Huyberechts and Szeco, 1997; Martín et al., 2001; Niebling, 1994; Niebling and Schlachter, 1995; Penza and Cassano, 2003; Reza Nadafi et al., 2010; Srivastava, 2003; Sundgren et al., 1991), ANN-based calibration models, incorporating signals from an array of gas sensors with overlapping sensitivity as inputs, have been able to effectively compensate for the influence of interfering gas species and resolve the target gas mole fraction.

ANNs are known to be able to very effectively represent complex, nonlinear, and collinear relationships among input and output variables in a system (Larasati et al., 2011). ANNs are useful in the field calibration of low-cost sensors because, through pattern recognition of a training dataset, they are able to effectively represent the complex processes and relationships among sensors and the ambient environment that would be very challenging to represent analytically or based on empirical representation of individual driving relationships. In practice though, the reason multiple gas sensors are able to improve the performance of calibration models may be in part the result of correlation among mole fractions of target gases themselves that hold for one model training location, but might not remain effective at alternative sampling sites or during other time periods.
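The concern raised above can be illustrated with a small synthetic sketch. It is not the calibration approach used in this study: the sensor response, the cross-sensitivity value, and the gas ratios at each site are all hypothetical, chosen only to show how a regression fitted where an interfering gas co-varies with the target gas can look accurate at the training site and become biased where that relationship differs.

```python
def fit_line(x, y):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def rmse(predicted, actual):
    """Root-mean-square error between two equal-length sequences."""
    return (sum((p - a) ** 2 for p, a in zip(predicted, actual)) / len(actual)) ** 0.5


def sensor_signal(target, interferent, cross_sensitivity=1.0):
    # Hypothetical sensor that responds to the target gas and to an interfering gas.
    return [t + cross_sensitivity * i for t, i in zip(target, interferent)]


target = [float(v) for v in range(20, 70, 5)]  # synthetic target gas values (ppb)

# Training site (assumed): the interferent co-varies strongly with the target gas.
train_signal = sensor_signal(target, [0.5 * t for t in target])
slope, intercept = fit_line(train_signal, target)

# New site (assumed): same sensor, weaker target-to-interferent relationship.
new_signal = sensor_signal(target, [0.1 * t for t in target])

train_pred = [slope * s + intercept for s in train_signal]
new_pred = [slope * s + intercept for s in new_signal]

train_rmse = rmse(train_pred, target)
new_site_rmse = rmse(new_pred, target)
print(f"training-site RMSE: {train_rmse:.2f} ppb")
print(f"new-site RMSE:      {new_site_rmse:.2f} ppb")
```

In this toy case the fitted slope silently absorbs the training-site ratio of interferent to target, so the model underpredicts wherever that ratio is lower. Real sensor arrays and ambient conditions are considerably more complex.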

1.3 Summary of previous study

Our previous study was carried out using sensor measurements collected over the course of several months in the spring of 2017, in Greeley, Colorado, which lies within the Denver-Julesburg oil and gas production basin. Others had recently demonstrated the utility of machine learning methods in the quantification of atmospheric trace gases using arrays of low-cost sensors in urban (De Vito et al., 2008, 2009; Zimmerman et al., 2017) and rural (Spinelle et al., 2015, 2017) areas. Our previous study tested the relative performance of machine learning methods and LMs in the quantification of CH4, O3, CO2, and CO in an area strongly influenced by oil and gas production activities, where enhanced levels of hydrocarbons and other industry-related pollutants could potentially confound measurements. The previous study was also the first to compare machine learning regression techniques with LMs toward the quantification of CH4 using arrays of low-cost sensors in any setting. The study tested and compared calibration models using data from two U-Pod sensor systems containing arrays of low-cost gas sensors; these systems were co-located with optical gas analyzers at a Colorado Department of Public Health and Environment monitoring site. ANNs and LMs were trained using a variety of sensor signal input sets from a month of co-located data collected prior to and following a month-long test period. The performance of each model was then evaluated relative to reference instrument measurements during the test period. For quantification of all four trace gases that we tested in this oil- and gas-influenced setting, we found that ANNs performed better than LMs. The better performance of ANNs over LMs was likely largely attributable to the ability of ANNs to more effectively represent complex and nonlinear relationships among sensor responses, environmental variables, and trace gas mole fractions than LMs. 
However, the performance of these powerful regression methods could be aided by relationships among atmospheric trace gases specific to the training location, which would not necessarily hold at different sampling sites.

1.4 Spatially distributed networks of sensors and spatial extension of calibration models

Distributed spatial networks of low-cost sensor systems have the potential to inform air quality with high spatial and temporal resolution. As such, studies have begun to deploy spatial networks of low-cost sensor systems. These studies rely on the spatial transferability of quantification techniques. In the present work, we test model performance under conditions of spatial transferability, wherein a model is trained using data from one location and then applied to a test dataset using data from a new location. In testing spatial extension of a model, we investigate how well the field calibration of low-cost sensors can inform target gas mole fractions when sensors are deployed in a new location and a new microenvironment of oxidizing and reducing compounds. We also test model performance under conditions of temporal extension, wherein a model is trained using data that was collected only prior or subsequent to the model application period. In testing temporal extension of models, we investigate how model performance is influenced by sensor drift over time. We opportunistically utilize measurements collected with low-cost sensors in Denver, Boulder County, and the DJ and SJ oil and gas production basins in recent years. This effort focuses on the analysis for O3 and CO2 using both LMs and ANNs, including a comparison of models with a number of different input sets. In previous work (Casey et al., 2018), we have additionally addressed the quantification of CO and CH4 using arrays of low-cost sensors together with field normalization methods, but these species are not included in the present analysis because analogous reference data to those we present for O3 and CO2 were not available for CO and CH4.

1.5 Oil and gas production and air quality

Emissions related to oil and gas production, namely nitrogen oxides (NOx) and volatile organic compounds (VOCs), have been shown to influence tropospheric ozone (O3), which is particularly relevant in regions that are in non-attainment of the United States Environmental Protection Agency (USEPA) National Ambient Air Quality Standards (NAAQS) for ozone, like the Colorado Front Range where the DJ Basin is situated. NOx and VOC emissions, including those from oil and gas production activities, react in the atmosphere in the presence of sunlight to form tropospheric O3. A number of studies have demonstrated that oil- and gas-related emissions contribute to increased O3 in the DJ Basin (Cheadle et al., 2017; Gilman et al., 2013; McDuffie et al., 2016). Mole fractions of ozone as high as 140 and 117 ppb during winter months have also been observed and attributed directly to oil and gas production emissions in the Upper Green River basin of Wyoming and Utah's Uinta Basin, respectively (Ahmadov et al., 2015; Edwards et al., 2013, 2014; Field et al., 2015; Oltmans et al., 2016; Schnell et al., 2009). Additionally, a modeling study concluded that oil and gas production activities could significantly impact ozone near emissions sources, beginning 2 and 8 km downwind of compressor engine and flaring activities, respectively (Olaguer, 2012).

Emissions of industry-related air pollutants, including O3 precursors NOx and VOCs, are expected to occur on spatially distributed scales, across components on well pads, transmission lines, transportation routes, and gathering stations that are each distributed throughout production basins (Litovitz et al., 2013; Mitchell et al., 2015; Allen et al., 2013). Spatially distributed networks of low-cost sensors have the potential to better inform spatial variability in air quality than existing regulatory air quality monitoring stations, which cannot feasibly cover such spatially resolved measurements continuously and may not be representative of air quality across smaller spatial scales (Bart et al., 2014; Jiao et al., 2016; Moltchanov et al., 2015). Abeleira and Farmer show that ozone production throughout much of the Front Range, outside of downtown Denver, is likely to be NOx limited, implying that local NOx sources are likely influencing ozone on small spatial scales (Abeleira and Farmer, 2017). Oil- and gas-industry-related NOx sources, such as diesel truck traffic, flaring, and compressor engines, could lead to pockets of elevated O3 throughout the DJ Basin. While emissions from truck traffic (and in some cases a generator to power a drill rig) at a given well pad are expected to be highest during the drilling, stimulation, and completion phases, industry truck traffic often persists as the contents of produced water and condensate tanks are frequently collected from well pads throughout the production phase, as do emissions from flaring and compressor engines. Low-cost O3 sensors could augment the few and far apart regulatory sites that currently monitor O3 levels in places like the DJ Basin, which has better coverage than many other production basins in the United States. 
While elevated ambient CO2 levels are not directly harmful to human health, continuous CO2 measurement can provide information about nearby combustion-related pollution and atmospheric dynamics that lead to the accumulation of potentially harmful compounds associated with the oil and gas production industry during periods of atmospheric stability.

In this work, we present and compare models designed to address the unique challenges that come with using low-cost sensors in the quantification of atmospheric trace gases of interest in oil and gas production basins, where ambient hydrocarbon mole fractions are potentially elevated, exerting a uniquely confounding influence on low-cost gas sensors. Calibration models that were found to perform best in our previous study are applied to data collected in different locations. For the first time, we investigate how well models can be transferred from one microenvironment to another, with different dominant local emissions source characteristics and different relative abundance of oxidizing and reducing compounds. Microenvironments explored in this work include a basin where both natural gas and heavier hydrocarbons are produced (the DJ Basin) and a basin where natural gas is prominently produced (the SJ Basin), with much smaller proportional emissions of heavier hydrocarbons and likely lower atmospheric concentrations of alkanes, alkenes, and aromatics. Within and bordering the DJ Basin, additional microenvironments include an urban location, with significant mobile source emissions (NOx, CO, and VOCs), and a peri-urban site with fewer mobile emissions and closer proximity to oil and gas production activities. We explore how robust model performance is when a model is trained in one microenvironment and transferred to another, challenged by different relative abundances of oxidizing and reducing gas species. Additionally, we test how well models can represent and address sensor stability over time and the potential for drift.

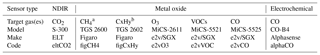

2.1 Sensors and U-Pods

All U-Pod sensor systems (mobilesensingtechnology.com) employed in the case studies, described below, were populated with seven low-cost gas sensors, as in our previous study (Casey et al., 2018). The gas sensors are listed in Table 1 along with their target gas and the model input codes we assigned to each. A RHT03 sensor was used in each U-Pod to measure temperature (temp) and relative humidity (RH). A Bosch BMP085 sensor was used to measure pressure in each U-Pod.

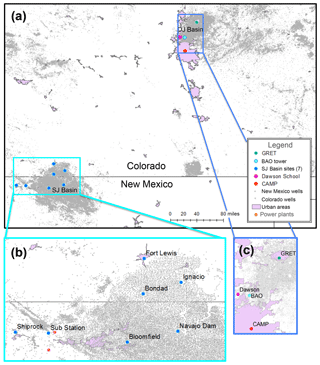

Figure 1. (a) Training and test deployment locations are identified in the SJ and DJ basins in context with urban centers and oil and gas production wells. (b) Panel zoomed in on the SJ Basin, covering approximately 11 000 km² (137 × 80 km). (c) Panel zoomed in on the DJ Basin, covering approximately 4000 km² (45 × 89 km).

2.2 Case studies

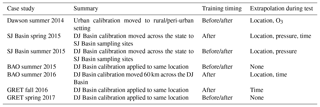

Five to 10 U-Pods were deployed at sampling sites in and around the DJ and SJ basins from 2014 to 2017. Deployments generally consisted of co-location with reference measurements prior to and following approximately 1-month periods of spatially distributed measurements. During some of the distributed measurement periods, a subset of U-Pods remained co-located with reference instruments where the field calibrations took place. During some distributed measurement periods, some U-Pods were also deployed in new locations that were equipped with reference measurements. In between periods of distributed sensor system deployments, sensor systems were co-located with reference instruments for as long as possible, as logistics and coordination with other regulatory agencies and researchers would allow. In this way, we hoped to maximize our ability to encompass full ranges of temperature, humidity, and trace gases that occur across seasons in order to minimize extrapolation with respect to these parameters when models were applied to measurements from distributed deployment periods. The locations where all or a subset of U-Pods were co-located with reference instruments are indicated in Fig. 1. In this exploratory study, we opportunistically employ data from these sensor deployments, treating them as case studies in order to characterize the performance of field calibration models when they are extended to new locations. For each case study, described below, data were divided into training and test periods. Time lines for these dataset pairs are detailed in Fig. 2. Some U-Pods employed in these case studies (indicated in grey font in Fig. 2) were constructed, populated with sensors, and deployed at field sites in the spring of 2014, approximately a year before the rest of the U-Pods were constructed, populated with sensors, and deployed at field sites in the spring of 2015. 
The relative age of sensor systems included in some case study comparisons could have contributed to some discrepancy in model performance, though systematic differences based on U-Pod age are not apparent.

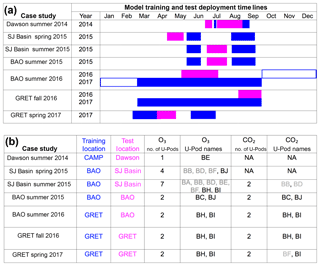

Figure 2. (a) ANN and LM training and test deployment time lines. The Dawson, BAO, and GRET sampling sites are all located in the DJ Basin. Model training periods for each test deployment are shown in blue, and model test periods are shown in magenta. For the BAO summer 2016 case study, the period outlined in blue shows data that were used to train the O3 model but not CO2 models since CO2 reference data were not available during winter months. (b) Information about each of the case studies presented in the above time lines, including model training and testing locations, as well as the number and names of U-Pods included in each case study for both O3 and CO2 models. The U-Pods with names shown in grey were constructed and deployed starting in May 2014. The U-Pods with names shown in black were constructed and deployed starting in April 2015.

As available data from each case study allowed, we used approximately 1 month of training data before and after a given test period. When training data were not available within several months of a test period, significantly longer training datasets were used in order to attempt to capture and effectively represent trends in sensor drift over time, as well as to avoid extrapolation of model parameters (particularly temperature) during the test data period. As a result, model training durations varied across case studies and sometimes significantly exceeded model testing durations. Each case study is similar in representing an approximately 1-month-long deployment of sensor systems. This study design serves a primary goal of this work, supporting the quantification of atmospheric trace gases from low-cost gas sensor data in new locations, relative to model training locations, for periods of approximately 1 month at a time.
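The default arrangement described here, roughly 1 month of training data on either side of a test period, can be sketched as a simple time-window split. The function name `split_train_test`, the 30-day window, and the dates are placeholders for illustration and do not correspond to the actual deployment schedule or processing code.

```python
from datetime import datetime, timedelta


def split_train_test(records, test_start, test_end, window_days=30):
    """Split (timestamp, value) records into training and test sets.

    Training data are taken from up to `window_days` before `test_start`
    and up to `window_days` after `test_end`; the test set is everything
    inside [test_start, test_end).
    """
    window = timedelta(days=window_days)
    train, test = [], []
    for timestamp, value in records:
        if test_start <= timestamp < test_end:
            test.append((timestamp, value))
        elif test_start - window <= timestamp < test_start:
            train.append((timestamp, value))
        elif test_end <= timestamp < test_end + window:
            train.append((timestamp, value))
    return train, test


# Synthetic daily records spanning 120 days (placeholder dates and values).
start = datetime(2015, 5, 1)
records = [(start + timedelta(days=d), float(d)) for d in range(120)]

test_start = datetime(2015, 6, 15)
test_end = datetime(2015, 7, 15)
train, test = split_train_test(records, test_start, test_end)
print(len(train), "training records;", len(test), "test records")
```

When co-located data are only available well before or after the test period, the same idea applies with a one-sided and much longer window, as in the case studies that extrapolate by more than several months.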

Making quantitative measurements of atmospheric trace gases with low-cost sensors is challenged by unique variations in individual sensor responses associated with variations in the manufacturing process, sensor age, and sensor exposure history. For these reasons, we generated unique calibration models using data from sensors in each individual U-Pod sensor system. The closest available data prior and/or subsequent to a test data period were used for model training to avoid complications associated with significant sensor drift and degradation in sensor sensitivity to target gas species over time. Table 2 lists the O3 and CO2 reference instruments that were co-located with U-Pods at each sampling site, along with instrument operators, calibration procedures, and reference data time resolution. The selected case studies, described in Sect. 2.2.1 through 2.2.7 below, were intended to support methods to quantify atmospheric trace gases during the distributed deployments we carried out from 2014 through 2017 as well as future distributed sensor network measurements. Figure 1 shows sampling site locations in context with urban areas and oil and gas production wells. Figure 2 shows the time line of each of these deployments, highlighting the training and testing periods defined for both O3 and CO2.

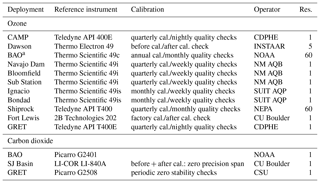

Table 2. Reference instrument measurements at U-Pod sampling sites.

a McClure-Begley et al. (2017); res: time resolution of measurements in minutes.

2.2.1 Dawson summer 2014

The first distributed measurement campaign took place during the summer of 2014 when five U-Pods were sited at locations around Boulder County, with four distributed along the eastern boundary of the county, adjacent to Weld County where dense oil and gas production activities were underway. A background site, further from oil and gas production activities, was also included to the west, near a busy traffic intersection on the north end of the city of Boulder. Co-locations with reference measurements that were used for field calibration of the sensors took place at the Continuous Ambient Monitoring Program (CAMP) Colorado Department of Public Health and Environment (CDPHE) air quality monitoring site in downtown Denver. One of the distributed sampling sites, Dawson School, was also equipped with a Thermo Electron 49 O3 reference instrument operated by Detlev Helmig's research group from the Institute of Arctic and Alpine Research (INSTAAR). In this work, a case study is developed using data from one U-Pod located at the CAMP site in downtown Denver for O3 model training and data from one U-Pod located at the Dawson School for O3 model testing. This case study is used to test model performance when extrapolated in terms of O3 mole fractions and applied in a new location, transferred from an urban to a peri-urban environment.

2.2.2 SJ Basin spring 2015

In the spring of 2015, we augmented our original fleet of five U-Pods (BA, BB, BD, BE, and BF) with five more (BC, BG, BH, BI, and BJ) and deployed these sensor systems in the SJ Basin while a targeted field campaign was underway to understand more about a CH4 “hot spot” that was discovered from satellite-based remote-sensing measurements (Frankenberg et al., 2016; Kort et al., 2014). The primary goal of this sensor deployment was to inform spatial and temporal patterns in atmospheric trace gases like CH4, O3, CO, and CO2 across the SJ Basin. Most U-Pods were located at existing air quality monitoring sites operated by the New Mexico Air Quality Bureau (NM AQB), the Southern Ute Indian Tribe Air Quality Program (SUIT AQP), and the Navajo Environmental Protection Agency (NEPA), which supported validation of sensor measurements for O3. After this deployment period, all U-Pods were moved to the Boulder Atmospheric Observatory (BAO) site in the DJ Basin for approximately 1 month and were co-located with reference instruments there that were operated by National Oceanic and Atmospheric Administration (NOAA) researchers. A case study is developed with data from the BAO site to train O3 models for four U-Pods and data from SJ Basin sites to test O3 models for four U-Pods. This case study is used to test model performance when extrapolated in temperature and time and applied in a new location, extended from one oil and gas production basin to another across Colorado.

2.2.3 SJ Basin summer 2015

In the summer of 2015, after an approximately month-long co-location with reference instruments at the BAO site, seven U-Pods were deployed again at existing regulatory monitoring sites for approximately 1 month, after which they were moved back to the BAO site for another month of co-location with reference instruments there. We equipped two of the regulatory monitoring sites in the SJ Basin with LI-COR LI-840A CO2 analyzers to provide reference measurements for CO2. A case study is developed with data from the BAO site, before and after the SJ Basin summer 2015 deployment to train models, and data from SJ Basin sites during the summer deployment period to test models. Data from seven U-Pods were used to train and test O3 models and data from two U-Pods were used to train and test CO2 models. This case study is used to test model performance when training took place both before and after the test period, and when extended to a new location, from one oil and gas production basin to another across Colorado.

2.2.4 BAO summer 2015

During the SJ Basin summer 2015 deployment period, two U-Pods remained at the BAO site. A case study is developed using data from those two U-Pods that remained at the BAO site. This case study is used to test model performance when training took place both before and after the test period and when the model was tested on data that were collected in the same location as model training.

2.2.5 BAO summer 2016

U-Pods were deployed at the BAO site again in 2016 for several months during the summer. In August 2016 the U-Pods were moved to the Greeley Tower (GRET) CDPHE air quality monitoring site in Greeley, Colorado, a location which, like the BAO site, is also strongly influenced by DJ Basin oil and gas production activities. The U-Pods remained there for 1 year. For the GRET co-location period, CDPHE shared reference measurements for O3. Additionally, Jeffrey Collett and Katherine Benedict of Colorado State University (CSU) shared CO2 reference measurements from an instrument they operated at the site before 1 October 2016 and after 7 March 2017, when the instrument was located at the GRET site. A case study is developed using data from two U-Pods. Data from the yearlong deployment at the GRET site were used to train models for O3, and data from the BAO site during the summer 2016 deployment were used to test models for O3. Because reference data for CO2 were not available at the GRET site during winter months, only 8 months of data from these two U-Pods during the GRET deployment were used to train models for CO2, but again, data from the BAO summer 2016 deployment were used to test models for CO2. A significantly longer training duration is implemented in this case study because the training period took place more than several months after the model testing period. We reasoned that a longer training duration would be better able to represent patterns in sensor drift over time, as well as encompass the temperature range of the test dataset period. Significantly less training time is needed when training occurs directly before and/or after a given model application period. This case study is used to test model performance when extrapolated significantly (more than several months) in time and extended to a new location, from one location in the DJ Basin to another.

2.2.6 GRET fall 2016

To test model performance under circumstances similar to those of the BAO summer 2016 case study, in terms of relative model training and testing durations and timing, but with no extension of models to a new location, we developed another case study. This time, models for O3 and CO2 were trained using data from two U-Pods at GRET over the course of 8 months and tested using data from two U-Pods at GRET over the course of approximately a month in the fall of 2016. This case study is used to test model performance when extrapolated significantly (more than several months) in time and applied in the same location as training took place.

2.2.7 GRET spring 2017

We include findings from our previous work as a case study in order to provide context. Models for CO2 and O3 were tested using data from two U-Pods collected over the course of approximately 1 month at the GRET site in the spring of 2017. Data from two U-Pods during approximately month-long periods before and after the test period were used to train O3 and CO2 models. This case study provides another example of model performance when training took place both before and after the test period, and testing took place in the same location as training.

2.3 Reference and sensor data preparation

Each of the U-Pod sensor signals was logged to an onboard micro SD card. For metal-oxide-type sensors, voltage signals were converted into resistance and then normalized by the resistance of the sensor in clean air, R0. A single value for R0 was used for each sensor across the study duration. This R0 value was taken as the resistance of each sensor during the GRET spring 2017 field deployment period, when the target pollutant had approached background levels (at night for the metal oxide O3 sensors and midday for all other metal oxide sensors) and when the ambient temperature was approximately 20 °C and RH was approximately 25 %. RH, temperature, and pressure measured in each U-Pod were used to calculate absolute humidity. Over the course of multiple field deployments, RH sensors in four of the U-Pods drifted down, causing the lower humidity levels to be cut off or “bottomed out”. RH sensors were not replaced during field deployments in order to preserve consistency across different deployment periods, allowing for the possibility of a single comprehensive model to apply to all data from a single U-Pod. After some experimentation in generating a “master model” that could be applied to data from a given U-Pod for all collected field measurements, across several years, we determined that individual models for each deployment would be more effective, and replacing RH sensors that had drifted down would have been appropriate in support of the methods presented here. We have since upgraded to Sensirion AG SHT25 sensors, which appear to be more robust and consistent over the course of long-term field deployments. For measurements collected in the spring and summer of 2015 and the spring of 2017, we replaced the RH signal of U-Pods with malfunctioning humidity sensors with signals from the closest U-Pod with a good humidity sensor and complete data coverage as noted in Table S1 in the Supplement.
Temperature and RH sensor measurements are usually collected from within each U-Pod sensor system in order to gain representative information about the environment the gas sensors are being operated in. Using an alternative source for RH data that are not onboard an individual U-Pod has the potential to increase uncertainty of quantified gas mole fractions. We used replacement RH data from the closest available U-Pod instead of ambient measurements in order to more closely approximate humidity at the operating temperature within a U-Pod enclosure. The closest U-Pod with good humidity sensors ranged from approximately 1 m, when U-Pods were co-located during deployments in the DJ Basin at the BAO and GRET sites, to approximately 80 km during deployments in the SJ Basin.
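The two signal-preparation steps described above (normalizing metal oxide sensor resistance by R0 and computing absolute humidity from RH, temperature, and pressure) can be sketched as follows. The text does not state which humidity formula was used, so this sketch assumes the Magnus approximation for saturation vapor pressure and expresses absolute humidity as a mass mixing ratio; the function names and coefficients are illustrative assumptions, not the authors' implementation.

```python
import math

EPSILON = 0.622  # ratio of molar masses, water vapor to dry air


def normalized_resistance(r_sensor_ohm, r0_ohm):
    """Sensor resistance divided by its clean-air resistance R0 (dimensionless)."""
    return r_sensor_ohm / r0_ohm


def saturation_vapor_pressure_hpa(temp_c):
    """Magnus approximation over liquid water (assumed formula, not from the paper)."""
    return 6.1094 * math.exp(17.625 * temp_c / (temp_c + 243.04))


def absolute_humidity_kg_per_kg(rh_percent, temp_c, pressure_hpa):
    """Water vapor mass mixing ratio in kg of water per kg of dry air."""
    vapor_pressure = rh_percent / 100.0 * saturation_vapor_pressure_hpa(temp_c)
    return EPSILON * vapor_pressure / (pressure_hpa - vapor_pressure)


# Conditions similar to those used to define R0: 20 degC and 25 % RH,
# with a hypothetical enclosure pressure of 840 hPa.
w = absolute_humidity_kg_per_kg(25.0, 20.0, 840.0)
```

Because the calculation depends on the temperature logged inside the enclosure, borrowing RH from a neighboring U-Pod, as described above, changes the humidity input but not the temperature or pressure inputs.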

When the U-Pods were initially deployed at the GRET site, on 23 August 2016, the RH sensors in all 10 U-Pods malfunctioned, logging an error code of −99 instead of the RH. This malfunction seemed to coincide with the implementation of radio communication from each U-Pod to a central node in an effort to reduce trips to the field site to download data and to identify issues with data acquisition promptly. No other impacts to sensor systems were observed in connection with radio communications. RH signals in the U-Pods began logging correctly again in October when we stopped remote communication. We replaced RH values for the U-Pods during this time period by utilizing data from the Picarro cavity ring-down spectrometer that was co-located at GRET with the U-Pods. Water mole fractions measured by the Picarro were converted into mass-based mixing ratios to match the units of the absolute humidity signal in the U-Pod data. We applied an adjustment to this absolute humidity signal so that it matched observations in U-Pods during the following month when good RH sensor data were available to account for the fact that temperatures were higher in U-Pod enclosures than the ambient environment. We then replaced the RH signal in each U-Pod from 23 August through 1 October 2016 with the mixing ratios derived from Picarro measurements. Using the temperature and pressure logged in each U-Pod along with the absolute humidity from the Picarro, RH was calculated for each U-Pod during this period.
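The unit conversions in this replacement procedure can be illustrated with a short sketch. It assumes that the water mole fraction is expressed per mole of moist air and again uses the Magnus approximation for saturation vapor pressure; the empirical adjustment to match U-Pod enclosure conditions is not reproduced here, and all names and constants are illustrative assumptions.

```python
import math

M_WATER = 18.015    # g/mol
M_DRY_AIR = 28.965  # g/mol


def mole_fraction_to_mixing_ratio(x_h2o):
    """Convert mol water per mol moist air to kg water per kg dry air."""
    return (M_WATER / M_DRY_AIR) * x_h2o / (1.0 - x_h2o)


def rh_from_mixing_ratio(w, temp_c, pressure_hpa):
    """Relative humidity (%) from a mass mixing ratio, temperature, and pressure."""
    eps = M_WATER / M_DRY_AIR
    vapor_pressure = w * pressure_hpa / (eps + w)
    # Magnus approximation for saturation vapor pressure (assumed formula).
    saturation = 6.1094 * math.exp(17.625 * temp_c / (temp_c + 243.04))
    return 100.0 * vapor_pressure / saturation


# Hypothetical example: 1 % water by mole, 25 degC and 850 hPa in the enclosure.
w = mole_fraction_to_mixing_ratio(0.01)
rh = rh_from_mixing_ratio(w, 25.0, 850.0)
```

Since enclosure temperatures exceed ambient temperatures, the RH computed this way with the U-Pod temperature is lower than the ambient RH for the same water content.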

To perform regressions toward field calibration of sensors, the reference and U-Pod data needed to be aligned. When reference measurements with minute time resolution were available for both training and corresponding testing periods, minute median data from the U-Pods were used. Medians were used as opposed to averages in order to reduce the potential influence of sensor noise as well as to remove short-duration spikes in the reference and sensor data that resulted from air masses that may not have been well mixed across the reference instrument inlets and the U-Pod enclosures. When reference data were instead available with only 5 or 60 min time resolution, U-Pod medians were calculated to match that time step. In order to test models using the same time resolution they were trained with, the time resolution of reference and sensor measurements for corresponding training and testing datasets was matched, if necessary, by taking medians of the dataset with higher time resolution to match the data with the longer time resolution. The first 15 min of data after any period that the U-Pods had not recorded data for the previous 5 min were removed in order to filter transient behavior associated with sensor warm-up. During a given deployment, the data removed to avoid sensor warm-up transients constituted less than 1 %.
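A minimal sketch of the warm-up filter and median aggregation described above is given below. Timestamps are assumed to be in minutes, the start of a record is treated as following a logging gap, and the function names are hypothetical.

```python
from statistics import median


def drop_warmup(times_min, values, gap_min=5, warmup_min=15):
    """Discard the first `warmup_min` minutes after any logging gap of at
    least `gap_min` minutes (the start of the record counts as a gap)."""
    kept_times, kept_values = [], []
    previous, resume = None, None
    for t, v in zip(times_min, values):
        if previous is None or t - previous >= gap_min:
            resume = t  # logging (re)started here
        previous = t
        if t - resume >= warmup_min:
            kept_times.append(t)
            kept_values.append(v)
    return kept_times, kept_values


def median_bins(times_min, values, step_min):
    """Median of the values falling in each `step_min`-minute bin,
    keyed by the bin start time."""
    bins = {}
    for t, v in zip(times_min, values):
        bins.setdefault(int(t // step_min) * step_min, []).append(v)
    return {start: median(vs) for start, vs in sorted(bins.items())}


# Two 30 min logging periods separated by a gap; values equal timestamps.
times = list(range(0, 30)) + list(range(100, 130))
signal = [float(t) for t in times]
kept_t, kept_v = drop_warmup(times, signal)
five_min = median_bins(kept_t, kept_v, 5)
```

The same `median_bins` call with `step_min=60` would match sensor data to hourly reference data.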

When time was included in a model as an input, the absolute time was used. Specifically, we used the datenum value from the MATLAB environment, which is defined by the number of days that have elapsed since the start of 1 January, in the year 0000. A model was extrapolated in time whenever training data did not take place both before and after a given test deployment period. In several case studies we present, model training only took place after the test deployment period, comprising an “after-only” calibration. In Colorado, and more broadly in the western United States, ambient temperatures change significantly across the seasons throughout the year, so if a model is extrapolated in time, extrapolation in temperature often results as well.
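For readers working outside MATLAB, an equivalent absolute time value can be computed as sketched below. The offset of 366 days between Python's proleptic Gregorian ordinal and the MATLAB datenum is a known relationship; the helper that flags extrapolation in time is a hypothetical illustration of the definition given above.

```python
from datetime import datetime


def matlab_datenum(dt):
    """Serial date number compatible with MATLAB's datenum (days, with fraction)."""
    midnight = datetime(dt.year, dt.month, dt.day)
    fraction = (dt - midnight).total_seconds() / 86400.0
    return dt.toordinal() + 366 + fraction


def is_extrapolated_in_time(train_times, test_times):
    """True unless training data exist both before and after the test period."""
    return not (min(train_times) < min(test_times)
                and max(train_times) > max(test_times))


noon = matlab_datenum(datetime(2000, 1, 1, 12, 0))

# An "after-only" calibration: all training data follow the test period.
test_period = [matlab_datenum(datetime(2015, 4, d)) for d in (1, 15, 30)]
train_after = [matlab_datenum(datetime(2015, 6, d)) for d in (1, 15, 30)]
after_only = is_extrapolated_in_time(train_after, test_period)
```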

2.4 Calibration model techniques

In this work, we explore how well field calibration methods hold up in new locations, a topic which has not yet been sufficiently addressed by the scientific community. As in Casey et al. (2018), direct LMs and ANNs were trained with a number of different sensor input sets to map those inputs to target gas mole fractions measured by reference instruments. Direct LMs implemented were multiple linear regression models given by

$$r = p_1 + p_2 s_1 + p_3 s_2 + \dots + p_n s_{n-1},$$

where r is the target gas mole fraction (measured by a reference instrument), s1–sn−1 are sensor signals from U-Pods that are included as model predictor variables, and p1–pn are corresponding predictor coefficients.
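A multiple linear regression of this kind, with n coefficients for n−1 sensor signals, can be fitted by ordinary least squares. The sketch below assumes that the first coefficient is the intercept and solves the normal equations with Gaussian elimination; it is an illustration with hypothetical function names and synthetic data, not the code used in this work.

```python
def fit_linear_model(signals, reference):
    """Least-squares fit of r = p1 + p2*s1 + ... + pn*s(n-1).

    signals: list of rows [s1, ..., s(n-1)]; reference: list of r values.
    Returns the coefficients [p1, ..., pn] with p1 as the intercept.
    """
    rows = [[1.0] + [float(s) for s in row] for row in signals]
    n = len(rows[0])
    # Augmented normal equations: (X^T X | X^T r).
    aug = [[sum(x[i] * x[j] for x in rows) for j in range(n)]
           + [sum(x[i] * r for x, r in zip(rows, reference))]
           for i in range(n)]
    # Forward elimination with partial pivoting.
    for col in range(n):
        pivot = max(range(col, n), key=lambda k: abs(aug[k][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for k in range(col + 1, n):
            factor = aug[k][col] / aug[col][col]
            for j in range(col, n + 1):
                aug[k][j] -= factor * aug[col][j]
    # Back substitution.
    coeffs = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(aug[i][j] * coeffs[j] for j in range(i + 1, n))
        coeffs[i] = (aug[i][n] - tail) / aug[i][i]
    return coeffs


def predict(coeffs, row):
    """Apply fitted coefficients to one row of sensor signals."""
    return coeffs[0] + sum(p * s for p, s in zip(coeffs[1:], row))


# Synthetic example: r = 400 + 2*s1 - 3*s2 exactly.
signals = [[0, 1], [1, 0], [2, 2], [3, 1], [4, 3]]
reference = [400 + 2 * s1 - 3 * s2 for s1, s2 in signals]
coeffs = fit_linear_model(signals, reference)
```

In practice the rows would hold normalized U-Pod signals such as eltCO2, temp, and absHum, and the fitted coefficients would then be applied to test data with `predict`.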

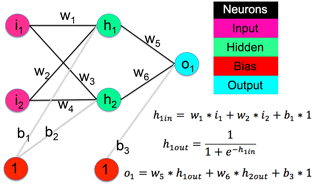

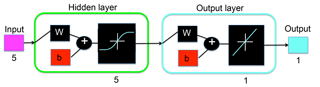

Figure 3. Example of a simple feed forward neural network, showing how inputs are propagated through the network during each of the training iterations (Casey et al., 2018).

ANNs designed for regression tasks, like those employed in this work, generally consist of artificial neuron nodes that are connected with weights. Weights are initiated with randomly assigned values. An optimization algorithm is then employed to iteratively adjust the values of these weights in order to map a given set of input values to corresponding target values. An example of a very simple feed forward neural network, and how inputs are propagated through it, is depicted in Fig. 3. In this work, ANNs were designed by assigning U-Pod sensor signals to artificial neurons in an input layer and assigning target gas mole fractions for an individual gas species, measured by a reference instrument, to a single output neuron. Nonlinear, tansig, artificial neurons in one hidden layer for O3 or two hidden layers for CO2 (in accordance with our earlier findings for each target gas species; Casey et al., 2018) were then added between the input layer and the network output neuron. Additionally, bias neurons, each assigned a value of 1, were connected to neurons in the hidden layer(s) so that individual connecting weights could be activated or deactivated during the optimization process. The number of neurons in each hidden layer was set equal to the number of inputs included in a given ANN. Figure 4 shows a diagram of an ANN architecture employed in this work, when there were five inputs.
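The forward pass through a network of this shape (one tansig hidden layer and a single linear output neuron) can be sketched as follows. MATLAB's tansig, 2/(1 + exp(−2x)) − 1, is mathematically identical to the hyperbolic tangent, which is used here. The weights below are arbitrary placeholders, not trained values, and the function names are hypothetical.

```python
import math


def tansig(x):
    """Hyperbolic tangent sigmoid; equal to 2 / (1 + exp(-2x)) - 1."""
    return math.tanh(x)


def forward(inputs, hidden_weights, hidden_biases, output_weights, output_bias):
    """Propagate one input vector through a single hidden layer to one output."""
    hidden = [tansig(sum(w * x for w, x in zip(weights, inputs)) + bias)
              for weights, bias in zip(hidden_weights, hidden_biases)]
    return sum(w * h for w, h in zip(output_weights, hidden)) + output_bias


# Five inputs and five hidden neurons, as in the example architecture.
n_inputs = 5
inputs = [0.1, -0.4, 0.3, 0.0, 0.8]          # normalized sensor signals
hidden_weights = [[0.2] * n_inputs for _ in range(n_inputs)]
hidden_biases = [0.0] * n_inputs
output_weights = [0.5] * n_inputs
output_bias = 0.1
estimate = forward(inputs, hidden_weights, hidden_biases,
                   output_weights, output_bias)

# Adjustable parameters: hidden weights + hidden biases + output weights + output bias.
n_parameters = n_inputs * n_inputs + n_inputs + n_inputs + 1
```

A second hidden layer, as used for CO2, would apply the same hidden-layer step once more before the linear output.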

Figure 4. Diagram of an example ANN with the same color-coded components as are presented in Figure SM3 in Sect. S2.2 of the Supplement. This ANN has five inputs, one hidden layer with five tansig hidden neurons, and one linear output layer leading to one output. The network is fully connected with weights and biases (Casey et al., 2018).

For ANN training we employed the Levenberg–Marquardt optimization algorithm with Bayesian regularization (Hagan et al., 1997). The Levenberg–Marquardt algorithm combines the Gauss–Newton and gradient descent methods, towards incremental minimization of a cost function, which is defined by the summed squared error between the ANN output and target values as a function of all of the weights in the network. Training begins according to the Gauss–Newton method, in which the Hessian matrix, the second-order Taylor series representation of the local shape of the error surface, is approximated as a function of the Jacobian matrix and its transpose, significantly reducing required training time. Network weights are adjusted accordingly during each training step to reduce error. If the cost function is not reduced in a given training step, an algorithm parameter is adjusted so that optimization more closely approximates the gradient descent method (a first-order Taylor series representation of the local shape of the cost function), providing a guarantee of convergence on a cost function minimum. Since local minima may exist across the error surface, it is important to train the same network multiple times, with different randomly assigned starting weights, in order to assess the stability of ANN performance. In this work, each ANN was trained five times.
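The weight update described in the preceding paragraph can be written compactly in its standard textbook form. The notation is assumed here for illustration and does not appear elsewhere in this paper: $\mathbf{w}$ is the vector of network weights, $\mathbf{e}(\mathbf{w})$ is the vector of differences between ANN outputs and target values, $\mathbf{J}$ is the Jacobian of $\mathbf{e}$ with respect to $\mathbf{w}$, and $\mu$ is the adjustable algorithm parameter.

$$\Delta\mathbf{w} = -\left(\mathbf{J}^{\mathsf{T}}\mathbf{J} + \mu\,\mathbf{I}\right)^{-1}\mathbf{J}^{\mathsf{T}}\mathbf{e}$$

When $\mu$ is small, the step approaches the Gauss–Newton step, with the Hessian approximated by $\mathbf{J}^{\mathsf{T}}\mathbf{J}$. When $\mu$ is large, the step approaches $-\tfrac{1}{\mu}\mathbf{J}^{\mathsf{T}}\mathbf{e}$, a short step along the negative gradient of the summed squared error. In typical implementations, $\mu$ is decreased after a step that reduces the cost function and increased after a step that does not.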

In the implementation of Bayesian regularization, a term is added to the sum of squared error cost function as a penalty for increased network complexity in order to guard against overfitting. A two-level Bayesian inference framework is employed, operating on the assumptions that the noise in the training data is independent and normally distributed and also that all of the weights in the ANN are small, normally distributed, and unbiased (Hagan et al., 1997). In preliminary ANN tests we found that overfitting occurred even when Bayesian regularization was used, so we additionally implemented early stopping, which proved to be effective in the reduction of overfitting. To implement early stopping, a portion of the training data is set aside during training as a validation dataset. Training continues so long as the error associated with the validation dataset is reduced. When the error associated with the validation dataset is no longer being reduced, training stops early. For ANNs, training datasets were divided in half on an alternating 24 h basis, with half used for training and half used as validation data for early stopping. Input signals for both LMs and ANNs were normalized so that they ranged in magnitude from −1 to 1 since this practice is recommended for the ANN optimization algorithm used (Hagan et al., 1997).
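The input normalization and the alternating 24 h division of training data can be sketched as below. The sketch assumes that 24 h blocks are counted from the first training sample and that timestamps are expressed in days; the text does not specify how the blocks were aligned, and the function names are hypothetical.

```python
import math


def fit_minmax(values):
    """Range of a training signal, stored so it can be reused on test data."""
    return min(values), max(values)


def scale_to_unit_range(values, lo, hi):
    """Linearly map values so that lo -> -1 and hi -> +1."""
    return [2.0 * (v - lo) / (hi - lo) - 1.0 for v in values]


def split_alternating_days(times_days):
    """Assign samples to training or validation by alternating 24 h blocks."""
    start = min(times_days)
    train_idx, validation_idx = [], []
    for i, t in enumerate(times_days):
        block = math.floor(t - start)
        (train_idx if block % 2 == 0 else validation_idx).append(i)
    return train_idx, validation_idx


temps = [10.0, 20.0, 30.0]
lo, hi = fit_minmax(temps)
scaled = scale_to_unit_range(temps, lo, hi)

times = [0.0, 0.5, 1.2, 1.9, 2.1, 3.5]
train_idx, validation_idx = split_alternating_days(times)
```

If the training range is reused to scale test data, any scaled test value with a magnitude greater than 1 indicates that the model is being extrapolated for that input.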

2.5 Calibration model evaluation and testing

To evaluate the performance of each of the ANN and LM models that were generated using training data then applied to test datasets, we explored residuals, the coefficient of determination (r2), root-mean-squared error (RMSE), mean bias error (MBE), and centered root-mean-squared error (CRMSE). The CRMSE is an indicator of the distribution of errors about the mean, or the random component of the error. The MBE, alternatively, is an indicator of the systematic component of the error. The sum of the squares of the CRMSE and the MBE is equal to the square of the RMSE, which represents the total error.
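These evaluation statistics and the relationship among them can be illustrated with the following sketch. It assumes that the MBE is defined as the mean of sensor estimates minus the mean of reference values and that r2 is computed as the squared Pearson correlation coefficient; the function name and data are hypothetical.

```python
import math


def evaluate(predicted, reference):
    """Return r2, RMSE, MBE, and CRMSE for paired estimates and reference values."""
    n = len(reference)
    mean_p = sum(predicted) / n
    mean_r = sum(reference) / n
    mbe = mean_p - mean_r
    rmse = math.sqrt(sum((p - r) ** 2
                         for p, r in zip(predicted, reference)) / n)
    crmse = math.sqrt(sum(((p - mean_p) - (r - mean_r)) ** 2
                          for p, r in zip(predicted, reference)) / n)
    cov = sum((p - mean_p) * (r - mean_r)
              for p, r in zip(predicted, reference))
    var_p = sum((p - mean_p) ** 2 for p in predicted)
    var_r = sum((r - mean_r) ** 2 for r in reference)
    r2 = cov ** 2 / (var_p * var_r)
    return {"r2": r2, "rmse": rmse, "mbe": mbe, "crmse": crmse}


reference = [30.0, 40.0, 50.0, 60.0]          # e.g., reference O3 in ppb
offset_only = evaluate([r + 2.0 for r in reference], reference)
mixed = evaluate([33.0, 38.0, 55.0, 57.0], reference)
```

In the first example the estimates differ from the reference by a constant offset, so the error is entirely systematic: the CRMSE is zero and the RMSE equals the magnitude of the MBE.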

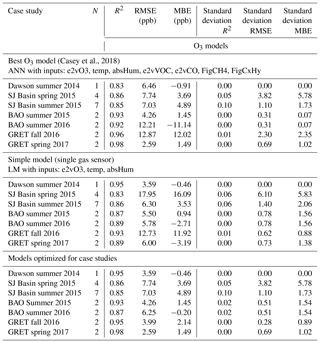

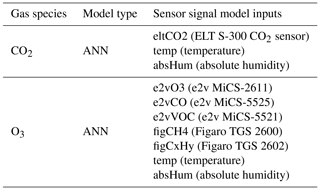

Table 3. The best-performing models, as determined for each gas species, in the previous study (Casey et al., 2018).

First, we generated and applied the best-performing model, as determined in our previous work (presented in Table 3), to data from each new case study. Each new case study was selected to challenge models in different ways in order to evaluate the resiliency of the findings from our previous study when challenged by different circumstances. Then we tested LMs for CO2 and O3 that contained only the primary target gas sensor for each species, as well as temperature and absolute humidity as inputs. Finally, we generated, applied, and evaluated the performance of a number of LMs and ANNs with different sets of inputs for each case study in order to see which specific model performed the best for each individual case study. The r2, RMSE, and MBE for each of these alternative models when applied to test data are presented in the Supplement in Figs. S2 through S7, along with representative scatter plots and time series comparing the performance of LMs and ANNs for a given set of inputs. In Figs. S2 through S7, the best-performing model inputs for each training and test data pair are shaded in purple. The type of model that performed the best (ANN vs. LM) is indicated in the caption of each figure. We discuss both the performance of the previously determined best-fitting model (generated using data from the GRET spring 2017 case study) when generated for and applied to data from new case studies and the performance of models that were tuned to perform the best for each individual case study. From these comparisons, we draw insight into circumstances that challenge model performance in terms of relative local emissions characteristics, location, and timing between model training and testing pairs. Table 4 lists the relative timing and parameter coverage between model training and testing periods for dataset pairs, highlighting instances of incomplete coverage during training that led to model extrapolation during testing.

Table 4. Relative timing and parameter coverage between model training and test deployment dataset pairs. Incomplete coverage of time occurred if training took place only before or only after the test data period rather than both before and after (before and after). Incomplete coverage of location occurred when training took place in one location and testing took place in another. Incomplete coverage of parameters, or extrapolation of models, including the target gas mole fraction, temperature, time, and pressure, occurred when the values observed during training did not encompass the values observed during testing. Extrapolation in time occurred when training only took place after the test period (after model training timing). Extrapolation in location occurred when a model was trained in one location and then applied to data collected in a new location.
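The parameter coverage check summarized in this table amounts to comparing the range of each parameter during training with the values observed during testing. A minimal sketch is given below; the function name, parameter names, and values are hypothetical.

```python
def extrapolated_parameters(train, test):
    """Fraction of test values outside the training range, per parameter.

    train, test: dicts mapping a parameter name to a list of values.
    Only parameters with at least one out-of-range test value are returned.
    """
    flagged = {}
    for name, test_values in test.items():
        lo, hi = min(train[name]), max(train[name])
        outside = sum(1 for v in test_values if v < lo or v > hi)
        if outside:
            flagged[name] = outside / len(test_values)
    return flagged


train = {"temp": [5.0, 30.0], "o3": [10.0, 60.0]}
test = {"temp": [10.0, 20.0], "o3": [20.0, 75.0]}
flagged = extrapolated_parameters(train, test)
```

In this hypothetical example, half of the test O3 values exceed the highest mole fraction observed during training, so the model would be extrapolated with respect to the target gas but not with respect to temperature.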

3.1 BAO and SJ Basin summer 2015

The set of deployments we conducted in the summer of 2015 is particularly useful to the objective of characterizing how well field calibration models can be extended to a new location relative to their performance where they were trained. During the testing period, two U-Pods were located at BAO, where training took place, while seven U-Pods were co-located with reference measurements for O3, and two U-Pods were co-located with reference measurements for CO2 in the SJ Basin, across Colorado, and over the state line in New Mexico. Sampling sites at BAO, in the DJ Basin, and throughout the SJ Basin were all influenced by oil and gas production activities and their associated emissions to some extent, but the composition of the production stream is different in each basin. In the SJ Basin, particularly the northern portion of the basin where all our sampling sites were located, production is dominated by coal bed methane. In contrast, many wells in the DJ Basin produce both oil and gas, leading to greater relative abundance of heavier hydrocarbons in emissions. The DJ Basin airshed is also more strongly impacted by urban emissions than the SJ Basin airshed, and is more strongly influenced by mobile sources with Denver, Boulder, Fort Collins, Greeley, and many other smaller communities in its midst and along its borders. The Four Corners region, where the SJ Basin is situated, has a much smaller population density. Additionally, while there are some agricultural activities and associated emissions in and around the SJ Basin, there is a significantly larger agricultural industry in and around the DJ Basin. SJ Basin sampling sites spanned a range of elevations, including some that were higher and some that were lower than the BAO tower, coinciding with a wide range of atmospheric pressure at the distributed sampling sites.

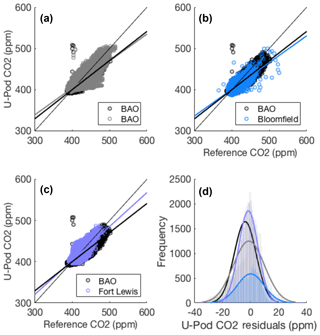

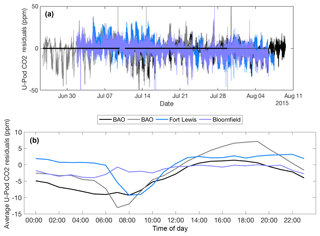

Figure 5. Scatter plots of U-Pod CO2 vs. reference CO2 and overlaid histograms of U-Pod CO2 residuals for (a) BAO and BAO, (b) BAO and Bloomfield, and (c) BAO and Fort Lewis. A 1:1 single-weight reference line is included in each scatter plot along with double-weight lines of best fit for U-Pods at each sampling location. Data from U-Pod BC at BAO are plotted in black along with U-Pods BJ, BB, and BD at BAO, Fort Lewis, and Bloomfield, respectively. Sensor signal inputs include eltCO2, temp, and absHum. (d) Overlaid histograms of model residuals with respect to reference CO2.

Figure 6. U-Pod CO2 residuals by (a) date, throughout the duration of the deployment period, and (b) time of day. Sensor signal inputs include eltCO2, temp, and absHum.

We began by testing the best-performing CO2 model, as determined in our previous work (Casey et al., 2018), on data from this case study, during the summer of 2015. ANNs were trained for each U-Pod using data from the BAO tower with the following inputs from each U-Pod: eltCO2 (ELT S-300 CO2 sensor), temp (temperature), and absHum (absolute humidity); the ANNs were then tested on data collected at the BAO tower and at sampling sites in the SJ Basin. The performance of these ANNs when applied to the test data is presented in Figs. 5 and 6. Figure 5 shows scatter plots of U-Pod CO2 vs. reference CO2 during the test data period for sensors located at BAO as well as sensors that were located at distributed sampling sites throughout the SJ Basin. The scatter plots show that while there was generally a smaller dynamic range of CO2 at the SJ Basin sites relative to BAO, model performance did not appear to be impacted or degraded by spatial extension to these locations in the SJ Basin. The line of best fit for the Fort Lewis site (periwinkle) is even closer to the 1:1 line than the lines of best fit for the two U-Pods located at BAO (black and grey). Overlaid histograms of residuals in the bottom right corner of Fig. 5 show that CO2 residuals from each of the SJ Basin U-Pods are generally centered and evenly distributed about zero with similar spread.

U-Pod CO2 average residuals during this test period, using the best-performing ANNs from our previous study, are plotted according to time of day and date in Fig. 6. While the use of ANNs in place of LMs reduces U-Pod CO2 residuals significantly with respect to temperature, some daily periodicity in the residuals for all four U-Pods is apparent in the upper plot in Fig. 6 that shows residuals by date. The lower plot in Fig. 6, showing residuals by time of day, demonstrates that CO2 from three of four U-Pods was generally underpredicted during early hours of the morning and generally overpredicted during afternoon and evening hours. Interestingly, this trend in residuals by time of day is more pronounced for the two U-Pods that remained at BAO. Upon examination of overlaid histograms showing distributions of parameters during model testing and training periods, in Fig. S12, and model time series and residuals plots in Fig. S3, there is no indication of model extrapolation at the BAO site, nor the sites in the SJ Basin (with the exception of pressure due to sampling site altitudes) and no significant trends of concern with respect to residuals and model inputs.

Next we evaluated the best model type and set of inputs for CO2 based on this specific case study. Differing from our previous findings, for this group of training and testing data pairs from the summer of 2015 at the BAO and SJ Basin sites, the inclusion of the e2vVOC (e2v MiCS-5521) and alphaCO (Alphasense CO-B4) sensor signals noticeably improved the RMSE in the quantification of CO2. While the inclusion of these two secondary sensor signals did not result in the best performance in our previous study, using data from the GRET site (Casey et al., 2018), their inclusion did not degrade performance relative to the models that included just eltCO2, temp, and absHum signals as inputs; thus including these sensor signals may be appropriate as a general rule in areas that are strongly influenced by oil and gas production activities. Generally, using RH vs. absHum signals as ANN inputs did not have a measurable impact on model performance, though linear models were sometimes found to perform better when the absHum signal is used instead of the RH signal. From Fig. S2, it is apparent that inputs including e2vCO (e2v MiCS-5525), temp, RH, e2vVOC, and alphaCO sensor signals resulted in the lowest RMSE for U-Pods at BAO as well as at the two SJ Basin sites. Plots analogous to those presented in Figs. 5 and 6, but with this best-performing set of inputs for the present dataset pairs, are presented in the Supplement in Figs. S24 and S25, respectively.

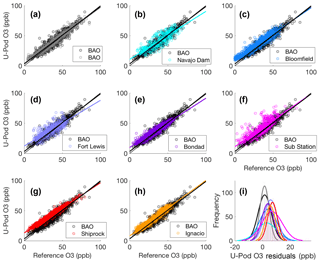

Figure 7. Scatter plots of U-Pod vs. reference O3, comparing U-Pod BC at BAO, in black, with (a) U-Pod BJ at BAO, (b) U-Pod BA at Navajo Dam, (c) U-Pod BB at Fort Lewis, (d) U-Pod BD at Bloomfield, (e) U-Pod BE at Bondad, (f) U-Pod BF at the Sub Station, (g) U-Pod BH at Shiprock, and (h) U-Pod BI at Ignacio. (i) Overlaid histograms of model residuals with respect to reference O3.

Figure 8. Residuals of U-Pod O3 spanning the deployment period, by (a) date, (b) time of day, and (c) temperature.

For O3, we similarly began by testing the model that was found to perform the best from our previous study on data from this case study. O3 was quantified using data from the two U-Pods deployed at BAO and seven of the U-Pods deployed at SJ Basin sampling sites using ANNs with the following inputs: e2vO3 (e2v MiCS-2611), temp, absHum, e2vCO, e2vVOC, figCH4 (Figaro TGS 2600), and figCxHy (Figaro TGS 2602). These same inputs and model configuration were also found to be the best performing for the U-Pods at the BAO site and the majority of SJ Basin 2015 dataset pairs as noted in Fig. S2. Interestingly though, LMs with this same set of inputs performed competitively well for three of the seven U-Pods in the SJ Basin in terms of RMSE and r2. The observation that LMs performed competitively well at a subset of SJ Basin sites is likely connected to the relative abundance of hydrocarbons and other potentially interfering oxidizing and reducing gas species at individual sampling sites, diverging from conditions present during model training at the BAO site. ANNs can better represent the influence of these interfering species than LMs during training, but appear to have lost their ability to do so for this subset of microenvironments in the SJ Basin.

Scatter plots and trends in residuals are presented in Figs. 7 and 8 for O3. These plots show the performance of U-Pods at BAO relative to those at SJ Basin sites in the quantification of O3 during the test data period. U-Pod O3 measurements at Fort Lewis, Navajo Dam, and the Sub Station did not agree with reference measurements as well as U-Pod O3 measurements from the other four SJ Basin sites. As noted earlier, U-Pods at the Navajo Dam and Sub Station sites had faulty RH sensor data, so humidity from the U-Pod located at the Ignacio site was used in place of their humidity signals. Since the Ignacio site was located approximately 35 and 80 km away from the Navajo Dam and Sub Station sites, respectively, this could have introduced some additional error into the application of a calibration equation, particularly since we showed earlier that O3 ANNs like the ones we employed here are very sensitive to humidity inputs (Casey et al., 2018). Spatial variability in humidity across tens of kilometers could be significant as isolated storms (which are on average 24 km in diameter) propagate throughout the region in the summer. At the Fort Lewis site, a 2B Technologies model 202 O3 analyzer was employed as a reference instrument, differing from the Thermo Scientific 49i, Thermo Scientific 49is, and Teledyne API T400 instruments utilized for reference measurements elsewhere in the SJ Basin, and the Thermo Scientific 49c that was operated at the BAO site and used for model training. Of all the reference instruments, only the 2B Technologies model 202 O3 analyzer at the Fort Lewis site was operated in a room that was not temperature controlled; as such, some bias may have been introduced to the Fort Lewis O3 reference measurements. Different instruments, operators, calibration, and data quality checking procedures could have contributed to observed discrepancies. It is also possible that the microenvironment at each of these three sites contributed to lower model performance.
Figure S1 shows that differences among U-Pod O3 performance during the test deployment period were larger than those observed during the training period among the same U-Pods. Therefore, the incongruous field calibration performance phenomena we observed seem to be connected to unique characteristics associated with humidity sensor signal replacement or individual sampling site characteristics, possibly relative abundance of oxidizing and reducing molecules in the local atmosphere, which could interfere with sensor responses to their target gas species, as opposed to the quality of individual gas sensors in each of those U-Pods.

All SJ Basin U-Pod O3 measurements systematically overestimate lower levels of O3 each night, a trend apparent in the scatter plots in Fig. 7 and in the plot of residuals by time of day in Fig. 8. Upon examination of the scatter plots in Fig. 7, U-Pods at some sampling sites had positive bias for higher O3 measurements as well (Shiprock, Ignacio, Sub Station, and Bloomfield), while for others, bias at the higher end of O3 distributions did not appear to be present (Navajo Dam, Fort Lewis, and Bondad). The plot of residuals by time of day in Fig. 8 shows that the two U-Pods at BAO did not have significant trends in their residuals according to the time of day, but that U-Pods deployed at SJ Basin sites consistently overestimated nighttime O3. The residuals are also plotted with respect to temperature in Fig. 8, where all U-Pods, even those at BAO to a lesser extent, appear to overpredict O3 at lower temperatures, which generally occurred at night. In general, the times of day that correspond to the highest O3 levels had the lowest residuals, with some exceptions at the Fort Lewis and Navajo Dam sites.

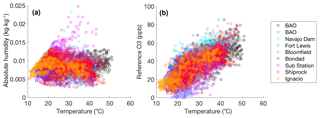

Figure 9. Scatter plots showing the combined parameter space of (a) absolute humidity with temperature and (b) reference O3 with temperature for each of the U-Pod sampling sites at BAO and the SJ Basin.

Figure 8 includes a plot of the residuals across the duration of the deployment period, showing no significant sensor drift in measurements for any of the U-Pods. This plot also shows that the highest residuals observed generally occurred over short periods in time, particularly for the Fort Lewis (blue) and Sub Station (magenta) sites. In order to further explore the performance of field calibration models for O3 at SJ Basin sites relative to BAO, the combined parameter space of temperature with O3 reference mole fractions and temperature with absolute humidity are presented in Fig. 9. The combined temperature and reference O3 parameter space appears to be similar for all of the U-Pods, at both the BAO and the SJ Basin sites. However, there appears to be some outlying combined temperature and humidity parameter space at the Sub Station site and at the Navajo Dam site. Brief excursions, lasting approximately 2–4 h, of high humidity (up to 0.025 kg kg−1, relative to the upper bound of absolute humidity observed at other sampling sites of 0.013 kg kg−1) may be connected to some of the large short-term residuals observed at these two sampling sites.

The majority of U-Pods stopped logging data, unfortunately, at one point or another during these deployments. Periods of missed data during the month-long deployment included approximately 1 day at the Shiprock site, 2 days at the Bloomfield site, 4 days at the Sub Station site, 9 days at the Fort Lewis site, and 17 days at the Navajo Dam site. We carried out frequent sampling site visits (on a weekly or fortnightly basis as logistics and travel to remote locations in some cases allowed) in order to identify and fix problems as they arose during field deployments. Operational issues were predominantly attributable to power supply problems associated with BNC (Bayonet Neill–Concelman) bulkhead fittings and corrupted micro SD cards. The periods of missing data are reflected in the plots of residuals by date in Fig. 6 for CO2 and in Fig. 8 for O3. Fortunately, no drift over the course of the deployment period was observed in these plots.

3.2 Insight from additional case studies of field calibration extension to new locations

3.2.1 Urban calibration moved to rural/peri-urban setting: Dawson summer 2014

The Boulder County deployment in the summer of 2014 was used to test how well a field calibration for sensors in one U-Pod, generated in a busy urban area (at CAMP in downtown Denver), could be extended to a peri-urban setting (at Dawson School in eastern Boulder County). Training took place at CAMP for several days each month, before and after each approximately month-long deployment period at Dawson School over the course of 4 months. Figure S7 shows the performance of a number of ANN- and LM-based CAMP field calibrations with different sets of inputs at this Dawson School test site. In this case study, LMs performed better than ANNs across all sets of sensor inputs. Unlike findings from our previous study (Casey et al., 2018), including secondary metal-oxide-type sensors as inputs did not help to improve model performance. The best-performing set of inputs included just e2vO3, temp, and absHum signals. The very different relative abundance of various oxidizing and reducing compounds in downtown Denver relative to the Dawson School site, surrounded by open grassy fields, and in closer proximity to oil and gas production activities, may be the reason why including additional gas sensors as model inputs and the use of ANNs failed to improve the quantification of U-Pod O3 in this case. Relatively short training durations could also contribute to this finding, based on findings from our previous work (Casey et al., 2018).

The fact that LMs performed better than ANNs in this case (with an r2 of 0.95 and RMSE of 0.35 ppb for LMs, as opposed to an r2 of 0.9 and an RMSE of 5.1 ppb for ANNs) may reflect the general expectation that LMs are more resilient to extrapolation than ANNs. Notably, though, neither the ANNs nor the LMs captured the highest levels of O3 at Dawson School well. We attribute the poor performance at high levels of O3 at this site, those in exceedance of about 70 ppb, to extrapolation beyond the O3 mole fractions encompassed during the training period. The LM generally performed well within the O3 levels covered during the training period. Across applications, ANNs have been found to be unreliable when extrapolated, due to the nonlinear nature and complexity of the relationships they represent. Though LMs are generally expected to be more robust to extrapolation than ANNs, extrapolating parameters can also increase the uncertainty of LM-based measurements. In order to have high confidence in measurements of uncommonly high mole fractions of a target gas, the model training period has to encompass the full possible range. Combining field calibration and lab calibration data in a single training dataset could accomplish this type of coverage. If extrapolation is expected to occur with respect to the target gas mole fraction, as in this case study, the use of an inverted LM may yield better results than standard LMs or ANNs. We describe inverted LMs and their potential advantages in our previous work (Casey et al., 2018). With this finding about O3 extrapolation in mind, for subsequent ambient measurements in the DJ Basin we selected field calibration sites that were more representative of the distributed sampling site locations. These sites were outside of the dense urban environment in downtown Denver, where O3 did not get as high, likely due to increased titration of O3 at night in connection with abundant NOx compounds.
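To make the extrapolation issue concrete, the following is a minimal sketch of one common way to set up an inverted LM, together with a simple check for predictions that fall outside the training range. The exact formulation in Casey et al. (2018) is not restated here, so the regression form, the function names, and the synthetic sensor response are all illustrative assumptions rather than the method used in this study.

```python
import numpy as np

def fit_inverted_lm(signal, o3_ref, temp, abs_hum):
    """Fit signal = b0 + b1*O3 + b2*temp + b3*absHum by least squares.

    The sensor signal is the dependent variable (assumed inverted-LM form).
    """
    X = np.column_stack([np.ones_like(o3_ref), o3_ref, temp, abs_hum])
    coef, *_ = np.linalg.lstsq(X, signal, rcond=None)
    return coef

def predict_inverted_lm(coef, signal, temp, abs_hum):
    """Solve the fitted equation algebraically for the O3 mole fraction."""
    b0, b1, b2, b3 = coef
    return (signal - b0 - b2 * temp - b3 * abs_hum) / b1

def outside_training_range(train_values, new_values):
    """Flag values that lie outside the range covered during training."""
    return (new_values < train_values.min()) | (new_values > train_values.max())

def synthetic_sensor(rng, o3, temp, abs_hum):
    """Hypothetical linear sensor response with noise (not a real e2vO3 model)."""
    noise = rng.normal(0.0, 0.5, o3.size)
    return 100.0 + 2.0 * o3 - 1.5 * temp + 0.8 * abs_hum + noise

rng = np.random.default_rng(0)

# Training period: O3 only reaches 60 ppb (synthetic values).
o3_train = rng.uniform(5, 60, 500)
temp_train = rng.uniform(10, 35, 500)
ah_train = rng.uniform(2, 12, 500)
sig_train = synthetic_sensor(rng, o3_train, temp_train, ah_train)
coef = fit_inverted_lm(sig_train, o3_train, temp_train, ah_train)

# Test period: O3 reaches 90 ppb, beyond the training range.
o3_test = rng.uniform(40, 90, 200)
temp_test = rng.uniform(10, 35, 200)
ah_test = rng.uniform(2, 12, 200)
sig_test = synthetic_sensor(rng, o3_test, temp_test, ah_test)

o3_pred = predict_inverted_lm(coef, sig_test, temp_test, ah_test)
flagged = outside_training_range(o3_train, o3_pred)
print(f"{flagged.sum()} of {flagged.size} predictions exceed the training range")
```

Because the synthetic response is linear by construction, the inverted LM extrapolates cleanly here; real metal oxide sensors are not guaranteed to behave this way, which is why flagged predictions should be treated with additional caution.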

3.2.2 After-only calibration moved across the state: SJ Basin spring 2015

We also examined model performance that was subject to extrapolation in time and temperature. We present O3 model performance data from four U-Pods that were co-located with reference instruments in the SJ Basin in the spring of 2015, at the Navajo Dam, Sub Station, and Bloomfield sites. Two U-Pods at the Bloomfield site provide a set of duplicate measures. Figure S4 shows the performance of a number of ANN- and LM-based BAO field calibrations with different sets of inputs at these SJ Basin test sites in the spring of 2015, just prior to the summer 2015 BAO training period. U-Pod O3 was quantified for these deployments using training data from the same co-location period at BAO that was used toward quantification of the summer 2015 SJ Basin deployment, described in Sect. 3.1.

The addition of time as a model input did not seem to improve the performance of either ANNs or LMs, and ANNs generally outperformed LMs. Gas sensor manufacturers do not clearly define sensor lifetimes, but sensors are generally expected to lose sensitivity over time. For example, Alphasense CO-B4 electrochemical sensors are expected to have 50 % of their original sensitivity after 2 years (Alphasense, 2015). The heater resistance in a given metal-oxide-type sensor is expected to drift over time, influencing sensor measurements (e2v Technologies Ltd., 2007). Masson and colleagues observed a significant drift in a metal oxide sensor heater resistance over the course of a 250-day sampling period in a laboratory setting (Masson et al., 2015). While we did not measure and record metal oxide sensor heater resistance for sensors included in U-Pods, we have investigated eltCO2 and e2vO3 sensor signal drift from the summer of 2015 through the summer of 2017. These data are presented in Fig. S26. Systematic downward drift in all eltCO2 sensor signals is apparent over this time frame. A clear and consistent pattern of systematic drift over this time period is less apparent for e2vO3 sensors. Since the training data were collected immediately after the test data period and since the test data period was relatively short (approximately 1 month), sensor drift could be negligible across the combined training and testing time frame. U-Pods experienced colder temperatures during this spring deployment than were subsequently encompassed in the BAO training period. Linear models generally resulted in more bias than ANNs. Again, the model for O3 that was found to perform best in our previous study (Casey et al., 2018), an ANN with temp, absHum, and all metal oxide sensor signals as inputs, performed the best at sites included in this case study, with one exception: at the Sub Station site, the inclusion of the figCxHy sensor signal decreased model performance.
Additionally, all models tested at the Sub Station site during the SJ Basin spring 2015 deployment, both LMs and ANNs with different sets of inputs, performed significantly worse in terms of MBE than models at other sites. Since the figCxHy sensor signal input augmented model performance at the same sampling location during the summer deployment period, this finding could be attributable to the extrapolation with respect to temperature that occurred during the test period of this case study. As discussed in the introduction, metal oxide sensor sensitivity to different gas species can vary along with sensor surface temperature. Models were trained to use the figCxHy sensor signal, across the ambient temperatures encompassed by the training data, to help account for the influence of confounding gas species at the BAO site. We think it is possible that the different temperatures, in combination with the unique mix of gas species present at the Sub Station site to which the figCxHy sensors are highly sensitive, caused the ANN to perform worse. The Sub Station site is close to two large coal-fired power plants, indicated in Fig. 11 by orange markers in the SJ Basin pane. It is possible that emissions from the San Juan Generating Station (north) and/or the Four Corners Power Plant (south) uniquely influenced the response of this particular Figaro sensor in ways that are not well represented at BAO in the DJ Basin or present at other SJ Basin sampling sites. Several-hour-long enhancements or spikes are apparent in the raw eltCO2 and alphaCO sensor signals in the U-Pod deployed at the Sub Station site, indicating the presence of a nearby combustion-related emissions source. Another indication of a near-field power plant plume across the deployment area is apparent in the form of several-hour-long enhancements of reference measurements of NO and NO2 at the site.
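For readers who want to reproduce this kind of site-to-site comparison, the sketch below computes MBE, RMSE, and the centered RMSE (CRMSE) that together define a point on a target diagram. Normalizing by the standard deviation of the reference data and signing the x coordinate by the relative spread of the predictions follow a common target-diagram convention; both choices, the function name, and the example values are assumptions and may differ from the exact implementation behind the figures in this study.

```python
import numpy as np

def calibration_metrics(pred, ref):
    """Return MBE, RMSE, CRMSE, and normalized target-diagram coordinates."""
    pred = np.asarray(pred, dtype=float)
    ref = np.asarray(ref, dtype=float)
    err = pred - ref
    mbe = err.mean()                              # mean bias error
    rmse = np.sqrt(np.mean(err ** 2))             # total error
    crmse = np.sqrt(np.mean((err - mbe) ** 2))    # error with the bias removed
    ref_std = ref.std()
    # Assumed convention: positive x when predictions vary more than the reference.
    sign = 1.0 if pred.std() >= ref_std else -1.0
    return {
        "mbe": float(mbe),
        "rmse": float(rmse),
        "crmse": float(crmse),
        "target_x": float(sign * crmse / ref_std),
        "target_y": float(mbe / ref_std),
    }

# Hypothetical reference O3 values (ppb) and two kinds of model error.
ref = np.array([30.0, 40.0, 50.0, 60.0])
biased = calibration_metrics(ref + 5.0, ref)                              # pure offset
scattered = calibration_metrics(ref + np.array([2.0, -2.0, 2.0, -2.0]), ref)  # pure scatter

print(biased)
print(scattered)
```

The three quantities are linked by RMSE² = MBE² + CRMSE², so a model with a large MBE, as at the Sub Station site, sits far from the horizontal axis of the target diagram even when its scatter is small.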

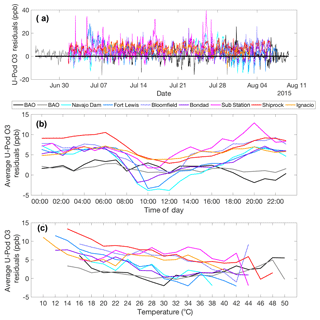

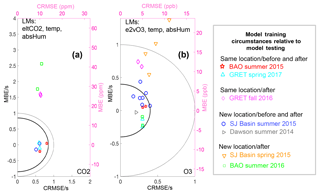

Figure 10. Target diagrams demonstrating performance of a previously determined best-performing model across all new test datasets. (a) CO2 and (b) O3 LM performance when only the primary gas sensor, temperature, and humidity are inputs. (c) CO2 and (d) O3 ANN performance with inputs that were found to perform best at the GRET site in the spring of 2017 (Casey et al., 2018). Model input definitions: eltCO2 (ELT S-300 CO2 sensor), e2vO3 (e2v MiCS-2611 sensor), temp (temperature), and absHum (absolute humidity).

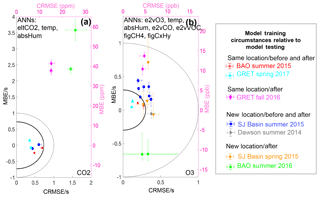

Figure 11. Target diagrams demonstrating performance of a previously determined best-performing model across all new test datasets. (a) CO2 and (b) O3 ANN performance with inputs that were found to perform best at the GRET site in the spring of 2017 (Casey et al., 2018). Model input definitions: eltCO2 (ELT S-300 CO2 sensor), e2vCO (e2v MiCS-5525 sensor), e2vVOC (e2v MiCS-5521 sensor), e2vO3 (e2v MiCS-2611 sensor), figCH4 (Figaro TGS 2600 sensor), figCxHy (Figaro TGS 2602 sensor), temp (temperature), and absHum (absolute humidity).

3.2.3 After-only calibration moved 60 km across the DJ Basin: BAO summer 2016

In testing the performance of field calibrations that were generated using data collected at the GRET site in 2017 and applied for the quantification of O3 at BAO in 2016, across the DJ Basin, we found that, again, the inclusion of time as a model input did not yield any improvements in calibration equation performance, even though model training took place several months after the test period. Figure S5 shows the performance of a number of ANN- and LM-based GRET field calibrations with different sets of inputs at this BAO test site the previous summer. Another notable finding from this training and testing dataset pair was that the addition of secondary metal-oxide-type gas sensors did not seem to improve the performance of field calibration equations either. Figure S5 shows that ANNs performed better than LMs and that the most useful set of inputs included just e2vO3, temp, and absHum. Similarly, the performance of field calibration equations for CO2 generated at GRET in 2017 and applied to data from BAO in the summer of 2016 did not seem to be augmented by the inclusion of additional gas sensor signals, though the inclusion of time as a predictor was useful. In the case of CO2, LMs outperformed ANNs, which could be largely attributable to notable instability associated with the performance of ANNs when time was included as an input. For CO2, we expected the inclusion of time as an input to be useful to model performance across this time frame, owing to observed trends of decreased CO2 sensor sensitivity over time. To keep the power requirements for the U-Pod sensor systems low, and to keep systems quiet, fans as opposed to pumps were used to exchange air in the enclosures. As a result, the air entering the enclosures was not filtered, and sensors were exposed to some dust over time. This dust exposure is likely largely responsible for observed decreases in CO2 sensor sensitivity over time, shown in Fig. S26.
Decreases in infrared lamp intensity over time may also play a role. In the case of CO2 sensors, the implementation of pumps to draw new, filtered air into sensor enclosures would likely significantly reduce the rate of sensitivity loss of an individual sensor over periods of continuous deployment in an ambient environment. Although we are not sure why ANN performance tended not to benefit from the addition of a time input whereas LM performance did, the difference is likely attributable to the extrapolation of the time input, since only data that were collected significantly subsequent to the test data period were used for training. ANNs are expected to be able to better represent time decay trends if data from measurements both prior and subsequent to the test period are used in training, so that there is no extrapolation with respect to the time input.
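The role of a time input in an after-only LM calibration can be illustrated with a small synthetic example. The sketch below assumes a purely additive, linear downward drift in the sensor signal, which is a simplification (real sensitivity loss may be multiplicative), and all coefficients, variable names, and time spans are hypothetical. It covers only LMs and does not reproduce the ANN behavior discussed above.

```python
import numpy as np

def fit_lm(X, y):
    """Ordinary least squares with an intercept term."""
    A = np.column_stack([np.ones(len(y)), X])
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    return coef

def predict_lm(coef, X):
    """Apply fitted coefficients (intercept first) to new inputs."""
    return coef[0] + X @ coef[1:]

def simulate(rng, t_days):
    """Hypothetical CO2 sensor whose signal drifts downward linearly in time."""
    n = t_days.size
    co2 = rng.uniform(400, 500, n)   # reference CO2, ppm
    temp = rng.uniform(5, 30, n)     # enclosure temperature, deg C
    signal = 0.5 * co2 - 0.2 * temp - 0.05 * t_days + rng.normal(0, 0.5, n)
    return co2, temp, signal

rng = np.random.default_rng(1)
t_train = rng.uniform(200, 260, 600)  # training occurs months AFTER the test
t_test = rng.uniform(0, 30, 300)      # test period precedes training
co2_tr, temp_tr, sig_tr = simulate(rng, t_train)
co2_te, temp_te, sig_te = simulate(rng, t_test)

mbe = {}
for name, use_time in [("no_time", False), ("with_time", True)]:
    cols_tr = [sig_tr, temp_tr] + ([t_train] if use_time else [])
    cols_te = [sig_te, temp_te] + ([t_test] if use_time else [])
    coef = fit_lm(np.column_stack(cols_tr), co2_tr)
    pred = predict_lm(coef, np.column_stack(cols_te))
    mbe[name] = float(np.mean(pred - co2_te))  # mean bias on the earlier test data

print(mbe)
```

Under these assumptions the LM without a time term carries the average training-period drift in its intercept and is biased when applied to earlier data, while the LM with a time term extrapolates the linear trend backward. The extrapolation is only as good as the linear-drift assumption.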

3.2.4 After-only calibration applied to the same location: GRET fall 2016

To investigate whether reduced performance in these GRET to BAO field calibration tests was more connected to the new deployment location or to the significant extrapolation of the calibration models with respect to time, we generated calibration equations based on similarly long training periods at GRET and applied them to data collected prior to the training period at GRET in the fall of 2016. Unfortunately, we could not draw strong conclusions from this comparison because of an issue with humidity sensors, described in Sect. 2 and below. Figure S6 shows the performance of a number of ANN- and LM-based GRET field calibrations with different sets of inputs at the GRET test site during fall of the previous year. For O3 models, the best-performing ANN inputs for this dataset pair were the same ones that we found in our previous study (Casey et al., 2018), with the exception of the humidity signal. The fall 2016 GRET test period coincided with the period during which U-Pod absolute humidity was replaced using mixing ratios from a co-located Picarro due to missing humidity sensor data. Interestingly, when this “borrowed” humidity signal was not included as an input, model performance improved markedly and became competitive with other “same location” test deployment case studies. In our previous work, we showed that O3 models were very sensitive to the humidity signal input (Casey et al., 2018). In this case study, it seems that replacing actual humidity signals with closely approximated humidity signals negatively influenced model performance. In order to investigate this observation further, we tested the influence of replacing humidity data in the same manner, using mixing ratios from the same co-located Picarro, on test data from the GRET spring 2017 case study. A comparison of model performance under normal and borrowed RH circumstances is presented in Fig. S27 in the Supplement.
O3 model performance was negatively impacted when borrowed RH values based on Picarro data replaced U-Pod RH sensor signals. From these findings, it seems likely that including multiple metal-oxide-type sensors as model inputs, all of which respond strongly to humidity fluctuations, helped the ANN to represent the influence of humidity in the system more effectively than including a borrowed RH signal from another instrument. We tested models with multiple gas sensor signals and no humidity signal as inputs for a number of other case studies as well (as seen in Figs. S2, S4, and S5), when good humidity data from U-Pod enclosures were available, but they did not turn out to be the best-performing models in any of these other tests.
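Comparisons of models with and without a humidity input, like those described above, amount to training the same model type on several input sets and scoring each on held-out test data. The sketch below shows that loop for LMs only, using synthetic data in which the hypothetical e2vO3 signal responds linearly to O3, temperature, and absolute humidity. The response coefficients, the `make_data` helper, and the input-set labels are assumptions for illustration, and no ANN is included.

```python
import numpy as np

def fit_lm(X, y):
    """Ordinary least squares with an intercept term."""
    A = np.column_stack([np.ones(len(y)), X])
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    return coef

def predict_lm(coef, X):
    """Apply fitted coefficients (intercept first) to new inputs."""
    return coef[0] + X @ coef[1:]

def rmse(pred, ref):
    return float(np.sqrt(np.mean((pred - ref) ** 2)))

def compare_input_sets(train, test, target, input_sets):
    """Train one LM per input set and return its test RMSE."""
    results = {}
    for name, cols in input_sets.items():
        X_train = np.column_stack([train[c] for c in cols])
        X_test = np.column_stack([test[c] for c in cols])
        coef = fit_lm(X_train, train[target])
        results[name] = rmse(predict_lm(coef, X_test), test[target])
    return results

def make_data(rng, n):
    """Synthetic co-location data; the sensor response is a made-up linear model."""
    o3 = rng.uniform(10, 60, n)
    temp = rng.uniform(5, 30, n)
    abs_hum = rng.uniform(2, 12, n)
    e2v = 1.0 * o3 + 0.5 * temp + 2.0 * abs_hum + rng.normal(0, 1.0, n)
    return {"O3_ref": o3, "e2vO3": e2v, "temp": temp, "absHum": abs_hum}

rng = np.random.default_rng(2)
train = make_data(rng, 800)
test = make_data(rng, 400)

input_sets = {
    "e2vO3+temp+absHum": ["e2vO3", "temp", "absHum"],
    "e2vO3+temp": ["e2vO3", "temp"],
}
results = compare_input_sets(train, test, "O3_ref", input_sets)
print(results)
```

In this synthetic setting, dropping the humidity input raises the test RMSE because humidity drives part of the sensor signal. With real data the ranking of input sets depends on the quality of the humidity signal, as the borrowed-RH case study shows.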

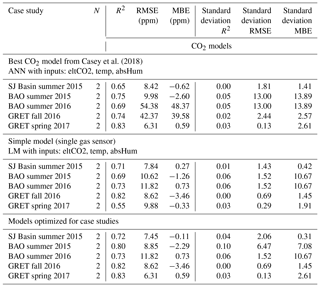

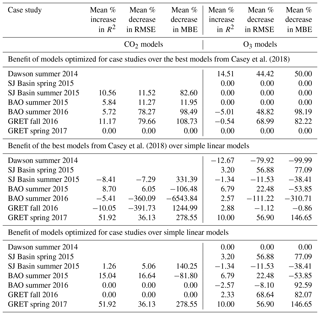

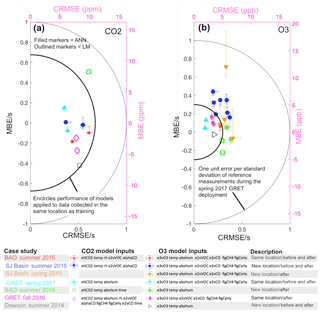

3.3 Evaluation of models across training and testing dataset pairs