the Creative Commons Attribution 4.0 License.

High-fidelity retrieval from instantaneous line-of-sight returns of nacelle-mounted lidar including supervised machine learning

Thomas G. Herges

Wind turbine applications that leverage nacelle-mounted Doppler lidar are hampered by several sources of uncertainty in the lidar measurement, affecting both bias and random errors. Two problems encountered especially for nacelle-mounted lidar are solid interference due to intersection of the line of sight with solid objects behind, within, or in front of the measurement volume and spectral noise due primarily to limited photon capture. These two uncertainties, especially that due to solid interference, can be reduced with high-fidelity retrieval techniques (i.e., including both quality assurance/quality control and subsequent parameter estimation). Our work compares three such techniques, including conventional thresholding, advanced filtering, and a novel application of supervised machine learning with ensemble neural networks, based on their ability to reduce uncertainty introduced by the two observed nonideal spectral features while keeping data availability high. The approach leverages data from a field experiment involving a continuous-wave (CW) SpinnerLidar from the Technical University of Denmark (DTU) that provided scans of a wide range of flows both unwaked and waked by a field turbine. Independent measurements from an adjacent meteorological tower within the sampling volume permit experimental validation of the instantaneous velocity uncertainty remaining after retrieval that stems from solid interference and strong spectral noise, which is a validation that has not been performed previously. All three methods perform similarly for non-interfered returns, but the advanced filtering and machine learning techniques perform better when solid interference is present, which allows them to produce overall standard deviations of error between 0.2 and 0.3 m s−1, or a 1 %–22 % improvement versus the conventional thresholding technique, over the rotor height for the unwaked cases. 
Between the two improved techniques, the advanced filtering produces 3.5 % higher overall data availability, while the machine learning offers a faster runtime (i.e., ∼1 s to evaluate) that is therefore better suited to the requirements of real-time turbine control. The retrieval techniques are described in terms of application to CW lidar, though they are also relevant to pulsed lidar. Previous work by the authors (Brown and Herges, 2020) explored a novel approach to quantifying uncertainty in the output of a high-fidelity lidar retrieval technique using simulated lidar returns; this article provides true uncertainty quantification against independent measurements and does so for three techniques rather than one.

This article has been authored by an employee of National Technology & Engineering Solutions of Sandia, LLC under Contract No. DE-NA0003525 with the U.S. Department of Energy (DOE). The employee owns all right, title and interest in and to the article and is solely responsible for its contents. The United States Government retains and the publisher, by accepting the article for publication, acknowledges that the United States Government retains a non-exclusive, paid-up, irrevocable, world-wide license to publish or reproduce the published form of this article or allow others to do so, for United States Government purposes. The DOE will provide public access to these results of federally sponsored research in accordance with the DOE Public Access Plan https://www.energy.gov/downloads/doe-public-access-plan (last access: 2 February 2022).

Despite the continuing growth of wind energy technology, several sub-fields of wind energy are still not mature (Veers et al., 2019). Real-time control of turbines within the stochastic atmosphere and better understanding of turbine-to-turbine wake interactions represent two areas needing further advances and areas for which accurate wind field sensing around the turbine is imperative. Such sensing is enabled through Doppler lidar instruments, and nacelle-mounted lidars, in particular, have made recent inroads with applications in monitoring and control (Harris et al., 2006; Mikkelsen et al., 2013; Sjöholm et al., 2013; Simley et al., 2014, 2018) as well as wake aerodynamics model validation (Doubrawa et al., 2020; Brown et al., 2020; Hsieh, 2021). Continuing investment in such lidar technology includes efforts to reduce the uncertainty of wind field measurements over the whole field of view, which is critical both for forward-mounted lidar used in feed-forward control applications and for rear-mounted lidar used in wake measurements for model validation. Uncertainties in processed lidar data stem both from the line-of-sight velocity (ulos) readings themselves and from imperfect assumptions in modeling approaches for reconstruction of the velocity vector (Lindelöw-Marsden, 2009; van Dooren, 2021). This work focuses on quantification of the former, more fundamental source of lidar uncertainty, which is present in all lidar measurements regardless of any flow reconstruction approach that is later applied to the data.

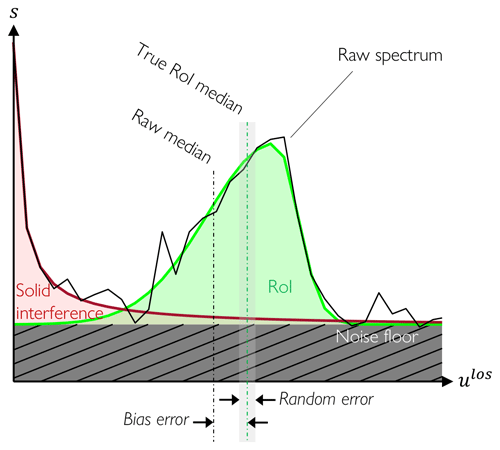

An example of the raw return from a line-of-sight reading of a CW lidar is given in Fig. 1. The fast-Fourier-transformed power spectral density, s, returned from the scattering along the laser path is distributed across a range of Doppler shift frequencies, f, which are related to the line-of-sight velocity according to $u_{\mathrm{los}} = \lambda f / 2$, where λ is the wavelength of the laser. Some aspects of the uncertainty in ulos have been found to be small for typical commercial and research lidar setups, such as the accuracy of the positioning of the line of sight itself (Herges et al., 2017) and beam motion during data capture (i.e., the blurring effect) (Simley et al., 2014). Other aspects are well documented and can be quantified a priori through virtual lidar techniques. Most notably, there is significant broadening of the lidar spectra (and thus alteration of the processed quantities of interest) from flow inhomogeneities such as mean gradients and turbulence within the measurement volume (Stawiarski et al., 2013; Simley et al., 2014; Wang et al., 2016; Forsting et al., 2017; Sekar et al., 2020). This broadening, which is also a function of the line-of-sight weighting distribution for a CW lidar, is observed as the width of the region of interest (RoI) in Fig. 1. On the other hand, we find several error sources in the measured lidar results whose impact cannot be known a priori. These sources are due to spectral features embedded in the lidar signal that stem from both instrument errors and non-aerosol returns, as shown in Fig. 1, and they are especially prevalent for nacelle-mounted lidars, as described below.
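The Doppler relation above can be written as a pair of helper functions. This is a minimal sketch; the function names are illustrative, and the 1.55 µm wavelength used in the example (typical of telecom-band fiber lasers) is an assumption, not a value stated in this section.

```python
def doppler_to_velocity(f_hz: float, wavelength_m: float) -> float:
    """Line-of-sight speed from the Doppler shift: u_los = lambda * f / 2."""
    return wavelength_m * f_hz / 2.0


def velocity_to_doppler(u_los: float, wavelength_m: float) -> float:
    """Inverse relation: f = 2 * u_los / lambda."""
    return 2.0 * u_los / wavelength_m


# ASSUMED wavelength (not given in the text): 1.55 micrometres.
WAVELENGTH = 1.55e-6
f = velocity_to_doppler(10.0, WAVELENGTH)   # roughly 12.9 MHz for 10 m/s
print(f"{f / 1e6:.1f} MHz")
```

The factor of 2 arises because the light is Doppler shifted once on the way out and once on the way back from the moving aerosol.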

Figure 1. Example of a power spectral density, s, distribution versus line-of-sight velocity, ulos, of a raw CW lidar return illustrating the contamination of the region of interest (RoI) by solid interference and amplitude noise. The raw geometric median contains bias error due to the solid interference as well as random error due to the amplitude noise. Figure adapted from Brown and Herges (2020).

Amplitude noise in the spectrum of a CW Doppler lidar, depicted by the localized peaks in Fig. 1, results in a loss of precision (i.e., a larger spread about the true value) in the velocity estimated from the RoI. The intensity of the noise, which for modern lidar is due primarily to shot noise (Peña and Bay Hasager, 2013), depends in part on the range-resolved intensity of the backscatter. Therefore, appropriate shot-noise error analyses should account for the unique noise content observed in each lidar return, which cannot be determined a priori (Simley et al., 2014). A particular configuration of interest to our work is a fast-scanning (i.e., ∼500 Hz) CW lidar that has been mounted on turbine nacelles (Sjöholm et al., 2013; Mikkelsen et al., 2013). One drawback of this configuration, however, is that its high temporal resolution comes at the cost of shorter averaging times, which yield higher instrument error due to a low carrier-to-noise ratio, or CNR (Angelou et al., 2012).

Interference from solid surfaces that intersect the probe volume introduces a source of bias in lidar readings, and the severity of the interference again cannot be determined a priori. For nacelle-mounted lidar, such interference is common and stems from the surrounding terrain, optical windows (i.e., boresight interference, which occurs near the center of the field of view for the SpinnerLidar configuration when the line of sight is normal to the weatherization window; Brown and Herges, 2020), neighboring turbines, meteorological towers, and the turbine's own blades for the case of a forward-facing lidar mounted on the top of the nacelle (rather than on the spinner). A characteristic spike shape in the Doppler return near zero velocity indicates the presence of solid interference.

The first tactic against spectral noise and solid interference is quality assurance/quality control (QA/QC) processing. In the context of nonstationary atmospheric measurements, the simplest QA/QC approach is thresholding (Angelou et al., 2012; Peña and Bay Hasager, 2013), whereby spectral bins with s magnitudes below a specified threshold are nulled (if no signal remains above this threshold, then the lidar data can be rejected; Frehlich, 1996). Noise variance can be reduced by accumulating the spectra over multiple laser pulses along a single line of sight (Rye and Hardesty, 1993), and this approach has become mainstream in both pulsed and CW lidar technologies. At the other extreme, if the spectrum peak magnitude is too high, the data may be rejected on the grounds that a solid return has been captured. Rather than reject such a solid return outright, Godwin et al. (2012) worked to mitigate ground interference bias for airborne pulsed lidar, though their approach was admittedly subject to a large degree of subjectivity in defining certain thresholds and was also unable to handle wind speeds near the interference velocity. Herges and Keyantuo (2019) developed another technique that employs a user-defined set of filters to carefully estimate the bounds of the RoI, thus removing the impact of features due to solid interference and spectral noise away from the RoI.
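The benefit of accumulating spectra can be illustrated numerically: averaging N independent noise-only spectra leaves the mean noise floor unchanged but shrinks its bin-to-bin fluctuation roughly as 1/√N. The sketch below assumes white Gaussian receiver noise, so that each periodogram bin is approximately exponentially distributed, and uses N = 400 (the number of spectra the SpinnerLidar averages per measurement location, per Sect. 2.3). It uses many more bins than the lidar's 256 only to keep the sample statistics stable.

```python
import numpy as np

# Hypothetical noise-only spectra. For white Gaussian receiver noise, each
# periodogram bin is approximately exponentially distributed (chi-square with
# two degrees of freedom), so its standard deviation equals its mean.
rng = np.random.default_rng(0)
n_spectra, n_bins = 400, 4096
raw = rng.exponential(scale=1.0, size=(n_spectra, n_bins))

single_std = raw.std()          # bin-to-bin spread of unaveraged spectra
averaged = raw.mean(axis=0)     # accumulate (average) the 400 spectra
averaged_std = averaged.std()   # spread remaining after averaging

ratio = averaged_std / single_std
print(f"noise floor {averaged.mean():.3f}, std ratio {ratio:.3f}, "
      f"1/sqrt(N) = {1.0 / np.sqrt(n_spectra):.3f}")
```

Averaging lowers the fluctuation of the noise floor, not the floor itself, which is why a threshold set a few standard deviations above the floor becomes tighter as more spectra are accumulated.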

The next step in lidar retrieval is mean frequency estimation (i.e., parameter estimation of the Doppler frequency shift, which yields the line-of-sight velocity estimate). Mean frequency estimators (MFEs) have long been studied for radar and lidar applications, including recent work on parameter estimation from the spectra of fast-Fourier-transformed signals. Specific to pulsed lidar measurements, Lombard et al. (2016) examined five such estimators, namely the maximum, centroid, matched filter, maximum likelihood, and polynomial fit MFEs, and found that all estimators except the first offer suitable accuracy compared with the theoretical ideal performance given by the Cramér–Rao lower bound. Specific to CW lidar measurements, Held and Mann (2018) examined the maximum, centroid, and median MFEs and, validating lidar results against sonic anemometer measurements, found the highest accuracy for the median MFE, followed by the centroid and finally the maximum MFE. Thus, the median MFE has become the most common estimator used in wind energy.
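To make the difference between the maximum, centroid, and median MFEs concrete, the sketch below applies all three to a synthetic spectrum with and without a narrow spike near zero velocity of the kind produced by solid interference. The spectrum shapes are invented for illustration. The median follows the equal-area definition (the same one used by MATLAB's medfreq), but the half-bin-centered discretization is our own choice.

```python
import numpy as np


def mfe_maximum(u, s):
    """Maximum MFE: velocity of the highest spectral bin."""
    return u[np.argmax(s)]


def mfe_centroid(u, s):
    """Centroid MFE: power-weighted mean velocity."""
    return np.sum(u * s) / np.sum(s)


def mfe_median(u, s):
    """Median MFE: velocity that splits the spectral area into equal halves.

    Each bin's power is treated as centered on its velocity, so the cumulative
    area at bin k counts half of bin k (a midpoint rule on a uniform grid).
    """
    cdf = (np.cumsum(s) - 0.5 * s) / np.sum(s)
    return np.interp(0.5, cdf, u)


# Hypothetical spectra on a 0.15 m/s grid starting at 0.75 m/s: a Gaussian
# wind peak at 6 m/s, optionally plus a narrow spike near zero velocity that
# stands in for solid interference.
u = np.arange(0.75, 38.40, 0.15)
wind = np.exp(-0.5 * ((u - 6.0) / 0.8) ** 2)
spike = 3.0 * np.exp(-0.5 * ((u - 0.9) / 0.1) ** 2)

results = {}
for name, s in [("clean", wind), ("with spike", wind + spike)]:
    results[name] = tuple(float(f(u, s)) for f in (mfe_maximum, mfe_centroid, mfe_median))
    print(name, [round(v, 2) for v in results[name]])
```

On the clean spectrum all three estimators agree; with the spike, the maximum jumps to the spike, the centroid is pulled strongly toward it, and the median moves the least, which illustrates why the median is favored but still needs upstream QA/QC.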

After frequency estimation, another layer of QA/QC can be applied through despiking techniques that reject outliers in a time series, such as the classical standard deviation filter, the iterative standard deviation filter (Højstrup, 1993; Vickers and Mahrt, 1997; Newman et al., 2016), or the interquartile range filter (Hoaglin et al., 1984; Wang et al., 2015). Leveraging assumptions specific to lidar configurations, Forsting and Troldborg (2016) describe a finite-difference-based despiking technique that, importantly, considers spatial as well as temporal gradients. Beck and Kühn (2017) introduced an adaptive filtering technique, though it relies on the assumption of self-similar flow over a span of time.
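As an illustration of the simplest of these despiking approaches, an interquartile range filter can be sketched in a few lines. This is a generic sketch, not the implementation of any cited work; the fence multiplier k = 1.5 is the common textbook value and is assumed here.

```python
import numpy as np


def iqr_despike(x, k=1.5):
    """Flag samples outside [Q1 - k*IQR, Q3 + k*IQR] (Tukey-style fences).

    Returns a copy of x with flagged samples set to NaN, plus the boolean mask.
    """
    x = np.asarray(x, dtype=float)
    q1, q3 = np.nanpercentile(x, [25, 75])
    iqr = q3 - q1
    outlier = (x < q1 - k * iqr) | (x > q3 + k * iqr)
    cleaned = np.where(outlier, np.nan, x)
    return cleaned, outlier


# Hypothetical line-of-sight velocity series (m/s) with two spikes.
series = np.array([5.1, 5.3, 4.9, 5.0, 0.8, 5.2, 5.4, 12.0, 5.0])
cleaned, mask = iqr_despike(series)
print(np.flatnonzero(mask))   # -> [4 7]
```

Because the fences are built from quartiles rather than the mean and standard deviation, the spikes themselves barely influence the thresholds used to reject them.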

Above we have reviewed how the type of QA/QC processing and mean frequency estimation have bearing on the accuracy of the final retrieved quantities of interest (QoIs). All the described techniques work to mitigate amplitude noise and solid interference but involve a degree of subjectivity in defining certain processing parameters. While appropriate selections of these parameters can be found for specific conditions and Doppler return shapes, there is no universal set of optimal parameters even for the selection of the simple noise threshold (Angelou et al., 2012; Gryning et al., 2016), which makes the application of the de-noising techniques prone to overestimating or underestimating QoIs. Working towards a solution, Brown and Herges (2020) quantified residual uncertainty in QoIs from a full retrieval technique by processing synthetic spectra with known ground truth properties that mimicked the shape of measured spectra.

The ultimate test of the accuracy of QoI estimation, however, is experimental validation against an independent measurement. Most validation of lidar uncertainty has been performed on time-averaged samples, typically over a 10 min window as specified in the industry standard for power performance assessment (International Electrotechnical Commission, 2005). For instance, Smith et al. (2006), Albers et al. (2009), Slinger and Harris (2012), Gottschall et al. (2012), Hasager et al. (2013), Wagner and Bejdic (2014), Giyanani et al. (2015), and Cariou et al. (2013) all compare lidar-derived velocities to traditional anemometer-derived velocities over 10 min bins, often returning regression slopes and coefficients of determination within 0.01 of unity.

For turbine control and model validation purposes, however, the uncertainty of interest is the instantaneous one, for which errors are significantly larger and previous work is far more limited. Courtney et al. (2008) reported instantaneous errors between co-located lidar probe volumes and cup anemometers with a standard deviation of 0.2 m s−1 and a mean bias between −0.2 and 0.2 m s−1, though they noted that the actual values depend on the distribution of wind speeds. A wind tunnel experiment by van Dooren et al. (2022) compared instantaneous velocities from a co-located lidar probe volume and a hot-wire anemometer and found coefficients of determination much smaller than the 10 min averaged results above. Because Pedersen and Courtney (2021), for instance, have shown that the standard error in line-of-sight velocity measured against a hard target for a CW lidar is on the order of 0.1 %, the main sources of the errors observed by Courtney et al. (2008) and van Dooren et al. (2022) are understood to be flow inhomogeneity and amplitude noise (neither study included solid interference effects).
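The metrics quoted in these studies (mean bias, standard deviation of instantaneous error, regression slope, and coefficient of determination) can be computed from paired samples as in the sketch below. The function name is illustrative, and the synthetic data, with an assumed 0.1 m s−1 bias and 0.25 m s−1 random error, are invented purely to exercise it.

```python
import numpy as np


def error_statistics(u_lidar, u_ref):
    """Bias, standard deviation of error, regression slope, and R^2.

    u_lidar, u_ref: paired velocities (m/s) from the lidar and from an
    independent reference, e.g., a sonic anemometer projected onto the beam.
    """
    u_lidar = np.asarray(u_lidar, dtype=float)
    u_ref = np.asarray(u_ref, dtype=float)
    err = u_lidar - u_ref
    bias = err.mean()
    std_err = err.std(ddof=1)
    # Least-squares fit u_lidar = slope * u_ref + offset.
    slope, _offset = np.polyfit(u_ref, u_lidar, 1)
    # For a linear fit with intercept, R^2 equals the squared correlation.
    r2 = np.corrcoef(u_ref, u_lidar)[0, 1] ** 2
    return {"bias": bias, "std": std_err, "slope": slope, "r2": r2}


rng = np.random.default_rng(7)
u_ref = 5.0 + rng.standard_normal(5000)                   # synthetic reference
u_lidar = u_ref + 0.1 + 0.25 * rng.standard_normal(5000)  # assumed bias and noise
stats = error_statistics(u_lidar, u_ref)
print({k: round(float(v), 3) for k, v in stats.items()})
```

A slope near unity and a high R² can coexist with a random error of a few tenths of a meter per second, which is why the instantaneous error standard deviation is the more demanding metric.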

Like these last studies, this article considers instantaneous data from CW lidars in the face of flow inhomogeneity and amplitude noise. In contrast to the previous work, our work explicitly compares the uncertainty of several end-to-end retrieval techniques and does so over a wider range of flows and lidar return types than has been examined previously. Specifically, we examine flows that are both unwaked and waked by a field turbine, including those for which the nacelle-mounted lidar problems of solid interference and amplitude noise present a particular challenge. The objective is to bound the uncertainty achieved by each retrieval technique for the most common QoI: the spectral (i.e., geometric) median line-of-sight velocity. To evaluate the efficacy of the interference and noise rejection processes, we compare this median velocity with corresponding values measured by a meteorological tower co-located with the lidar focus point, while also tracking the data availability associated with each retrieval technique.

This work is novel not only in quantitatively determining instantaneous lidar QoI uncertainties but also in the first-time exploration, benchmarking, and stress testing of two high-fidelity retrieval techniques. The study compares the accuracy of the median line-of-sight velocity processed from measured lidar spectra in three parallel ways: (1) with the conventional thresholding technique, (2) with the advanced filtering technique of Herges and Keyantuo (2019), and (3) with a novel application of an ensemble machine learning model that is trained on spectral data mimicking those observed in the field.

In the remainder of the article, the overview of the demonstration experiment is given in Sect. 2, followed by the methodology underlying the three retrieval techniques in Sect. 3, validation results in Sect. 4, discussion in Sect. 5, and concluding remarks with future work in Sect. 6.

2.1 Facility

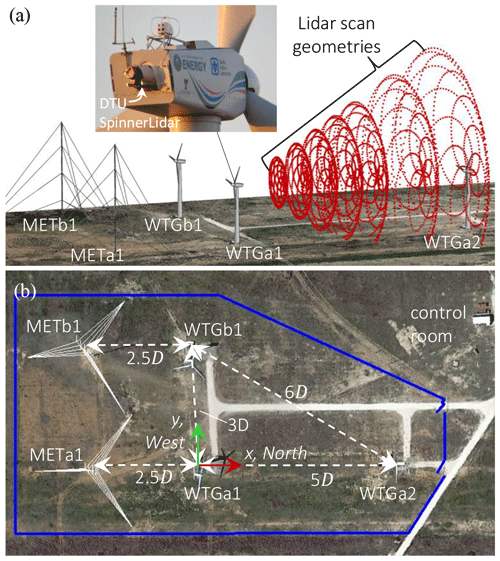

A validation case for the lidar retrieval techniques is derived from data at the Scaled Wind Farm Technology (SWiFT) facility in Lubbock, Texas, USA, as illustrated in Fig. 2. The site features level terrain with minimal surface roughness, and characterization of the atmospheric conditions is given in Kelley and Ennis (2016) with recent benchmarking and validation activities given in Doubrawa et al. (2020) and Hsieh (2021).

Figure 2. (a) Rendering of the SWiFT facility in Lubbock, Texas, USA. The nacelle of WTGa1 was outfitted with a rear-mounted DTU SpinnerLidar, which scanned the flow at different focus lengths according to the rosette patterns shown in red. Rendering from Doubrawa et al. (2020). (b) Planform view of the site, where D=27 m. Adapted from Herges and Keyantuo (2019).

Each of the three V27 wind turbine rotors on the site is 27 m in diameter, D, with a hub height of 31.5 m. Two meteorological towers are positioned 2.5D ahead of the front-line turbines relative to the prevailing wind direction, as shown in Fig. 2. Data were taken with the lidar scanning over the meteorological towers both with the rotors stationary and with the WTGa1 rotor operating; we hereafter refer to these as the inflow and waked cases, respectively. These data derive from a 2016–2017 test campaign, most of whose data have been released into the public domain through the A2e Data Archive and Portal (2019).

2.2 Ultrasonic anemometers

SATI series “A” style probe ultrasonic anemometers from ATI Technologies, Inc. are located at 10.1, 18.3, 31.9, 45.4, and 58.3 m above the ground on the METa1 and METb1 meteorological towers. The booms extend due west of the tower (270° in the coordinate system of Fig. 2). The anemometers sample at 100 Hz and measure usonic, vsonic, and wsonic, the velocity components in the site reference frame according to the coordinate system of Fig. 2. The manufacturer-quoted accuracy of the usonic and vsonic components is ±0.01 m s−1. The total uncertainty of the horizontal wind direction derived from the sonic anemometers is estimated at 1.22°. There is an estimated 25–30 ms delay from the end of each sample until the GPS time stamp is applied (i.e., ∼20 ms internal delay in the instrument and 6–7 ms serial delay).

Occasional spurious spikes in the signal are removed in pre-processing using a median absolute deviation filter with a length of 10 000 data points, or 100 s. Data lying more than 5 standard deviations from the median are also removed. In addition to these standard quality control techniques used at SWiFT, this effort uses shape-preserving piecewise cubic interpolation across any span of data up to 1 s in length that has been omitted either by the removal process above or because of instrument malfunction. Longer segments of instrument cutout were excluded from this study. No blockage correction was made for the presence of the tower or anemometers.
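A minimal sketch of this sonic-anemometer cleaning chain is given below. The 10 000-sample length, the 5-standard-deviation rejection, and the 1 s (100-sample) gap limit come from the text. Everything else is assumed: the MAD test is applied in consecutive, non-overlapping blocks (the text does not say whether the window slides), the MAD rejection threshold `n_mad` and the 1.4826 Gaussian scaling factor are our choices, and SciPy's `PchipInterpolator` stands in for the shape-preserving piecewise cubic interpolation.

```python
import numpy as np
from scipy.interpolate import PchipInterpolator


def despike_and_fill(x, window=10_000, n_mad=5.0, n_std=5.0, max_gap=100):
    """Sketch of sonic QC: block MAD despike, n_std rejection about the
    median, and PCHIP filling of short interior gaps (NaN marks bad data).

    window  : block length in samples (10 000 = 100 s at 100 Hz)
    n_mad   : ASSUMED rejection threshold in scaled MADs (not given in text)
    n_std   : remove samples more than n_std standard deviations from median
    max_gap : longest gap to interpolate, in samples (100 = 1 s at 100 Hz)
    """
    x = np.array(x, dtype=float)              # work on a float copy

    # 1) MAD test in consecutive blocks (block-wise is an assumption).
    for start in range(0, x.size, window):
        seg = x[start:start + window]         # view: edits write through to x
        if np.isnan(seg).all():
            continue
        med = np.nanmedian(seg)
        mad = np.nanmedian(np.abs(seg - med))
        if mad > 0:
            seg[np.abs(seg - med) > n_mad * 1.4826 * mad] = np.nan

    # 2) Remove samples far from the overall median.
    x[np.abs(x - np.nanmedian(x)) > n_std * np.nanstd(x)] = np.nan

    # 3) Fill interior NaN runs no longer than max_gap samples with PCHIP.
    bad = np.isnan(x)
    if bad.any() and (~bad).sum() >= 2:
        idx = np.arange(x.size)
        pchip = PchipInterpolator(idx[~bad], x[~bad])
        i = 0
        while i < x.size:
            if not bad[i]:
                i += 1
                continue
            j = i
            while j < x.size and bad[j]:
                j += 1
            if i > 0 and j < x.size and (j - i) <= max_gap:
                x[i:j] = pchip(idx[i:j])
            i = j
    return x


# Hypothetical 20 s record at 100 Hz with two spikes.
t = np.arange(2000) / 100.0
clean = 5.0 + np.sin(2 * np.pi * 0.2 * t)
noisy = clean.copy()
noisy[500], noisy[1200] = 50.0, -40.0
out = despike_and_fill(noisy, window=200)
```

Gaps longer than `max_gap` are deliberately left as NaN so that, as in the text, longer instrument cutouts drop out of the comparison rather than being filled with invented data.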

2.3 Laser anemometer (lidar)

Scans from the DTU SpinnerLidar (Sjöholm et al., 2013) mounted on WTGa1 are considered below. The beam pointing accuracy of the instrument has not been quantified exactly, but the pointing direction has been verified with infrared photogrammetry in the lab (Herges et al., 2017), and the beam position in the field is known from the combination of theodolite total station measurements of the lidar location in the stationary nacelle, the lidar accelerometers, and the turbine yaw heading. The scans of interest were focused 2.5D from WTGa1. At this focus length, the full-width half-maximum (FWHM) averaging length of the beam is 8.45 m as defined by a truncated Gaussian weighting function. Integrating the weighting function over a length of 16 × FWHM centered on the focus point captures over 99 % of the area under the full weighting function; see Debnath et al. (2019) for more information. The probe volume averaging acts as a low-pass filter on the time series of line-of-sight velocity from the lidar, but the filtered small-scale turbulence content is returned in each scan as additional spectral width, which in some cases can be used to improve turbulence estimates (Branlard et al., 2013; Peña et al., 2017).

The rosette scan patterns of the SpinnerLidar are completed in 2–4 s and consist of 984–1968 measurement locations, some of which are eliminated from the measurement domain when the focus distance falls below the surface of the ground. Within each scan, the lidar samples at 100 MHz, and power spectra are calculated from sequences of 512 samples to yield 256 fast-Fourier-transformed bins so that the returned power spectrum for each measurement location is the average of ∼400 consecutive spectra. The delay time between sample and GPS time stamp is less than 1 µs.
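The acquisition numbers above are mutually consistent, as a quick calculation shows. The sketch assumes a laser wavelength of about 1.55 µm (typical of fiber-based CW lidars but not stated in this section) and pairs the 2 s scan with 984 locations and the 4 s scan with 1968 locations; under those assumptions, the velocity bin width comes out close to the 0.15 m s−1 used later in this section, and each location averages roughly 400 spectra.

```python
# Acquisition arithmetic for the SpinnerLidar numbers in Sect. 2.3.
SAMPLE_RATE_HZ = 100e6        # digitizer sampling rate
FFT_LENGTH = 512              # samples per FFT
N_BINS = FFT_LENGTH // 2      # one-sided spectrum: 256 bins
WAVELENGTH_M = 1.55e-6        # ASSUMPTION: wavelength not stated here

freq_resolution_hz = SAMPLE_RATE_HZ / FFT_LENGTH             # ~195 kHz per bin
velocity_bin_width = WAVELENGTH_M * freq_resolution_hz / 2.0  # u = lambda f / 2


def spectra_per_location(scan_time_s: float, n_locations: int) -> float:
    """Number of consecutive FFT spectra averaged at each scan location."""
    dwell_s = scan_time_s / n_locations
    return dwell_s * SAMPLE_RATE_HZ / FFT_LENGTH


print(f"bin width ~ {velocity_bin_width:.3f} m/s")
print(f"spectra per location (2 s, 984 points) ~ {spectra_per_location(2.0, 984):.0f}")
print(f"spectra per location (4 s, 1968 points) ~ {spectra_per_location(4.0, 1968):.0f}")
```

The dwell time per location is about 2 ms in both pairings, which is what fixes the ∼400-spectrum average.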

The CNR is calculated from the lidar spectra using the wideband definition of Eq. (1):

where $u_{\mathrm{los,min}}$ and $u_{\mathrm{los,max}}$ are the minimum and maximum line-of-sight velocities sensed by the lidar, and μnoise is the mean noise floor level in the spectrum as calculated over the last 100 bins of each spectrum. For the lidar used here, $u_{\mathrm{los,max}}$ is 38.40 m s−1 and $u_{\mathrm{los,min}}$ is 0.75 m s−1 (velocities lower than 0.75 m s−1 are removed due to high relative intensity noise; Lindelöw, 2007). In practice, the integral in Eq. (1) is evaluated discretely using trapezoidal integration over the bin width of 0.15 m s−1.

2.4 Pre-processing

Our work compares estimated velocities from the lidar spectra to point measurements from the sonic anemometers for cases when the lidar beam passed within a certain distance of the anemometers. Several steps are necessary to enable an appropriate and meaningful comparison as described below.

2.4.1 Bin selection

Similar to Gottschall et al. (2012), the 10 min bins for the present campaign were filtered to isolate cases of specific interest. Several filters were applied to all 10 min averaged bins. Bins without the lidar activated were disregarded, as were bins with the yaw heading more than 60° from zero, since the lidar measurement volume would not overlap the meteorological tower in those cases. The wind direction was also constrained to be within a certain tolerance of the line of sight of the lidar beam so that the lidar could resolve a significant component of the wind speed; this tolerance was 30° and 60° for the inflow and waked cases, respectively. For the inflow cases, which have a larger database than the waked cases, additional filters were applied requiring all five sonic anemometers to be functioning and limiting the mean sonic wind speed at 31.9 m to be less than or equal to 6 m s−1. This second constraint is imposed because the lower velocity cases are the ones for which high-fidelity retrieval becomes most difficult in the presence of solid interference, which is a major focus of this article. Both inflow and waked cases, however, were required to have a mean wind speed greater than 2 m s−1.
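The bin-selection criteria above can be written as a single predicate over per-bin summary statistics. This is a sketch only: the `TenMinuteBin` record and its field names are hypothetical, the yaw heading is assumed to be measured in degrees from the zero reference of Fig. 2, and because the text does not state whether the wind should blow toward or away from the lidar, the sketch compares the wind direction with the beam axis without regard to sign.

```python
from dataclasses import dataclass


@dataclass
class TenMinuteBin:
    # Hypothetical record of 10 min statistics; field names are illustrative.
    lidar_on: bool
    yaw_deg: float          # turbine yaw heading (deg from zero reference)
    wind_dir_deg: float     # sonic wind direction (deg)
    beam_dir_deg: float     # horizontal direction of the lidar line of sight
    wind_speed: float       # mean sonic wind speed at 31.9 m (m/s)
    all_sonics_ok: bool     # all five sonic anemometers functioning
    waked: bool             # True for waked cases, False for inflow cases


def angular_difference(a_deg: float, b_deg: float) -> float:
    """Smallest absolute difference between two directions, in degrees."""
    return abs((a_deg - b_deg + 180.0) % 360.0 - 180.0)


def axis_difference(a_deg: float, b_deg: float) -> float:
    """Angle between a direction and an axis (sign of the axis ignored)."""
    d = angular_difference(a_deg, b_deg)
    return min(d, 180.0 - d)


def keep_bin(b: TenMinuteBin) -> bool:
    if not b.lidar_on:
        return False
    if angular_difference(b.yaw_deg, 0.0) > 60.0:
        return False
    tolerance = 60.0 if b.waked else 30.0
    if axis_difference(b.wind_dir_deg, b.beam_dir_deg) > tolerance:
        return False
    if b.wind_speed <= 2.0:
        return False
    # Extra inflow-only constraints: all sonics working, speed <= 6 m/s.
    if not b.waked and (not b.all_sonics_ok or b.wind_speed > 6.0):
        return False
    return True


inflow = TenMinuteBin(True, 347.0, 170.0, 167.0, 4.5, True, False)
print(keep_bin(inflow))
```

Wrapping angles through the modulo in `angular_difference` matters here because the typical inflow yaw of 347° is only 13° from zero.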

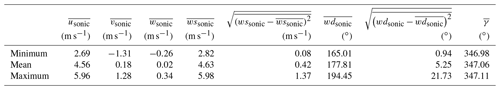

The filtering resulted in 69 bins of 10 min for the inflow cases, as shown in Table 1, and five bins of 10 min for the waked cases, as shown in Table 2. These data cover multiple seasons, spanning 16 January to 11 July 2017. They also cover a range of stability states of the atmospheric boundary layer (ABL), as can be inferred from the more than 1 order of magnitude variation in the standard deviation of wind speed, which corresponds to turbulence intensities of 1 %–29 % for Table 1 and 6 %–21 % for Table 2.

Table 1. Summary statistics of the 69 bins of 10 min used for the validation study of inflow data. Data shown correspond to the sonic anemometer at 31.9 m. The values wssonic and wdsonic are the horizontal wind speed and wind direction, respectively. The value γ is the turbine yaw heading, which corresponds to clockwise rotation in the reference frame of Fig. 2.

Table 2. Summary statistics of the five bins of 10 min used for the validation study of waked data. Data shown correspond to the sonic anemometer at 31.9 m. The values wssonic and wdsonic are the horizontal wind speed and wind direction, respectively. The value γ is the turbine yaw heading, which corresponds to clockwise rotation in the reference frame of Fig. 2.

2.4.2 Within-bin filtering

Within each bin, scans were removed when the lidar was focused at distances other than 2.5D (i.e., the nominal distance to the meteorological tower), when the sonic anemometers were malfunctioning, and when there was more than 2 m of separation between the lidar beam path and the sonic anemometer in the plane, as described in the following subsection. The within-bin filtering resulted in net comparison times of at least 355 and 14 min per sonic anemometer location for the inflow and waked validation studies, respectively (the small sample size of the waked cases is discussed below).

Various levels of additional filtering, or sub-binning, are also explored in Sect. 4 to bin data by certain QoIs. The highest level of such sub-binning determines whether an individual return includes solid interference. This determination is performed similarly to the thresholding technique described in Sect. 3.1: a return is designated as having solid interference if the first usable velocity bin of the spectrum lies above the threshold described further below (see Eq. 3), which often occurs when solid interference is present. Other sub-binning operations are based on the lidar CNR and on the time-local standard deviation of velocity from the sonic anemometers. For the latter, calculations use a running standard deviation with a window span corresponding to 16 × FWHM (the relationship between time window span and probe length is approximated by invoking Taylor's hypothesis to translate the time stamps of each sonic anemometer reading into horizontal distances from the lidar focus point for any given lidar scan). Note that the resulting quantity is related to the streamwise turbulence intensity through division by the mean streamwise velocity, but the absolute magnitude of the atmospheric fluctuations is considered more relevant here than the conventional normalized quantity in the context of the comparisons made below.
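For a given mean wind speed U, Taylor's hypothesis maps the 16 × FWHM spatial span onto a time window of 16 FWHM/U. A minimal sketch follows; the 100 Hz sonic rate and the 8.45 m FWHM come from Sect. 2, while the function names and the moving-average implementation of the running standard deviation (zero-padded at the series ends, so edge values are biased) are our own choices.

```python
import numpy as np

FWHM_M = 8.45              # probe-volume FWHM at the 2.5D focus (Sect. 2.3)
WINDOW_M = 16 * FWHM_M     # spatial span of the running window, about 135 m


def taylor_window_samples(mean_speed, sample_rate_hz=100.0):
    """Number of sonic samples spanning WINDOW_M under Taylor's hypothesis.

    Frozen turbulence advected at mean_speed (m/s) maps a distance x to a
    time x / mean_speed; at least 2 samples are returned.
    """
    window_s = WINDOW_M / mean_speed
    return max(int(round(window_s * sample_rate_hz)), 2)


def running_std(x, n):
    """Centered running (population) standard deviation over n samples.

    Uses moving averages of x and x**2. np.convolve(mode="same") zero-pads
    the ends, so values within about n/2 samples of either end are biased
    and should be discarded.
    """
    x = np.asarray(x, dtype=float)
    kernel = np.ones(n) / n
    mean = np.convolve(x, kernel, mode="same")
    mean_sq = np.convolve(x * x, kernel, mode="same")
    return np.sqrt(np.maximum(mean_sq - mean * mean, 0.0))


n = taylor_window_samples(5.0)   # 135.2 m / 5 m/s = 27.04 s -> 2704 samples
print(n)
```

Because the window length depends on U, slower winds produce longer time windows for the same 135 m span, so the running standard deviation always describes fluctuations over roughly the probe-volume scale.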

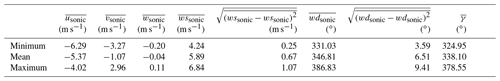

2.4.3 Spatiotemporal syncing

Once a 2–4 s scan window has been deemed valid for the validation analysis, the exact scan indices within each window when the lidar beam was pointed closest to each of the sonic anemometers are isolated. The lidar beam position is known in the coordinate system depicted in Fig. 2, as described in Sect. 2.3. For the waked cases, the turbine yaw setting was variable, as indicated in Table 2, which resulted in high variability of the closest-passing scan indices within the rosette scan pattern. For the inflow cases, the turbine yaw setting was usually fixed at 347°, so the closest-passing scan index was more predictable. For all cases, the closest-passing scan index was retained only if there was less than 2 m of separation between the lidar beam path and the sonic anemometer in the plane, as shown in Fig. 3. Note that for scan indices with δ≠0, the focus point of the probe volume is slightly offset from the plane according to the 2.5D radius of the hemispherical scan geometry. Once the closest-passing index is identified, the data from the nearby sonic anemometer are cubically interpolated in time to the moment when the lidar beam sampled near it.
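The two operations in this step, selecting the closest-passing scan index subject to the 2 m limit and interpolating the sonic record to that instant, can be sketched as follows. The in-plane crossing coordinates are assumed to have been computed already from the beam geometry of Sect. 2.3; `closest_passing_index` and `sonic_at` are hypothetical helper names, and SciPy's `CubicSpline` is one possible choice of cubic interpolant.

```python
import numpy as np
from scipy.interpolate import CubicSpline


def closest_passing_index(beam_yz, sonic_yz, max_sep=2.0):
    """Index of the scan point whose beam passes closest to the sonic in the
    comparison plane, or None if the separation is not below max_sep (m).

    beam_yz  : (n, 2) in-plane coordinates where each beam crosses the plane
    sonic_yz : (2,) in-plane coordinates of the sonic anemometer probe
    """
    d = np.linalg.norm(np.asarray(beam_yz) - np.asarray(sonic_yz), axis=1)
    i = int(np.argmin(d))
    return i if d[i] < max_sep else None


def sonic_at(t_sonic, u_sonic, t_lidar):
    """Cubic-spline interpolation of a sonic time series to the lidar time."""
    return float(CubicSpline(t_sonic, u_sonic)(t_lidar))


# Hypothetical beam crossings (m) and a hypothetical sonic record at 100 Hz.
beam_yz = np.array([[0.0, 30.0], [1.0, 31.0], [5.0, 40.0]])
i = closest_passing_index(beam_yz, [0.5, 31.9])
t = np.arange(0.0, 1.0, 0.01)
u = 3.0 + 2.0 * t
print(i, sonic_at(t, u, 0.555))
```

Returning `None` rather than the nearest index when the 2 m criterion fails makes the rejection explicit, mirroring how scans without a close pass are dropped from the comparison.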

The above procedure of comparing measurements between a stationary sensor and a nonstationary sensor requires good temporal synchronization, which is accomplished with GPS time stamps on all sensors. The synchronization accuracy then becomes a function of any delay between the data-capturing and time-stamping processes, especially for the nonstationary sensor. For the nonstationary SpinnerLidar, the delay of <1 µs introduces negligible error in the perceived position of the beam, as it is more than 3 orders of magnitude smaller than the sample period of each individual measurement location in the rosette pattern. For the stationary ultrasonic anemometers, the delay of 25–30 ms is small compared to the timescales of the flow resolved by the 8.45 m lidar probe volume.
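A back-of-the-envelope check supports both statements. The values below are illustrative: the lidar dwell time uses the 2 s, 984-point scan, and the flow timescale is taken as the time for the flow to advect across the 8.45 m probe length at 6 m s−1 (the upper speed limit imposed on the inflow bins in Sect. 2.4.1), which is our own choice of characteristic timescale.

```latex
\begin{aligned}
\Delta t_{\mathrm{loc}} &\approx \frac{2\ \mathrm{s}}{984} \approx 2.0\ \mathrm{ms},
  &\qquad \frac{1\ \mu\mathrm{s}}{2.0\ \mathrm{ms}} &\approx 5\times10^{-4},\\
\tau_{\mathrm{probe}} &\approx \frac{8.45\ \mathrm{m}}{6\ \mathrm{m\,s^{-1}}} \approx 1.4\ \mathrm{s},
  &\qquad \frac{30\ \mathrm{ms}}{1.4\ \mathrm{s}} &\approx 0.02.
\end{aligned}
```

Slower winds lengthen the advection time and make the sonic delay even less significant.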

2.4.4 Projection of velocity components

Although the lidar and sonic anemometer have significantly different measurement volumes and thus can never be compared with complete confidence, projecting the sonic anemometer velocity data onto the line of sight of the lidar beam is an important step toward removing some of the uncertainty of the comparison. Projection was performed for each of the five closest individual scan indices identified in Sect. 2.4.3 to produce the sonic-derived line-of-sight velocity for each sonic anemometer according to Eq. (2):

where χ is the horizontal directional offset of the lidar beam from the −x axis, and δ is the elevation angle of the beam as described by Fig. 3.
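Because Eq. (2) depends on sign conventions fixed by Fig. 3, the following is only a generic sketch of a line-of-sight projection under an assumed convention stated in the code; it is not a transcription of Eq. (2). Whatever convention is used, the projection is the dot product of the sonic velocity vector with the unit vector along the beam.

```python
import numpy as np


def project_onto_beam(u, v, w, chi_deg, delta_deg):
    """Project sonic velocity components onto a lidar line of sight.

    ASSUMED convention (illustrative, not Eq. 2): the beam unit vector points
    from the lidar along the -x axis, rotated horizontally by chi and elevated
    by delta: e = (-cos(delta)cos(chi), -cos(delta)sin(chi), sin(delta)).
    A positive result means flow along the beam, away from the lidar.
    Works for scalars or NumPy arrays of u, v, w.
    """
    chi, delta = np.radians(chi_deg), np.radians(delta_deg)
    e = np.array([-np.cos(delta) * np.cos(chi),
                  -np.cos(delta) * np.sin(chi),
                  np.sin(delta)])
    return u * e[0] + v * e[1] + w * e[2]


# Flow of 5 m/s in the -x direction seen by a horizontal beam (chi = delta = 0).
print(project_onto_beam(-5.0, 0.0, 0.0, 0.0, 0.0))
```

For the elevation angles in Fig. 3 (up to about 22°), the vertical component contributes through sin δ, so even small vertical velocities are not negligible in the projected value.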

Figure 3. Rendering of WTGa1 and METa1, with the former yawed to 347° (i.e., nearly 180° from the orientation shown in Fig. 2) to cover the latter with the lidar scan pattern. Guy wires have been removed for clarity. The value χ is the horizontal directional offset angle of the lidar beam from the −x axis, and δ is the elevation angle of the beam, which becomes −18.2°, −11.6°, 0.2°, 11.7°, and 22.0° when the beam is pointed at the center of the five respective anemometers at z=10.1, 18.3, 31.9, 45.4, and 58.3 m. The maximum in-plane distance, d, between the lidar beam and the center of the sonic anemometer probe for the considered data is 2 m. Note that the lidar beam is thickened for clarity; the actual beam diameter at the waist is 2.7 mm for the 2.5D focus point.

2.5 Time-averaging error

The uncertainty bands on the ensemble data shown at various instances in Sect. 4 below correspond to the statistical time-averaging error (i.e., random uncertainty) and are derived from 10 000 bootstrap resamples with a 95 % confidence level (Benedict and Gould, 1996).
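A minimal percentile-bootstrap sketch of such an interval is given below. The percentile construction is one common variant; the text does not specify which bootstrap interval was used, and the synthetic error data are invented for illustration.

```python
import numpy as np


def bootstrap_ci(x, stat=np.mean, n_resamples=10_000, level=0.95, seed=0):
    """Percentile bootstrap confidence interval for a statistic of x.

    Each resample draws len(x) values with replacement; the interval is the
    central `level` fraction of the resampled statistics.
    """
    rng = np.random.default_rng(seed)
    x = np.asarray(x, dtype=float)
    stats = np.empty(n_resamples)
    for k in range(n_resamples):
        stats[k] = stat(x[rng.integers(0, x.size, x.size)])
    alpha = (1.0 - level) / 2.0
    return np.quantile(stats, [alpha, 1.0 - alpha])


rng = np.random.default_rng(42)
errors = 0.1 + 0.25 * rng.standard_normal(2000)   # synthetic lidar-minus-sonic errors
lo, hi = bootstrap_ci(errors)
print(f"mean error {errors.mean():.3f} m/s, 95% CI [{lo:.3f}, {hi:.3f}]")
```

Passing `stat=np.std` instead gives an interval on the standard deviation of error, the other headline metric in Sect. 4.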

The three techniques for retrieval of nacelle-mounted lidar data are described in this section.

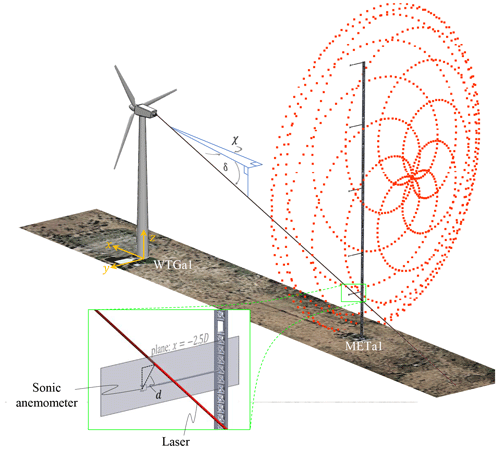

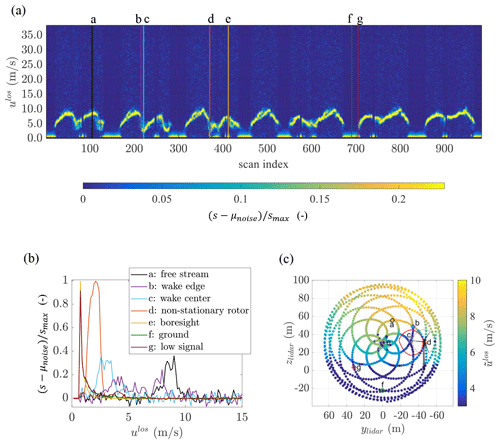

Figure 4. Unprocessed Doppler spectra shown in three forms with tracking of seven example cases (indicated by the letters a–g): (a) image of the noise-subtracted and rescaled power spectral density; (b) the rescaled power spectral density versus line-of-sight velocity, ulos; and (c) color map of the spectral median line-of-sight velocity over the rosette scan pattern, including the wake (as indicated by the red line and center dot) and the location relative to the downstream turbine. In (c), ylidar and zlidar are the lateral and vertical coordinates, respectively, of the lidar coordinate system.

3.1 Thresholding

The thresholding technique used herein is related to the conventional thresholding approaches given in Angelou et al. (2012) and Peña and Bay Hasager (2013). First, the magnitude of the first usable velocity bin of the spectrum is checked, and the return is rejected if this magnitude is the maximum among the bins (i.e., the maximum over all ulos), which often occurs when dominant solid interference is present. Otherwise, the mean noise floor level in the spectrum, μnoise, and the standard deviation of noise, σnoise, are calculated over the last 100 bins of each spectrum, similar to Simley et al. (2014). These last 100 bins lie in the tail of the spectrum, sufficiently far from the RoI (beyond the right edge of Fig. 1). The thresholded power spectrum, sth, is then calculated from the raw spectrum, s, via Eq. (3):
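
$$s_{\mathrm{th}} = s - \left(\mu_{\mathrm{noise}} + n_{\sigma}\,\sigma_{\mathrm{noise}}\right) \qquad (3)$$
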
where nσ is a tunable parameter that sets the threshold level as a number of σnoise above the noise floor. Negative values of sth are subsequently set to zero, and the spectral median is calculated according to standard practice as embodied in the MATLAB function medfreq, which defines the median frequency as the frequency that divides the power spectrum into two regions of equal area.

Because thresholding necessarily discards part of the data, there is a trade-off between the reduction in random error in a thresholded time series due to rejection of spurious spectral noise and the increases in random and bias errors due to reduced CNR and altered skew of the distribution, respectively. The optimal value of nσ for CW lidar depends at least on the spectral width, as described in Angelou et al. (2012), and we choose nσ = 5 so that any signal above this threshold can conservatively be regarded as originating from the wind rather than from noise (Peña and Bay Hasager, 2013).
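The thresholding steps can be sketched in Python/NumPy as follows; this is a minimal illustration, not the authors' implementation. It assumes that `s` holds only the usable bins ordered by increasing ulos and that σnoise is the population standard deviation of the tail. The equal-area median is evaluated at bin resolution from a cumulative sum, which approximates, but is not guaranteed to match, MATLAB's medfreq. Returning `None` when nothing survives the threshold is a choice made for this sketch.

```python
import numpy as np


def threshold_retrieval(s, u_los, n_sigma=5.0, n_noise_bins=100):
    """Spectral median velocity of a thresholded spectrum, or None if rejected.

    s     : PSD magnitudes of the usable bins (1-D array)
    u_los : line-of-sight velocity of each bin (same length, increasing)
    """
    s = np.asarray(s, dtype=float)
    u_los = np.asarray(u_los, dtype=float)
    # Reject dominant solid returns: the first usable bin holds the spectral maximum.
    if np.argmax(s) == 0:
        return None
    # Noise-floor statistics from the spectral tail, far from the RoI.
    tail = s[-n_noise_bins:]
    mu_noise, sigma_noise = tail.mean(), tail.std()
    # Eq. (3), followed by setting negative values to zero.
    s_th = np.clip(s - (mu_noise + n_sigma * sigma_noise), 0.0, None)
    total = s_th.sum()
    if total == 0.0:
        return None  # nothing survived the threshold (sketch-specific choice)
    # Equal-area split: first bin at which the cumulative area reaches one half.
    cumulative = np.cumsum(s_th)
    k = int(np.searchsorted(cumulative, 0.5 * total))
    return float(u_los[k])
```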

3.2 Advanced filtering

The advanced filtering technique described by Herges and Keyantuo (2019), and also implemented in this article, builds on the thresholding technique to maintain greater data availability while reducing both random and bias errors. The technique leverages the lidar spectral data throughout an entire scan (i.e., incorporating information from adjacent scan positions) to isolate the velocity field of interest within the spectra, remove signals from solid returns, and reduce noise using a bilateral filter. The technique was developed by matching known erroneous measurements within the lidar scan rosette to patterns found through feature identification within the Doppler spectral image, which contains the spectral information throughout the entire scan. The identified features are used to detect and remove hard targets, low-signal returns, and returns from nearby nonstationary wind turbine blades. An additional outlier detection step was developed as a two-dimensional implementation of traditional despiking methods to catch remaining outliers within the scan pattern. An overview of the technique is provided below; Herges and Keyantuo (2019) explain the advanced filtering technique in greater detail.

A single 2 s example rosette scan with 984 points, or scan indices, shown in Fig. 4, was chosen to describe how the advanced filtering method works; the method holds for all DTU SpinnerLidar data collected at the SWiFT site, including data with inflow variations in wind speed, turbulence, shear, veer, and aerosol particulate concentration, at all focus distances (1.0, 1.5, 2.0, 2.5, 3.0, 4.0, and 5.0D) and scan-head motor speeds (500, 1000, and 2000 rpm). This example scan, which was taken with the lidar and turbine in a different orientation than for the rest of the article, was measured with a focus distance of 5D, or 135 m, in the direction of a downstream turbine (i.e., WTGa2 in Fig. 2) that was operating within the lidar field of view, and it also captures the wake of the lidar's own turbine (i.e., WTGa1).

Figure 4 shows the unprocessed input to the advanced filtering technique. The figure includes the spectral image created after noise subtraction and rescaling from all 984 power spectral density distributions concatenated in time along the scan index (a), seven example distributions from (a) plotted versus ulos (b), and the rosette scan pattern with initial estimates (c). The advanced filtering technique primarily uses the data format of the spectral image in Fig. 4a, with Fig. 4b and c included here to help interpret the information contained in the image. The different line colors demarcate the seven scan indices of interest; the indices were chosen to help demonstrate the advanced filtering technique across a wide subset of return types (i.e., even wider than will be considered in the later sections of this paper). As seen in Fig. 4c, the indices of interest include example returns from each of the following: the undisturbed free stream of the atmospheric boundary layer, the center of the wake, the edge of the wake, the boresight, the ground, and the rotating downstream rotor. Note that Fig. 4c also shows an outline of WTGa2 and the wake from WTGa1 (in red) to give a reference for what was physically occurring at the locations in the scan relative to the seven example locations.

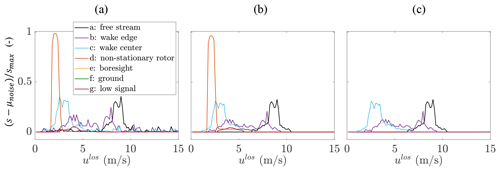

Figure 5 displays the effects of the primary steps of the advanced filtering technique on the seven example ulos traces. The first step (i.e., moving between Figs. 4b and 5a) was to remove the effects of the solid returns within the spectral image using a mask. The mask was created by proportionally projecting the signal strength at the lowest velocity bin into higher velocity bins. The values for this linear projection were determined empirically and may be specific to a given lidar device; however, the same values held for the SpinnerLidar in this experiment at all focus distances. The mask regions were then expanded by two scan indices in each direction using morphological dilation, an image processing technique, to ensure that the regions fully masked the effect of the solid returns. The regions of the spectral image covered by the mask were zeroed out. Note that the high-strength ground return portion of the “low signal” example was removed, while the low-strength portion returned from the aerosols remains.
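Because the empirical projection values used to build the mask are not reported here, the Python/NumPy sketch below takes the solid-return mask as a given input and only illustrates the dilation and zeroing steps. It assumes the spectral image is stored as a (scan index × velocity bin) array and that "two additional scan indices in both directions" means dilation along the scan-index axis only; the function names are hypothetical.

```python
import numpy as np


def dilate_along_scan(mask, n=2):
    """Binary dilation of a (scan index x velocity bin) mask by n indices along axis 0."""
    mask = np.asarray(mask, dtype=bool)
    dilated = mask.copy()
    for k in range(1, n + 1):
        dilated[k:] |= mask[:-k]   # spread each masked cell k scan indices forward
        dilated[:-k] |= mask[k:]   # ... and k scan indices backward
    return dilated


def remove_solid_returns(image, solid_mask, n=2):
    """Return a copy of the spectral image with the dilated mask region zeroed."""
    cleaned = np.array(image, dtype=float, copy=True)
    cleaned[dilate_along_scan(solid_mask, n)] = 0.0
    return cleaned
```

A single masked cell therefore grows into a run of five scan indices (itself plus two on each side), which is what makes the mask robust to solid returns that bleed into neighboring scan positions.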

Figure 5. Traces of the noise-subtracted and rescaled power spectral density versus ulos. These cases demonstrate the progression of the primary advanced filtering steps: (a) removal of hard targets; (b) bilateral filtering, thresholding, and isolation of the RoI; and (c) the combined outlier detection method and low signal quality filter.

The next step in the technique (i.e., moving between Fig. 5a and b) combines filtering to remove shot noise, thresholding, and identification of the RoI, which includes flow information from both the atmospheric boundary layer and the wake. A weak bilateral filter¹, whose effect can be observed by comparing the noise in the wake edge distribution between Fig. 5a and b, is believed to be more effective and accurate at reducing shot noise than a one-dimensional filter because it uses the Doppler information from surrounding measurement points within the continuous flow field. The RoI within the spectral image was created by preserving the (noise-subtracted and rescaled) Doppler spectra above a threshold of 0.015. Smaller regions of spectra outside the RoI remained because of noise values above the threshold or signal returns from rotating blades; these regions were removed if they were not connected to the primary flow-field RoI, which is identified as the large region that intersects the expected values. The threshold value was chosen empirically as a value that approaches zero without including regions of noise connected to the primary RoI. Remaining invalid measurements, namely returns that are connected to the flow-field RoI but have low CNR or are connected to the rotating downstream rotor blades, were addressed in subsequent steps.
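The smoothing, thresholding, and RoI-isolation step can be sketched in Python/NumPy as below. This is an illustration under stated assumptions, not the authors' code: the bilateral filter radius and the spatial and range widths are hypothetical choices for a "weak" filter, 4-connectivity is assumed, and the boolean `seeds` array is a hypothetical stand-in for the "expected values" that identify the primary flow-field region. Only the 0.015 threshold comes from the text.

```python
from collections import deque

import numpy as np


def bilateral_filter(image, radius=1, sigma_space=1.0, sigma_range=0.05):
    """Edge-preserving smoothing of a 2-D spectral image (scan index x velocity bin)."""
    img = np.asarray(image, dtype=float)
    padded = np.pad(img, radius, mode="edge")
    rows, cols = img.shape
    num = np.zeros_like(img)
    den = np.zeros_like(img)
    for di in range(-radius, radius + 1):
        for dj in range(-radius, radius + 1):
            # Neighbor values shifted into alignment with each center pixel.
            nb = padded[radius + di:radius + di + rows, radius + dj:radius + dj + cols]
            # Weight = spatial closeness x similarity in spectral magnitude.
            w = np.exp(-(di**2 + dj**2) / (2 * sigma_space**2)
                       - (nb - img) ** 2 / (2 * sigma_range**2))
            num += w * nb
            den += w
    return num / den


def keep_connected_region(above, seeds):
    """Keep only the 4-connected parts of `above` that contain at least one seed cell."""
    above = np.asarray(above, dtype=bool)
    seeds = np.asarray(seeds, dtype=bool)
    keep = np.zeros_like(above)
    queue = deque()
    for r, c in np.argwhere(above & seeds):
        keep[r, c] = True
        queue.append((r, c))
    while queue:  # breadth-first flood fill
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if (0 <= nr < above.shape[0] and 0 <= nc < above.shape[1]
                    and above[nr, nc] and not keep[nr, nc]):
                keep[nr, nc] = True
                queue.append((nr, nc))
    return keep


def isolate_roi(image, seeds, threshold=0.015):
    """Smooth, threshold, and keep only the region connected to the seed cells."""
    smoothed = bilateral_filter(image)
    roi = keep_connected_region(smoothed > threshold, seeds)
    return np.where(roi, smoothed, 0.0)
```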

Two additional filters were used in the final step (i.e., moving between Fig. 5b and c) to remove the remaining invalid measurements. The first filter combines two outlier detection methods to capture returns from nonstationary solid targets in regions where the flow field has similar velocities among neighboring scan indices. The first outlier detection method computes a spatially smoothed scan pattern as a time-weighted average, within a sliding neighborhood, of the spectral median line-of-sight velocity calculated from the ulos traces in the filtered spectral image, and it detects outliers from the difference between the smoothed and unsmoothed patterns. The second outlier detection method uses the peak prominence of the noise-subtracted and rescaled power spectral density of the filtered spectral image at each scan point to isolate peak return signals from the operational rotor. The velocity-difference and signal-peak outlier detections were combined to robustly capture the effect of the operational rotor at all focus distances: only data flagged as outliers by both detection methods were removed, which effectively removed the erroneous nonstationary solid targets. The second filter applied during the final step removes power spectral density distributions with low signal quality. This filter uses the reciprocal of a signal quality metric of the filtered spectral image and removes low-quality cases in the ground region as well as cases from scans with periods of reduced aerosol within the atmospheric boundary layer.
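The logic of the combined outlier filter, flagging a point only when both criteria agree, can be sketched in Python/NumPy as follows. The sketch simplifies the two-dimensional rosette neighborhood to a one-dimensional window along the scan index, uses triangular weights as a hypothetical stand-in for the time weighting, and takes the per-point peak prominence as a precomputed input; the window size and both thresholds (`dv_max`, `prom_min`) are hypothetical values.

```python
import numpy as np


def combined_outlier_flags(u_med, prominence, window=5, dv_max=1.0, prom_min=0.5):
    """Flag scan points only when BOTH outlier criteria agree.

    u_med      : spectral median velocity per scan index (ordered along the scan)
    prominence : peak prominence of the filtered PSD per scan index (precomputed)
    """
    u_med = np.asarray(u_med, dtype=float)
    half = window // 2
    # Triangular weights centered on each point (illustrative time weighting).
    weights = np.concatenate([np.arange(1, half + 2), np.arange(half, 0, -1)]).astype(float)
    padded = np.pad(u_med, half, mode="edge")
    smoothed = np.convolve(padded, weights / weights.sum(), mode="valid")
    # Criterion 1: large departure from the smoothed velocity pattern.
    velocity_outlier = np.abs(u_med - smoothed) > dv_max
    # Criterion 2: sharp, prominent spectral peak (e.g. a passing rotor blade).
    peak_outlier = np.asarray(prominence, dtype=float) > prom_min
    return velocity_outlier & peak_outlier
```

Requiring both criteria is what keeps genuine flow features, such as a sharp velocity change at the wake edge without a prominent blade peak, from being discarded.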

Figure 5c shows the final result of the example traces using the advanced filtering method, leaving only the RoIs in the free stream, wake center, and wake edge cases, from which the spectral median is calculated in the same way as with the thresholding technique above. The figure also demonstrates the preservation of a wide distribution of line-of-sight velocities within the probe volume when measuring the shear layer of the wake edge. The need for expert development of the above technique and potential difficulty in adapting to new types of signal returns are part of the motivation for development of the machine learning approach described next.

3.3 Machine learning

The machine learning technique is an application of supervised machine learning regression via ensemble neural networks. The approach rests on the one-time construction of a high-dimensional parametric database of synthetic lidar spectra, from which a model is developed that maps the raw spectral shape to the QoI. The subsections below describe the neural network architecture, the training and testing approach, and the prediction confidence level.

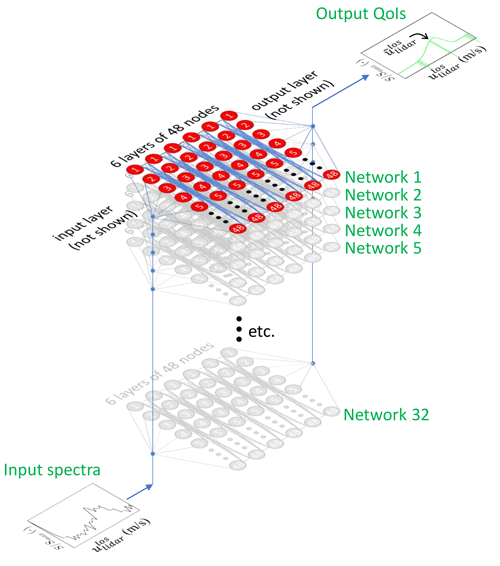

3.3.1 Architecture

The network architecture is depicted in Fig. 6. Each individual network has six hidden layers with 48 nodes each. Each node uses a symmetric sigmoid (hyperbolic tangent) transfer function, and the model learns by minimizing the mean square error through backpropagation with the Levenberg–Marquardt method. The input layer receives the spectral magnitudes of the first 129 bins in the spectrum, which span a ulos range of 0.75–19.95 m s−1 (i.e., 0.15 m s−1 per bin), more than wide enough to capture the RoI of all the cases studied below.
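To make the dimensions concrete, the Python/NumPy sketch below builds an untrained network with the stated layout (129 inputs, six hidden layers of 48 tanh nodes) and evaluates one forward pass. The linear output layer and the random initialization are assumptions, and Levenberg–Marquardt training is not shown; this is an illustration of the architecture, not the authors' trained model.

```python
import numpy as np

N_INPUT_BINS = 129
U_MIN, U_MAX = 0.75, 19.95                          # m/s, input velocity range
BIN_WIDTH = (U_MAX - U_MIN) / (N_INPUT_BINS - 1)    # 19.2 / 128 = 0.15 m/s per bin


def init_network(n_in=N_INPUT_BINS, n_hidden=48, n_layers=6, seed=0):
    """Random (untrained) weights for a 6 x 48 fully connected regression network."""
    rng = np.random.default_rng(seed)
    sizes = [n_in] + [n_hidden] * n_layers + [1]
    return [(rng.normal(0.0, 1.0 / np.sqrt(a), size=(b, a)), np.zeros(b))
            for a, b in zip(sizes[:-1], sizes[1:])]


def forward(params, spectrum):
    """Map 129 spectral magnitudes to one scalar QoI estimate."""
    x = np.asarray(spectrum, dtype=float)
    for W, b in params[:-1]:
        x = np.tanh(W @ x + b)        # symmetric sigmoid transfer function = tanh
    W, b = params[-1]
    return float((W @ x + b)[0])      # linear output layer (assumed)
```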

Figure 6. Depiction of the ensemble neural network structure. Note that input and output layers are omitted for clarity.

Ensembles of the individual networks are generated to improve regression performance by addressing the bias–variance trade-off: the relatively large number of nodes in the individual networks produces low-bias estimates, while cross-referencing results from multiple networks attenuates the high variance associated with such large individual networks. The ensemble training approach is the classical one of bootstrap aggregating (often referred to as bagging) (Breiman, 1996), whereby each of B individual networks is trained on a bootstrap sample drawn with replacement from the training dataset and containing the same number of samples as the training dataset. The bagging approach has been found to be resistant to model misspecification and overfitting (Tibshirani, 1996). Typical values of B are between 20 and 200 (Tibshirani, 1996); we use B = 32.

Once the one-time training of the B individual neural networks is complete, we calculate the QoI as the median output of all B networks in the ensemble. In the work shown below, the QoI is the spectral median line-of-sight velocity, though it is also possible to generate estimates of other QoIs such as the spectral standard deviation.
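The bagging and median-aggregation scheme can be illustrated with the Python/NumPy sketch below. To keep it short and runnable, each ensemble member is an ordinary least-squares linear model rather than a neural network; this swap is purely illustrative, and only B = 32, the same-size bootstrap samples, and the median aggregation follow the text.

```python
import numpy as np


def fit_linear(X, y):
    """Least-squares stand-in for one ensemble member (illustrative swap for a network)."""
    A = np.column_stack([X, np.ones(len(X))])
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    return coef


def predict_linear(coef, X):
    return np.column_stack([X, np.ones(len(X))]) @ coef


def train_bagged_ensemble(X, y, B=32, seed=0):
    """Bootstrap aggregating: B members, each trained on n draws with replacement."""
    rng = np.random.default_rng(seed)
    n = len(X)
    models = []
    for _ in range(B):
        idx = rng.integers(0, n, size=n)  # bootstrap sample, same size as the training set
        models.append(fit_linear(X[idx], y[idx]))
    return models


def ensemble_predict(models, X):
    """Return the per-case median and the (B x n_cases) matrix of member outputs."""
    outputs = np.array([predict_linear(m, X) for m in models])
    return np.median(outputs, axis=0), outputs
```

Keeping the full matrix of member outputs, rather than only the median, is what later allows the spread across members to be used as a confidence measure.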

3.3.2 Training and testing

The individual networks are implemented and trained with MATLAB's train function, run in parallel. This function requires a training dataset as well as a validation dataset that determines when to stop refining the model (i.e., when the model begins to lose generality and overfit the training data). A third dataset is completely isolated from the training process and is used to test the final model for generality. The split of training, validation, and testing data is 70 %, 15 %, and 15 %, respectively.
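A 70 %/15 %/15 % partition of the synthetic cases can be sketched in Python/NumPy as below. A random split is assumed here; the text does not state how cases were assigned to the three sets, and the function name is illustrative.

```python
import numpy as np


def split_70_15_15(n_cases, seed=0):
    """Randomly partition case indices into training, validation, and testing sets."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(n_cases)
    n_train = int(round(0.70 * n_cases))
    n_val = int(round(0.15 * n_cases))
    train = order[:n_train]                  # used to fit the weights
    val = order[n_train:n_train + n_val]     # used only to decide when to stop training
    test = order[n_train + n_val:]           # never seen during training
    return train, val, test
```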

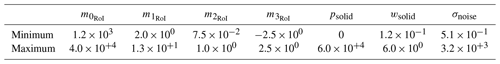

The synthetic spectra used for the training, validation, and testing of the ML model are generated from full-factorial parametric sweeps through a gridded seven-dimensional space designed to replicate the range of spectral shape parameters observed in the field. Replicating the range of observed shapes, as described in Appendix A, is important because a trained model produces valid output only if the input data fall within the distribution of the training data. In this regard, several limitations of the synthetic spectra database should be borne in mind: the peak prominence of the RoI is required to be at least 4σnoise above μnoise (see Appendix A for more on these two noise parameters); the spectral median velocity is bounded below at 2 m s−1; and only single-peaked spectra are included (i.e., none of the double-peaked spectra often found at the shear layer of a wind turbine wake). In addition to relaxing some of these constraints, future efforts might also benefit from generating synthetic spectra that match not only the range but also the probability distribution of the statistics from the field data.
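A full-factorial sweep simply enumerates every combination of the gridded parameter levels, so the database size is the product of the number of levels per dimension. The Python sketch below shows this with `itertools.product`; the seven parameter names and their levels are hypothetical placeholders, not the parameters of Appendix A, and the step that turns each combination into a synthetic spectrum is not shown.

```python
import itertools


def full_factorial(grid):
    """Yield one dict per combination of the gridded parameter levels."""
    names = list(grid)
    for values in itertools.product(*(grid[name] for name in names)):
        yield dict(zip(names, values))


# HYPOTHETICAL 7-D grid: names and levels are placeholders only.
example_grid = {
    "median_velocity": [2.0, 6.0, 10.0, 14.0],
    "spectral_width": [0.3, 0.6, 1.2],
    "peak_height": [5.0, 10.0],
    "skewness": [-0.5, 0.0, 0.5],
    "noise_mean": [1.0],
    "noise_sd": [0.05, 0.1],
    "truncation_bin": [0, 2],
}
cases = list(full_factorial(example_grid))  # 4 * 3 * 2 * 3 * 1 * 2 * 2 combinations
```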

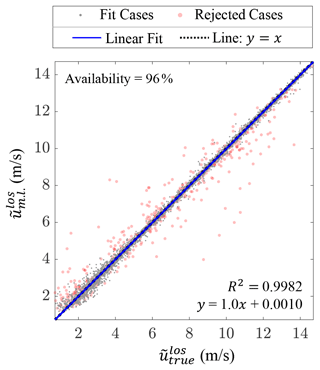

Using the ∼58 000 training cases and ∼13 000 validation cases, the root mean square (rms) error calculated on the ∼13 000 isolated testing cases converges to 0.141 m s−1. Figure 7 shows the performance of the ensemble network on the testing cases; only the data points in gray, which represent the predictions with highest confidence as explained in the next subsection, are used in the linear regression and rms error calculations. The magnitude of the residuals is relatively constant with velocity except near the origin, where the parameter estimation process can be complicated by the presence of the inverse function described in Appendix A.

Figure 7. Relationship between the machine-learning-predicted spectral median and the true spectral median for the 13 383 testing cases. The red data indicate predictions with low confidence as explained in Sect. 3.3.3. Removing these data points, which was done before the regression fitting, leaves the partial data availability indicated. Note that the y = x line is mostly hidden behind the linear fit line.

The variance component of error in neural networks often dominates the bias component (Geman et al., 1992), and this holds even for our ensemble neural network, whose rms error is much larger than its mean error. However, the variance error is still relatively low in the context of wind energy applications. In practice, as shown in Sect. 4, both the variance and the bias of the error are larger on field data because of inhomogeneity within the lidar probe volume.
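The comparison of rms and mean error rests on the identity rms² = (mean error)² + (SD of error)², which holds exactly when the SD is computed with the population (ddof = 0) normalization. The short Python/NumPy sketch below computes the three quantities so that the bias and scatter contributions can be read off directly; the function name is illustrative.

```python
import numpy as np


def error_summary(predicted, true):
    """Mean error (bias part), SD of error (scatter part), and rms error."""
    e = np.asarray(predicted, dtype=float) - np.asarray(true, dtype=float)
    mean_error = e.mean()
    sd_error = e.std()                 # population SD (ddof=0)
    rms_error = np.sqrt(np.mean(e**2))
    # rms_error**2 == mean_error**2 + sd_error**2 (up to rounding)
    return mean_error, sd_error, rms_error
```

When the mean error is small, the rms error is essentially the SD of error, which is the situation described above for the ensemble network.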

3.3.3 Prediction confidence

The ensemble strategy provides not only an estimate of a QoI via the median of the individual network outputs but also an estimate of the uncertainty of the QoI based on the distribution of the outputs from the individual networks. We calculate the standard error of the ensemble estimate from θ, the standard deviation of the individual network outputs. This approach, which benefits from the bootstrapping performed in the training process as described above, was found to provide a better estimate of standard error for multilayer perceptrons than several other approaches reviewed by Tibshirani (1996), and our own initial experience showed better performance with this approach than with one that trains a separate ensemble on the residual errors of the first ensemble.

In our implementation, we leverage the standard error to flag spectra that produce relatively large variation in the QoI across the ensemble members. Specifically, we set a threshold of standard error of 0.09 m s−1, above which data are rejected as unreliable. This threshold provides an acceptable balance between data availability and variance error based on a parameter sweep applied to the synthetic dataset, though the trade-off has not yet been studied on the experimental dataset.
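The flagging rule can be sketched in Python/NumPy as follows, using a (B × n_cases) array of member outputs such as the one returned by a bagged ensemble. Only the 0.09 m s−1 threshold and the median estimate come from the text. The standard-error expression used here, θ/√B with θ the sample standard deviation of the member outputs, is an assumption made purely for illustration and may differ from the expression used in the study.

```python
import numpy as np

SE_THRESHOLD = 0.09  # m/s, rejection threshold from the text


def flag_low_confidence(member_outputs, se_threshold=SE_THRESHOLD):
    """Median ensemble estimate with low-confidence cases replaced by NaN.

    member_outputs : (B x n_cases) array of individual-network predictions
    """
    outputs = np.asarray(member_outputs, dtype=float)
    B = outputs.shape[0]
    estimate = np.median(outputs, axis=0)
    theta = outputs.std(axis=0, ddof=1)  # spread of the member outputs
    # ASSUMPTION: standard error taken as theta / sqrt(B) for illustration only.
    se = theta / np.sqrt(B)
    keep = se <= se_threshold            # reject cases above the threshold
    return np.where(keep, estimate, np.nan), se
```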

This section presents the results of the validation study. Section 4.1 gives examples that demonstrate the retrieval techniques qualitatively, while Sects. 4.2 and 4.3 present the validation exercises for the inflow and waked cases, respectively. Because the sample size is larger for the inflow cases, we devote significantly more of the analysis to them.

4.1 Retrieval examples

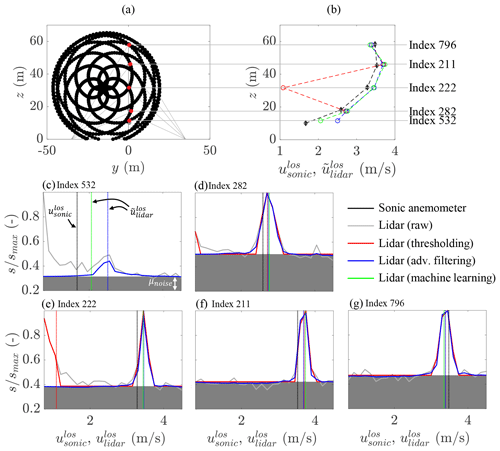

An example of an instantaneous comparison between a lidar return and sonic anemometer data is shown in Fig. 8. This example case illustrates several features observed throughout the full dataset.

Figure 8. Comparison of lidar processing techniques for a single 2 s inflow scan at 02:38:10 (local time) on 9 February 2017. In (a), the instantaneous location of the lidar scan pattern (in black) is overlaid on the met tower (in gray), with the scan location closest to each respective sonic anemometer highlighted (in red). In (b), the sonic line-of-sight velocity and the lidar spectral median line-of-sight velocity are plotted vs. height, with the sonic anemometer data temporally interpolated to the instant at which the lidar passed. The uncertainty bands indicate the sonic anemometer instrument's quoted uncertainty. Panels (c)–(g) show the individual scaled lidar spectra for each of the index locations in (a) and (b), with solid lines (−) indicating spectral magnitude and vertical dashed lines (–) indicating the line-of-sight velocity estimates. Panels (c)–(g) also show the raw lidar spectra, which have no corresponding entries in (b) since the raw data precede the MFE process. Note that the maximum value in panels (c)–(g) is always unity because the authors used the scaled version of the SpinnerLidar output; see Branlard et al. (2013) for context.

First, the turbine is yawed to 347°, as it was for all of the inflow cases, so the locations of the five closest lidar scan indices relative to the five sonic anemometers in Fig. 8a are representative of most of the inflow cases. The only exceptions are several 10 min bins measured with the lidar mounted at a nonzero yaw of −15.1°, which caused the closest lidar scan indices to fall at the plane of symmetry of the rosette scan pattern. For the handful of waked cases, the turbine yaw setting was continuously variable, which led to a wider range of scan indices being used for the validation.

In Fig. 8b, the sonic anemometer velocity estimates indicate a roughly logarithmic boundary layer profile at this instant, and the disagreements between the sonic and lidar line-of-sight velocities are consistent with our understanding of the lidar measurement principles and processing. The smallest error between the two instruments occurs at the middle lidar location, corresponding to δ = 0.2°, while the error grows away from this δ setting because the lidar probe volume samples through a vertically inhomogeneous ABL and because the spectra are truncated at low velocities by the unusable bins at the beginning of the spectra.

Panels (c)–(g), which show the spectral returns for each index, provide insight into the comparison of the three lidar retrieval techniques in Fig. 8b. In general, the advanced filtering and machine learning techniques give similar estimates of the spectral median line-of-sight velocity, while the thresholding technique deviates significantly for indices 532 (Fig. 8c) and 222 (Fig. 8e). As seen in Fig. 8b, the thresholding technique gives no estimate whatsoever for index 532 in Fig. 8c, since the high magnitude of the first usable velocity bin flags this spectrum as a full solid return, which the thresholding technique therefore rejects as described in Sect. 3.1. This solid return is due to ground interference, a common scenario for scan indices with relatively large negative δ such as index 532, depending on the scattering behavior of the laser at the exact location of intersection with the ground. For index 222 in Fig. 8e, the thresholding technique does give an estimate, but the estimate is strongly biased because of a partial solid return. This return is due to interference from the meteorological tower or the sonic anemometer itself and is common in our validation dataset because of the proximity of the lidar scan to the meteorological tower, particularly for the δ = 0.2° indices. Both the advanced filtering and machine learning techniques successfully ignore the signature of this solid interference and produce estimates close to the sonic line-of-sight velocity.

It is also worth noting the small differences in spectral shape between the thresholding and advanced filtering techniques above the threshold limit, which are due to the bilateral smoothing process across adjacent scans of the advanced filtering technique. The machine learning technique implicitly performs its own smoothing operation (without regard to adjacent scans), but no visualization of this smoothing is possible since the machine learning technique generates no output spectra.

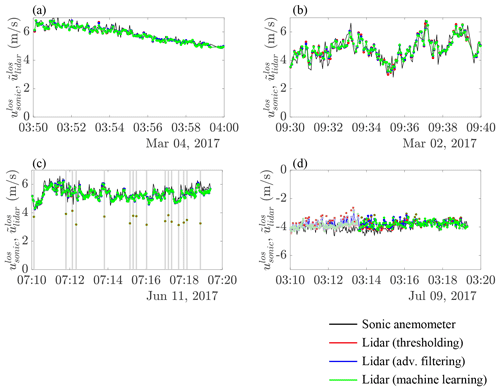

Next, we show sample processed data from 10 min bins in Fig. 9 that illustrate several points about the time series of processed lidar data. Figure 9a represents a bin with low turbulence intensity, for which the spectral median line-of-sight velocity from each of the three lidar retrieval techniques tracks the sonic line-of-sight velocity qualitatively well. Figure 9b shows a case of higher turbulence intensity, in which all three retrieval methods again perform similarly well, though small discrepancies between methods are observable. Figure 9c shows a bin where solid returns produce a number of instances in which none of the three retrieval techniques yields an estimate. Figure 9d is a wake case, with a handful of instances in which the thresholding and machine learning approaches again produce no estimates because of strong solid returns from the meteorological tower. While the advanced filtering technique achieves higher data availability for this bin, its estimates between 03:14 and 03:16 show a stronger bias than those of the other two techniques. Note that the first half of the bin is removed from the comparison because the in-plane separation between the lidar beam and sonic anemometer exceeds the 2 m tolerance.

Figure 9. Time series comparisons of the lidar spectral median line-of-sight velocity with the nearby sonic anemometer line-of-sight velocity for four sample 10 min bins at the selected scan index positions. Panels (a)–(c) are inflow cases, and (d) is a wake case. Faded color indicates scans for which the in-plane distance between the lidar beam and the center of the sonic anemometer was larger than the 2 m tolerance. In the machine learning results, a red X indicates a reading removed due to low confidence as explained in Sect. 3.3.3. A vertical gray line indicates an instance when none of the three processing techniques returned a reading.

Other than the cases with solid returns, the agreement of all three retrieval methods with the projected sonic anemometer data is qualitatively good. The following sections provide a more quantitative, statistical perspective on the performance of each retrieval method.

4.2 Inflow cases

This section contains the results from our analysis of the 69 bins with inflow cases described in Table 1. First, we offer insight on the trends in the lidar errors for the cases without and with solid interference, which have total return counts of 47 927 and 7183, respectively. Next is a description of the practical significance of these trends for wind turbine applications.

4.2.1 Error trends without solid interference

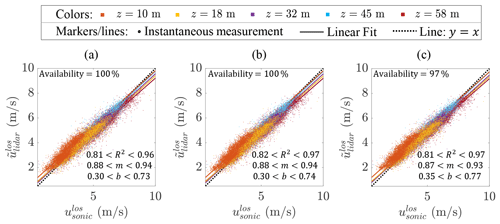

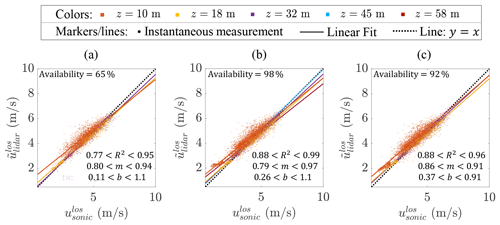

Considered first are the inflow data filtered to exclude any returns with solid interference, as described in Sect. 2.4.2. Figure 10 shows scatterplots of all such results, differentiated by height, for the three retrieval techniques. The similarity of the three panels is expected: all three techniques produce roughly the same mean and random error when no solid interference is present. Notably, the data availability for the machine learning technique is 3 % lower than for the other two techniques (see Sect. 3.3.3 for the explanation), though this gap might be narrowed with an improved machine learning architecture and training scheme. While the overall performance of the three techniques for these non-interfered returns appears fairly similar, further analysis is warranted to better understand several nuances of the nacelle-mounted CW lidar retrieval problem.

Figure 10. Instantaneous lidar spectral median line-of-sight velocity estimates versus sonic anemometer line-of-sight velocity estimates for inflow cases without solid interference from the (a) thresholding, (b) advanced filtering, and (c) machine learning techniques. The variations shown for the coefficient of determination, R², the linear fit slope, m, and the linear fit offset, b, correspond to the ranges observed across the fits at all five comparison heights.

The sources of bias observed in Fig. 10 are several, though only one is likely related to the retrieval technique. This retrieval-related bias source is the truncation of RoIs that fall at least partially over the unusable velocity bins at the beginning of the spectra. This truncation artificially increases the lidar spectral median at low velocities, which is indeed the trend observed when comparing the lidar and sonic line-of-sight velocities at the 10 m height (i.e., where velocities are lowest on average). While the machine learning technique offers the possibility of eliminating such a bias, as noted in Appendix A, we do not observe any practically significant differences in the mean offset or slope of the linear trend lines between retrieval techniques in Fig. 10. More advanced or exhaustive training may be needed to reap this benefit from the machine learning approach.

A bias remains even at higher velocities in Fig. 10, which suggests the presence of another, most likely more dominant, source of bias. This source probably stems from the difference between the spatial distributions of the measurement volumes of the sonic anemometer and the lidar. For example, because the ABL mean velocity profile is concave (its slope decreases asymptotically with increasing height), the velocity averaged over the height range of the probe volume can be lower than the velocity at its center (e.g., as measured by a sonic anemometer at the height of the lidar focus point); this is in fact the trend observed for the comparisons with the sonic anemometer at 58 m (see also Fig. 8b).
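The sign of this effect follows from the concavity of the profile: averaging a concave function over a symmetric interval gives a value below its center value. The Python/NumPy sketch below illustrates this with a neutral log-law profile. The friction velocity, roughness length, uniform weighting, and ±10 m vertical extent are all hypothetical choices; the actual lidar weighting function and the beam-inclination geometry are not modeled.

```python
import numpy as np


def log_law(z, u_star=0.4, z0=0.05, kappa=0.4):
    """Neutral log-law wind speed (hypothetical u* and roughness length z0)."""
    return (u_star / kappa) * np.log(z / z0)


def height_average(z_center, half_extent, n=2001):
    """Uniformly weighted average of the log-law profile over z_center +/- half_extent."""
    z = np.linspace(z_center - half_extent, z_center + half_extent, n)
    return log_law(z).mean()


z_c, dz = 58.3, 10.0   # top sonic height; the +/-10 m extent is illustrative only
averaging_bias = height_average(z_c, dz) - log_law(z_c)   # negative for a concave profile
```

For these assumed values the difference is only a few millimeters per second, so in practice the magnitude depends strongly on the real probe-volume extent and weighting.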

Another source of bias is local flow blockage around the sonic anemometers, boom arms, and meteorological tower, which the lidar beam may not sense, depending on its relative position (note that direct waking of the sonic anemometers by the meteorological tower should not occur in this study because of the boom orientation and the constraint on wind direction mentioned above). Furthermore, there is potential for internal bias in both the sonic anemometers and the lidar (Lindelöw-Marsden, 2009).

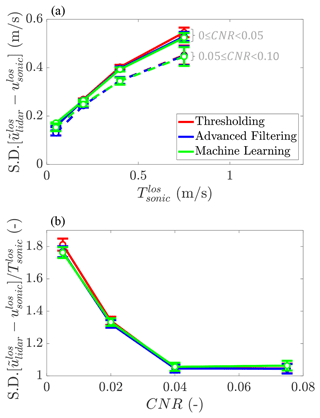

Related to scatter, the two main sources in Fig. 10 are the spectral width resulting from turbulence within the lidar probe volume and amplitude noise. Based on the findings of Simley et al. (2014), we expect the former to be larger than the latter, especially in high-turbulence conditions, since the rms error due to the lidar's probe volume averaging scales linearly with turbulence magnitude. An approximately linear scaling is confirmed in Fig. 11a, which bins the data for four of the five comparison heights based on the running standard deviation of the sonic anemometer velocity along the line of sight, as described in Sect. 2.4.2 (the bottom comparison height was omitted because of irregular trends possibly related to the proximity of the RoIs to the unusable bins of the lidar). Figure 11a suggests that much of the random error is a function of turbulence magnitude. On the other hand, extrapolating the trend to zero turbulence gives a baseline standard deviation of error of roughly 0.08 to 0.15 m s−1, which is primarily due to the interference of amplitude noise in the parameter estimation problem. Because of the coarse resolution of the binning and the possibility that the trend line flattens out at low turbulence due to the discretization of the lidar spectra, we conservatively take the value at the first bin as the estimated contribution of amplitude noise, which is 0.13 and 0.16 m s−1 for the higher and lower ranges of CNR, respectively, for the thresholding and advanced filtering techniques. These values are 0.15 and 0.17 m s−1, respectively, for the machine learning technique. Note that the uncertainty of the sonic anemometer velocity, quoted at ±0.01 m s−1 for usonic and vsonic, is small relative to the above values.
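The zero-turbulence extrapolation amounts to fitting a straight line to the binned error SD and reading off its intercept. The Python/NumPy sketch below shows this with made-up binned values chosen only to illustrate the arithmetic; they are not the data of Fig. 11a, and, as noted above, the study conservatively uses the first-bin value rather than this intercept.

```python
import numpy as np


def baseline_error_sd(sigma_bins, error_sd):
    """Fit SD(error) = a + b * sigma and return (intercept a, slope b)."""
    b, a = np.polyfit(sigma_bins, error_sd, deg=1)  # polyfit returns [slope, intercept]
    return a, b


# Hypothetical binned values (m/s), for illustration only.
sigma_bins = np.array([0.25, 0.50, 0.75, 1.00])
error_sd = np.array([0.17, 0.22, 0.27, 0.32])
intercept, slope = baseline_error_sd(sigma_bins, error_sd)
```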

Figure 11. Convergence of the standard deviation, SD, of error with (a) the magnitude of time-local turbulent fluctuations, i.e., the running standard deviation of the sonic anemometer velocity along the line of sight based on the filtering window described in Sect. 2.4.2, and (b) the carrier-to-noise ratio, CNR, for inflow cases without solid interference. The CNR values are calculated from the advanced filtering technique's output spectra as described in Sect. 2.3. Data plotted derive from the four higher comparison heights, i.e., all heights except the bottom z = 10.1 m position.

The effect of the amplitude noise on random error, which depends in part on the retrieval technique, can be drawn out explicitly as in Fig. 11b, where the influence of turbulence is nominally removed by the scaling of the ordinate and where the standard deviation of errors is binned on CNR instead (CNR calculations use the output spectra from the advanced filtering technique, since these provide a more complete RoI than the thresholded spectra and since the machine learning technique does not produce output spectra). Figure 11b illustrates a principle derived from the Cramér–Rao lower bound (Rye and Hardesty, 1993): the minimum attainable variance of the spectral estimation process is an inverse function of CNR. The slightly better parameter estimation performance of the advanced filtering technique relative to the thresholding technique results from (1) the larger effective CNR of the RoI and (2) the bilateral filtering of the advanced filtering approach. The increase in error for the lower two CNR levels of the thresholding technique compared with the advanced filtering technique is expected, since the thresholding process removes an increasingly large fraction of the signal as CNR → 0. The machine learning technique performs better than the other two techniques at low CNR and worse at high CNR, though the differences in performance between techniques are relatively small for these cases without solid interference.

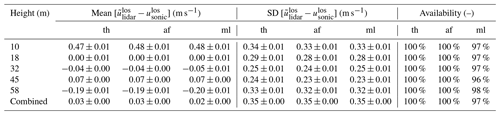

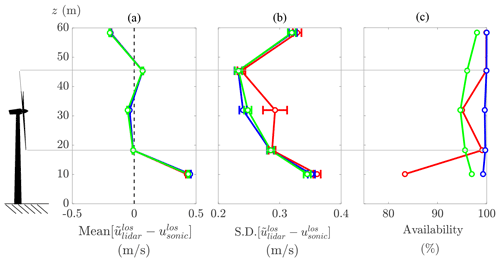

The overall performance of each lidar retrieval technique as a function of height is tabulated in Table 3. Except at the 10 m position, the bias errors are smaller than the random errors and are consistent among the three retrieval techniques, as expected from Fig. 10. The standard deviations of the errors are between 0.23 and 0.33 m s−1 for the advanced filtering and machine learning techniques, while those of the thresholding technique are 1 %–3 % higher. Of the advanced filtering and machine learning techniques, the former is overall more effective within the bounds of the data considered in this study because of its higher data availability and slightly better noise rejection.
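The three quantities tabulated per comparison height (mean error, SD of error, and data availability) can be computed as in the following sketch. It assumes that a missing retrieval is stored as NaN and that error is defined as lidar minus sonic; `retrieval_stats` is a hypothetical helper, and returning NaN statistics when fewer than two estimates exist mirrors the "N/A" entries of the tables.

```python
import numpy as np

def retrieval_stats(lidar_los, sonic_los):
    """Bias, random error, and data availability at one comparison height.

    lidar_los : lidar line-of-sight estimates (m/s); NaN marks returns for which
                the retrieval technique produced no estimate (assumed convention)
    sonic_los : co-located sonic anemometer line-of-sight velocities (m/s)
    """
    lidar = np.asarray(lidar_los, dtype=float)
    sonic = np.asarray(sonic_los, dtype=float)
    have = np.isfinite(lidar)
    availability = 100.0 * have.mean()          # percent of returns with an estimate
    if have.sum() < 2:
        return {"mean": np.nan, "sd": np.nan, "availability": availability}
    err = lidar[have] - sonic[have]             # lidar minus reference
    return {"mean": err.mean(), "sd": err.std(ddof=1), "availability": availability}

# Usage with made-up numbers: three of four returns produce an estimate
stats = retrieval_stats([10.1, 9.9, np.nan, 10.0], [10.0, 10.0, 10.0, 10.0])
empty = retrieval_stats([np.nan], [5.0])        # zero availability -> NaN statistics
```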

4.2.2 Error trends with solid interference

Figure 12 shows the data and linear fits for all inflow cases flagged with solid interference. The lower coefficient of determination (R²) values of the thresholding technique are primarily a consequence of a handful of partial solid returns that are not filtered out and that appear as the outliers near the bottom of Fig. 12a. As for the data without solid interference, a bias again exists for all three retrieval techniques at lower velocities. Note that, unlike Fig. 10, Fig. 12 is dominated by data from the lowest sonic anemometer position because the ground is the strongest source of solid interference in our configuration.
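The slope m, offset b, and R² quoted in the Fig. 12 caption can be reproduced, assuming an ordinary least-squares fit of the lidar estimates on the sonic values, with the hypothetical helper below. The second usage line shows how a single low outlier, like the unfiltered partial solid returns in Fig. 12a, drags R² down.

```python
import numpy as np

def linear_fit(sonic_los, lidar_los):
    """OLS fit lidar = m * sonic + b, plus the coefficient of determination R^2."""
    x = np.asarray(sonic_los, dtype=float)
    y = np.asarray(lidar_los, dtype=float)
    ok = np.isfinite(x) & np.isfinite(y)      # drop returns without an estimate
    x, y = x[ok], y[ok]
    m, b = np.polyfit(x, y, 1)                # coefficients, highest power first
    ss_res = np.sum((y - (m * x + b)) ** 2)
    ss_tot = np.sum((y - y.mean()) ** 2)
    return m, b, 1.0 - ss_res / ss_tot

# Perfectly linear toy data: m = 2, b = 1, R^2 = 1 (to rounding)
m, b, r2 = linear_fit([1, 2, 3, 4], [3, 5, 7, 9])
# One low outlier, mimicking an unfiltered partial solid return: R^2 falls below 0.01
m_out, b_out, r2_out = linear_fit([1, 2, 3, 4, 5], [3, 5, 7, 9, 0])
```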

Figure 12. Instantaneous lidar line-of-sight spectral median velocity estimates versus sonic anemometer line-of-sight velocity estimates for inflow cases with solid interference from the (a) thresholding, (b) advanced filtering, and (c) machine learning techniques. The ranges shown for the coefficient of determination (R²), the linear fit slope (m), and the linear fit offset (b) correspond to those observed across the fits at all five comparison heights.

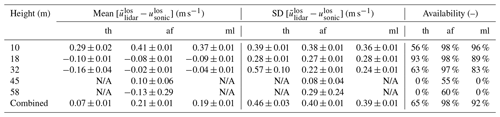

The tabulated data in Table 4 show several of the same trends as the non-interfered data in Table 3. Namely, the bias errors are generally much smaller than the random errors, and the advanced filtering and machine learning techniques outperform the thresholding technique in terms of random error. The notable differences from Table 3 are that the standard deviations of the errors for the advanced filtering and machine learning techniques span a wider range, from 0.08 to 0.38 m s−1 (compared to 0.23 to 0.33 m s−1 before), and that the thresholding technique now has values up to 154 % higher (compared to a maximum of 3 % before), due mostly to the handful of outliers described for Fig. 12a. The thresholding technique thus performs much worse than the other two techniques in terms of both random error and data availability, which is around 30 % lower than for the other techniques over all comparison locations. Of the advanced filtering and machine learning techniques, machine learning provides average improvements of 0.02 and 0.01 m s−1 in the mean and standard deviation of error, respectively, across all comparison heights, but these small gains come at the cost of 6 % lower data availability across all comparison heights.
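One way an ensemble can trade a small error reduction for lower data availability is by withholding estimates on which its members disagree, which is how the machine learning technique's confidence threshold (see Fig. 13c) is pictured here. The sketch below shows a common form of such a gate; the spread criterion, the threshold value, and the name `gated_ensemble_estimate` are assumptions for illustration, since the confidence criterion actually used by the authors is defined elsewhere in the paper.

```python
import numpy as np

def gated_ensemble_estimate(member_preds, max_spread):
    """Ensemble-mean estimate, withheld (NaN) where members disagree too much.

    member_preds : (n_members, n_returns) velocity predictions (m/s)
    max_spread   : hypothetical confidence threshold on the ensemble SD (m/s)
    """
    preds = np.asarray(member_preds, dtype=float)
    mean = preds.mean(axis=0)
    spread = preds.std(axis=0, ddof=1)        # member disagreement per return
    return np.where(spread <= max_spread, mean, np.nan)

# Three members, two returns: the members agree on the first return only
est = gated_ensemble_estimate([[5.0, 3.0], [5.2, 6.0], [4.8, 0.0]], max_spread=0.5)
availability = 100.0 * np.isfinite(est).mean()   # percent of returns kept
```

Tightening `max_spread` removes more uncertain returns, which tends to lower the error of the returns that remain while lowering availability.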

Table 4. Performance of lidar retrieval techniques versus sonic anemometer for inflow cases with solid interference. The abbreviations are threshold (th), advanced filter (af), and machine learning (ml). SD refers to standard deviation. N/A refers to cases in which no statistics are available because data availability is zero.

Figure 13 gives example spectra from the cases with solid interference that illustrate several features and deficiencies of the different retrieval techniques. Figure 13a is a common case, similar to the one shown in Fig. 8c, in which the thresholding technique does not make a prediction because of overwhelming solid interference, whereas the advanced filtering and machine learning techniques both produce a line-of-sight velocity approximately equal to the sonic anemometer value. Figure 13b corresponds to one of the outliers in Fig. 12a: the thresholding technique exhibits a strong bias because the interference spike is high enough to exceed the threshold but not high enough to be flagged as a solid return. Figure 13c is a case of a nonstationary solid return, as evidenced by the strong peak just above 1 m s−1. The thresholding technique is again strongly biased, the advanced filtering technique produces a valid estimate, and the machine learning technique gives an estimate that does not meet its confidence threshold, which is not surprising since the technique was not trained on nonstationary solid returns. While it is unknown what moving object was within the lidar beam path for this particular return, nonstationary solid returns are common when scanning nearby rotating turbines. Figure 13d is a difficult case in which the data are obscured by ground returns: the solid return mask, applied between Fig. 5a and b, removes the RoI signal, so the advanced filtering technique gives no estimate, whereas the machine learning technique produces an approximately correct estimate. As the last two panels show, further development is still needed to improve the advanced filtering and machine learning techniques for cases that combine low velocity with solid interference.
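The Fig. 13b failure mode can be reproduced qualitatively with a toy thresholding retrieval. In the sketch below, the noise-floor estimate (spectral median), the robust noise spread (scaled median absolute deviation), the threshold factor `k`, and the solid-return criterion (`solid_v`, `solid_level`) are all hypothetical choices rather than the thresholding technique of Sect. 2, and the spectrum is synthetic. A moderate spike near zero velocity passes the threshold without tripping the solid-return flag and pulls the spectral median toward zero, whereas a stronger spike is flagged and yields no estimate.

```python
import numpy as np

def spectral_median(v, s):
    """Velocity at which the cumulative spectral power first reaches half its total."""
    c = np.cumsum(s)
    return v[np.searchsorted(c, 0.5 * c[-1])]

def threshold_retrieval(v, spectrum, k=5.0, solid_v=0.5, solid_level=100.0):
    """Toy thresholding retrieval; every parameter here is hypothetical.

    Bins not exceeding noise floor + k * noise SD are zeroed. If the strongest bin
    lies below solid_v (m/s) and exceeds solid_level times the floor, the return is
    treated as a solid return and no estimate (NaN) is given.
    """
    floor = np.median(spectrum)                               # noise-floor estimate
    noise_sd = 1.4826 * np.median(np.abs(spectrum - floor))   # robust spread (MAD)
    s = np.where(spectrum > floor + k * noise_sd, spectrum - floor, 0.0)
    if not s.any():
        return np.nan                                         # nothing above threshold
    peak = np.argmax(spectrum)
    if abs(v[peak]) < solid_v and spectrum[peak] > solid_level * floor:
        return np.nan                                         # flagged as solid return
    return spectral_median(v, s)

# Synthetic spectrum: unit noise floor plus an aerosol peak at 8 m/s
rng = np.random.default_rng(0)
v = np.linspace(0.0, 20.0, 256)
clean = 1.0 + rng.normal(0.0, 0.05, v.size) + 3.0 * np.exp(-0.5 * ((v - 8.0) / 0.4) ** 2)
zero_bin = np.abs(v - 0.3).argmin()
moderate = clean.copy()
moderate[zero_bin] += 60.0     # passes the threshold but is not flagged as solid
strong = clean.copy()
strong[zero_bin] += 200.0      # exceeds solid_level * floor and is flagged

est_clean = threshold_retrieval(v, clean)        # close to 8 m/s
est_moderate = threshold_retrieval(v, moderate)  # pulled toward the spike near 0.3 m/s
est_strong = threshold_retrieval(v, strong)      # NaN (no estimate)
```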

Figure 13. Selected examples of scaled lidar spectra versus lidar line-of-sight velocity and sonic anemometer line-of-sight velocity for inflow cases with solid interference. Solid lines (−) indicate spectral magnitude, and vertical dashed lines (–) indicate the line-of-sight velocity estimates. See text for details. The maximum value in each panel is always unity because the scaled version of the SpinnerLidar output was used; see Branlard et al. (2013) for context.

4.2.3 Practical significance

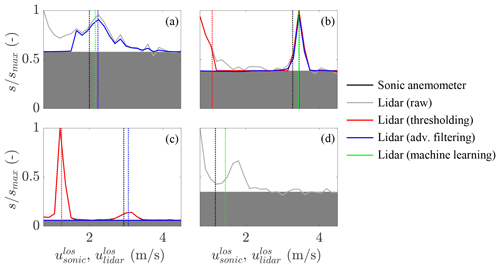

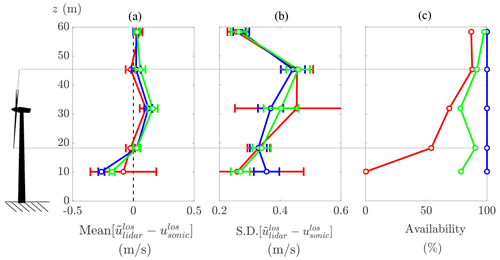

The error trends reviewed above for inflow cases have implications for wind turbine applications featuring nacelle-mounted, forward-facing lidar. Figure 14 shows the aggregate (i.e., both with and without solid interference) error results for the current dataset as a function of height relative to the wind turbine rotor. From a wind turbine control perspective, the most important inflow information is typically the flow within the swept area of the rotor, which is examined below.

The mean errors within the rotor height are small (less than 0.08 m s−1) and consistent among the three retrieval techniques, as shown in Fig. 14a. The standard deviations of the errors within the rotor height shown in Fig. 14b, however, are between 0.23 and 0.29 m s−1 for the advanced filtering and machine learning techniques, and those of the thresholding technique are 0.002–0.05 m s−1 (1 %–22 %) higher, depending on scan position, because of its poor handling of solid returns. In terms of aggregated data availability, the advanced filtering technique has 99.7 % availability, followed by the machine learning technique at 96.2 % and the thresholding technique at 95.5 %. Of the two better-performing techniques, advanced filtering is overall more effective than machine learning within the bounds of the data considered in this study because of its higher data availability and slightly better noise rejection.

In practice, the line-of-sight uncertainties quoted above increase when reprojected onto the wind direction. Assuming the lidar center axis is aligned with the wind direction and limiting the deviation of the line of sight from the wind direction to the vertical limits of the rotor height in this study for the given focus distance, the correction is an increase of no more than 2 %, which changes none of the values quoted in the previous paragraph by more than 0.01 m s−1. If the deviation of the line of sight from the wind direction is instead limited to ±30° (the outer cone angle of the DTU SpinnerLidar in this study), the correction is an increase of ≤15 %. A further increase in uncertainty arises from the assumptions typically required about the local wind direction when inferring a velocity from a single line of sight; these directional biases scale with (1) the rms magnitude of the transverse (i.e., y–z plane) wind components at the scan perimeter of interest and (2) the tangent of the deviation of the line-of-sight angle from the mean wind direction (Simley et al., 2014).
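The reprojection factors and the directional bias can be made concrete with a first-order model. If the line of sight deviates by an angle θ from the wind direction, the measured velocity is u cos θ + v⊥ sin θ, where u is the along-wind component and v⊥ is the transverse component in the plane of the deviation. Dividing by cos θ under the assumption v⊥ = 0 scales line-of-sight errors by 1/cos θ and leaves a bias of v⊥ tan θ. The helper names and the example inputs below are hypothetical; the numbers follow directly from the trigonometry (for example, 1/cos 30° ≈ 1.155, and a 2 % increase corresponds to θ ≈ 11.4°).

```python
import math

def reprojection_factor(theta_deg):
    """Growth factor 1/cos(theta) of a line-of-sight error after reprojection."""
    return 1.0 / math.cos(math.radians(theta_deg))

def max_angle_for_increase(frac):
    """Largest deviation angle (deg) for which 1/cos(theta) <= 1 + frac."""
    return math.degrees(math.acos(1.0 / (1.0 + frac)))

def directional_bias(v_perp, theta_deg):
    """First-order bias v_perp * tan(theta) from assuming zero transverse wind."""
    return v_perp * math.tan(math.radians(theta_deg))

f30 = reprojection_factor(30.0)            # about 1.155, i.e. the <= 15 % increase
theta_2pct = max_angle_for_increase(0.02)  # about 11.4 deg for a 2 % increase
sd_30 = 0.29 * f30                         # illustrative: a 0.29 m/s SD grows to ~0.33 m/s
bias = directional_bias(0.5, 30.0)         # hypothetical 0.5 m/s transverse wind -> ~0.29 m/s
```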

Figure 14. Aggregate results from inflow cases for (a) mean error, (b) standard deviation (SD) of error, and (c) data availability plotted versus height above the ground. The dimensions of the V27 turbine used in the experiment are shown for reference. The line colors are thresholding (red), advanced filtering (blue), and machine learning (green).

4.3 Waked cases

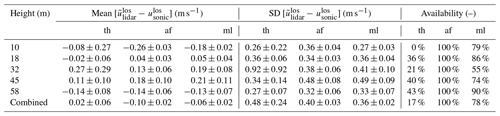

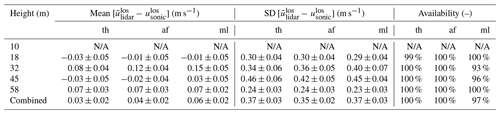

This section presents the results of our analysis of the five bins of waked cases described in Table 2. We forgo most of the error trend analysis performed in the previous section, primarily because the smaller sample size of waked cases does not support a robust analysis. Instead, we show results that, despite the relatively large error bars on the data, hint at the practical significance of the lidar errors and of the variations between retrieval techniques for rear-mounted lidars on wind turbines.

Tables 5–6 and Fig. 15 are analogous to Tables 3–4 and Fig. 14, respectively, but for the waked rather than the inflow cases. Again, the ranking of the three retrieval techniques by efficacy (from highest to lowest) is advanced filtering, machine learning, and thresholding, and again the standard deviations of error are substantially larger than the mean errors. For the advanced filtering and machine learning techniques, the standard deviations of error for cases within the rotor height range from 0.29 to 0.45 m s−1 without solid interference and from 0.34 to 0.49 m s−1 with solid interference. The increase in the upper bound of the standard deviations relative to the inflow cases is expected since the wake is a relatively turbulent environment, which degrades the precision of the volume-averaged lidar measurement relative to the point measurement of a sonic anemometer, as demonstrated by Fig. 11a. As shown in Fig. 15, both the thresholding and machine learning techniques have significantly lower data availability in the waked cases than in the inflow cases (note the different horizontal axis limits in Figs. 14 and 15). This is related to a higher proportion of solid returns from the meteorological tower in the waked dataset, because less control was exerted over the yaw position of the turbine for the waked cases.
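The turbulent fluctuation magnitude used in Fig. 11a is described in its caption as a running standard deviation of the sonic anemometer velocity along the line of sight. A sketch of that computation follows; the centred window, its length in samples, the sample standard deviation, and the helper names `los_projection` and `running_sd` are assumptions, since the actual filtering window is defined in Sect. 2.4.2. In the more turbulent waked flow this quantity is larger, consistent with the larger errors reported above.

```python
import numpy as np

def los_projection(u, v, w, los_unit):
    """Project sonic wind components (u, v, w) onto a line-of-sight direction."""
    n = np.asarray(los_unit, dtype=float)
    n = n / np.linalg.norm(n)                  # normalise in case it is not unit length
    u, v, w = (np.asarray(c, dtype=float) for c in (u, v, w))
    return n[0] * u + n[1] * v + n[2] * w

def running_sd(x, window):
    """Centred running sample SD over `window` samples; NaN where the window is incomplete."""
    x = np.asarray(x, dtype=float)
    out = np.full(x.size, np.nan)
    half = window // 2
    for i in range(half, x.size - window + half + 1):
        out[i] = x[i - half:i - half + window].std(ddof=1)
    return out

# Usage with made-up numbers: a vertical line of sight picks out w only
u_los = los_projection([1.0, 2.0], [0.0, 0.0], [0.0, 3.0], [0.0, 0.0, 2.0])
sigma = running_sd([0.0, 0.0, 0.0, 10.0, 0.0, 0.0, 0.0], window=3)
```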

Figure 15. Aggregate results from waked cases for (a) mean error, (b) standard deviation (SD) of error, and (c) data availability plotted versus height above the ground. The dimensions of the V27 turbine used in the experiment are shown for reference. The line colors are thresholding (red), advanced filtering (blue), and machine learning (green).

The previously mentioned increase in uncertainty from the assumptions required about the local wind direction has been quantified specifically for the waked case by Kelley et al. (2018), who simulated the SpinnerLidar with a 3D focus distance in a turbulent wake at SWiFT using large-eddy simulation. They found an additional mean error after projection on the order of 3 % due to deviations of the wake velocity direction from the nominal flow direction. Accounting for this increase in mean error, as well as the maximum 2 % increase in all errors due to reprojection onto the wind direction within the vertical limits of the rotor height, the maximum mean and standard deviation of error for the aggregated waked cases within the rotor height can be estimated at 0.13 and 0.45 m s−1, respectively, for the advanced filtering technique and at 0.17 and 0.47 m s−1, respectively, for the machine learning technique.

As noted, the sample size of five bins is too small for a complete analysis. Furthermore, the spatial inhomogeneity of waked flow adds uncertainty to the results, since a full analysis should ideally be blocked to account for differences in retrieval performance at different points of interest within the wake (e.g., the shear layer and hub flow regions) and at different turbine thrust conditions. A more exhaustive dataset is needed and will be sought in future work.

Table 5. Performance of lidar retrieval techniques versus sonic anemometer for waked cases without solid interference. The abbreviations are threshold (th), advanced filter (af), and machine learning (ml). SD refers to standard deviation.

All three lidar retrieval techniques can produce similar performance when no solid interference is present (assuming the machine learning model is not exposed to out-of-distribution samples). However, the advanced filtering and machine learning approaches generally give better performance than the thresholding technique when solid interference is present.

Although the setup of our validation study, which features lidar scans directly over the solid obstruction of the meteorological tower, may unfairly penalize the thresholding technique for use within the rotor height, we note that inflow lidar scans in the field may in fact need to be performed near existing meteorological towers to achieve higher spatial resolution of the incoming flow. Other cases not considered in the current datasets, for which the ability to effectively reject non-aerosol returns is important, include interference from the optical window (i.e., the boresight interference that affects SpinnerLidar measurements), nearby turbines, and precipitation. Nearby turbines can present a particularly complicated case because their moving rotor blades shift the solid interference peak away from its typically assumed location at zero velocity. The much poorer performance of the thresholding technique observed above for all kinds of cases with solid interference is a main motivation for using higher-fidelity techniques.

Comparing the advanced filtering and machine learning approaches, the former showed a slight performance advantage for the data considered in our study. However, the machine learning technique may have greater potential for improvement. The advanced filtering technique, which required significant development effort from subject matter experts, still requires continued development for out-of-distribution cases, as shown by the outliers and data loss in Fig. 12, for instance. In contrast, continued development of the machine learning technique can be accommodated at relatively low (computational) expense by increasing the size of the networks and/or the diversity of training cases, and this process requires less expert involvement. The machine learning approach therefore reduces the ongoing expert commitment to the lidar retrieval problem, shifting the workload to a computer. Further, the machine learning technique does not truncate returns that fall at the beginning of the spectra but implicitly accounts for these (as well as potentially negative) velocities in the QoI estimation process. The machine learning approach thus provides a framework for both more efficient workflow development and higher accuracy than is achievable with a series of advanced user-generated filters.