the Creative Commons Attribution 4.0 License.

the Creative Commons Attribution 4.0 License.

Optimizing cloud motion estimation on the edge with phase correlation and optical flow

Paytsar Muradyan

Rajesh Sankaran

Robert C. Jackson

Seongha Park

Sean A. Shahkarami

Dario Dematties

Yongho Kim

Joseph Swantek

Neal Conrad

Wolfgang Gerlach

Sergey Shemyakin

Pete Beckman

Nicola J. Ferrier

Scott M. Collis

Phase correlation (PC) is a well-known method for estimating cloud motion vectors (CMVs) from infrared and visible spectrum images. Commonly, phase shift is computed in the small blocks of the images using the fast Fourier transform. In this study, we investigate the performance and the stability of the blockwise PC method by changing the block size, the frame interval, and combinations of red, green, and blue (RGB) channels from the total sky imager (TSI) at the United States Atmospheric Radiation Measurement user facility's Southern Great Plains site. We find that shorter frame intervals, followed by larger block sizes, are responsible for stable estimates of the CMV, as suggested by the higher autocorrelations. The choice of RGB channels has a limited effect on the quality of CMVs, and the red and the grayscale images are marginally more reliable than the other combinations during rapidly evolving low-level clouds. The stability of CMVs was tested at different image resolutions with an implementation of the optimized algorithm on the Sage cyberinfrastructure test bed. We find that doubling the frame rate outperforms quadrupling the image resolution in achieving CMV stability. The correlations of CMVs with the wind data are significant in the range of 0.38–0.59 with a 95 % confidence interval, despite the uncertainties and limitations of both datasets. A comparison of the PC method with constructed data and the optical flow method suggests that the post-processing of the vector field has a significant effect on the quality of the CMV. The raindrop-contaminated images can be identified by the rotation of the TSI mirror in the motion field. The results of this study are critical to optimizing algorithms for edge-computing sensor systems.

- Article

(10219 KB) - Full-text XML

- BibTeX

- EndNote

Converting cloud images captured by a ground-based sky-facing camera into a time series of motion vectors has implications for reporting local weather and short-term forecasting of solar irradiance (Jiang et al., 2020; Radovan et al., 2021). Phase correlation (PC) estimates global translative shift between two similar images by detecting a peak in their cross-correlation matrix which is used to estimate the cloud motion vectors (CMVs) from the satellite and ground-based sky camera images (Leese et al., 1971; Dissawa et al., 2017, 2021; Zhen et al., 2019; Huang et al., 2011). On the other hand, optical flow (OF) estimates the pixel-wise motion assuming the conservation of brightness of the object pixels in two frames (Apke et al., 2022; Mondragón et al., 2020; Peng et al., 2016). However, OF is sensitive to image noise and the variation in lighting. Both OF and PC methods fail to detect textureless motion. Other object-based cloud tracking methods used in radar and satellite meteorology require cloud identification before the tracking stage. The cloud identification approaches vary from threshold-based to texture-based methods and machine learning methods (Steiner et al., 1995; Raut et al., 2008; Park et al., 2021).

The texture-based methods and the machine learning models add computational overheads, complicating their use in real-time applications. In infrared and microwave satellite images and radar images, the threshold of brightness temperatures and reflectivity marks a physical distinction of the features in the scene. However, for the cloud images in the visible spectrum, thresholds of RGB values may not be a meaningful criterion to distinguish the properties of the clouds because they are affected by the lighting conditions and time of the day. The texture-based techniques are also susceptible to detection errors due to reflections and shadows caused by solar zenith angles. While the optical flow (OF) method estimates dense motion field (Horn and Schunck, 1981; Chow et al., 2015), it also suffers from the limitations in visible camera images and may require segmentation or background subtraction before the images are processed (Denman et al., 2009; Wood-Bradley et al., 2012; El Jaouhari et al., 2015).

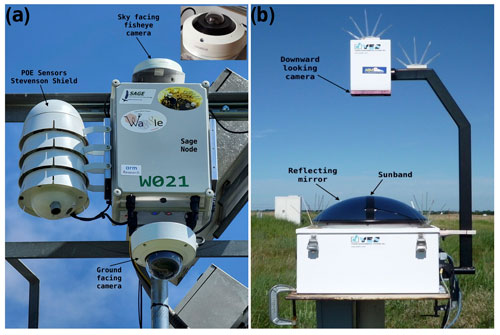

The Sage Project is designing and building a new kind of reusable cyberinfrastructure composed of geographically distributed sensor systems (Sage Waggle nodes shown in Fig. 1a) that include cameras, microphones, and weather and air quality sensors generating large volumes of data that are efficiently analyzed by an embedded computer connected directly to the sensor at the network edge (Beckman et al., 2016; https://sagecontinuum.org/, last access: 27 February 2023). An edge device rapidly analyzes the data in real time at the location where they are collected, and continuously sends and receives feedback from connected remote computing systems and other similar devices. In such networks including Sage, the computational efficiency of the algorithm is critical. The PC method can be implemented without preprocessing images and is robust to noise and changes in illumination, as it works by only correlating the phase information (Chalasinska-Macukow et al., 1993; Turon et al., 1997). This eliminates the burden of separating the background from the objects to be tracked. A straightforward implementation of the PC method in the frequency domain using the fast Fourier transform (FFT) is computationally efficient, and hence it is a natural choice to detect the cloud motion vectors from the hemispheric camera images at the edge.

Figure 1(a) Sage node deployment at the Atmospheric Radiation Measurement (ARM) user facility in Lamont, OK., with a fisheye camera for sky monitoring. (b) Downward-looking total sky imager (TSI) with rotating mirror sunband and setup.

The PC method is efficient for uniform rigid body motion, i.e., when an object's shape and size are preserved, and multiple objects in the scene are moving with the same velocity. There are a few limitations to the PC method that affect its applicability in tracking cloud motions in a sky-facing camera. First, the PC method is less efficient when multiple peaks in the correlation matrix are observed. This occurs when cloud features are moving with different velocities, as each peak is associated with the motion of one or more independent features in the images. This limitation is overcome by dividing the image into sufficiently smaller subregions or blocks and employing the PC separately for each block (Leese et al., 1971). As the multi-layer clouds with different cloud base heights move independently, Peng et al. (2016) used adaptive blocks for each cloud type.

Second, the changing cloud texture and geometries may cause incoherent motion vectors in some image blocks. Therefore, additional quality control measures are applied to remove the spurious CMVs, usually assuming that a spurious CMV substantially deviates from its surrounding CMVs in the presumably smooth velocity field (Westerweel and Scarano, 2005). For the assumption of the coherent velocity field, smaller block sizes are preferred. The optimal block size is determined by the maximum expected displacement during the frame interval.

Third, the ground-based cameras frequently encounter contamination on the mirror dome or hemispherical lens, obscuring the clouds during and after a precipitation event, and automated identification and removal of precipitation-contaminated images are critical (Heinle et al., 2010; Kazantzidis et al., 2012; Gacal et al., 2018; Voronych et al., 2019). The distortion of images caused by the presence of raindrops and the edge detection methods are used to identify raindrop contamination (Kazantzidis et al., 2012; Voronych et al., 2019). In this paper, we propose the use of motion vectors for detecting raindrop contamination on the rotating TSI mirror.

Finally, while it is common for cameras to produce high-resolution three-channel images, the PC method utilized only a single channel. Hence, either the grayscale image or one of the RGB channels is used. The dependence of CMV stability on the choice of image channels is undocumented.

Investigating the sensitivity of the motion vectors to the block sizes, the frame frequency, and its response to different spectral channels will help in the effective implementation of the method. Therefore, in this paper, we evaluate the performance of the blockwise PC with three visible channels, the grayscale, and the red to the blue ratio in two block sizes and two frame rates. We also demonstrate the effect of change in the image resolution and the change in frame rate on the CMV quality. We validated the PC method with constructed data and compared it with the OF method. The wind and ceilometer measurements are used for additional validation to show consistency with independent atmospheric measurements. However, wind retrieval is not an objective of the paper. The data, methodology, and algorithm are described in Sect. 2. The results are shown in Sect. 3, and their implications for the Sage edge-computing platform are discussed in Sect. 4.

2.1 Data

In this paper, we mainly used data from the Atmospheric Radiation Measurement (ARM) user facility's Southern Great Plains (SGP) atmospheric observatory (36.7∘ N, 97.5∘ W); in particular, at the supplemental S1 and central C1 facilities in Lamont, OK, due to long-term data availability from colocated instruments for wind and cloud base height measurements. The Sage camera images are used in Sect. 3.3.2.

2.1.1 Total sky imager

The total sky imager (TSI) is a mounted full-color digital camera looking downward toward a rotating hemispherical mirror (Fig. 1b). Daytime full-color hemispheric sky images are obtained from TSIs operational at the ARM SGP atmospheric observatory (Morris, 2005; Slater et al., 2001). The images recorded over the S1 site every 30 s (Morris, 2000) during the day on 26 July 2016 are used to demonstrate the sensitivity of the method described later on. The central sky region of 400×400 pixels is used to compute the CMV during the 06:36 to 20:35 CDT window. The data over the C1 site between 14 October 2017 and 14 August, 2019 are used for comparison of CMVs with the wind data.

2.1.2 Sage camera

Hanwha Techwin America's fish-eye camera (XNF-8010RV X series), hosted atop a Sage node and pointed toward the sky at the Argonne Testbed for Multi-scale Observational Studies (ATMOS) (41.70∘ N, 87.99∘ W), has a six MP CMOS sensor providing 2048×2048 pixels full-color images. Unlike the TSI camera, the Sage fish-eye camera lacks a sunband and a rotating mirror (Fig. 1). Images recorded from this camera every 30 s from 06:00 to 17:00 CDT on 13 and 14 February 2022 are used to demonstrate the effect of camera resolution and frame rate on the sensitivity of the method.

2.1.3 Wind profiling radar and ceilometer

To validate the estimates of the CMV in our work, cloud base height (CBH) and wind measurements are obtained from the colocated ceilometer and the wind profiling radar (WPR), respectively (Muradyan and Coulter, 1998; Morris et al., 1996). The ceilometer is an autonomous, ground-based active remote sensing instrument that transmits near-infrared pulses of light and detects multi-layer clouds from the signal backscattered from cloud droplets that reflect a portion of the energy back toward the ground. (Morris, 2016). The laser ceilometer measurements extend up to 7.7 km with 10 m vertical resolution. The wind profiles for comparison were obtained from the 915 MHz WPR, which transmits electromagnetic pulses in vertical and multiple tilted directions (three-beam configuration is used at SGP) to measure the Doppler shift of the returned signal due to atmospheric turbulence from all heights (Muradyan and Coulter, 2020). The consensus-averaged winds are estimated at an hourly interval and are available from 0.36 km to about 4 km at 60 m vertical resolution. We used the CBH and wind estimates over the SGP C1 site from 14 October 2017 to 14 August 2019.

2.2 Phase correlation using FFT

The phase correlation method for estimating motion in the images is based on a property of the Fourier transform that a translational shift in two images produces a linear phase difference in the frequency domain of the Fourier transform of the images (Leese et al., 1971). In other words, a signal f2 that is related to signal f1 by a translation vector (dx, dy), then their Fourier transforms denoted by F1 and F2 have equal magnitudes, but with a phase shift related to the normalized cross power spectrum, as follows.

where is the complex conjugate of F2. The phase shift term is the Fourier transform of the shifted Dirac delta function. Hence, we can calculate dx and dy by computing the inverse Fourier transform of the cross-power spectrum and finding the location of the peak (Leese et al., 1971; Tong et al., 2019). Therefore, PC in small image blocks, between the subsequent images, is rapidly computed using FFT. Because the phase correlation is executed only for a small image block, it is possible to employ parallel computation to further speed up the estimation of motion for a large dataset.

The following procedure describes the steps in implementing PC to estimate the shift in images I1(x,y) at time t1 and I2(x,y) at time t2. Let image I2 be spatially translated by with respect to the image I1:

-

Obtain the FFT of the images I1(x,y) and I2(x,y) as I1(μ,ν) and I2(μ,ν).

-

Compute C(μ,ν) by multiplying the FFT of the first image, and the complex conjugate of the second image. C(μ,ν) is the cross-covariance matrix in Fourier space.

-

Obtain an inverse FFT of . The real part of the outcome gives a covariance matrix Cov(p,q) in image space.

The above implementation of the PC algorithm is available in several programming languages, notably C, Python, and R in packages OpenCV (mulSpectrums), Skimage (phase_cross_correlation), and ImageFX (pcorr3d). For this study, we used a custom Python implementation, the same as Picel et al. (2018); Raut et al. (2021). (See the “Code availability” section.)

If image I2 is a spatially translated version of the image I1, then the phase covariance matrix Cov(p,q) is zero everywhere except for a sharp peak at the location corresponding to the displacement between the two images. The peak intensity is a good measure of the quality of the motion vector. Due to the reasons mentioned in Sect. 1, the actual peak in the covariance matrix can be fuzzy, and it corresponds to the best-fitting translational motion in the images. Sharp single-pixel peaks can sometimes occur in the covariance matrix, due to the high-frequency noise and artifacts in the images, which are flattened using Gaussian smoothing on Cov(p,q) with σ=3. An example of the procedure is given in Raut et al. (2021).

For each image block, the peak covariance location is assigned as the local motion vector in image I2 with reference image I1. As per the meteorological convention for winds, the U component is positive for an eastward flow, and the V component is positive for a northward flow. The location of the peak covariance from the center of the matrix gives the shift in the image features during the image interval along the X and Y dimensions of the image. We saved X and Y shifts and computed the motion vectors per minute. The image top is oriented towards the north, and therefore in the subsequent sections, the motion in the X and Y directions are referred to as U and V components, respectively.

2.3 Constructed data for validation

For studying the accuracy and quantitative error analysis of the method, a dataset with the known displacement vectors is needed. Synthetic or reconstructed image sequences are best suited for this task, as managing the displacement is trivial in such a dataset compared to the real dataset. However, the constructed dataset should be made with care to avoid unreal augmentations and artifacts while incorporating possible variations of the features from image to image. Such a dataset, although possibly not a perfect representation of the real data, can be used to study the properties of the algorithms.

These images can then be translated by the desired amount to achieve the cloud motion effect. We created image pairs by reconstructing the 2060 samples of Sage camera images classified as cloudy by the algorithm described in Dematties et al. (2023) in their clusters 3 and 8. The images were selected to have cloudiness in the central 200×200 pixel region. The pair of images were created and then subjected to the following modifications using an edge filter A and a flat filter B.

The first image was created with the following operations:

-

The original image was converted to grayscale.

-

Addition of Gaussian noise with mean zero and standard deviation 1.

-

Convolution with Kernel A.

-

Two iterations of erosion followed by dilation by Kernel B, i.e., morphological opening of the image.

-

Cropped the images to achieve the desired displacement.

The second image of the pair was created by modifying a few operations as follows:

-

Reverse the RGB colors in the original image before converting it to grayscale. This reversing of operations, also known as color augmentation, creates a spectrally different image with the same structure.

-

Addition of Gaussian noise with mean zero and 1 standard deviation.

-

Convolution with Kernel A.

-

One iteration of morphological opening by Kernel B.

-

Translated and cropped the images for the desired displacement.

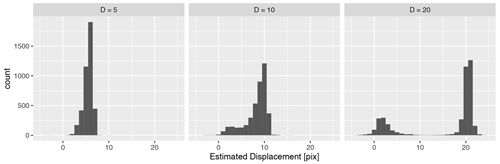

We translated the images by 5, 10, and 20 pixels in both X and Y directions for ease of comparison and interpretation of the results (see Sect. 3.1).

2.4 Outliers in the CMV field

When the image block belongs to the clear sky or the scene has changed beyond recognition by the correlation, the peak in the covariance matrix is usually near the boundaries of the block; thus giving artificially large displacements. Such vectors are easily identified using a maximum velocity limit Vmax. For this analysis, we used . If the Vmax is smaller than the expected maximum speed, then a larger block size is recommended.

Removing large magnitude vectors smooths the field; however, some motion vectors of reasonable magnitude but spurious directions remain. Such spurious vectors can be removed by comparing them with the surrounding motion vectors.

We compared each vector with the normalized median fluctuation of the neighboring blocks (Westerweel and Scarano, 2005). Consider 3×3 data with u0 as the displacement vector at the center block, as displacement vectors of the neighbors, and um as the median of neighbors, not including the central vector. Then the residuals (ri) of all neighbors are computed as to obtain the median residual (rm). The normalized median fluctuation r0 is given by

ϵ is the minimum normalization level that represents the acceptable fluctuation, usually 0.1–0.2. The CMV vectors with normalized median fluctuation values over 6 are discarded as outliers.

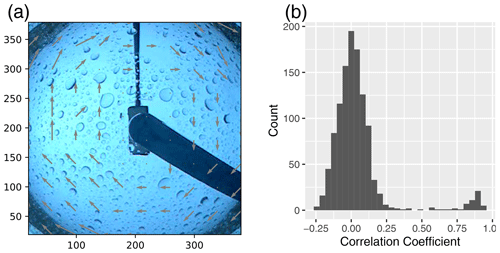

2.5 Identification of raindrop contamination

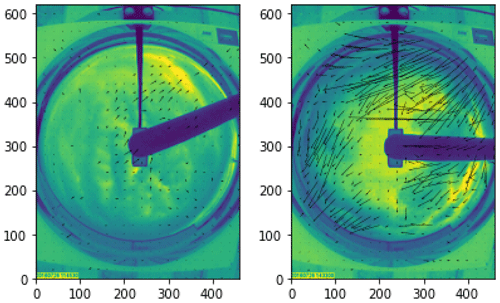

The CMV is not valid when rainwater present on the reflecting mirror obscures the clouds. However, in such a scenario, the rotation of the raindrop-contaminated mirror produces a rotating vector field, as shown in Fig. 2a. We correlated the estimated CMV fields with the mean of manually identified contaminated CMV fields and found that the correlation coefficient, r>0.4, is associated with the rotation of the raindrop-contaminated mirror (Fig. 2b). Because of the sharp edges of the raindrops, the rotational pattern is efficiently captured with few raindrops contaminating the mirror. However, it struggles to detect contamination when the drops are concentrated at the center of the dome. Therefore, after the rotation is detected, the next 10 min of data are flagged as contaminated even if no subsequent rotation is detected.

Figure 2(a) An example of the circular motion field generated every 2–4 min by the rotation of the raindrop-contaminated mirror of TSI. (b) The histogram of the correlation coefficient between the mean rotational vector field and CMV fields on 2 January 2017 shows a robust separation of raindrop-contaminated frames from the clean frames.

2.6 Setup for sensitivity analysis

To test the algorithm’s sensitivity to the block size, we divided the 400×400 pixel sky area into a grid of 10×10 and 20×20 blocks, and we referred to these as block length 40 and 20 pixels, respectively, in Figs. 5–8. Note that the choices for the number or size of blocks are restricted by the Vmax on one end and the neighborhood criteria on the other. For example, if the expected Vmax is 7 pixels per min−1, then the blocks should be at least 21 pixels wide (Sect. 2.4). On the other hand, for the 10×10 grid (block width of 40 pixels) with a 1-pixel neighborhood, the correction applies to the central region of 8×8 blocks only. Therefore, increasing block sizes reduces the number of blocks in the sky region, which reduces the scope of the neighborhood method in the error correction stage. To test the sensitivity to the frame interval, CMVs are also computed at 30 and 60 s intervals. The 30 s CMVs are accumulated over 1 min for comparison. As the PC uses monochromatic images, the CMVs were computed separately for the three RGB channels (abbreviated to Bu, Gn, and Rd in figures), the red to the blue ratio (RB, Slater et al., 2001), and the grayscale (Gy) images.

2.7 Optical flow algorithm for comparison

Let be the first image defining the pixel intensities at the time t. Therefore, the first and second images are related as

In the computation of OF, we assume that the intensities of the pixels that belong to the exact object change only due to the displacement (Horn and Schunck, 1981). This assumption allows for all changes detected in the x and y directions of the image to be associated with the motion only. The first-order approximation of the Taylor polynomial is

where and . However, to find the dense motion vector field, we used Farnebäck (2003) method from OpenCV which approximates the neighborhood of both frames by higher-order (quadratic) polynomials, . This algorithm works with an image pyramid with a lower resolution at each next level to track the features at multiple resolutions. Faster motions are captured with the increased levels of the pyramid. The algorithm provides a motion vector for each pixel of the input image. The motion field can be smooth or detailed depending on the given neighborhood size and the standard deviation used for the polynomial expansion.

3.1 Validation with constructed images

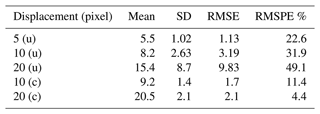

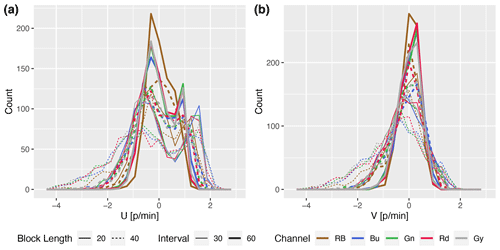

To show the validation of our implementation of the PC method, we used the images reconstructed from the Sage camera data, as described in Sect. 2.3. Finally, 2060 pairs of cloudy images translated by 5, 10, and 20 pixels, in both the X and Y directions, were used to estimate the displacement using the PC method described in the Sect. 2.2. The distributions of the estimated motion are shown in Fig. 3, and their comparison statistics are shown in Table 1. For a smaller displacement of five pixels, the algorithm estimates the values with 22.6 % root mean square percent error (RMSPE). With the increasing displacement of 10 and 20 pixels, the RMSPE increases to approximately 32 % and 49 %, respectively. This is consistent with the increasing spread in the estimates with increasing displacement as seen in Fig. 3. However, the algorithm tends to produce a peak near the zero value, except for very small displacements (D=5), and another peak at the given displacement. These results are consistent with Zhen et al. (2019). The proportion of vectors near the zero value increases with the displacement; however, in most cases, they are estimating the correct quadrant of the direction of the motion. However, these values need to be removed to get a good estimation of the speed of the motion. For demonstration purposes, we used the threshold to remove the near-zero values which significantly reduced the RMSE. However, in the real images, the method described in Sect. 2.4 is effective when the majority of the vectors are correct. For D=20, approximately a quarter of the vectors were near-zero vectors.

Figure 3Distribution of the motion estimated by the PC method in reconstructed images for displacement values of 5, 10, and 20 pixels.

3.2 Cloud motion and sensitivity results

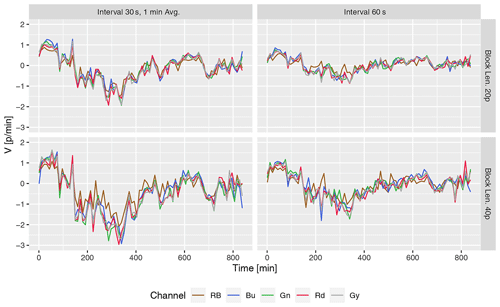

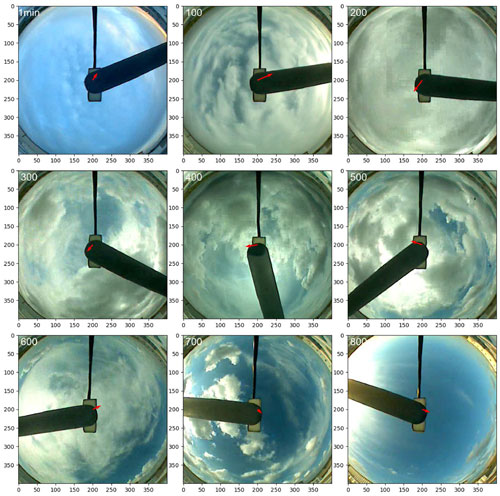

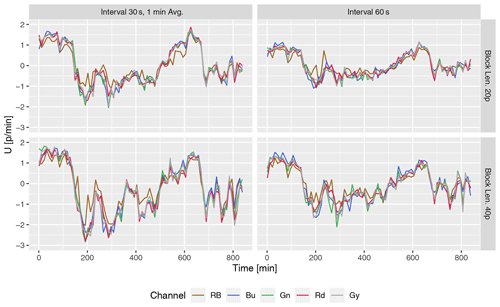

Changing sky conditions captured by TSI on 26 July 2016 during the 06:36 to 20:35 CDT are shown in Fig. 4 at 100 min intervals for reference. The sequence of images shows the movement of stratiform clouds from the southwest for over 2 h (∼150 min), with the occasional presence of low-level cumulus clouds. After about 3 h, the cumulus cloud development covered the sky (see the 200 min snapshot) moving predominantly from the east/northeast, as shown by the red arrow. Rapidly moving low-level clouds had less coherent motion at the block level than the altostratus. In addition, the low-level clouds intermittently traveled in patches with the altostratus aloft moving from the southwest. The time series of U and V components of CMV, shown in Figs. 5 and 6, respectively, are smoothed using cubic splines for easily discernible visualizations. The raw U component is shown in Fig. 12 for reference. The U and V plots suggest that the PC method successfully captured the direction of the motion and the reversal of the direction in all configurations. As described above, the mid-level clouds moving from the west and transitioning to low-level clouds moving from the east at around 150 min are seen in Fig. 5.

Figure 4Varying sky conditions on 26 July 2016, from 06:36 to 20:35 CDT (11:36–01:35 the next day in UTC) at 100 min intervals over Lamont, OK. A sky area of 400×400 pixels is cropped and used for CMV computation. The top of the image points to the north, and the red arrow shows the direction of motion for that frame.

Figure 5Smoothed time series of U component of domain-averaged CMV (pixel per min−1) on 26 July 2016 06:36 to 20:35 CDT (11:36–01:35 next day in UTC) over Lamont, OK. Variations with block size (20 and 40 pixels) and frame intervals (30 and 60 s) are shown for five channels.

The turbulent motion characterized the episodes of cumulus growth from 150 to 450 min, as evidenced by the fluctuations in the CMV during this phase in all channels, are however more pronounced in the RB channel. Between 500 and 600 min, cumulus and altostratus cleared, and high-level cirrus clouds became visible, flowing from the west. Additional late-afternoon cumulus movement (see the 700 min snapshot) and the clear sky with high-level cirrus or occasional westward-moving low-level cloud patches were present until sunset.

The frequency distribution of the CMV components (Fig. 7) also shows two peaks of a positive eastward component (U) distinguishing the rapidly moving mid-level and slow high-level clouds from the camera viewpoint. The larger blocks (40 pixels wide) and the shorter frame interval (30 s) have a wider range than the rest of the configuration, which shows their efficiency at capturing the low-level cumulus motion. It is important to note that 26 July 2016 was accompanied by a variety of cloud conditions and individual episodes of low, medium, and high-level cloud motion, each lasting for at least an hour. Thus, the short-term fluctuations of CMVs are mainly caused by the algorithm's instability. To assess the stability of CMVs for various configurations, we compare the autocorrelation of the CMV in the following subsection.

3.3 Stability of CMV

The stability of the CMV was tested by changing the block size, the frame interval, the combinations of red, green, and blue (RGB) channels from the total sky imager (TSI) and by changing the image resolution and frame rate in the Sage camera.

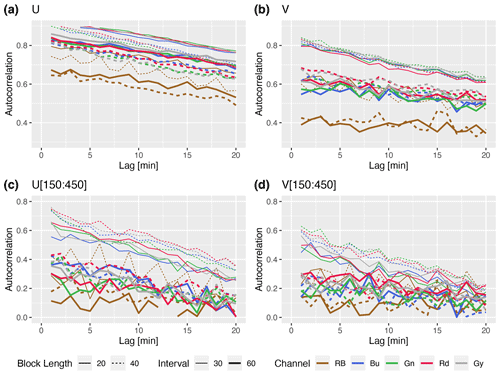

3.3.1 Block size, frame interval, and channel

The movement of clouds is usually smooth at the 1 min time interval. Except for the change in direction during the altostratus to cumulus transition, the movement of the clouds on 26 July 2016 should be more or less stable at the hourly intervals for most of the day (Figs. 5 and 6). However, the CMV fluctuates at a 1 min time interval, mainly due to the irregular response of the algorithm caused by the issues mentioned in Sect. 1. Therefore, the stability of motion vectors in time is evaluated for the above configurations by checking the autocorrelation of the CMV time series. The autocorrelation function (ACF) of U and V components for different configurations is shown in Fig. 8 (top panels). The linear ACF suggests a long decorrelation length for all the combinations. While RB has the lowest autocorrelations (more fluctuating vectors) for all configurations, the rest of the color channels have more or less equally stable vectors. The frame interval, followed by block length, noticeably affects the stability of the vectors.

The lower panels in Fig. 8 are the same as the top panels, but for the period between 150 and 450 min when the rapidly developing low-level clouds were present. The small cloud features were developing fast and had variable motion. Therefore, during this period, the autocorrelation is lower and the performance of the large block sizes and short frame intervals is noticeably better for both U and V components. The CMV from red and gray channels has slightly higher autocorrelation for the dominant motion (i.e., zonal component, U) during this period.

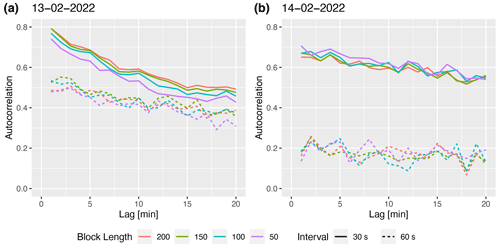

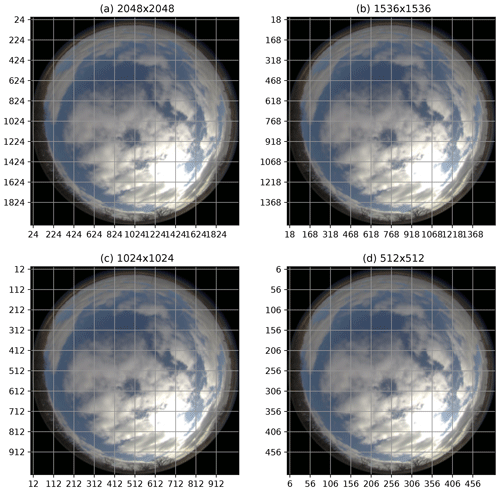

3.3.2 Image resolution and frame interval

Our analysis shows that CMVs are more stable for larger blocks and shorter frame intervals (see Sect. 3.3.1). Therefore, the stability of motion vectors is evaluated for the same blocks (i.e., the image divided into a 10×10 grid) and by reducing their resolution in steps to block lengths of 200, 150, 100, and 50 pixels, as shown in Fig. 9, with frame intervals of 30 and 60 s. The 13 February was dominated by mid-level stratus cloud motion, and 14 February had periods of low-level cumuliform development with fast movements and rapid evolution of cloud features dominating the scene. In addition, on both days, the cloud motion was mostly in east–west (zonal) direction, with the U component approximately 4 times larger than the V component. Therefore, ACF of only U components for four image resolutions and two frame intervals are shown in Fig. 10. ACF is significantly lower for longer frame intervals. For example, long intervals reduce the autocorrelation at lag 1 from 0.75 at 30 s intervals to 0.5 at 60 s intervals (Fig. 10a). This effect is even more prominent for the rapidly evolving cumuliform clouds (Fig. 10b), where the autocorrelation at the lag 1 drops from 0.65 to 0.2. On the other hand, a change in the resolution by a factor of 4 has minimal effect, and a change in lag 1 autocorrelation is within 0.05.

Figure 9The scheme for testing resolution sensitivity with the Sage camera image obtained on 21 April at 14:06:38 over Lamont, OK. A 10×10 block grid with four successively lower resolutions is used for CMV computation to compare the effect of resolution and time interval on the stability of CMV.

3.4 Comparison with wind data

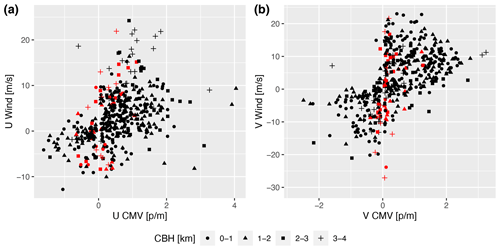

To compare the hourly mean CMV with winds of appropriate altitudes, we identified the hours with a stable CBH for at least 20 min from the ceilometer measurements from 14 October 2017 to 14 August 2019. The hourly winds are averaged for 1 km deep layers from the surface to 4 km altitude, and then the hourly mean CMVs are compared with the mean wind vectors in the vertical layer corresponding to the median CBH (Fig. 11). Note that the range of values for CMV and wind have an order of magnitude difference due to the different units. From the 551 d of data during this period, 876 daytime cloudy hours were identified, when simultaneous measurements from the WPR, the ceilometer, and CMV estimates were available. We only present CMVs for one setting; the 40-pixel block length, and the 30 s frame interval for the red channel. The rainy samples, identified using the method described in Sect. 2.5, mostly fall close to a zero value, as no mean motion is recorded. The sky-view camera data routinely suffer from rain, snow, and other debris on the lens that obstructs the view. The higher wind speeds near zero CMV can mainly occur due to the snow obstructing the view or smooth, flat cloud bases that are not successfully tracked. In addition, the quality of the wind profiles from the WPR is also adversely affected by rainfall (Muradyan and Coulter, 2020). Therefore, we removed instances with precipitating events from consideration in our comparison. The correlation coefficient (r) of the U component of the CMV and hourly wind averages improved from 0.38 for all the data to 0.42 after removing rainy samples, with a 95 % confidence interval. Likewise, for the V component, r increased from 0.56 for all data to 0.59, with a 95 % confidence interval. The slope of the linear fit for U components is between 2.4 and 3.4 for layers 0–3 km, and it is 5.7 for the 3–4 km layer, suggesting that the mid-level (i.e., 3–4 km) CMVs are noticeably underestimated from the camera viewpoint. The slopes of the V components are in the range of 3–4 for all layers. The WPR data above 4 km are sparse; hence no samples with matching criteria were available during the study period.

Figure 11Comparison of hourly mean U and V components of the CMV and mean wind in a 1 km deep layer where the stable cloud base height was observed during the hour. The rainy hours extracted using the method in Sect. 2.5 are shown with the red color.

The comparison of the CMV either from a ground-based camera or satellite sensors with that of atmospheric winds has several sources of uncertainty. The estimation and comparison of CBH and winds from the ceilometer and the wind profiler, respectively, show sampling uncertainty. In addition, the cloud displacement from the camera viewpoint differs with altitude, and deeper convective clouds do not always move parallel to the low-level winds. Therefore, this comparison may not be interpreted as a quantitative validation of the algorithm for wind retrievals; however, significant correlations of the magnitudes indicate that the estimates of the instantaneous CMVs from the camera images are stable over a long period. Although a perfect correlation does not exist between wind and CMV from ground camera images due to the above factors, more accurate identification of rain and snow-contaminated images would improve the comparison.

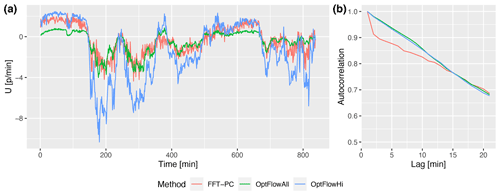

3.5 Comparison with the optical flow method

The estimations of the mean motion vector from PC and the OF algorithms for U components are shown in Fig. 12. The issue of near-zero values seen in Fig. 3 is also present in OF vectors, which is causing an underestimation of the mean magnitude compared to the PC (Fig. 12, OptFlowAll). Figure 13 shows a smoothed dense CMV field using the OF method. The near-zero values occur at the clear sky region or where the lighting and scene change drastically. Due to the dense motion field, these vectors are clustered in image space, and therefore they can not be removed with the neighborhood method of Westerweel and Scarano (2005). However, the regions with cloudiness are efficiently tracked by the OF method. After removing near-zero magnitudes using an arbitrary threshold of 1, the OF has higher magnitudes as compared to the PC method and better captures the variability than the PC method. The dense field of motion vectors can be leveraged for more adaptable statistical corrections than the arbitrary threshold used in this study for presentation purposes. The final CMV magnitudes could be highly dependent on the post-processing of the results for both PC and OF methods. Although the mean magnitudes are sensitive to post-processing corrections, the change in direction and magnitude of the motion vectors from both methods are comparable. The correlation between the OF and PC methods increases from 0.84 to 0.9 after removing the near-zero values. The autocorrelation functions in Fig. 12b show that the minute-by-minute fluctuations of the CMV are more stable for OF than for PC, due to the dense vector field of OF.

Figure 12Comparison of mean cloud motion estimated using PC and OF methods on 26 July 2016 over Lamont, OK. The mean OF vectors computed with all valid data and after removing low values are shown with green (OptFlowAll) and blue (OptFlowHi) lines, respectively. (a) Time series of domain average U component taken at the central 400×400 pixel region at a 1 min interval. No smoothing is applied to this plot. (b) Lag autocorrelation function shows the stability of the vectors.

Prior studies have documented the effectiveness of the blockwise PC and OF method for detecting cloud motion in IR and visible spectrum images (Leese et al., 1971; Chow et al., 2015; Dissawa et al., 2017; Zhen et al., 2019). We tested the sensitivity of the PC method to changes in block length, frame interval, and image resolution, as well as five combinations of the visible channels from a sky-viewing camera. These results are also applicable to satellite and radar-based motion estimation. Additionally, we compared the derived mean CMV from the PC method with the observed mean wind field from a colocated remote sensing instrument and the OF method. We also presented a method to detect raindrops on the rotating dome. However, the automated removal of contaminated images due to rain, snow, and other obscurities needs a more complex approach using advanced machine learning algorithms and labeled data.

The performance of different visible channels is comparable except for the red-to-blue channel ratio (RB). Although the RB is effective in segmenting clouds from the blue sky background (Dev et al., 2016), it smooths the cloud texture during overcast conditions, reducing the performance of the PC method. The red and grayscale performed slightly better than the blue and green channels. We find that larger block sizes provide a more stable estimation of cloud motion, and the stability benefits largely from the shortened interval between frames even for coarse-resolution camera data. Considering that the temporal changes in cloud patterns reduce the quality of the motion vectors, a shorter frame interval helps in maintaining the structure from one image to the next. However, a larger block size allows for a larger sample for stable correlation matching, achieving more stable estimates of the motion during disorganized cloud conditions (Fig. 8c and d). Although averaging in time over the short frame interval is a better way to achieve reliable estimates, a higher sampling rate may not always be feasible. In these situations, the large block size that can capture homogeneous motion is recommended for block-based PC implementation. We also show that increasing the spatial resolution, i.e., increasing the number of pixels without decreasing the number of blocks, marginally affects the quality of the motion vectors. At the same time, reducing the frame interval from 60 to 30 s outperforms quadrupling the resolution. Comparable results were obtained by Wang et al. (2018) for cloud segmentation using a ground-based camera.

Our analysis shows that doubling the frame rate outperforms quadrupling the resolution for PC. This non-intuitive result is very interesting in the context of edge computing. Because a shorter frame interval between the camera images effectively improves the quality of the CMVs, the application must have deterministic and low-latency access to sky images. Edge computing solves this problem efficiently by carefully placing and pairing computation with sensor data sources. Without incurring large data transfers and delays due to network outages, in an edge-computing platform like Sage, image data can be acquired and processed right next to the camera in the field. The high-level motion estimation result which is much smaller and compresses efficiently can be communicated and archived for further studies.

The validation with constructed data and the comparison of PC and OF methods suggests that the quality of the motion vectors is sensitive to the error corrections and removal of the near-zero magnitudes in the post-processing. The dense OF field can be corrected using spatial clustering methods to produce valuable results. It is also possible to use the inputs from the cloud cover estimation plugin to correct the raw OF field. The issue of multi-layer clouds mentioned in Sect. 1 can be addressed using OF dense motion field using adaptive clustering as post-processing as opposed to adaptive blocks used in Peng et al. (2016). Further sensitivity and comparative studies with OF algorithm are needed to test this technique.

The distortion of the sky images near the horizon, due to the wide field of view (FOV) of the fisheye lens, affects the accuracy of the mean cloud motion estimation. Therefore, the mean is estimated using the center portion of the images. The fisheye de-warping method can correct the regions near the horizon, where features are not heavily compressed.

Wind data retrieval from cloud motion vectors is an active area of research in satellite meteorology. Nevertheless, obtaining accurate wind retrievals from the ground-based optical camera images requires estimates of cloud-base heights, which is challenging without the lidar-based methods. Moreover, despite assuming ideal CMV and cloud-base height estimates, the resulting winds may not align well with the observed cloud motion due to the substantial vertical extent of cumulus clouds and the influence of vertical wind shear on their motion. The growth and decay of clouds can also result in additional cloud motion components unrelated to the wind. Thermal infrared cameras can potentially help determine cloud-base heights and also cloud motion vectors for estimating winds in the future.

Current machine learning algorithms for automatic cloud identification underperform in the presence of thin clouds (Park et al., 2021). To this end, we are generating a dataset of thin clouds identified by scanning mini micro-pulse lidar (MiniMPL) and a colocated sky-viewing camera using an edge-computing paradigm. One of the objectives is to use the camera images to predict cloud boundaries and cloud motion and utilize the knowledge to adapt MiniMPL scan strategies in real time for optimal sampling in various environmental conditions. Thus reducing the number of clear sky scans and targeting required clouds for the increased density of scans. Cloud locations predicted from CMV estimates can also be used in forecasting solar irradiance in near real time (Jiang et al., 2020; Radovan et al., 2021). The results of this study are helping to optimize image sampling and cloud motion estimation with edge-enabled camera systems.

The Sage plugin implementation on the waggle platform is made available from Sage UI (https://portal.sagecontinuum.org/, last access: 25 February 2023) and the full source code is available on GitHub at https://doi.org/10.5281/zenodo.7676068 (Shahkarami et al., 2023).

The data were obtained from the Atmospheric Radiation Measurement (ARM) user facility, a US Department of Energy (DOE) Office of Science user facility managed by the Biological and Environmental Research Program. The ARM SGP data can be obtained from the following DOI: https://doi.org/10.5439/1025309 (total sky imager; Flynn and Morris, 2023), https://doi.org/10.5439/1181954 (ceilometer; Morris et al., 1996), and https://doi.org/10.5439/1025135 (radar wind profiler; Muradyan and Coulter, 1998). Sage camera data were collected at the Argonne Testbed for Multi-scale Observational Studies (ATMOS). The ATMOS data used in this paper can be obtained by sending requests to the authors.

All authors contributed to the analysis plan and editing of the paper. BAR designed the methodology and conducted sensitivity tests, data analysis, and plotting. SAS, SP, and DD assisted in the algorithm selection and coding. SAS, YK, and JS contributed to the development of the Sage plugin. RS, SP, NC, SS, and WG are responsible for the deployment, testing, and scheduling of the plugin on the waggle nodes for real-time use. BAR, PM, and RCJ led the composition of the paper. PM contributed to the wind data analysis. SMC, NJF, and PB conceptualized the work and provided project oversight and direction.

The contact author has declared that none of the authors has any competing interests.

Publisher’s note: Copernicus Publications remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

The Sage project is funded through the US National Science Foundation’s Mid-Scale Research Infrastructure program, NSF-OAC-1935984. The US Department of Energy (DoE) Atmospheric Radiation Measurement (ARM) user facility supported the work under the field campaign AFC 07056 “ARMing the Edge: Demonstration of Edge Computing”. Argonne National Laboratory’s contribution is based upon work supported by Laboratory Directed Research and Development (LDRD) funding from Argonne National Laboratory, provided by the Director, Office of Science, of the US Department of Energy under contract no. DE-AC02-06CH11357. We gratefully acknowledge the computing resources provided on Bebop, a high-performance computing cluster operated by the Laboratory Computing Resource Center at Argonne National Laboratory. We thank the ATMOS observatory staff for their assistance. The algorithm, data analysis, and graphics were programmed in Python (https://www.python.org/, last access: 25 February 2023) and the R programming language (https://www.r-project.org/, last access: 25 February 2023). We thank Robert Hoeller and an anonymous reviewer for their thoughtful comments and efforts that significantly improved the paper.

This research has been supported by the National Science Foundation (grant no. NSF-OAC-1935984) and the Argonne National Laboratory (grant no. DE-AC02-06CH11357). The US Department of Energy (DoE) Atmospheric Radiation Measurement (ARM) user facility supported the work under the field campaign “ARMing the Edge: Demonstration of Edge Computing” (grant no. AFC 07056).

This paper was edited by Ad Stoffelen and reviewed by Robert Hoeller and one anonymous referee.

Apke, J. M., Noh, Y.-J., and Bedka, K.: Comparison of Optical Flow Derivation Techniques for Retrieving Tropospheric Winds from Satellite Image Sequences, J. Atmos. Ocean. Tech., 39, 2005–2021, https://doi.org/10.1175/JTECH-D-22-0057.1, 2022. a

Beckman, P., Sankaran, R., Catlett, C., Ferrier, N., Jacob, R., and Papka, M.: Waggle: An open sensor platform for edge computing, in: 2016 IEEE SENSORS, Orlando, FL, USA, 30 October–3 November 2016, IEEE, 1–3, https://doi.org/10.1109/ICSENS.2016.7808975, 2016. a

Chalasinska-Macukow, K., Turon, F., Yzuel, M., and Campos, J.: Contrast performance of pure phase correlation, J. Optics, 24, 71, https://doi.org/10.1088/0150-536X/24/2/004, 1993. a

Chow, C. W., Belongie, S., and Kleissl, J.: Cloud motion and stability estimation for intra-hour solar forecasting, Sol. Energy, 115, 645–655, 2015. a, b

Dematties, D., Raut, B. A., Park, S., Jackson, R. C., Shahkarami, S., Kim, Y., Sankarana, R., Beckmana, P., Collis, S. M., and Ferrier, N.: Let's Unleash the Network Judgement: A Self-supervised Approach for Cloud Image Analysis, Artificial Intelligence for the Earth Systems, in press, 2023. a

Denman, S., Fookes, C., and Sridharan, S.: Improved simultaneous computation of motion detection and optical flow for object tracking, in: 2009 Digital Image Computing: Techniques and Applications, Melbourne, VIC, Australia, 1–3 December 2009, IEEE, 175–182, https://doi.org/10.1109/DICTA.2009.35, 2009. a

Dev, S., Lee, Y. H., and Winkler, S.: Color-based segmentation of sky/cloud images from ground-based cameras, IEEE J. Sel. Top. Appl., 10, 231–242, 2016. a

Dissawa, D., Godaliyadda, G., Ekanayake, M., Ekanayake, J. B., and Agalgaonkar, A. P.: Cross-correlation based cloud motion estimation for short-term solar irradiation predictions, in: 2017 IEEE International Conference on Industrial and Information Systems (ICIIS), Peradeniya, Sri Lanka, 15–16 December 2017, IEEE, 1–6, https://doi.org/10.1109/ICIINFS.2017.8300338, 2017. a, b

Dissawa, L. H., Godaliyadda, R. I., Ekanayake, P. B., Agalgaonkar, A. P., Robinson, D., Ekanayake, J. B., and Perera, S.: Sky Image-Based Localized, Short-Term Solar Irradiance Forecasting for Multiple PV Sites via Cloud Motion Tracking, Int. J. Photoenergy, 2021, 9973010, https://doi.org/10.1155/2021/9973010, 2021. a

El Jaouhari, Z., Zaz, Y., and Masmoudi, L.: Cloud tracking from whole-sky ground-based images, in: 2015 3rd International Renewable and Sustainable Energy Conference (IRSEC), Marrakech, Morocco, 10–13 December 2015, IEEE, 1–5, https://doi.org/10.1109/IRSEC.2015.7455105, 2015. a

Farnebäck, G.: (2003). Two-Frame Motion Estimation Based on Polynomial Expansion, in: Image Analysis. SCIA 2003. Lecture Notes in Computer Science, edited by: Bigun, J. and Gustavsson, T., Springer, Berlin, Heidelberg, 2749, 363–370, https://doi.org/10.1007/3-540-45103-X_50, 2003. a

Flynn, D. and Morris, V.: Total Sky Imager (TSISKYIMAGE), Atmospheric Radiation Measurement (ARM) user facility [data set], https://doi.org/10.5439/1025309, 2023. a

Gacal, G. F. B., Antioquia, C., and Lagrosas, N.: Trends of night-time hourly cloud-cover values over Manila Observatory: ground-based remote-sensing observations using a digital camera for 13 months, Int. J. Remote. Sens., 39, 7628–7642, 2018. a

Heinle, A., Macke, A., and Srivastav, A.: Automatic cloud classification of whole sky images, Atmos. Meas. Tech., 3, 557–567, https://doi.org/10.5194/amt-3-557-2010, 2010. a

Horn, B. K. and Schunck, B. G.: Determining optical flow, Artif. Intell., 17, 185–203, 1981. a, b

Huang, H., Yoo, S., Yu, D., Huang, D., and Qin, H.: Cloud motion detection for short term solar power prediction, in: 2013 IEEE International Conference on Smart Grid Communications (SmartGridComm), Vancouver, BC, Canada, 21–24 October 2013, IEEE, Vancouver, BC, Canada, 21–24 October 2013, https://doi.org/10.1109/SmartGridComm.2013.6688040, 2011. a

Jiang, J., Lv, Q., and Gao, X.: The ultra-short-term forecasting of global horizonal irradiance based on total sky images, Remote Sensing, 12, 3671, https://doi.org/10.3390/rs12213671, 2020. a, b

Kazantzidis, A., Tzoumanikas, P., Bais, A. F., Fotopoulos, S., and Economou, G.: Cloud detection and classification with the use of whole-sky ground-based images, Atmos. Res., 113, 80–88, 2012. a, b

Leese, J. A., Novak, C. S., and Clark, B. B.: An automated technique for obtaining cloud motion from geosynchronous satellite data using cross correlation, J. Appl. Meteorol., 10, 118–132, 1971. a, b, c, d, e

Mondragón, R., Alonso-Montesinos, J., Riveros-Rosas, D., and Bonifaz, R.: Determination of cloud motion applying the Lucas-Kanade method to sky cam imagery, Remote Sensing, 12, 2643, https://doi.org/10.3390/rs12162643, 2020. a

Morris, V.: Total Sky Imager (TSI): fractional sky coverage, ARM [data set], https://doi.org/10.5439/1025308, 2000. a

Morris, V.: Total Sky Imager (TSI) Handbook, Atmospheric Radiation Measurement Rep. DOE/SC-ARM/TR-017, OSTI.GOV, https://doi.org/10.2172/1020716, 2005. a

Morris, V., Zhang, D., and Ermold, B.: ceil, ARM [data set], https://doi.org/10.5439/1181954, 1996. a, b

Morris, V. R.: Ceilometer Instrument Handbook, Technical Report, DOE/SC-ARM-TR-020, OSTI.GOV, https://doi.org/10.2172/1036530, 2016. a

Muradyan, P. and Coulter, R.: 915-MHz Radar Wind Profiler/RASS (RWP915): wind consensus data, ARM [data set], https://doi.org/10.5439/1025135, 1998. a, b

Muradyan, P. and Coulter, R.: Radar Wind Profiler (RWP) and Radio Acoustic Sounding System (RASS) Instrument Handbook, Technical Report, DOE/SC-ARM/TR-044, OSTI.GOV, https://doi.org/10.2172/1020560, 2020. a, b

Park, S., Kim, Y., Ferrier, N. J., Collis, S. M., Sankaran, R., and Beckman, P. H.: Prediction of Solar Irradiance and Photovoltaic Solar Energy Product Based on Cloud Coverage Estimation Using Machine Learning Methods, Atmosphere, 12, 395, https://doi.org/10.3390/atmos12030395, 2021. a, b

Peng, Z., Yu, D., Huang, D., Heiser, J., and Kalb, P.: A hybrid approach to estimate the complex motions of clouds in sky images, Sol, Energy, 138, 10–25, 2016. a, b, c

Picel, M., Collis, S., Raut, B., Carani, S., Jackson, R., van Lier-Walqui, M., and Fridlind, A.: 3.4 TINT:TINT Is Not TITAN. Easy-to-Use Tracking Code Based on TITAN–Details and Uses, in: 8th Symposium on Advances in Modeling and Analysis Using Python, Vol. 98 of AMS Annual Meeting, Austin, TX, 7–11 January 2018, American Meteorological Society, https://ams.confex.com/ams/98Annual/webprogram/Paper335460.html, last access: 8 January 2018. a

Radovan, A., Šunde, V., Kučak, D., and Ban, Ž.: Solar Irradiance Forecast Based on Cloud Movement Prediction, Energies, 14, 3775, https://doi.org/10.3390/en14133775, 2021. a, b

Raut, B. A., Karekar, R. N., and Puranik, D. M.: Wavelet-based technique to extract convective clouds from infrared satellite images, IEEE Geosci. Remote S., 5, 328–330, 2008. a

Raut, B. A., Jackson, R., Picel, M., Collis, S. M., Bergemann, M., and Jakob, C.: An Adaptive Tracking Algorithm for Convection in Simulated and Remote Sensing Data, J. Appl. Meteorol. Clim., 60, 513–526, https://doi.org/10.1175/jamc-d-20-0119.1, 2021. a, b

Sage UI: https://portal.sagecontinuum.org/, last access: 25 February 2023.

Shahkarami, S., dariodematties, and Raut, B.: RBhupi/plugin-cmv-fftpc: check previouse release for fftpc, Version v1.23.02, Zenodo [code], https://doi.org/10.5281/zenodo.7676068, 2023. a

Slater, D., Long, C., and Tooman, T.: Total sky imager/whole sky imager cloud fraction comparison, in: Proc. 11th ARM Science Team Meeting, Atlanta, Georgia, 19–23 March 2001, 1–11, 2001. a, b

Steiner, M., Houze Jr., R. A., and Yuter, S. E.: Climatological characterization of three-dimensional storm structure from operational radar and rain gauge data, J. Appl. Meteorol., 34, 1978–2007, 1995. a

Tong, X., Ye, Z., Xu, Y., Gao, S., Xie, H., Du, Q., Liu, S., Xu, X., Liu, S., Luan, K., and Stilla, U.: Image registration with Fourier-based image correlation: A comprehensive review of developments and applications, IEEE J. Sel. Top. Appl., 12, 4062–4081, 2019. a

Turon, F., Chalasinska-Macukow, K., Campos, J., and Yzuel, M. J.: Pure phase correlation applied to multi-object colour scenes, J. Optics-UK, 28, 112, https://doi.org/10.1088/0150-536X/28/3/004, 1997. a

Voronych, O., Höller, R., Longhi Beck, G., and Traunmüller, W.: Solar PV nowcasting based on skycamera observations, Adv. Sci. Res., 16, 7–10, 2019. a, b

Wang, Y., Wang, C., Shi, C., and Xiao, B.: A selection criterion for the optimal resolution of ground-based remote sensing cloud images for cloud classification, IEEE T. Geosci. Remote, 57, 1358–1367, 2018. a

Westerweel, J. and Scarano, F.: Universal outlier detection for PIV data, Exp. Fluids, 39, 1096–1100, 2005. a, b, c

Wood-Bradley, P., Zapata, J., and Pye, J.: Cloud tracking with optical flow for short-term solar forecasting, in: Proceedings of the 50th Conference of the Australian Solar Energy Society, Melbourne, Citeseer, 6–7 December 2012. a

Zhen, Z., Xuan, Z., Wang, F., Sun, R., Duić, N., and Jin, T.: Image phase shift invariance based multi-transform-fusion method for cloud motion displacement calculation using sky images, Energ. Convers. Manage., 197, 111853, https://doi.org/10.1016/j.enconman.2019.111853, 2019. a, b, c