the Creative Commons Attribution 4.0 License.

Introducing the Video In Situ Snowfall Sensor (VISSS)

Maximilian Maahn

Dmitri Moisseev

Isabelle Steinke

Nina Maherndl

Matthew D. Shupe

The open-source Video In Situ Snowfall Sensor (VISSS) is introduced as a novel instrument for the characterization of particle shape and size in snowfall. The VISSS consists of two cameras with LED backlights and telecentric lenses that allow accurate sizing and combine a large observation volume with relatively high pixel resolution and a design that limits wind disturbance. VISSS data products include various particle properties such as maximum extent, cross-sectional area, perimeter, complexity, and sedimentation velocity. Initial analysis shows that the VISSS provides robust statistics based on up to 10 000 unique particle observations per minute. Comparison of the VISSS with the collocated PIP (Precipitation Imaging Package) and Parsivel instruments at Hyytiälä, Finland, shows excellent agreement with the Parsivel but reveals some differences for the PIP that are likely related to PIP data processing and limitations of the PIP with respect to observing smaller particles. The open-source nature of the VISSS hardware plans, data acquisition software, and data processing libraries invites the community to contribute to the development of the instrument, which has many potential applications in atmospheric science and beyond.

It is well known that “every snowflake is unique”. The shape of a snow crystal is very sensitive to the processes that were active during its formation and growth. Vapor depositional growth leads to a myriad of crystal shapes depending on temperature, humidity, and their turbulent fluctuations. Aggregation combines individual crystals into complex snowflakes. Riming describes the freezing of small droplets onto ice crystals, causing them to rapidly gain mass and form a more rounded shape. In other words, the shape of snow particles is a fingerprint of the dominant processes during the life cycle of snowfall.

Better observations of the fingerprints of snowfall formation processes are needed to advance our understanding of ice and mixed-phase clouds and precipitation formation processes (Morrison et al., 2020). Given the importance of snowfall formation processes for global precipitation (Mülmenstädt et al., 2015; Field and Heymsfield, 2015), the lack of process understanding leads to gaps in the representation of these processes in numerical models. In a warming climate, precipitation amounts and extreme events, including heavy snowfall, are expected to increase (Quante et al., 2021), but the exact magnitudes are associated with large uncertainties (Lopez-Cantu et al., 2020).

Remote sensing observations of snowfall are indirect, which limits their ability to identify snow particle shape by design. Ground-based in situ observations of ice and snow particles can identify the fingerprints of the snowfall formation processes and provide detailed information on particle size, shape, and sedimentation velocity. Using assumptions about sedimentation velocity or an aggregation and riming model as a reference, the particle mass–size and/or mass–density relationship can also be inferred from in situ observations (Tiira et al., 2016; von Lerber et al., 2017; Pettersen et al., 2020; Tokay et al., 2021; Leinonen et al., 2021; Vázquez-Martín et al., 2021a). Various attempts have been made to classify particle types and identify active snowfall formation processes using various machine learning techniques (Nurzyńska et al., 2013; Grazioli et al., 2014; Praz et al., 2017; Hicks and Notaroš, 2019; Leinonen and Berne, 2020; Del Guasta, 2022; Maherndl et al., 2023b); these classifications are needed to support quantification of snowfall formation processes (Grazioli et al., 2017; Moisseev et al., 2017; Dunnavan et al., 2019; Pasquier et al., 2023). In situ observations have also been used to characterize particle size distributions (Kulie et al., 2021; Fitch and Garrett, 2022), to investigate sedimentation velocity and turbulence of hydrometeors (Garrett et al., 2012; Garrett and Yuter, 2014; Li et al., 2021; Vázquez-Martín et al., 2021b; Takami et al., 2022), and for model evaluation (Vignon et al., 2019). In combination with ground-based remote sensing, in situ snowfall data have been used to validate or better understand remote sensing observations (Gergely and Garrett, 2016; Li et al., 2018; Matrosov et al., 2020; Luke et al., 2021), to develop joint radar in situ retrievals (Cooper et al., 2017, 2022), and to train remote sensing retrievals (Huang et al., 2015; Vogl et al., 2022).

Different design concepts have been used for in situ snowfall instruments. Line scan cameras are commonly used by optical disdrometers such as the OTT Parsivel (Löffler-Mang and Joss, 2000), and their relatively large observation volume reduces the statistical uncertainty for estimating the particle size distribution (PSD). However, additional assumptions are required to size irregularly shaped particles such as snow particles correctly due to the one-dimensional measurement concept (Battaglia et al., 2010). This limitation can be overcome when adding a second line camera as for the Two-Dimensional Video Disdrometer (2DVD; Schönhuber et al., 2007), but particle shape estimates can still be biased by horizontal winds (Huang et al., 2015; Helms et al., 2022). The 2DVD's pixel resolution of approx. 190 µm per pixel (px) and the lack of gray-scale information prohibit resolving fine-scale details of snow particles.

A group of instruments obtains particle images with microscopic resolution at the expense of the measurement volume size. For example, the MASC (Multi-Angle Snowfall Camera; Garrett et al., 2012) takes three images with 30 µm px−1 pixel resolution of the same particle from different angles. This allows for resolving very fine particle structures, but during a snowfall event Gergely and Garrett (2016) observed only 10²–10⁴ particles, which is not sufficient to reliably estimate a PSD on the minute temporal scales needed to capture changes in precipitation properties. Del Guasta (2022) has developed a flatbed scanner (ICE-CAMERA) that has a pixel resolution of 7 µm px−1 and can provide mass estimates by melting the particles, but this approach only works at low snowfall rates. The images of the D-ICI (Dual Ice Crystal Imager; Kuhn and Vázquez-Martín, 2020) even have a pixel resolution of 4 µm px−1 and show particles from two perspectives, but similarly to the MASC, the small sampling volume does not allow for the measurement of PSDs with a sufficiently high accuracy.

The SVI (Snowfall Video Imager; Newman et al., 2009) and its successor the PIP (Precipitation Imaging Package; Pettersen et al., 2020) use a camera pointed to a light source to image snow particles in free fall. The open design limits wind field perturbations, and the large measurement volume (4.8 cm × 6.4 cm × 5.5 cm for a 1 mm snow particle) minimizes statistical errors in deriving the PSD. However, the pixel resolution of 100 µm px−1 is not sufficient to study fine details. Further, the open design requires that the depth of the observation volume is not constrained by the instrument itself. As a consequence, particle blur needs to be used to determine whether a particle is in the observation volume or not, which is potentially more error-prone than a closed instrument design. A similar design was used by Testik and Rahman (2016) to study the sphericity oscillations of raindrops. Kennedy et al. (2022) developed the low-cost OSCRE (Open Snowflake Camera for Research and Education) system that uses a strobe light to illuminate particles from the side, allowing for the observation of particle type of blowing and precipitating snow, but the observation volume is not fully constrained.

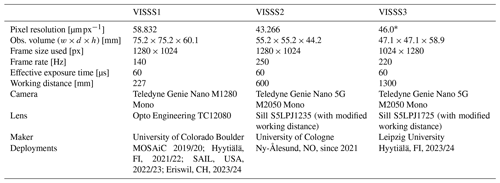

Figure 1. (a) Concept drawing of the VISSS (not to scale with enlarged observation volume). See Sect. 3.2 and 3.3 for a discussion of the joint coordinate system and the transformation of the follower's coordinate system, respectively. (b) First-generation VISSS deployed at Gothic, Colorado, during the SAIL campaign (photo by Benn Schmatz); (c) randomly selected particles observed during MOSAiC on 15 November 2019 between 06:53 and 11:13 UTC.

This study presents the Video In Situ Snowfall Sensor (VISSS). The goal was to develop a sensor with an open instrument design without sacrificing the quality of measurement volume definition or resolution. It uses the same general principle as the PIP (Fig. 1): gray-scale images of particles in free fall illuminated by a background light. Unlike the PIP, this setup is duplicated with overlapping measurement volumes so that particles are observed simultaneously from two perspectives at a 90° angle. This robustly constrains the observation volume without the need for further assumptions. In addition, having two perspectives of the same particle increases the likelihood that the observed maximum dimension (Dmax) and aspect ratio are representative of the particle. While the VISSS does not reach the microscopic resolution of the D-ICI or ICE-CAMERA, its pixel resolution of 43 to 59 µm px−1 is significantly better than that of the PIP, and the use of telecentric lenses eliminates sizing errors caused by the variable distance of snow particles to the cameras.

The VISSS was originally developed for the MOSAiC (Multidisciplinary drifting Observatory for the Study of Arctic Climate) experiment (Shupe et al., 2022) and deployed at MetCity and, after the sea ice became too unstable in April 2020, on the P deck of the research vessel Polarstern. After MOSAiC, the original VISSS was deployed at Hyytiälä, Finland (Petäjä et al., 2016), in 2021/22; at Gothic, Colorado, as part of the SAIL campaign in 2022/23 (Surface Atmosphere Integrated Field Laboratory; Feldman et al., 2023); and at Eriswil, Switzerland, for the PolarCAP (Polarimetric Radar Signatures of Ice Formation Pathways from Controlled Aerosol Perturbations) campaign in 2023/24. During a test setup in Leipzig, Germany, the VISSS was used to evaluate a radar-based riming retrieval (Vogl et al., 2022). An improved second generation of the VISSS was installed at the French–German Arctic research base AWIPEV (the Alfred Wegener Institute, Helmholtz Centre for Polar and Marine Research – AWI – and the French Polar Institute Paul-Émile Victor – PEV) in Ny-Ålesund, Svalbard (Nomokonova et al., 2019), in 2021. A further improved third-generation VISSS with 1300 mm working distance was deployed in Hyytiälä at the end of 2023. The VISSS hardware plans and software libraries have been released under an open-source license (Maahn et al., 2023b; Maahn, 2023a, b; Maahn and Wolter, 2024) so that the community can replicate and further develop the VISSS. The VISSS hardware design and data processing are described in Sects. 2 and 3, respectively. Example cases including a comparison with the PIP are given in Sect. 4, and concluding remarks are given in Sect. 5.

The VISSS consists of two camera systems oriented at a 90° angle to the same measurement volume (Fig. 1). Both cameras work using the complementary metal oxide semiconductor (CMOS) global-shutter principle and use a resolution of 1280 × 1024 gray-scale pixels and a frame rate of 140 Hz (220 to 250 Hz since the second generation). One camera acts as the leader, sending trigger signals to both the follower camera and the two LED backlights that illuminate the scenes from behind with a 350 000 lux flash. Green backlights (530 nm) were chosen because the camera and lenses are optimized for visible light. The leader–follower setup results in a slight delay in the start of exposure between the two cameras. To compensate for this, the background LEDs are turned on for a duration of 60 µs only when the exposure of both cameras is active. Thus, the 60 µs flash of the backlights determines the effective exposure time of the camera as long as there is no bright sunlight, which is a rare condition during precipitation.

The two camera–lens–backlight combinations are at a 90° angle so that particles are observed from two perspectives, reducing sizing errors. Leinonen et al. (2021) found that using only a single perspective for sizing snow particles can lead to a normalized root mean square error of 6 % for Dmax, and Wood et al. (2013) estimated the resulting bias in simulated radar reflectivity to be 3.2 dB. For the VISSS, the accuracy of the measurements can be further improved by taking advantage of the fact that the VISSS typically observes 8 to 11 frames of each particle (assuming a sedimentation velocity of 1 m s−1 and a frame rate of 140 to 250 Hz), and additional perspectives can be obtained from the natural tumbling of the particle.
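The 8 to 11 frames quoted above can be checked with a short calculation. The following sketch assumes that a particle crosses the full image height of 1024 px at a constant 1 m s−1 and uses the pixel resolutions and frame rates given for VISSS1 and VISSS2; the function name is illustrative and not part of the VISSS libraries.

```python
# Rough estimate of how many frames capture one particle while it crosses
# the image. Sensor height, pixel resolutions, and frame rates are taken from
# the text; the constant 1 m/s fall speed is the same assumption the text makes.

IMAGE_HEIGHT_PX = 1024  # vertical sensor resolution


def frames_per_particle(pixel_res_um, frame_rate_hz, fall_speed_m_s=1.0):
    """Number of frames recorded while a particle falls through the image."""
    height_m = IMAGE_HEIGHT_PX * pixel_res_um * 1e-6
    transit_time_s = height_m / fall_speed_m_s
    return transit_time_s * frame_rate_hz


visss1 = frames_per_particle(58.832, 140)  # first generation
visss2 = frames_per_particle(43.266, 250)  # second generation
print(f"VISSS1: {visss1:.1f} frames, VISSS2: {visss2:.1f} frames")
# prints "VISSS1: 8.4 frames, VISSS2: 11.1 frames"
```

Slower particles are observed in proportionally more frames, so the quoted range is a lower bound for particles falling at less than 1 m s−1.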

Telecentric lenses have a constant magnification within the usable depth of field, eliminating sizing errors. Consequently, the lens aperture must be as large as the observation area, making the lens bulky, heavy, and expensive. For the first VISSS (VISSS1), a lens with a magnification of 0.08 was chosen, resulting in a pixel resolution of 58.832 µm px−1 (Table 1). The working distance, i.e., the distance from the edge of the lens to the center of the observation volume, is 227 mm. This partly undermines the goal of having an instrument with an observation volume that is not obstructed by turbulence induced by nearby structures but was caused by budget limitations. It also does not allow for sufficiently large roofs over the camera windows to protect against snow accumulation in all weather conditions. This problem was partially solved by the increased budget (EUR 22 000) for the second-generation VISSS2, which used a 600 mm working distance lens as well as a camera with an increased frame rate of 250 Hz and a pixel resolution of 43.266 µm px−1. However, the optical quality of the lens proved to be borderline for the applications, resulting in an estimated optical resolution of approximately 50 µm and slightly blurred particle images. Consequently, the lens was changed again for the third-generation VISSS3, which has a working distance of 1300 mm. This was motivated by the result of Newman et al. (2009) showing that the airflow is undisturbed at a distance of 1 m from the instrument. Image quality is potentially also impacted by motion blur, and the exposure time of 60 µs was selected to limit motion blur of particles falling at 1 m s−1 to 1.02 and 1.44 px for VISSS1 and VISSS2, respectively. Particle blur can also occur when particles are not exactly in the focus of the lenses. The maximum circle of confusion is 1.3 px at the edges of the observation volume.

The lens–camera combinations and backlights are housed in waterproof enclosures that are heated to −5 and 10 °C, respectively. The low temperature in the camera housing prevents melting and refreezing of particles on the camera window.

The cameras of VISSS1 and VISSS2/VISSS3 are connected to the data acquisition systems via separate 1 and 5 Gbit Ethernet connections, respectively. Due to the increased frame rate, two separate systems are required to record data in real time for VISSS2/VISSS3.

The cameras transmit every captured image to the data acquisition systems, which are standard desktop computers running Linux. Based on simple brightness changes, the computers save only moving images and discard all other data (this was not implemented for MOSAiC). The raw data of the VISSS consist of the video files (.mov or .mkv video files with H.264 compression), the first recorded frame as an image (.jpg format) for quick evaluation of camera blocking, and a .csv file with the timestamps of the camera (capture_time) as well as the computer (record_time) and other meta-information for each frame. The cameras run continuously, and new files are created every 10 min (5 min for MOSAiC). In addition, a daily status .csv file is maintained that contains information about software start and stop times and when new files were created. Both cameras record completely separately, which requires an accurate synchronization of the camera and computer clocks for matching the observations of a single particle.

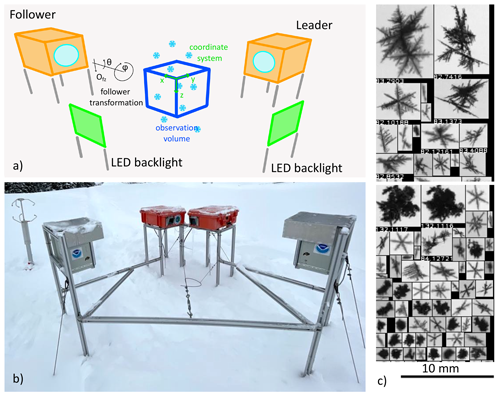

Figure 2. Flowchart of VISSS data processing. Daily products have rounded corners; 10 min resolution products have square corners.

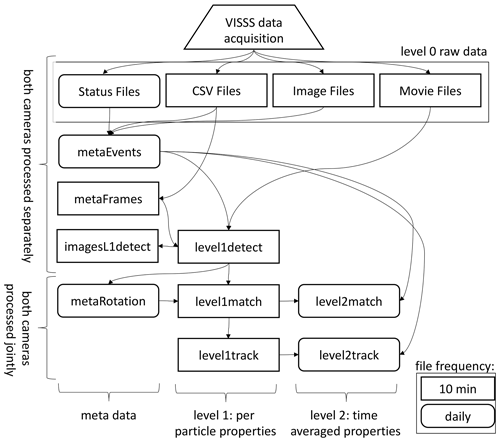

Figure 3. Estimation of particle perimeter p and area A (cyan), maximum dimension Dmax (via smallest enclosing circle, magenta), smallest rectangle (red), region of interest (ROI; green), and elliptical fits using OpenCV's fitEllipseDirect (white) and fitEllipse functions (blue, covered by white line if identical to fitEllipseDirect). The particles were observed during MOSAiC on 15 November 2019 at 05:25 UTC except the particle on the right (Hyytiälä 23 January 2022 at 04:10 UTC).

Obtaining particle properties from the individual VISSS video images requires (1) detecting the particles, (2) matching the observations of the two cameras, and (3) tracking the particles over multiple frames to estimate the fall velocities. The Level-1 products contain per-particle properties in pixel units using (1) a single camera, (2) matched particles from both cameras, and (3) exploiting particles tracked in time. For the Level-2 products, the Level-1 observations are calibrated (i.e., converted from pixels into metric units) and distributions of the particle size, aspect ratio, and other properties are estimated based on the per-particle properties. In addition to the Level-1 and Level-2 products, there are metadata products: metaEvents is a netCDF version of the status files along with a camera blocking estimate based on the .jpg images. metaFrames is a netCDF version of the .csv file. metaRotation keeps track of the camera misalignment as detailed below. The imagesL1detect product contains images of the detected particles, which is required for creating quicklooks like Fig. 1c.

In the following, the processing of the Level-1 and Level-2 products is described in detail (Fig. 2).

3.1 Particle detection

Hydrometeors need to be detected and sized based on individual frames. First, video frames containing motion are identified by a simple threshold-based filter. Except for the MOSAiC data set, this is done in real time, which significantly reduces the data volume. Because snow may stick to the camera window, individual particles within a video frame cannot be identified by image brightness. Instead, the moving mask of pixels is identified by OpenCV's BackgroundSubtractorKNN class (Zivkovic and van der Heijden, 2006) in the image coordinate system (horizontal dimension X, vertical dimension Y pointing to the ground). In the moving mask identified by the background subtraction method, the individual particles are systematically too large so that the moving mask cannot be used directly for particle sizing. For each particle, i.e., connected group of moving pixels, we select a 10 px padded box around the region of interest (ROI) which is the smallest non-rotated rectangular box around the particle's moving mask (Fig. 3). This extended ROI is the input for OpenCV's Canny edge detection (after applying a Gaussian blur with a standard deviation of 1.5 px) to identify the edges of the particle. To estimate the particle mask by filling in the retrieved particle edges, gaps (typically 1 px in size) between the particle edges must be closed. For this, we dilate the retrieved edges by 1 px to form a closed contour, fill in the created contour, and erode the filled shape by 1 px to obtain the particle mask. To detect potential particle holes, which should be retained to avoid overestimating the particle area, the Canny filter particle mask and the moving mask are combined for the final particle mask. As a result, the VISSS can detect even relatively small particle structures, as shown in Fig. 3. 
The use of only 1 px (i.e., 43 to 59 µm) for dilation was found to be sufficient and allows us to potentially resolve more details of the particles than MASC and the PIP, which dilate by 200 µm (Garrett et al., 2012) and 300 µm (Helms et al., 2022), respectively. The final particle mask and corresponding contour are used to estimate the particle's maximum dimension (using OpenCV's minEnclosingCircle function), perimeter p (arcLength), area A (contourArea), and aspect ratio AR (defined as the ratio between the major and minor axis), as well as the canting angle α (defined between the vertical axis and major axis). AR and α are estimated in three different ways: from the smallest rectangle fitted around the contour (minAreaRect) or from an ellipse fitted to the contour (fitEllipse and the more stable fitEllipseDirect). The particle area equivalent diameter (Deq) is obtained from A. Particle complexity c (Garrett et al., 2012; Gergely et al., 2017) is derived from the ratio of the particle perimeter p to the perimeter of a circle with the same area A:

$$c = \frac{p}{2\sqrt{\pi A}}$$
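As a minimal sketch of the two derived quantities, the snippet below computes the area equivalent diameter and the complexity from a perimeter and an area. The complexity is taken as p divided by the perimeter of the circle with the same area, 2√(πA). The function names are illustrative and not those of the VISSS libraries.

```python
import math


def area_equivalent_diameter(area):
    """Diameter of the circle that has the given area."""
    return 2.0 * math.sqrt(area / math.pi)


def complexity(perimeter, area):
    """Perimeter divided by the perimeter of the circle with the same area."""
    return perimeter / (2.0 * math.sqrt(math.pi * area))


# Ideal circle with a radius of 10 px: complexity is 1 by construction.
r = 10.0
c_circle = complexity(2 * math.pi * r, math.pi * r**2)

# Ideal square with 20 px sides: complexity is 2 / sqrt(pi), about 1.13.
s = 20.0
c_square = complexity(4 * s, s**2)

print(round(c_circle, 3), round(c_square, 3))
print(round(area_equivalent_diameter(math.pi * r**2), 3))
```

Values close to 1 indicate compact, round outlines, while branched or aggregated particles yield larger values.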

In addition to these geometric variables, the level1detect product contains variables describing the pixel brightness (min, max, standard deviation, mean, skewness), the position of the centroid, and the blur of the particle estimated from the variance of the Laplacian of the ROI. All particles are processed for which Dmax ≥ 2 px and A ≥ 2 px hold. To avoid detection of particles completely out of focus, the brightness of the darkest pixel must be at least 20 steps darker than the median of the entire image, and the variance of the Laplacian of the ROI brightness must be at least 10. Particle detection is the most computationally intensive processing step and is typically performed on a small cluster. Processing 10 min of heavy snowfall for a single VISSS camera can take several hours on a single AMD EPYC 7302 core.
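The two focus criteria can be illustrated with a small pure-Python sketch. It assumes a 4-neighbour Laplacian evaluated on the interior pixels of the ROI only, which may differ from the kernel and border handling of the OpenCV routine used in the actual processing; the thresholds of 20 brightness steps and a variance of 10 are taken from the text, and the function names are hypothetical.

```python
from statistics import pvariance

MIN_CONTRAST = 20       # darkest pixel vs. image median (from the text)
MIN_LAPLACIAN_VAR = 10  # minimum variance of the Laplacian (from the text)


def laplacian_variance(roi):
    """Variance of a 4-neighbour Laplacian over the interior pixels of roi."""
    rows, cols = len(roi), len(roi[0])
    values = []
    for y in range(1, rows - 1):
        for x in range(1, cols - 1):
            values.append(
                roi[y - 1][x] + roi[y + 1][x] + roi[y][x - 1] + roi[y][x + 1]
                - 4 * roi[y][x]
            )
    return pvariance(values)


def passes_focus_checks(roi, image_median):
    """Apply both rejection criteria for particles that are out of focus."""
    darkest = min(min(row) for row in roi)
    dark_enough = image_median - darkest >= MIN_CONTRAST
    sharp_enough = laplacian_variance(roi) >= MIN_LAPLACIAN_VAR
    return dark_enough and sharp_enough


background = 200
# Sharp synthetic particle: dark 3 x 3 block on a bright background.
sharp = [[background] * 7 for _ in range(7)]
for y in range(2, 5):
    for x in range(2, 5):
        sharp[y][x] = 50
# Smooth brightness ramp: dark enough, but without any edges.
ramp = [[200 - 5 * x for x in range(7)] for _ in range(7)]

print(passes_focus_checks(sharp, background))  # True
print(passes_focus_checks(ramp, background))   # False
```

The ramp fails only the Laplacian criterion, which shows why brightness contrast alone is not sufficient to reject blurred particles.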

3.2 Particle matching

The particle detection of each camera is completely separate, so the particles observed by each camera must be combined. This particle combination allows for the particle position to be determined in a three-dimensional reference coordinate system. As a side effect, this constrains the observation volume by discarding particles outside of the intersection of their observation volumes, i.e., observed by only one camera. We use a right-handed reference coordinate system (x,y,z) with z pointing to the ground to define the position of particles in the observation volume (Fig. 1). In the absence of an absolute reference, we attach the coordinate system to the leader camera (i.e., (xL,yL,zL) = (x,y,z)) such that x=XL and z=YL, where XL and YL are the particle positions in the two-dimensional leader images. Note that small letters describe the three-dimensional coordinate system and capital letters describe the two-dimensional position in the individual camera images. The missing dimension y is obtained from the horizontal position XF in the follower image.

The matching of the particles from both cameras is based on the comparison of two variables: the vertical position of the particles and their vertical extent. Due to measurement uncertainties, the agreement of these variables cannot be perfect and they are treated probabilistically. That is, it is assumed that the difference in vertical extent Δh (vertical position Δz) between the two cameras follows a normally distributed probability density function (PDF) with mean zero and a standard deviation of 1.7 px (1.2 px), based on an analysis of manually matched particle pairs. To determine the probability (of, e.g., measuring a certain vertical extent), the PDF is integrated over an interval of ± 0.5 px representing the discrete 1 px steps.

This process requires matching the observations of both cameras in time. The internal clocks of the cameras (“capture time”) can deviate by more than 1 frame per 10 min. The time assigned by the computers (“record time”) is sometimes, but not always, distorted by computer load. Therefore, the continuous frame index (“capture id”) is used for matching, but this requires determining the index offset between both cameras at the start of each measurement (typically 10 min). For this, the algorithm uses pairs of frames with observed particles that are less than 1 ms apart in record time (i.e., a fraction of the 4 to 7 ms frame interval), assuming that the lag due to computer load is only sporadically increased. This allows the algorithm to identify the most common capture id offset of the frame pairs. We found that this method gives stable results for a subset of 500 frames. Similarly to h and z, the capture id offset Δi is used as the mean of a normal distribution with a standard deviation value of 0.01, which ensures that only particles observed at the same time are matched. During MOSAiC, the data acquisition computer CPUs turned out to be too slow to keep up with processing during heavy snowfall. With the additional impact of a bug in the data acquisition code and drifting computer clocks when the network connection to the ship's reference clock was interrupted, the particle matching for the MOSAiC data set often requires manual adjustment. These problems have been resolved for later campaigns so that matching now works fully automatically.
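The offset search can be sketched as a simple vote: every leader–follower frame pair whose record times differ by less than 1 ms votes for its capture id difference, and the most common difference wins. The data layout (lists of capture id and record time tuples) and the function name are assumptions for illustration, and the timestamps below are synthetic.

```python
from collections import Counter


def estimate_capture_id_offset(leader_frames, follower_frames, max_dt=0.001):
    """Most common capture id difference of frame pairs close in record time.

    Both inputs are lists of (capture_id, record_time_in_seconds) tuples.
    Returns None if no frame pair is closer than max_dt.
    """
    votes = Counter()
    for leader_id, leader_time in leader_frames:
        for follower_id, follower_time in follower_frames:
            if abs(leader_time - follower_time) < max_dt:
                votes[follower_id - leader_id] += 1
    if not votes:
        return None
    return votes.most_common(1)[0][0]


# Synthetic example at 140 Hz: the true offset is 250 frames. One follower
# frame is recorded about one frame interval late (computer load), so it
# votes for a wrong offset of 249 but is outvoted by the other pairs.
frame_dt = 1.0 / 140.0
leader = [(100 + i, i * frame_dt) for i in range(10)]
follower = [(350 + i, i * frame_dt + 0.0002) for i in range(10)]
follower[3] = (353, 3 * frame_dt + 0.0073)

print(estimate_capture_id_offset(leader, follower))  # 250
```

Taking the mode rather than the mean makes the estimate insensitive to sporadic delays, which is the assumption stated in the text.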

The joint product of the probabilities from Δh, Δz, and Δi is considered a match score, which describes the quality of the particle match. Manual inspection revealed that the number of false matches increases strongly for match scores of less than 0.001, which is used as a cutoff criterion. Assuming that the probabilities are correctly determined, this implies that 0.1 % of particle matches are falsely rejected, resulting in a negligible bias.
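A minimal sketch of the match score, assuming that each probability is the normal PDF integrated over ± 0.5 px (or ± 0.5 frames) around the observed difference, as described above. The standard deviations and the cutoff are taken from the text; the function names and the example differences are hypothetical.

```python
import math

SIGMA_H = 1.7       # px, difference in vertical extent
SIGMA_Z = 1.2       # px, difference in vertical position
SIGMA_I = 0.01      # frames, capture id difference
MIN_SCORE = 0.001   # cutoff below which matches are rejected


def normal_cdf(x, mean, std):
    """Cumulative distribution function of a normal distribution."""
    return 0.5 * (1.0 + math.erf((x - mean) / (std * math.sqrt(2.0))))


def interval_probability(delta, std, mean=0.0, half_width=0.5):
    """Normal PDF integrated over delta +/- half_width."""
    upper = normal_cdf(delta + half_width, mean, std)
    lower = normal_cdf(delta - half_width, mean, std)
    return upper - lower


def match_score(delta_h, delta_z, delta_i, expected_offset=0):
    """Joint probability of the three differences between both cameras."""
    return (
        interval_probability(delta_h, SIGMA_H)
        * interval_probability(delta_z, SIGMA_Z)
        * interval_probability(delta_i, SIGMA_I, mean=expected_offset)
    )


good = match_score(delta_h=0, delta_z=0, delta_i=250, expected_offset=250)
poor = match_score(delta_h=5, delta_z=4, delta_i=250, expected_offset=250)
wrong_frame = match_score(delta_h=0, delta_z=0, delta_i=251, expected_offset=250)

for name, score in [("good", good), ("poor", poor), ("wrong frame", wrong_frame)]:
    print(name, score >= MIN_SCORE)
```

Because of the discretization into 1 px intervals, even a perfect agreement in extent and position yields a score well below 1, so scores are only meaningful relative to each other and to the cutoff.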

For each particle, its three-dimensional position is provided and all per-particle variables from the detection are carried forward to the matched-particle product level1match. The ratio of matched to observed particles from a single camera varies with the average particle size, since larger particles can be identified even when they are out of focus, and varies between approximately 10 % and 90 %.

3.3 Correction for camera alignment

Although alignment of both observation volumes is a priority during installation, the cameras can be rotated or displaced, i.e., misaligned. As a result, the same particle may be observed at different heights and zL = zF does not hold. The observed offsets are not constant and can change due to unstable surfaces or pressure of accumulated snow on the VISSS frame. We could simply ignore the misalignment and continue to take z from the leader, but this would not allow us to generally use the vertical position to match particles from both cameras (see above). Also, offsets in z reduce the common observation volume of both cameras, which could lead to biases when calibrating the PSDs if not accounted for.

Besides a constant offset in the vertical z dimension Ofz, one of the cameras can also be rotated around the optical axis (expressed analogously to aircraft coordinate systems with roll φ), around the horizontal axis perpendicular to the optical axis (pitch θ), or around the vertical axis (yaw ψ). As a consequence, the difference between zL and zF depends on the position of the particle in the observation volume.

To account for the misalignment, we attach the coordinate system to the leader (i.e., we assume that the leader is perfectly aligned, (xL,yL,zL) = (x,y,z)) and retrieve the misalignment of the follower with respect to the leader in terms of φ, θ, and Ofz. We cannot derive ψ from the observation, and we have no choice but to neglect it by assuming ψ=0 to reduce the number of unknowns. Mathematically, we need to transform the follower coordinate system (xF,yF,zF) to our leader reference coordinate system (xL,yL,zL) using rotation and shear matrices. In Appendix A, we show how the transformation matrices can be arranged so that the follower's vertical measure zF can be converted to zL depending on φ, θ, and Ofz.

This equation can be considered a forward operator that calculates the expected leader observation zL based on a misalignment state (Ofz, φ, and θ) and additional parameters (xL, yF, zF). While we assume that the misalignment state is constant for each 10 min observation period, the other variables (xL, yF, zF) are available on a per-particle basis, combining observations from both cameras. Therefore, we can use a Bayesian inverse optimal estimation retrieval (Rodgers, 2000) implemented by the pyOptimalEstimation library (Maahn et al., 2020) to retrieve the misalignment state from the actual observed zL.

The retrieved misalignment parameters are required for matching, but retrieving the misalignment parameters requires matched particles. To solve this dilemma, we use an iterative method assuming that misalignment does not change suddenly. The method starts by using the misalignment estimates and uncertainties (inflated by a factor of 10) from the previous time period (10 min) to match particles of the current time period. These particles are used to retrieve values for φ, θ, and Ofz which are used as a priori input for the next iteration of misalignment retrieval. The iteration is stopped when the changes in φ, θ, and Ofz are less than the estimated uncertainties. For efficiency, the iterative method is applied only to the first 300 observed particles and the resulting coefficients are stored in the metaRotation product. A drawback of the method is that this processing step requires processing the 10 min measurement chunks in chronological order, creating a serial bottleneck in the otherwise parallel VISSS processing chain. Obviously, this method does not work when no information is available from the previous time step, e.g., after the instrument was set up or adjusted. To get the starting point for the iteration, the matching algorithm is applied for frames where only a single, relatively large (> 10 px) particle is detected so that the matching can be done based on particle height difference (Δh) alone, ignoring vertical offset (Δz).

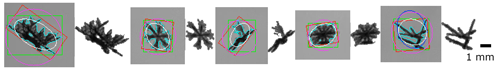

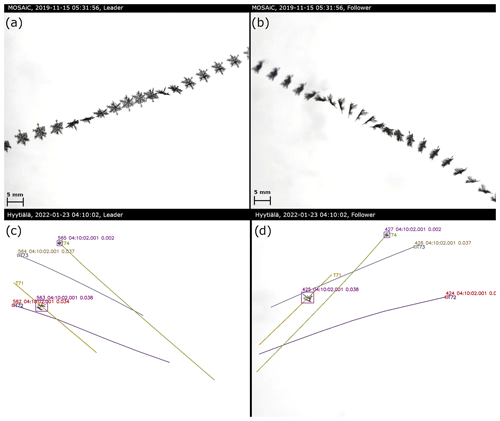

Figure 4. Composite of a snow particle recorded by leader (a) and follower (b) during MOSAiC on 15 November 2019 at 05:31 UTC. Particle tracking is shown for a frame of the leader (c) and the matched frame of the follower (d) in Hyytiälä on 23 January 2022 at 04:10 UTC. For each snow particle (surrounded by boxes denoting particle id, time of observation, and match score), the particle track is shown. The tracks indicate past and future positions and are labeled with the track id number starting with T. Only parts of the tracks observed by both cameras are displayed.

3.4 Particle tracking

Tracking a matched particle over time provides its three-dimensional trajectory, from which sedimentation velocity and interaction with turbulence can be determined. Since the natural tumbling of the particles provides new particle perspectives, the estimates of particle properties such as Dmax, A, p, and AR can be further improved. This can be seen in a composite of a particle (Fig. 4a–b) observed during MOSAiC, which also shows how the multiple perspectives of the particle help to identify its true shape. The example also shows that during MOSAiC the alignment of the cameras was not perfect, resulting in some of the measurements being slightly out of focus; this has been resolved for later campaigns. The tracking algorithm uses a probabilistic approach similar to particle matching, taking into account that the particles' velocities only change to a certain extent from one frame to the next. That change can be quantified as a cost derived from the particles' distances and area differences between two time steps. This allows us to use the Hungarian method (Kuhn, 1955) to assign the individual matched particles to particle tracks for each time step in a way that minimizes the costs, i.e., to solve the assignment problem. To account for the fact that the particle's position is expected to change between observations, we use a Kalman filter (Kalman, 1960) to predict a particle's position based on the past trajectory and use the distance δl between predicted and actual position for the cost estimate. Without a past trajectory, the Kalman filter uses a first guess which we derive from the velocities of 200 previously tracked particles. If no previous particles are available, the tracking algorithm is applied twice to the first 400 particles to avoid a potential bias caused by using a non-case-specific fixed value as a first guess. 
We found that tracking based only on position is unstable and added the difference in particle area (δA, mean of both cameras) to the cost estimate to promote continuity of particle shape. The combined cost is estimated from the product of δl and δA weighted by their expected variance. The performance of the algorithm can be seen for an observation obtained in Hyytiälä on 23 January 2022 at 04:10 UTC where multiple particles are tracked at the same time (Fig. 4c–d). The results of the tracking algorithm are stored in the level1track product which contains the track id and the same per-particle variables as the other Level-1 products.
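The assignment step can be illustrated with a small sketch. It is not the VISSS implementation: a constant-velocity extrapolation stands in for the Kalman filter, an exhaustive search over permutations stands in for the Hungarian method (both return the minimum-cost assignment, but the exhaustive search is only practical for a handful of particles), and the cost normalization (`sigma_l`, `sigma_a`) as well as all names and numbers are hypothetical.

```python
from itertools import permutations
from math import dist


def predict_position(track):
    """Extrapolate the next position from the last two positions.

    Constant-velocity stand-in for the Kalman filter prediction.
    """
    previous, last = track["positions"][-2:]
    return tuple(2 * b - a for a, b in zip(previous, last))


def pair_cost(track, particle, sigma_l=5.0, sigma_a=20.0):
    """Product of normalized position and area differences (assumed form)."""
    delta_l = dist(predict_position(track), particle["position"])
    delta_a = abs(track["area"] - particle["area"])
    return (delta_l / sigma_l) * (delta_a / sigma_a)


def assign(tracks, particles):
    """Return {track id: particle index} with minimal total cost.

    Exhaustive search; assumes at least as many particles as tracks.
    """
    ids = list(tracks)
    best = min(
        permutations(range(len(particles)), len(ids)),
        key=lambda perm: sum(
            pair_cost(tracks[t], particles[p]) for t, p in zip(ids, perm)
        ),
    )
    return dict(zip(ids, best))


# Hypothetical tracks (positions in px, areas in px^2) and new detections.
tracks = {
    "T1": {"positions": [(100, 100, 200), (100, 100, 220)], "area": 50},
    "T2": {"positions": [(400, 300, 500), (402, 300, 515)], "area": 120},
}
particles = [
    {"position": (403, 301, 531), "area": 118},
    {"position": (101, 100, 239), "area": 53},
]
assignment = assign(tracks, particles)
print(assignment)
```

For realistic particle numbers, an implementation of the Hungarian method such as `scipy.optimize.linear_sum_assignment` would replace the exhaustive search.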

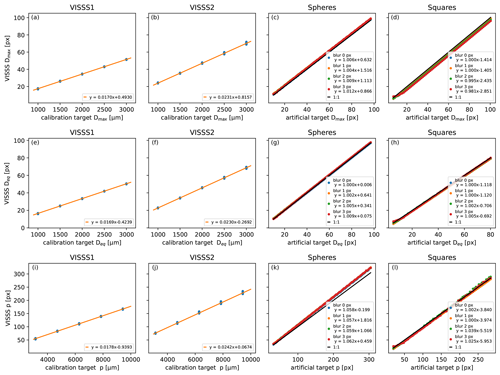

Figure 5. Calibration of Dmax (first row), Deq (second row), and perimeter p (third row) using metal spheres for VISSS1 (first column), using metal spheres for VISSS2 (second column), using drawn sphere images (third column), and using drawn square images (fourth column). For artificial images, a Gaussian blur filter is applied with a standard deviation according to the embedded legends. The legends also show the results of linear least-squares fits.

3.5 Calibration

Calibration is required to convert Dmax, Deq, and p from pixels to micrometers. It depends not only on the optical properties of the lens but also on the computer vision routines used. Calibration is obtained using reference steel or ceramic spheres with 1 to 3 mm diameter that are dropped into the VISSS observation volume. After processing using the standard VISSS routines, the estimated sizes are compared to the expected ones. A linear least-squares fit is applied to the 604 reference sphere observations obtained at Hyytiälä and SAIL, resulting in

for VISSS1 (Fig. 5a), and

for VISSS2 based on 372 samples from Ny-Ålesund (Fig. 5b). The inverse of the slope is 58.832 µm px−1 (43.266 µm px−1) and is close to the manufacturer's specification of 58.75 µm px−1 (43.125 µm px−1) for VISSS1 (VISSS2). The random error estimated from the normalized root mean square error obtained from the difference between observed and expected size is less than 0.8 %, indicating that random errors are negligible. To investigate the source of the non-zero intercept, we also tested the VISSS computer vision routines with artificially created VISSS images with drawn spheres and compared the expected to measured Dmax by a least-squares fit (Fig. 5c). Gaussian blur with a standard deviation between 0 and 3 px was applied to account for a realistic range of blurring due to, e.g., motion blur or particles that are slightly out of focus. Note that in addition to that, a Gaussian blur filter with a standard deviation of 1.5 px needs to be applied during image processing for the Canny edge detection as discussed above. For the artificial spheres, the obtained slope deviates less than 2 % from the expected slope of 1.0, but the offset ranges from 0.6 to 1.5 px, caused by the seeming enlargement of the particle due to the applied blur. To investigate the shape dependency of the results, we repeated the experiment with squares (Fig. 5d). Again, the slope deviates less than 2 % from 1.0, but the offset is negative with values ranging between −1.4 and −2.9 px depending on blur. This is because the corners of the square are rounded when applying Gaussian blur so that the true Dmax can no longer be obtained. In summary, the VISSS routines overestimate the Dmax of spheres but underestimate the Dmax of squares. In reality, the VISSS observes a wide range of different shapes that can be either rather spherical or rather complex with “pointy” corners. 
Therefore, we decided to set the intercept to 0 when calibrating Dmax, which can cause a particle-shape-dependent bias of ± 4 % to ± 6 %. For particles smaller than 10 px, this bias can be slightly larger due to discretization errors, as can be seen from the larger impact of blur for small squares (Fig. 5d).
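The calibration logic can be sketched as follows. The reference data are synthetic (generated with the VISSS1 slope quoted above and an arbitrary 1 px offset), and the function names are hypothetical. The sketch only shows how the inverse slope of the fit becomes the conversion factor once the intercept is set to zero.

```python
def linear_fit(x, y):
    """Ordinary least-squares fit y = slope * x + intercept."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Synthetic reference spheres: true diameter (um) and measured size (px).
expected_um = [1000.0, 1500.0, 2000.0, 2500.0, 3000.0]
measured_px = [d / 58.832 + 1.0 for d in expected_um]  # assumed 1 px offset

slope, intercept = linear_fit(expected_um, measured_px)
um_per_px = 1.0 / slope  # inverse slope is the pixel resolution


def px_to_um(size_px):
    """Convert a size from px to um with the intercept set to zero."""
    return size_px * um_per_px


print(f"{um_per_px:.3f} um/px, intercept {intercept:.2f} px")
print(f"20 px -> {px_to_um(20):.1f} um")
```

Dropping the intercept trades a small shape-dependent bias for a single conversion factor that can be applied to every particle shape.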

For better comparison with Dmax, Deq is used instead of A for testing the computer vision method for estimating A (Fig. 5e–h). The results are almost identical to Dmax, so the slopes derived from Dmax are applied to Deq (and consequently A) as well.

For the perimeter p (Fig. 5i–l), the slopes derived from the reference spheres are about 5 % steeper than for Dmax, indicating that VISSS p values are biased high. This bias is also found for artificial spheres independent of the applied additional blur. Therefore, this bias is related to the image processing and most likely caused by the Gaussian blur required for the Canny edge detection. For squares, however, the slope is close to 1, likely due to compensating effects caused by “cutting corners” of the algorithm. In reality, the VISSS observes more complex particles for which the perimeter increases with decreasing scale (compare to the coastline paradox; Mandelbrot, 1967). Therefore, we conclude that it is extremely unlikely that the perimeter of real particles is biased high like for artificial spheres but rather biased low depending on complexity. As a pragmatic approach, we also apply the Dmax slope to p but stress that p has a considerably higher uncertainty than Dmax or Deq.

The calibration is also checked by holding a millimeter pattern in front of the camera and measuring the pixel distance in the images; the difference from the reference-sphere calibration is less than 2 %. The millimeter pattern calibration did not reveal any dependence on the position in the observation volume, so errors related to imperfect telecentricity of the lenses can likely be neglected.

3.6 Time-resolved particle properties

While Level-1 products contain per-particle properties, Level-2 products provide time-resolved properties. This includes the particle size distribution (PSD), which is the concentration of particles as a function of size normalized to the bin width. To estimate the PSD, the individual particle data are binned by particle size (1 px spacing, i.e., 43.266 to 58.832 µm), averaged over all frames during 1 min periods, and divided by the observation volume. For perfectly aligned cameras, the observation volume would simply be the volume of a cuboid with a base of 1280 px × 1280 px and a height of 1024 px. However, due to misalignment of the cameras, the actual joint observation volume is slightly smaller than a cuboid and can have an irregular shape. Therefore, the observation volumes are first calculated separately for leader and follower. To calculate the intersection of the two individual observation volumes, the eight vertices of the follower observation volume are rotated to the leader coordinate system, and the OpenSCAD library is used to calculate the intersection of the two separate observation volumes in pixel units. To account for the removal of partially observed particles detected at the edge of the image, the effective observation volume is reduced by Dmax/2 on all sides. Consequently, each size bin of the PSD is calibrated independently with a different, Dmax-dependent effective observation volume. Finally, the volume is converted from pixel units to cubic meters using the calibration factor estimated above.
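A minimal sketch of the PSD estimate is given below. It rests on several assumptions that are not the VISSS implementation: perfectly aligned cameras (an ideal cuboid instead of the intersected volume), an edge margin of half the particle size on every side, the VISSS1 pixel size from the calibration section, and hypothetical function names.

```python
from collections import Counter

PX_SIZE_M = 58.832e-6  # VISSS1 pixel size (m per px), from the calibration
BASE_PX = 1280         # base edge length of the ideal cuboid (px)
HEIGHT_PX = 1024       # height of the ideal cuboid (px)


def effective_volume_m3(size_px):
    """Cuboid volume after trimming size_px / 2 from every side (assumed)."""
    edge = max(BASE_PX - size_px, 0.0)
    height = max(HEIGHT_PX - size_px, 0.0)
    return edge * edge * height * PX_SIZE_M**3


def particle_size_distribution(sizes_px, n_frames):
    """Return {bin lower edge in px: concentration in m^-4} for 1 px bins."""
    counts = Counter(int(size) for size in sizes_px)
    psd = {}
    for lower_edge, count in sorted(counts.items()):
        bin_center = lower_edge + 0.5
        mean_count_per_frame = count / n_frames
        volume = effective_volume_m3(bin_center)
        # Normalize by volume and by the bin width (1 px expressed in m).
        psd[lower_edge] = mean_count_per_frame / volume / PX_SIZE_M
    return psd


# Three particle observations collected over two frames (toy numbers).
result = particle_size_distribution([10.2, 10.7, 25.0], n_frames=2)
for lower_edge, concentration in result.items():
    print(f"{lower_edge} px: {concentration:.3e} m^-4")
```

Because the counts are averaged over frames before dividing by the volume, repeated observations of the same particle in consecutive frames do not inflate the concentration.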

The Level-2 products are available based on the level1match and level1track products. For level2match, binned particle properties are available either from one of the cameras or using the minimum, average, or maximum from both cameras for each observed particle property. This means that the multiple observations of the same particle all contribute to the PSD. This does not bias the PSD because the number of observed particles is divided by the number of frames, and the PSD describes how many particles are on average in the observation volume. For level2track, the distributions are based on the observed tracks instead of individual particles and are calculated using the minimum, maximum, mean, or standard deviation along the observed track using both cameras. The use of the maximum (minimum) value along a track is motivated by the assumption that the resulting estimate of a particle property such as Dmax (AR) will be closer to the true value than an estimate that ignores the different perspectives of the particle obtained along the track by the two cameras.

For both level2 variants, the binned PSD and A, perimeter p, and particle complexity c are available binned with Dmax and Deq to allow comparison with instruments using either size definition. In addition to the distributions, PSD-weighted mean values with 1 min resolution are available for A, AR, and c in addition to the first to fourth and sixth moments of the PSD that can be used to describe normalized size distributions (Delanoë et al., 2005; Maahn et al., 2015).

For VISSS observations where only a single camera is available, it would also be possible to develop a product based on particles detected by a single camera, using a threshold based on particle blur to define the observation volume, which is similar to the PIP (Newman et al., 2009).

Here, we analyze first-generation VISSS (VISSS1) data collected in winter 2021/22 at the Hyytiälä Forestry Field Station (61.845° N, 24.287° E; 150 m a.s.l.) operated by the University of Helsinki, Finland, to show the potential of the instrument. For comparison, we use a collocated PIP (von Lerber et al., 2017; Pettersen et al., 2020) and OTT Parsivel2 laser disdrometer (Löffler-Mang and Joss, 2000; Tokay et al., 2014). The distance between the VISSS and PIP was 20 m. The Parsivel was located inside of the double fence intercomparison reference, which was located 35 m from the VISSS.

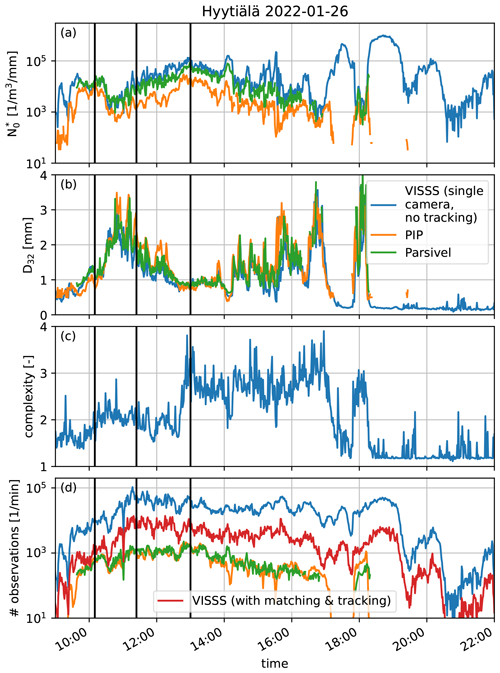

Figure 6. Comparison of the VISSS (blue), PIP (orange), and Parsivel (green) for a snowfall case on 26 January 2022 at Hyytiälä using N0* (a), D32 (b), complexity c (c), and the number of observed particles (d). For the VISSS, the latter is shown without (blue) and with (red) particle tracking. The three vertical black lines indicate the sample PSDs shown in Fig. 8.

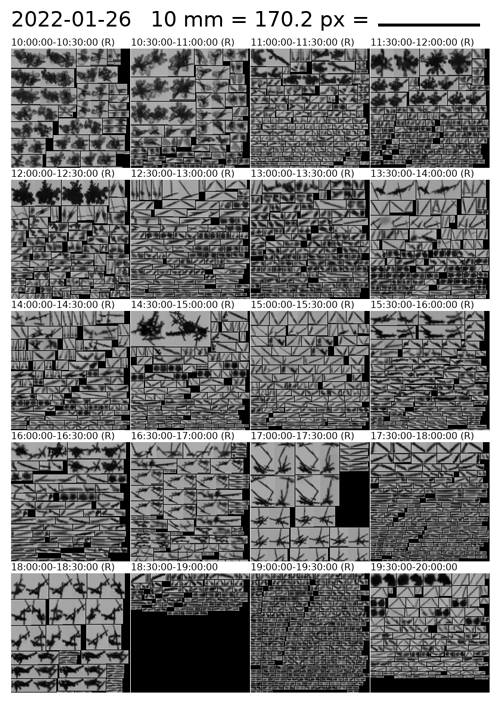

Figure 7. Image pairs of particles observed by the two VISSS cameras on 26 January 2022 between 10:00 and 19:00 UTC in original resolution. The “(R)” indicates that more particles than shown were observed by the VISSS and only a random selection is presented in the panel. Even though particles ≥ 2 px are processed, only particles with Dmax ≥ 10 px (0.59 mm) are shown because the particle shape of smaller particles cannot be identified.

4.1 Case study comparing the VISSS, PIP, and Parsivel

VISSS level2match data are compared with PIP and Parsivel observations for a snowfall case on 26 January 2022. For a fair comparison with the PIP and Parsivel, which observe particles from a single perspective, only data of a single VISSS camera are used in this section. Because the Parsivel uses something similar to Deq (see discussion in Battaglia et al., 2010, for the predecessor instrument), Deq is also used as a PIP and VISSS size descriptor in the following. Also, Deq is not affected by the problems of the PIP particle sizing algorithm identified by Helms et al. (2022). The PSD is characterized by the two variables N0* and D32, which are used to describe normalized size distributions (Testud et al., 2001; Delanoë et al., 2005): N0* is a scaling parameter, and D32 normalizes the size distribution by size. Assuming a typical value of 2 for the exponent b of the mass–size relation (e.g., Mitchell, 1996), D32 is the proxy for the mean mass-weighted diameter, defined as the ratio of the third to the second measured PSD moments. Assuming the same value for b, N0* can be calculated with

as shown in Maahn et al. (2015). The variability in N0* and D32 as well as the particle complexity c and the number of particles observed throughout the day is depicted in Fig. 6. The spectral variable c is available for each size bin. Because using a PSD-weighted average over all sizes for c would be heavily weighted towards smaller particles which are less complex due to the finite resolution, we use the 95th percentile for c in the following. The main precipitation event lasted from 10:00 to 17:30 UTC and shows an anticorrelation between N0* and D32: the former increases up to 10^5 until 13:00 UTC before decreasing to 10^3 at the end of the event. The particle complexity c divides the core period of the event into two parts with c ≈ 2 before 13:00 UTC and c ≈ 2.8 after 13:00 UTC. This transition can also be seen in the random selection of matched particles observed by the VISSS (Fig. 7) retrieved from the imagesL1detect product. For each particle, a pair of images is available from the two VISSS cameras. Before 13:00 UTC, a wide variety of different particle types were observed, including plates, small aggregates, and small rimed particles. Since particle shape and mean brightness are not used to match particles, the observed image pairs also confirm the ability of the VISSS to correctly match data from the two cameras. After 13:00 UTC, needles and needle aggregates dominate the observations, explaining the increase in observed complexity. Towards the end of the event, particles become smaller and more irregularly shaped. Around 18:30 UTC, even some ice-lolly-shaped particles (Keppas et al., 2017) are observed by the VISSS.
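The two normalization variables can be computed from a binned PSD as sketched below. D32 follows the definition given in the text (ratio of the third to the second moment). For the scaling parameter, written N0* here, the sketch assumes the form N0* = (27/2) M2^4 / M3^3, which is what the normalization concept of Delanoë et al. (2005) gives for b = 2; this form is a reconstruction and should be checked against Maahn et al. (2015). All function names and the synthetic exponential PSD are hypothetical.

```python
from math import exp


def moment(sizes_m, psd_m4, bin_width_m, order):
    """n-th PSD moment: sum of N(D) * D^n * dD over all size bins."""
    return sum(n * d**order for d, n in zip(sizes_m, psd_m4)) * bin_width_m


def d32(sizes_m, psd_m4, bin_width_m):
    """Proxy for the mass-weighted mean diameter for b = 2 (in m)."""
    m2 = moment(sizes_m, psd_m4, bin_width_m, 2)
    m3 = moment(sizes_m, psd_m4, bin_width_m, 3)
    return m3 / m2


def n0_star(sizes_m, psd_m4, bin_width_m):
    """Scaling parameter in m^-4 (assumed form for b = 2)."""
    m2 = moment(sizes_m, psd_m4, bin_width_m, 2)
    m3 = moment(sizes_m, psd_m4, bin_width_m, 3)
    return 27.0 / 2.0 * m2**4 / m3**3


# Synthetic exponential PSD N(D) = N0 * exp(-LAMBDA * D) as a sanity check:
# for this shape, D32 = 3 / LAMBDA and the scaling parameter equals N0.
N0 = 1.0e5        # m^-4
LAMBDA = 2000.0   # m^-1
bin_width = 1.0e-5
sizes = [(i + 0.5) * bin_width for i in range(2000)]
psd = [N0 * exp(-LAMBDA * d) for d in sizes]

print(f"D32 = {d32(sizes, psd, bin_width) * 1e3:.3f} mm")
print(f"N0* = {n0_star(sizes, psd, bin_width):.3e} m^-4")
```

The exponential test case is useful because both quantities have closed-form values, so the numerical result can be checked directly.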

N0* and D32 are also calculated from the PSDs observed by the PIP and Parsivel. For the core event, N0* measured by the PIP is about an order of magnitude smaller than that measured by the VISSS and Parsivel. The agreement of the VISSS and Parsivel is better, but some peaks in N0* are not resolved by the Parsivel when D32 is large. This discrepancy may be related to problems of the Parsivel with larger particles reported before (Battaglia et al., 2010). The reason for the observed differences between the PIP and the VISSS is likely more complex. Overall the measured D32 agrees better than N0*. Because D32 is a proxy for the mass-weighted mean diameter, larger, more massive snowflakes have a larger impact on D32 than more numerous smaller particles. This implies that the PIP is not capturing as many small ice particles as the VISSS, while measurements of larger particles seem to be less affected. Tiira et al. (2016) have studied the effect of the left-side PSD truncation on PIP observations (see Fig. 6 in Tiira et al., 2016), but the observed VISSS–PIP difference seems to be somewhat larger than expected; namely the difference extends to larger D32 values.

The number of particle observations ranges between 10 000 and 100 000 per minute, showing that estimates of N0*, D32, and c are based on a sufficient number of observations to limit the impact of random errors. This is about 1.5 orders of magnitude more particles than observed by the Parsivel and PIP (Fig. 6d), but this is not a fair comparison because the Parsivel and PIP report the number of unique particles, whereas the number of particle observations is used here for the VISSS. When applying the tracking algorithm to the VISSS and, consistently with the other sensors, considering only unique particle observations, the advantage of the VISSS is reduced to 50 % more particles than observed by the Parsivel and PIP. The average track length of the VISSS varies throughout the day between 5 and 20 frames with an overall average of 8.5 frames.

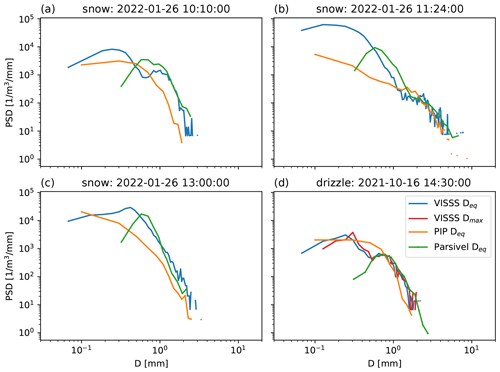

Figure 8. (a–c) Particle size distributions of the VISSS, PIP, and Parsivel for the three cases indicated in Fig. 6 on 26 January 2022 integrated over 1 min. Deq is used as a size descriptor. (d) The same as (a)–(c) but showing the drop size distribution of a drizzle case on 16 October 2021. In addition, the VISSS drop size distribution is also shown with Dmax as the size descriptor.

To further investigate the differences between the instruments, we compare VISSS, PIP, and Parsivel PSDs (Fig. 8) for the three discussed times during the snowfall case. While the Parsivel and VISSS mostly agree for D > 1 mm for all three cases, the Parsivel observes more particles for 0.6 mm < D < 1 mm (as previously reported by Battaglia et al., 2010) before dropping for D < 0.6 mm, which is likely related to limitations associated with the Parsivel pixel resolution of 125 µm. The comparison of the VISSS and PIP shows larger discrepancies as explained above. The PSDs tend to agree for Deq > 1 mm for cases where larger ice particles are more spherical (11:24 UTC). For the needle case (13:00 UTC), the PIP reports lower number concentrations than the VISSS and Parsivel for almost all sizes. At 10:10 UTC, the VISSS and PIP approximately agree for sizes between 0.4 and 0.8 mm, but the PIP reports lower values for other sizes. Although no needles are observed at 10:10 UTC, Fig. 7 shows that there were also small columns that could be affected by the dilation of structures less than 0.4 mm wide by the PIP software, or some parts of radiating assemblage of plates were removed by the image processing.

All three instruments have different sensitivities to small particles. This can be seen for the drop in D32 around 17:45 UTC (Fig. 6) where the Parsivel does not report any values, and the PIP estimates differ strongly from the VISSS when D32 < 1 mm. The VISSS reports D32 values as low as 0.16 mm around 19:00 UTC. Although the sample sizes are sufficient (> 10 000 particles per minute), the errors are likely large due to the VISSS pixel resolution of ∼ 0.06 mm. In the absence of an instrument designed to observe small particles, it is not possible to determine how reliably the VISSS detects and sizes small particles.

Additional insight is provided by comparing the drop size distributions (DSDs) observed by the three instruments during a drizzle event on 16 October 2021 (Fig. 8d). The use of drizzle allows the Parsivel to be used as a reference instrument as it has been shown to provide accurate DSDs for sizes between 0.5 and 5 mm (Tokay et al., 2014). In fact, Parsivel and VISSS DSDs differ by no more than 10 % for 0.55 mm < D < 0.9 mm, with both showing a dip in the distribution around 0.55 mm. For larger droplets, differences are likely related to their low frequency of occurrence increasing statistical errors. For smaller droplets, the VISSS (as well as the PIP) reports concentrations about an order of magnitude higher than the Parsivel. Similarly, Thurai et al. (2019) found that a 50 µm optical array probe observed more small drizzle droplets than a Parsivel. For these small particle sizes close to the VISSS camera pixel resolution, discretization errors likely play a role, which we investigate by comparing Dmax and Deq for the VISSS. As drizzle droplets can be considered sufficiently spherical (i.e., AR > 0.9) for D < 1 mm (Beard et al., 2010), we can evaluate whether Dmax = Deq holds (Fig. 8d). As expected, VISSS Dmax and Deq are in almost perfect agreement for D > 0.5 mm, but larger differences occur for D < 0.3 mm, indicating that discretization errors can become substantial for D < 0.3 mm.
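Why sizing degrades near the pixel resolution can be illustrated with an idealized experiment: a disk is rasterized onto a pixel grid at several sub-pixel positions, and Deq is recomputed from the pixel count. This is a toy model with a hard binary threshold and hypothetical function names; it does not include the blur, edge detection, or thresholds of the VISSS routines.

```python
from math import pi, sqrt


def rasterized_deq(diameter_px, offset_px):
    """Deq (px) of a disk drawn on a pixel grid.

    A pixel counts as part of the disk if its center lies inside it.
    offset_px shifts the disk center relative to a pixel corner.
    """
    radius = diameter_px / 2.0
    n = int(diameter_px) + 4  # grid large enough to contain the disk
    center = n // 2 + offset_px
    count = sum(
        1
        for i in range(n)
        for j in range(n)
        if (i + 0.5 - center) ** 2 + (j + 0.5 - center) ** 2 <= radius**2
    )
    return 2.0 * sqrt(count / pi)


def worst_relative_error(diameter_px, offsets=(0.0, 0.25, 0.5)):
    """Largest relative Deq error over the tested sub-pixel positions."""
    return max(
        abs(rasterized_deq(diameter_px, o) - diameter_px) / diameter_px
        for o in offsets
    )


for diameter in (3.0, 5.0, 30.0):
    error = worst_relative_error(diameter)
    print(f"D = {diameter:4.1f} px: worst relative error {error:.1%}")
```

In this toy model the error depends strongly on where the particle falls relative to the pixel grid once it spans only a few pixels, whereas a 30 px disk is sized almost independently of its position.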

In the absence of a reference instrument for smaller particles in Hyytiälä or reference spheres with diameters smaller than 0.5 mm, the performance of the VISSS for observing small particles with D < 0.5 mm is difficult to assess. Particles close to the thresholds for size, area, and blur might be rejected for parts of the observed trajectory, which could explain the decrease in VISSS number concentration for small particle sizes.

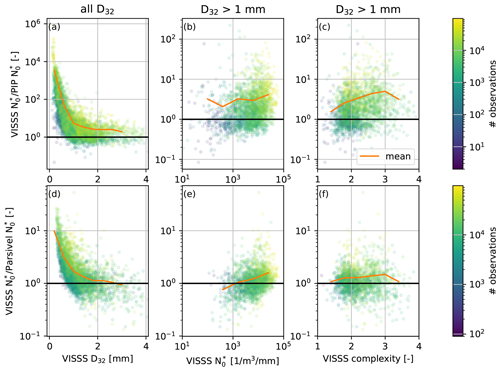

Figure 9. Statistical analysis of the ratio of VISSS to PIP N0* as a function of (a) VISSS D32, (b) VISSS N0*, and (c) VISSS complexity c. (d–f) The same as (a)–(c) but comparing the VISSS to the Parsivel. The color indicates the number of particles observed by the VISSS; the orange line indicates the mean ratio. The analysis for N0* (b, e) and c (c, f) is restricted to cases with D32 > 1 mm.

Figure 10. Mean (a) Dmax, (b) Deq, (c) perimeter p, (d) aspect ratio AR, and (e) radar reflectivity factor Ze for cases with aggregates (blue), needles (orange), and graupel (green) when using only a single VISSS camera, both cameras, and both cameras considering particle tracking. The shaded areas denote 1 standard deviation.

4.2 Statistical comparison of the VISSS, PIP, and Parsivel

The results of the case study comparison of the VISSS, PIP, and Parsivel also hold when comparing 6661 min of joint snowfall observations during the winter of 2021/22 (Fig. 9). The ratio of N0* observed by the VISSS and PIP (Parsivel) is compared to D32, N0*, and complexity c. For D32 < 1 mm, the VISSS-to-PIP (VISSS-to-Parsivel) ratio increases strongly and can reach a value of 10 000 (10). Therefore, the comparison of the ratio with N0* itself and c is limited to data with D32 > 1 mm. For the PIP, the difference in N0* does not depend on N0* but, as suggested by the needle case above, on complexity c, with higher c values indicating larger differences, probably as a result of limitations in the PIP image processing implementation. For the VISSS-to-Parsivel comparison, the difference depends on N0* instead of c. Because D32 and N0* are often anticorrelated, this could be related to size-dependent errors of the Parsivel as identified by Battaglia et al. (2010).

4.3 Advantage of the second VISSS camera

Here, we quantify the advantage of observing multiple orientations of a particle with the VISSS. For this, we compare 1 min values of mean Dmax, Deq, and p obtained from a single camera, using the maximum value obtained from both cameras and the maximum value obtained during the observed particle track (Fig. 10a–c). For AR, the minimum of the two cameras and along the track is used instead of the maximum (Fig. 10d). To evaluate the effect of particle type, three cases with mostly dendritic aggregates (6 December 2021, 07:19–12:30 UTC), needles (5 January 2022, 00:00–14:30 UTC), and graupel (6 December 2021, 00:00–04:50, 13:30–14:20, and 21:15–24:00, and 5 January 2022, 15:00–16:40 and 19:40–20:50 UTC) are used. The change in observed values is strongest for needles, which are the most complex particles, where when using two cameras Dmax, Deq, p, and AR change by 16 %, 10 %, 14 %, and −12 %, respectively, and when additionally considering tracking the values change by 24 %, 19 %, 24 %, and −27 %, respectively. Changes for dendritic aggregates and graupel are smaller and surprisingly similar: Dmax increases by 8 % and 7 % (13 % and 16 %), Deq increases by 6 % and 6 % (14 % and 14 %), and p increases by 7 % and 7 % (19 % and 16 %), respectively, when using two cameras (two cameras with tracking). The dependency of particle properties on orientation can also be seen from the fact that mean AR decreases from 0.62 to 0.54 and 0.42 for aggregates and from 0.73 to 0.67 and 0.54 for graupel, highlighting that orientation matters even for graupel.
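The three estimates compared here can be written down for a single tracked particle as follows. The numbers are invented for illustration, the dictionary layout and function names are hypothetical, and the leader is arbitrarily chosen as the single camera.

```python
from statistics import mean

# One hypothetical track observed in three frames by both cameras.
track = {
    "leader": {"dmax": [10.0, 12.0, 11.0], "ar": [0.6, 0.5, 0.7]},
    "follower": {"dmax": [14.0, 9.0, 13.0], "ar": [0.4, 0.8, 0.5]},
}


def single_camera(track, key):
    """Mean over all frames using the leader camera only."""
    return mean(track["leader"][key])


def both_cameras(track, key, pick):
    """Mean over frames of the per-frame max (or min) of the two cameras."""
    pairs = zip(track["leader"][key], track["follower"][key])
    return mean(pick(pair) for pair in pairs)


def along_track(track, key, pick):
    """Max (or min) over all frames and both cameras of the whole track."""
    return pick(track["leader"][key] + track["follower"][key])


dmax_single = single_camera(track, "dmax")
dmax_both = both_cameras(track, "dmax", max)
dmax_track = along_track(track, "dmax", max)

ar_single = single_camera(track, "ar")
ar_both = both_cameras(track, "ar", min)
ar_track = along_track(track, "ar", min)

print(f"Dmax: {dmax_single:.1f} -> {dmax_both:.1f} -> {dmax_track:.1f} px")
print(f"AR:   {ar_single:.2f} -> {ar_both:.2f} -> {ar_track:.2f}")
```

By construction, the maximum can only grow and the minimum can only shrink as more perspectives are added, which is why Dmax increases and AR decreases from the single-camera to the tracked estimate.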

Underestimating Dmax can lead to biases when using commonly used Dmax-based power laws for particle mass (Mitchell, 1996) or when using in situ observations to forward-model radar observations. This is because scattering properties of non-spherical particles are typically parameterized as a function of Dmax (Mishchenko et al., 1996; Hogan et al., 2012). Further, particle scattering properties are also impacted by the distribution of particle mass along the path of propagation (Hogan and Westbrook, 2014), which is impacted by AR. To analyze how the different Dmax and AR estimates affect the simulated radar reflectivity for vertically pointing cloud radar observations at 94 GHz, we use the PAMTRA radar simulator (Passive and Active Microwave radiative TRAnsfer tool; Mech et al., 2020) with the riming-dependent parameterization of the particle scattering properties (Maherndl et al., 2023a) assuming horizontal particle orientation (Sassen, 1977; Hogan et al., 2002). Using two cameras (i.e., max(Dmax) and min(AR)) increases mean Ze values by 2.1, 2.5, and 1.8 dB for aggregates, needles, and graupel, respectively. When the varying orientations are taken into account during tracking, the offsets increase to 4.5, 4.6, and 3.7 dB, respectively, which are considerably larger than the commonly used measurement uncertainty of 1 dB for cloud radars. The change in Ze is similar to the 3.2 dB found by Wood et al. (2013) using idealized particles.
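To put these offsets into perspective, a difference in dB can be converted to a factor in linear reflectivity units. The short sketch below uses the tracking offsets quoted above; the function name is hypothetical.

```python
def db_to_linear(delta_db):
    """Convert a reflectivity difference in dB to a linear factor."""
    return 10.0 ** (delta_db / 10.0)


# Ze offsets (dB) with two cameras and tracking, as quoted in the text.
offsets_db = {"aggregates": 4.5, "needles": 4.6, "graupel": 3.7}
factors = {name: db_to_linear(value) for name, value in offsets_db.items()}

for name, factor in factors.items():
    print(f"{name}: {offsets_db[name]} dB is a factor of {factor:.2f}")
```

An offset of 4.5 dB therefore means that the simulated linear reflectivity changes by almost a factor of 3.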

The hardware and data processing of the open-source Video In Situ Snowfall Sensor (VISSS) have been introduced. The VISSS consists of two cameras with telecentric lenses oriented at a 90° angle to each other and that observe a common observation volume. Both cameras are illuminated by LED backlights (see Table 1 for specifications). The goal of the VISSS design was to combine a large, well-defined observation volume and relatively high pixel resolution with a design that limits wind disturbance and allows accurate sizing. The VISSS was initially developed for MOSAiC, but additional deployments at Hyytiälä, Finland; Gothic, Colorado, USA; and Eriswil, Switzerland, followed. Advanced versions of the instrument have been installed at Ny-Ålesund, Svalbard, and again at Hyytiälä, Finland. The VISSS Level-1 processing steps for obtaining per-particle properties include particle detection and sizing, particle matching between the two cameras considering the exact alignment of the cameras to each other, and tracking of individual particles to estimate sedimentation velocity and improve particle property estimates. For Level-2 products, the temporally averaged particle properties and size distributions are available in calibrated metric units.

The initial analysis shows the potential of the instrument. The relatively large observation volume of the VISSS leads to robust statistics based on up to 10 000 individual particle observations per minute. The data set from Hyytiälä obtained in the winter of 2021/22 is used to compare the VISSS with collocated PIP and Parsivel instruments. While the comparison with the Parsivel shows – given the known limitations of the instrument for snowfall (Battaglia et al., 2010) – excellent agreement, the comparison with the PIP is more complicated. The differences in the observed PSDs increase with increasing particle complexity c (e.g., needles), but differences remain even for non-needle cases, and for a case with a relatively high concentration of large, relatively spherical particles, agreement was only found for sizes larger than 1 mm. Because the Parsivel is well characterized for liquid precipitation (Tokay et al., 2014), a drizzle case is also used for comparison. The case shows an excellent agreement between the Parsivel and VISSS for droplets larger than 0.5 mm, confirming the general accuracy of the VISSS. Compared to both the PIP and the Parsivel, the VISSS observes a larger number of small particles that can drastically change the retrieved PSD coefficients in some cases. However, the first-generation VISSS pixel resolution of 0.06 mm is likely to introduce discretization errors for particles smaller than 0.3 mm (i.e., 5 px), potentially leading to errors in the sizing of very small particles. Furthermore, we analyzed the advantage of the VISSS due to the availability of a second camera. Depending on the particle type, mean Dmax increases up to 16 % and mean aspect ratio AR decreases by 12 %. For the analyzed case, the VISSS observes each particle on average 8.5 times, which can further improve estimates of particle properties due to the natural rotation of the particle during sedimentation. 
In comparison to using only a single camera, this can increase the mean Dmax by up to 24 % and reduce AR by up to 31 %.

VISSS product development will continue, e.g., by implementing machine-learning-based particle classifications (Praz et al., 2017; Leinonen and Berne, 2020; Leinonen et al., 2021). Also, we will work on making VISSS data acquisition and processing more efficient by handling some processing steps in the data acquisition system in real time. We also invite the community to contribute to the development of the open-source instrument. This not only applies to the software products, but also allows for other groups to build and improve the instrument. It could even mean advancing the VISSS hardware concept further, by, e.g., adding a third camera to observe snow particles from below or – given the extended 1300 mm working distance of VISSS3 – from above. The VISSS hardware plans (second-generation VISSS; Maahn et al., 2023b; third-generation VISSS; Maahn and Wolter, 2024), data acquisition software (Maahn, 2023a), and data processing libraries (Maahn, 2023b) have been released under an open-source license so that reverse engineering as done by Helms et al. (2022) is not required to analyze the VISSS data processing. The only limitation of the licenses used is that modification of the VISSS needs to be made publicly available under the same license.

There are many potential applications for VISSS observations. They can be used for model evaluation with advanced microphysics (e.g., Hashino and Tripoli, 2011; Milbrandt and Morrison, 2015), characterization of PSDs as a function of snowfall formation processes, or retrievals combining in situ and remote sensing observations. Tracking of a particle in three dimensions can be used to understand the impact of turbulence on particle trajectories. Beyond atmospheric science, the VISSS shows potential for quantifying the occurrence of flying insects, as standard insect counting techniques such as suction traps are typically destructive and labor-intensive.

We use a right-handed coordinate system (x,y,z) to define the position of particles in the observation volume, where z points to the ground (see Fig. 1). The follower coordinate system (xF,yF,zF) can be transformed into the leader coordinate system (xL,yL,zL) by the standard transformation matrix

using the follower's roll φ, yaw ψ, and pitch θ, analogous to airborne measurements, and with , , and , where Ofx, Ofy, and Ofz are the offsets of the follower coordinate system in the x, y, and z directions, respectively (see Fig. 1). Offsets in Ofx and Ofy are neglected because they would only materialize in reduced particle sharpness and not in the retrieved three-dimensional position. The opposite transformation can be described by

Since we have only one measurement in the x and y dimensions but two in z, we use the difference between the measured zL and the estimated zL from matched particles to retrieve the misalignment angles and offsets:

In this equation, is unknown, so it is derived from

where, in turn, yL is not observed. Therefore, yL is obtained from

Inserting Eq. (A5) into Eq. (A4) yields, after a couple of simplifications,

Inserting Eq. (A6) into Eq. (A3) yields

We have no information about ψ; therefore we have no choice but to assume ψ=0, leading to

VISSS hardware plans (Maahn et al., 2023b; Maahn and Wolter, 2024), data acquisition software (Maahn, 2023a), and data processing libraries (version 2023.1.6; Maahn, 2023b) have been released under an open-source license. Processed VISSS, PIP, and Parsivel observations used for the pilot study are available at https://zenodo.org/record/8383794 (Maahn and Moisseev, 2023b). VISSS raw data are available for MOSAiC 2019/20 (https://doi.org/10.1594/PANGAEA.960391, Maahn et al., 2023a), Hyytiälä 2021/22 (https://doi.org/10.1594/PANGAEA.959046, Maahn and Moisseev, 2023a), and Ny-Ålesund 2021/22 (https://doi.org/10.1594/PANGAEA.958537, Maahn and Maherndl, 2023) on PANGAEA.

MM acquired funding, developed the instrument, processed the VISSS data, analyzed the data of the case study, and wrote the manuscript. DM processed PIP and Parsivel data and contributed to data analysis. NM and IS contributed to instrument calibration and particle tracking development, respectively. MS supported funding acquisition and was responsible for the VISSS deployment at MOSAiC. All authors reviewed and edited the draft.

At least one of the (co-)authors is a member of the editorial board of Atmospheric Measurement Techniques. The peer-review process was guided by an independent editor, and the authors also have no other competing interests to declare.

Publisher's note: Copernicus Publications remains neutral with regard to jurisdictional claims made in the text, published maps, institutional affiliations, or any other geographical representation in this paper. While Copernicus Publications makes every effort to include appropriate place names, the final responsibility lies with the authors.

We thank all persons involved in the 2019–2020 MOSAiC expedition (MOSAiC20192020) of the research vessel Polarstern (Project ID AWI_PS122_00), as listed in Nixdorf et al. (2021), in particular Christopher Cox, Michael Gallagher, Jenny Hutchings, and Taneil Uttal. In Hyytiälä, the VISSS was taken care of by Lauri Ahonen, Matti Leskinen, and Anna Trosits. In Ny-Ålesund, the VISSS installation was made possible by the AWIPEV team, including Guillaume Hérment, Fieke Rader, and Wilfried Ruhe. During SAIL, we were supported by the operations team of the Rocky Mountain Biological Laboratory and the DOE Atmospheric Radiation Measurement technicians, who took great care of the VISSS. Thanks go to Donald David, Rainer Haseneder-Lind, Jim Kastengren, Pavel Krobot, and Steffen Wolters for assembling VISSS instruments. We thank Thomas Kuhn and Charles Helms for their extensive and constructive reviews.

This work was funded by the German Research Foundation (DFG, Deutsche Forschungsgemeinschaft) Transregional Collaborative Research Center SFB/TRR 172 (Project ID 268020496), DFG Priority Program SPP2115 “Fusion of Radar Polarimetry and Numerical Atmospheric Modelling Towards an Improved Understanding of Cloud and Precipitation Processes” (PROM) under grant PROM-CORSIPP (Project ID 408008112), and the University of Colorado Boulder CIRES (Cooperative Institute for Research in Environmental Sciences) Innovative Research Program. Matthew D. Shupe was supported by the National Science Foundation (OPP-1724551) and National Oceanic and Atmospheric Administration (NA22OAR4320151). The deployment at Hyytiälä was supported by ACTRIS-2 TNA funded by the European Commission under the Horizon 2020 – Research and Innovation Framework Programme, H2020-INFRADEV-2019-2, grant agreement number 871115. The PIP deployment at the University of Helsinki station is supported by the NASA Global Precipitation Measurement Mission ground validation program.

This paper was edited by Alexis Berne and reviewed by Thomas Kuhn and Charles N. Helms.

Battaglia, A., Rustemeier, E., Tokay, A., Blahak, U., and Simmer, C.: PARSIVEL Snow Observations: A Critical Assessment, J. Atmos. Ocean. Tech., 27, 333–344, https://doi.org/10.1175/2009JTECHA1332.1, 2010.

Beard, K. V., Bringi, V. N., and Thurai, M.: A New Understanding of Raindrop Shape, Atmos. Res., 97, 396–415, https://doi.org/10.1016/j.atmosres.2010.02.001, 2010.

Cooper, S. J., Wood, N. B., and L'Ecuyer, T. S.: A variational technique to estimate snowfall rate from coincident radar, snowflake, and fall-speed observations, Atmos. Meas. Tech., 10, 2557–2571, https://doi.org/10.5194/amt-10-2557-2017, 2017.

Cooper, S. J., L'Ecuyer, T. S., Wolff, M. A., Kuhn, T., Pettersen, C., Wood, N. B., Eliasson, S., Schirle, C. E., Shates, J., Hellmuth, F., Engdahl, B. J. K., Vásquez-Martín, S., Ilmo, T., and Nygård, K.: Exploring Snowfall Variability through the High-Latitude Measurement of Snowfall (HiLaMS) Field Campaign, B. Am. Meteorol. Soc., 103, E1762–E1780, https://doi.org/10.1175/BAMS-D-21-0007.1, 2022.

Del Guasta, M.: ICE-CAMERA: a flatbed scanner to study inland Antarctic polar precipitation, Atmos. Meas. Tech., 15, 6521–6544, https://doi.org/10.5194/amt-15-6521-2022, 2022.

Delanoë, J., Protat, A., Testud, J., Bouniol, D., Heymsfield, A. J., Bansemer, A., Brown, P. R. A., and Forbes, R. M.: Statistical Properties of the Normalized Ice Particle Size Distribution, J. Geophys. Res., 110, D10201, https://doi.org/10.1029/2004JD005405, 2005.

Dunnavan, E. L., Jiang, Z., Harrington, J. Y., Verlinde, J., Fitch, K., and Garrett, T. J.: The Shape and Density Evolution of Snow Aggregates, J. Atmos. Sci., 76, 3919–3940, https://doi.org/10.1175/JAS-D-19-0066.1, 2019.

Feldman, D. R., Aiken, A. C., Boos, W. R., Carroll, R. W. H., Chandrasekar, V., Collis, S., Creamean, J. M., de Boer, G., Deems, J., DeMott, P. J., Fan, J., Flores, A. N., Gochis, D., Grover, M., Hill, T. C. J., Hodshire, A., Hulm, E., Hume, C. C., Jackson, R., Junyent, F., Kennedy, A., Kumjian, M., Levin, E. J. T., Lundquist, J. D., O'Brien, J., Raleigh, M. S., Reithel, J., Rhoades, A., Rittger, K., Rudisill, W., Sherman, Z., Siirila-Woodburn, E., Skiles, S. M., Smith, J. N., Sullivan, R. C., Theisen, A., Tuftedal, M., Varble, A. C., Wiedlea, A., Wielandt, S., Williams, K., and Xu, Z.: The Surface Atmosphere Integrated Field Laboratory (SAIL) Campaign, B. Am. Meteorol. Soc., 104, 2192–2222, https://doi.org/10.1175/BAMS-D-22-0049.1, 2023.

Field, P. R. and Heymsfield, A. J.: Importance of Snow to Global Precipitation, Geophys. Res. Lett., 42, 2015GL065497, https://doi.org/10.1002/2015GL065497, 2015.

Fitch, K. E. and Garrett, T. J.: Graupel Precipitating From Thin Arctic Clouds With Liquid Water Paths Less Than 50 g m⁻², Geophys. Res. Lett., 49, e2021GL094075, https://doi.org/10.1029/2021GL094075, 2022.

Garrett, T. J. and Yuter, S. E.: Observed Influence of Riming, Temperature, and Turbulence on the Fallspeed of Solid Precipitation, Geophys. Res. Lett., 41, 6515–6522, https://doi.org/10.1002/2014GL061016, 2014.

Garrett, T. J., Fallgatter, C., Shkurko, K., and Howlett, D.: Fall speed measurement and high-resolution multi-angle photography of hydrometeors in free fall, Atmos. Meas. Tech., 5, 2625–2633, https://doi.org/10.5194/amt-5-2625-2012, 2012.

Gergely, M. and Garrett, T. J.: Impact of the Natural Variability in Snowflake Diameter, Aspect Ratio, and Orientation on Modeled Snowfall Radar Reflectivity, J. Geophys. Res.-Atmos., 121, 2016JD025192, https://doi.org/10.1002/2016JD025192, 2016.

Gergely, M., Cooper, S. J., and Garrett, T. J.: Using snowflake surface-area-to-volume ratio to model and interpret snowfall triple-frequency radar signatures, Atmos. Chem. Phys., 17, 12011–12030, https://doi.org/10.5194/acp-17-12011-2017, 2017.

Grazioli, J., Tuia, D., Monhart, S., Schneebeli, M., Raupach, T., and Berne, A.: Hydrometeor classification from two-dimensional video disdrometer data, Atmos. Meas. Tech., 7, 2869–2882, https://doi.org/10.5194/amt-7-2869-2014, 2014.

Grazioli, J., Genthon, C., Boudevillain, B., Duran-Alarcon, C., Del Guasta, M., Madeleine, J.-B., and Berne, A.: Measurements of precipitation in Dumont d'Urville, Adélie Land, East Antarctica, The Cryosphere, 11, 1797–1811, https://doi.org/10.5194/tc-11-1797-2017, 2017.

Hashino, T. and Tripoli, G. J.: The Spectral Ice Habit Prediction System (SHIPS). Part III: Description of the Ice Particle Model and the Habit-Dependent Aggregation Model, J. Atmos. Sci., 68, 1125–1141, https://doi.org/10.1175/2011JAS3666.1, 2011.

Helms, C. N., Munchak, S. J., Tokay, A., and Pettersen, C.: A comparative evaluation of snowflake particle shape estimation techniques used by the Precipitation Imaging Package (PIP), Multi-Angle Snowflake Camera (MASC), and Two-Dimensional Video Disdrometer (2DVD), Atmos. Meas. Tech., 15, 6545–6561, https://doi.org/10.5194/amt-15-6545-2022, 2022.

Hicks, A. and Notaroš, B. M.: Method for Classification of Snowflakes Based on Images by a Multi-Angle Snowflake Camera Using Convolutional Neural Networks, J. Atmos. Ocean. Tech., 36, 2267–2282, https://doi.org/10.1175/JTECH-D-19-0055.1, 2019.

Hogan, R. J. and Westbrook, C. D.: Equation for the Microwave Backscatter Cross Section of Aggregate Snowflakes Using the Self-Similar Rayleigh-Gans Approximation, J. Atmos. Sci., 71, 3292–3301, https://doi.org/10.1175/JAS-D-13-0347.1, 2014.

Hogan, R. J., Field, P. R., Illingworth, A. J., Cotton, R. J., and Choularton, T. W.: Properties of Embedded Convection in Warm-Frontal Mixed-Phase Cloud from Aircraft and Polarimetric Radar, Q. J. Roy. Meteor. Soc., 128, 451–476, https://doi.org/10.1256/003590002321042054, 2002.

Hogan, R. J., Tian, L., Brown, P. R. A., Westbrook, C. D., Heymsfield, A. J., and Eastment, J. D.: Radar Scattering from Ice Aggregates Using the Horizontally Aligned Oblate Spheroid Approximation, J. Appl. Meteorol. Clim., 51, 655–671, https://doi.org/10.1175/JAMC-D-11-074.1, 2012.

Huang, G.-J., Bringi, V. N., Moisseev, D., Petersen, W. A., Bliven, L., and Hudak, D.: Use of 2D-video Disdrometer to Derive Mean Density–Size and Ze–R Relations: Four Snow Cases from the Light Precipitation Validation Experiment, Atmos. Res., 153, 34–48, https://doi.org/10.1016/j.atmosres.2014.07.013, 2015.

Kalman, R. E.: A New Approach to Linear Filtering and Prediction Problems, J. Basic Eng., 82, 35–45, https://doi.org/10.1115/1.3662552, 1960.

Kennedy, A., Scott, A., Loeb, N., Sczepanski, A., Lucke, K., Marquis, J., and Waugh, S.: Bringing Microphysics to the Masses: The Blowing Snow Observations at the University of North Dakota: Education through Research (BLOWN-UNDER) Campaign, B. Am. Meteorol. Soc., 103, E83–E100, https://doi.org/10.1175/BAMS-D-20-0199.1, 2022.

Keppas, S. Ch., Crosier, J., Choularton, T. W., and Bower, K. N.: Ice Lollies: An Ice Particle Generated in Supercooled Conveyor Belts, Geophys. Res. Lett., 44, 5222–5230, https://doi.org/10.1002/2017GL073441, 2017.

Kuhn, H. W.: The Hungarian Method for the Assignment Problem, Nav. Res. Logist. Q., 2, 83–97, https://doi.org/10.1002/nav.3800020109, 1955.

Kuhn, T. and Vázquez-Martín, S.: Microphysical properties and fall speed measurements of snow ice crystals using the Dual Ice Crystal Imager (D-ICI), Atmos. Meas. Tech., 13, 1273–1285, https://doi.org/10.5194/amt-13-1273-2020, 2020.

Kulie, M. S., Pettersen, C., Merrelli, A. J., Wagner, T. J., Wood, N. B., Dutter, M., Beachler, D., Kluber, T., Turner, R., Mateling, M., Lenters, J., Blanken, P., Maahn, M., Spence, C., Kneifel, S., Kucera, P. A., Tokay, A., Bliven, L. F., Wolff, D. B., and Petersen, W. A.: Snowfall in the Northern Great Lakes: Lessons Learned from a Multi-Sensor Observatory, B. Am. Meteorol. Soc., 102, 1–61, https://doi.org/10.1175/BAMS-D-19-0128.1, 2021.

Leinonen, J. and Berne, A.: Unsupervised classification of snowflake images using a generative adversarial network and K-medoids classification, Atmos. Meas. Tech., 13, 2949–2964, https://doi.org/10.5194/amt-13-2949-2020, 2020.

Leinonen, J., Grazioli, J., and Berne, A.: Reconstruction of the mass and geometry of snowfall particles from multi-angle snowflake camera (MASC) images, Atmos. Meas. Tech., 14, 6851–6866, https://doi.org/10.5194/amt-14-6851-2021, 2021.

Li, H., Moisseev, D., and von Lerber, A.: How Does Riming Affect Dual-Polarization Radar Observations and Snowflake Shape?, J. Geophys. Res.-Atmos., 123, 6070–6081, https://doi.org/10.1029/2017JD028186, 2018.

Li, J., Abraham, A., Guala, M., and Hong, J.: Evidence of preferential sweeping during snow settling in atmospheric turbulence, J. Fluid Mech., 928, A8, https://doi.org/10.1017/jfm.2021.816, 2021.

Löffler-Mang, M. and Joss, J.: An Optical Disdrometer for Measuring Size and Velocity of Hydrometeors, J. Atmos. Ocean. Tech., 17, 130–139, https://doi.org/10.1175/1520-0426(2000)017<0130:AODFMS>2.0.CO;2, 2000.

Lopez-Cantu, T., Prein, A. F., and Samaras, C.: Uncertainties in Future U. S. Extreme Precipitation From Downscaled Climate Projections, Geophys. Res. Lett., 47, e2019GL086797, https://doi.org/10.1029/2019GL086797, 2020.

Luke, E. P., Yang, F., Kollias, P., Vogelmann, A. M., and Maahn, M.: New Insights into Ice Multiplication Using Remote-Sensing Observations of Slightly Supercooled Mixed-Phase Clouds in the Arctic, P. Natl. Acad. Sci. USA, 118, e2021387118, https://doi.org/10.1073/pnas.2021387118, 2021.

Maahn, M.: Video In Situ Snowfall Sensor (VISSS) Data Acquisition Software V0.3.1, Zenodo [code], https://doi.org/10.5281/zenodo.7640801, 2023a.

Maahn, M.: Video In Situ Snowfall Sensor (VISSS) Data Processing Library V2023.1.6, Zenodo [code], https://doi.org/10.5281/zenodo.7650394, 2023b.

Maahn, M. and Maherndl, N.: Video In Situ Snowfall Sensor (VISSS) Data for Ny-Ålesund (2021–2022), Pangaea [data set], https://doi.org/10.1594/PANGAEA.958537, 2023.

Maahn, M. and Moisseev, D.: Video In Situ Snowfall Sensor (VISSS) Data for Hyytiälä (2021–2022), Pangaea [data set], https://doi.org/10.1594/PANGAEA.959046, 2023a.

Maahn, M. and Moisseev, D.: VISSS, PIP, and Parsivel Snowfall Observations from Winter 2021/22 in Hyytiälä, Finland, Zenodo [data set], https://doi.org/10.5281/zenodo.8383794, 2023b.

Maahn, M. and Wolter, S.: Hardware design of the Video In Situ Snowfall Sensor v3 (VISSS3), https://doi.org/10.5281/zenodo.10526898 (last access: 19 February 2024), 2024.

Maahn, M., Löhnert, U., Kollias, P., Jackson, R. C., and McFarquhar, G. M.: Developing and Evaluating Ice Cloud Parameterizations for Forward Modeling of Radar Moments Using in Situ Aircraft Observations, J. Atmos. Ocean. Tech., 32, 880–903, https://doi.org/10.1175/JTECH-D-14-00112.1, 2015.

Maahn, M., Turner, D. D., Löhnert, U., Posselt, D. J., Ebell, K., Mace, G. G., and Comstock, J. M.: Optimal Estimation Retrievals and Their Uncertainties: What Every Atmospheric Scientist Should Know, B. Am. Meteorol. Soc., 101, E1512–E1523, https://doi.org/10.1175/BAMS-D-19-0027.1, 2020.

Maahn, M., Cox, C. J., Gallagher, M. R., Hutchings, J. K., Shupe, M. D., and Uttal, T.: Video In Situ Snowfall Sensor (VISSS) Data from MOSAiC Expedition with POLARSTERN (2019–2020), Pangaea [data set], https://doi.org/10.1594/PANGAEA.960391, 2023a.

Maahn, M., Haseneder-Lind, R., and Krobot, P.: Hardware Design of the Video In Situ Snowfall Sensor v2 (VISSS2), Zenodo [data set], https://doi.org/10.5281/zenodo.7640821, 2023b.

Maherndl, N., Maahn, M., Tridon, F., Leinonen, J., Ori, D., and Kneifel, S.: A Riming-Dependent Parameterization of Scattering by Snowflakes Using the Self-Similar Rayleigh–Gans Approximation, Q. J. Roy. Meteor. Soc., 149, 3562–3581, https://doi.org/10.1002/qj.4573, 2023a.

Maherndl, N., Moser, M., Lucke, J., Mech, M., Risse, N., Schirmacher, I., and Maahn, M.: Quantifying riming from airborne data during HALO-(AC)3, EGUsphere [preprint], https://doi.org/10.5194/egusphere-2023-1118, 2023b.

Mandelbrot, B.: How Long Is the Coast of Britain? Statistical Self-Similarity and Fractional Dimension, Science, 156, 636–638, https://doi.org/10.1126/science.156.3775.636, 1967.

Matrosov, S. Y., Ryzhkov, A. V., Maahn, M., and de Boer, G.: Hydrometeor Shape Variability in Snowfall as Retrieved from Polarimetric Radar Measurements, J. Appl. Meteorol. Clim., 59, 1503–1517, https://doi.org/10.1175/JAMC-D-20-0052.1, 2020.