the Creative Commons Attribution 4.0 License.

An all-sky camera image classification method using cloud cover features

Xiaotong Li

Baozhu Wang

Bo Qiu

Chao Wu

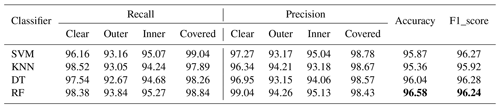

All-sky camera (ASC) images reflect local cloud cover information, and cloud cover is one of the first factors considered in astronomical observatory site selection. Therefore, automatic classification of ASC images plays an important role in astronomical observatory site selection. In this paper, three cloud cover features are proposed for the TMT (Thirty Meter Telescope) classification criteria, namely cloud weight, cloud area ratio and cloud dispersion. After the features are quantified, four classifiers are used to recognize the classes of the images. Four classes of ASC images are identified: “clear”, “inner”, “outer” and “covered”. The proposed method is evaluated on a large dataset of 5000 ASC images taken by an all-sky camera located in Xinjiang (38.19° N, 74.53° E). The method achieves an accuracy of 96.58 % and an F1_score of 96.24 % with a random forest (RF) classifier, which greatly improves the efficiency of automatic processing of ASC images.


Clouds are visible aerosols formed when atmospheric water vapor condenses as it cools, and they are important for the hydrological cycle and energy balance of the Earth (Sodergren et al., 2017). The analysis of clouds provides valuable information for applications such as weather prediction (Westerhuis et al., 2020), climate prediction and astronomical observatory site selection (Cao et al., 2020). For astronomical observatory site selection, cloud cover must be considered, because light emitted by a star is scattered and absorbed by clouds in the atmosphere before it reaches the telescope. Cloud cover therefore determines the quality of the observation data and the time available for astronomical observation. Currently, cloud observations are mainly based on satellite remote sensing (Zhong et al., 2017; Young et al., 2018) and ground-based observations (Nouri et al., 2019). Satellite cloud images capture cloud cover over large areas and directly observe the impact of clouds on the Earth's radiation, which makes them suitable for atmospheric research. However, their resolution is too low to study finer cloud details. Ground-based cloud images cover a smaller area and focus on monitoring cloud thickness and distribution locally, with the advantages of flexible observation sites and rich image information. This paper focuses on the classification of ground-based cloud images.

The all-sky imaging device is an automatic observation instrument that can replace human observers. It uses a digital camera to capture images of half of the celestial sphere, and details of clouds can be obtained from these images through retrieval algorithms. With the emergence of more and more all-sky imaging devices, such as whole-sky imagers (WSIs; Sneha et al., 2020), total-sky imagers (TSIs; Ryu et al., 2019), all-sky imagers (ASIs; Nouri et al., 2018) and all-sky cameras (ASCs; Fa et al., 2019), a large number of all-sky images have been generated, providing a basis for the development of automatic cloud image classification algorithms.

Many researchers have studied feature extraction for different cloud types. Calbo and Sabburg (2008) classified eight types of sky images using statistical features (smoothness, standard deviation, uniformity) and features obtained from the Fourier transform of the images. Heinle et al. (2010) proposed a classification algorithm based on spectral features in RGB color space and texture features extracted by gray-level co-occurrence matrices (GLCMs), which classifies seven different cloud classes with high accuracy. Sun et al. (2009) proposed a method in which the local binary pattern (LBP) operator and the contrast of local cloud image texture are used to classify sky conditions. Li et al. (2016) represented each image as a feature vector generated by calculating the weighted frequency of the microstructures observed in the image. Dev et al. (2015) investigated an improved texton-based classification method that combines color and texture features, achieving an average accuracy of 95 % on the Singapore Whole-Sky Imaging Categories (SWIMCAT) dataset. Gan et al. (2017) worked on image regions of different sizes using a sparse-coding-based method that is effective in terms of localization and computation. Wan et al. (2020) demonstrated that cloud classification results were poor when only texture or color features were used. They therefore extracted a mixture of texture, color and spectral features from color images and fed them into a random forest (Svetnik et al., 2003).

In recent years, convolutional neural networks (CNNs) have performed very well in image classification and have been applied to all-sky image classification. Shi et al. (2017) used the output of shallow convolutional layers of a CNN as cloud features and fed them into a support vector machine (SVM) (Cristianini and Shawe-Taylor, 2000). Ye et al. (2017) extracted multiscale feature maps from a pretrained CNN, encoded them with Fisher vectors and finally sent them to a classifier. Zhao et al. (2019) used a 3D-CNN model to extract the texture features of the images and then output the classification results with a fully connected layer. Zhao et al. (2020) proposed an extractor based on an improved frame difference method that detects features in large images and extracts them as small images, which were then sent to a multi-channel CNN classifier. Liu et al. (2021) proposed a context graph attention network (CGAT) for cloud classification, in which the context graph attention layer learns context attention coefficients and obtains aggregated node features.

All of the algorithms above, both traditional and deep learning methods, classify clouds based on texture, color and spectral features, and all of them address cloud shape classification in local or all-sky images. For astronomical observatory site selection, however, cloud cover is an important factor that must be considered in addition to cloud shape. At present, few researchers have studied classification algorithms based on cloud cover. Existing cloud cover calculation methods only compute the ratio of the cloud-covered area to the effective area of the ASC image and do not describe the cloud cover in detail (Esteves et al., 2021). Therefore, in this paper we propose three cloud cover features that introduce cloud thickness and distribution position into the cloud cover calculation, and we classify ASC images according to the TMT classification criteria. The rest of the paper is organized as follows. Section 2 introduces the dataset and the TMT classification criteria. Section 3 describes the three cloud cover features and the proposed classification method. Section 4 presents the experimental results and their analysis. Finally, Sect. 5 summarizes our contributions.

2.1 Dataset

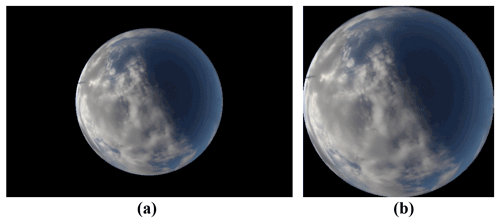

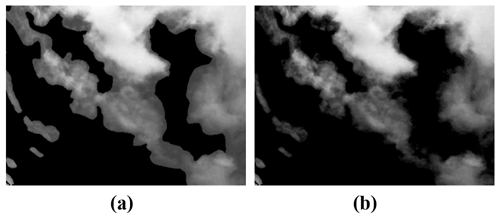

Images used in this paper were taken by an all-sky camera located in Xinjiang, China (38.19° N, 74.53° E), and provided by the Key Laboratory of Optical Astronomy at the National Astronomical Observatories of the Chinese Academy of Sciences. The ASC consists of two parts, a Sigma 4.5 mm fisheye lens and a Canon 700D camera, which together provide the all-sky field of view. Images are taken every 20 min in the daytime and every 5 min at night, and the exposure time is adjusted between 15 and 30 s depending on the phase of the moon. The ASC images are stored in color JPEG format with a resolution of 480×720 pixels. To facilitate subsequent processing, the images are cropped to retain only the central valid area, giving a cropped image size of 370×370 pixels. Note that the captured image is rectangular, but the sky mapped onto it is circular, with the zenith at the center and the horizon at the boundary. Figure 1a shows an example of the original ASC image, and Fig. 1b displays the cropped ASC image.
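The cropping step, and the circular effective area whose pixel count serves as the effective image area in later sections, can be sketched with NumPy. This is a minimal sketch, not the authors' code: it assumes that 480×720 means 480 rows by 720 columns and that the sky circle is centered in the frame, so the 370×370 crop is taken symmetrically. The function names `crop_center` and `effective_area_mask` are hypothetical.

```python
import numpy as np

def crop_center(image: np.ndarray, size: int = 370) -> np.ndarray:
    """Crop a size x size window from the middle of an H x W (x C) image.

    Assumes the circular sky region is centred in the frame; a real
    pipeline may need a calibrated centre instead.
    """
    h, w = image.shape[:2]
    top = (h - size) // 2
    left = (w - size) // 2
    return image[top:top + size, left:left + size]

def effective_area_mask(size: int = 370) -> np.ndarray:
    """Boolean mask of the circular all-sky region inscribed in the crop."""
    radius = size / 2.0
    yy, xx = np.mgrid[0:size, 0:size]
    # Distance of each pixel centre from the image centre.
    dist = np.hypot(yy + 0.5 - radius, xx + 0.5 - radius)
    return dist <= radius

# Example with a synthetic 480 x 720 RGB frame (assumed rows x columns).
frame = np.zeros((480, 720, 3), dtype=np.uint8)
cropped = crop_center(frame)
mask = effective_area_mask(cropped.shape[0])
print(cropped.shape, int(mask.sum()))
```

The mask's pixel count is close to π·185², the area of the inscribed circle.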

We screened the ASC images taken from January 2019 to November 2020 and removed those that were overexposed or taken in bad weather. The screened images were all classified by professionals using a manual cloud identification method (see the next section) similar to that described in the Thirty Meter Telescope (TMT) site testing campaign paper (Skidmore et al., 2008). The resulting dataset contains 5000 ASC images, and we kept the number of images in each class as balanced as possible.

2.2 Image classes

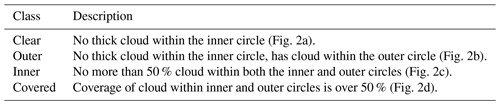

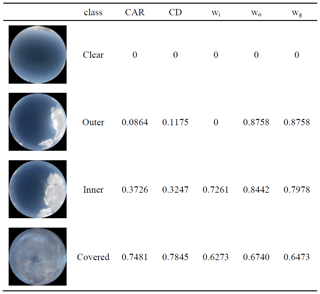

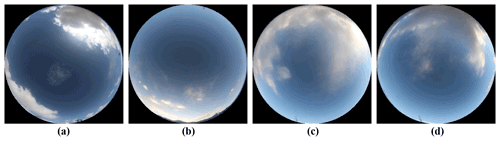

Traditionally, ASC image classification takes cloud shape as the basic element while considering the shape development and internal microstructure of the clouds. However, for astronomical observatory site selection, the cloud cover in the image is the first factor to be considered. Therefore, we classify the ASC images following the method of Skidmore et al. (2008), i.e., the TMT classification criteria. Each ASC image is divided into an inner and an outer circle, corresponding to zenith angles of 44.7° and 65°, respectively, and the cloud cover is then classified as clear, outer, inner or covered according to the thickness and distribution of the clouds in the image. The class definitions are given in Table 1, and typical images of each class are shown in Fig. 2.
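Locating the inner and outer circles in pixel coordinates requires mapping zenith angle to image radius, which depends on the lens projection. The sketch below assumes an equidistant fisheye model (radius proportional to zenith angle) with the horizon on the 185-pixel boundary of the cropped image; the actual Sigma 4.5 mm lens may follow a different projection and would need calibration. All function names are hypothetical.

```python
import numpy as np

def zenith_to_radius(zenith_deg, horizon_radius):
    """Pixel radius of a zenith angle under an equidistant fisheye model.

    Assumption: radius grows linearly with zenith angle and the image
    boundary (radius horizon_radius) is the 90-degree horizon.
    """
    return horizon_radius * np.asarray(zenith_deg, dtype=float) / 90.0

def zone_masks(size, r_inner, r_outer):
    """Masks for the inner circle and the annulus between the two circles."""
    c = size / 2.0
    yy, xx = np.mgrid[0:size, 0:size]
    dist = np.hypot(yy + 0.5 - c, xx + 0.5 - c)
    inner = dist <= r_inner
    ring = (dist > r_inner) & (dist <= r_outer)
    return inner, ring

R = 185.0  # radius of the effective circle in the 370 x 370 crop (assumed)
r_inner = zenith_to_radius(44.7, R)  # inner circle, 44.7 deg zenith angle
r_outer = zenith_to_radius(65.0, R)  # outer circle, 65 deg zenith angle
inner, ring = zone_masks(370, r_inner, r_outer)
print(round(float(r_inner), 1), round(float(r_outer), 1))  # 91.9 133.6
```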

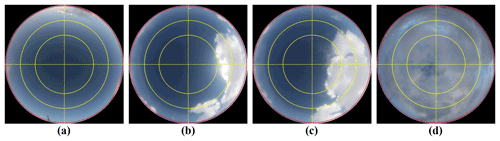

In this section, we first describe the proposed cloud cover features and then introduce the overall process of the ASC image classification method based on these features. The framework is illustrated in Fig. 3.

3.1 Cloud cover features

The current TMT classification criteria are essentially based only on the area ratio and distribution of the cloud regions in the ASC images. They give insufficient consideration to cloud thickness and the density of the distribution and provide no intuitive, reliable quantitative indicators, so the classification results are easily affected by subjective human factors. To address these problems, this paper proposes three cloud features: cloud weight, cloud area ratio and cloud dispersion. Cloud weight indicates the thickness of the cloud, cloud area ratio represents the ratio of the cloud area to the effective area of the image and cloud dispersion reflects the distribution of cloud regions around the center of the ASC image.

3.1.1 Cloud weight

The grayscale value G of any pixel in the ASC image can be regarded as a weighted superposition of the ideal-state grayscale values of multiple elements, such as cloud, sky background and impurities, as shown in Eq. (1). The ideal state means that the grayscale value of a pixel is contributed by only one element, with no other elements involved; such a pixel can be regarded as a “pure pixel” of that element.

$$G = \alpha_1 G_1 + \alpha_2 G_2 + \dots + \alpha_N G_N \tag{1}$$

where G1, G2, …, GN denote the grayscale values of the pure pixels of the different elements, and α1, α2, …, αN are the weights of the contributions of these pure pixels to the actual grayscale value of the image pixel.

For an ASC image, the grayscale value of the cloud region is ideally a superposition of the grayscale values of the sky background and the cloud. Therefore, the grayscale value of the cloud region can be defined by the superposition model as

where G is the true grayscale value of the cloud region; Gsky is the grayscale value of the sky background in the ideal state, which can be obtained from images taken at the same time of day on different dates, since the shooting time of each image is known; Gcloud is the grayscale value of the cloud in the ideal state; and α is the weight of the contribution of the sky background to the actual grayscale value of the pixel in the ideal state. During ASC shooting, the sky background is blocked by clouds. As the cloud becomes thicker, the contribution of the sky background to the grayscale value of the cloud region gradually decreases, and this decrease is most pronounced in the initial stage of thickening. When the cloud thickness reaches a certain level, the grayscale value of the cloud region is contributed almost entirely by the cloud, so α is approximately a monotonically decreasing function of Gcloud that falls steeply at first and then levels off. After extensive experimental verification, the relationship between the two is derived in this paper as

According to Eqs. (2)–(3), Gcloud is calculated as follows:

To verify the validity of the cloud grayscale value obtained by the superposition model, we process the grayscale image of a local cloud region according to Eq. (4); the result is shown in Fig. 4. Figure 4a shows the original grayscale image, and Fig. 4b shows the resulting ideal-state grayscale image. The grayscale value of the processed image is significantly lower in the thin cloud part, which indicates that the influence of the sky background on the cloud grayscale value has been removed and the cloud contour is better reflected. Therefore, the grayscale image processed by the superposition model reflects the grayscale value and the number of cloud pixels more realistically.

Based on the previous derivation, we propose “cloud weight” to indicate the thickness of the clouds. Since clouds and sky differ greatly in color and brightness in ASC images, this section defines the cloud weight by exploring the relationship between pixel grayscale value, cloud reflectivity, light intensity and cloud thickness.

In a grayscale image, the grayscale value represents the brightness of a pixel, which is determined by the reflectivity of the object and the intensity of the incident light. Therefore, the grayscale value of a cloud pixel, Gcloud, is calculated as

$$G_{cloud} = r\,l \tag{5}$$

where Gcloud indicates the grayscale value of the pixel in the ideal state, r represents the reflectivity of the cloud and l is the intensity of the incident light. Generally speaking, the reflectivity r of the cloud is proportional to the cloud thickness w: the thicker the cloud, the higher the reflectivity. According to radiative transfer theory, the relationship between the two is approximately

where λ is the correlation coefficient. The incident light intensity l is determined by the light source, which is the same for the cloud and the sky background. The sky background is simpler than the cloud, and its mapping from the light source to the grayscale image involves no complex function, so the sky background grayscale value Gsky is proportional to the intensity of the light source:

$$G_{sky} = \mu\,l \tag{7}$$

where μ is a positive proportionality coefficient. According to Eqs. (5)–(7), w is calculated as follows.

Since Gcloud and Gsky lie in a fixed grayscale range, and the constant coefficient in the expression for w does not affect the classification result, its value is taken as 0.1 in this paper after extensive experimental verification. The value of w then represents the relative size of the cloud weight, which approximately reflects the thickness of the cloud. To facilitate subsequent calculations and comparisons, we normalize w as follows:

$$w_n = \frac{w - \min(w)}{\max(w) - \min(w)} \tag{9}$$

where wn is the normalized value of cloud weight, and min(w) and max(w) represent the minimum and maximum values of cloud weight in the cloud region of the image, respectively.
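The final expression for w is not reproduced in this text, so the sketch below rests on an explicit assumption: taking Gcloud = r·l, Gsky = μ·l and the simplest reading of "r is proportional to w", namely r = λw (the paper's radiative-transfer relation may well be nonlinear), eliminating l gives w = (μ/λ)·Gcloud/Gsky. The hypothetical `cloud_weight` implements that form, with `k` standing in for the constant, and `normalize_weight` applies the min-max normalization described above. The example also shows why a multiplicative constant such as 0.1 cannot change the normalized weight.

```python
import numpy as np

def cloud_weight(g_cloud, g_sky, k=0.1):
    """Relative cloud weight w = k * G_cloud / G_sky (hypothetical form).

    Assumes r = lambda * w, G_cloud = r * l and G_sky = mu * l, so that
    w = (mu / lambda) * G_cloud / G_sky; k stands in for mu / lambda.
    """
    g_cloud = np.asarray(g_cloud, dtype=float)
    g_sky = np.asarray(g_sky, dtype=float)
    # Guard against division by zero in completely dark sky pixels.
    return k * g_cloud / np.maximum(g_sky, 1e-6)

def normalize_weight(w, cloud_mask):
    """Min-max normalise w over the cloud pixels; non-cloud pixels stay 0."""
    w = np.asarray(w, dtype=float)
    out = np.zeros_like(w)
    vals = w[cloud_mask]
    if vals.size == 0:
        return out
    lo, hi = vals.min(), vals.max()
    if hi > lo:  # if all cloud pixels are equal, leave them at 0
        out[cloud_mask] = (vals - lo) / (hi - lo)
    return out

g_cloud = np.array([[50.0, 120.0], [200.0, 0.0]])  # ideal-state cloud grayscale (toy values)
g_sky = np.full((2, 2), 40.0)                       # clear-sky background grayscale
mask = g_cloud > 0                                  # cloud pixels
wn = normalize_weight(cloud_weight(g_cloud, g_sky), mask)
print(wn)
```

Because min-max normalization divides out any positive scale factor, the choice of `k` only matters for the unnormalized w.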

In this section, cloud weight is defined from grayscale values to reflect the thickness of the cloud. Physically, cloud weight expresses the difference between the brightness of the cloud and that of the sky background, which approximates the thickness of the cloud region. Because cloud weight allows cloud thickness to be evaluated from grayscale images alone, it facilitates the automatic processing of ASC images.

3.1.2 Cloud area ratio

At present, common cloud area ratio calculation methods include ISCCP (Evan et al., 2007), CLAVR-1 (Wang et al., 2013) and CLAVR-X (Kim et al., 2016). ISCCP divides pixels into non-cloud and cloud pixels, assigns them weights of 0 and 1, respectively, and takes the ratio of the weighted sum to the total number of pixels as the result. CLAVR-1 divides the pixels into non-cloud, mixed cloud and cloud and assigns weights of 0, 0.5 and 1, respectively, to calculate the cloud area ratio. CLAVR-X classifies the image pixels into non-cloud, thin cloud, medium cloud and thick cloud and adjusts the weights according to the cloud thickness. All of these models divide the pixels into a limited number of categories and assign a weight to each. Although they consider both cloud thickness and cloud coverage area, their division of cloud thickness is coarse. In the previous section, we obtained a finer, continuous numerical representation of cloud thickness in the form of cloud weight, which allows the weight of each cloud pixel to be set more accurately.
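To make the contrast with discrete schemes concrete, the toy example below applies ISCCP-style (0/1) and CLAVR-1-style (0/0.5/1) class weights to the same four hypothetical pixels: two clear, one mixed and one fully cloudy, with the mixed pixel counted as cloud in the binary scheme. The helper `discrete_cloud_fraction` is illustrative, and CLAVR-X's thickness-dependent weights are not modelled.

```python
import numpy as np

def discrete_cloud_fraction(labels, class_weights):
    """Cloud fraction from per-pixel class labels and fixed class weights.

    labels: integer class index for each pixel in the effective area.
    class_weights: weight assigned to each class index.
    """
    labels = np.asarray(labels)
    weights = np.asarray(class_weights, dtype=float)
    return float(weights[labels].sum() / labels.size)

# ISCCP-style: 0 = non-cloud, 1 = cloud (the mixed pixel counts as cloud).
isccp = discrete_cloud_fraction([0, 0, 1, 1], [0.0, 1.0])
# CLAVR-1-style: 0 = non-cloud, 1 = mixed cloud, 2 = cloud.
clavr1 = discrete_cloud_fraction([0, 0, 1, 2], [0.0, 0.5, 1.0])
print(isccp, clavr1)  # 0.5 0.375
```

The same scene yields different cloud fractions depending on how coarsely thickness is binned, which is the limitation the continuous cloud weight is meant to remove.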

This work refines the weight of each pixel in the ASC images and redefines the calculation model of cloud area ratio. We use the cloud weight derived in the previous section as the weight of each cloud pixel and define the cloud area ratio (CAR) as the ratio of the weighted sum over all cloud pixels to the effective area of the image:

$$\mathrm{CAR} = \frac{1}{N_{all}} \sum_{i=1}^{N_{cloud}} w_i \tag{10}$$

where Ncloud is the total number of cloud pixels in the image, wi denotes the cloud weight of the ith cloud pixel and Nall represents the total number of pixels in the effective area of the ASC image.
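With continuous weights, the cloud area ratio reduces to a weighted sum over cloud pixels divided by the number of effective-area pixels. A minimal NumPy sketch, assuming the normalized cloud weights, the cloud mask and the effective-area mask are already available as arrays of the same shape (the function name is hypothetical):

```python
import numpy as np

def cloud_area_ratio(weights, cloud_mask, effective_mask):
    """CAR = (sum of cloud weights over cloud pixels) / N_all."""
    weights = np.asarray(weights, dtype=float)
    region = cloud_mask & effective_mask  # cloud pixels inside the effective area
    n_all = int(effective_mask.sum())     # N_all: pixels in the effective area
    return float(weights[region].sum() / n_all) if n_all else 0.0

w = np.array([[0.2, 0.8], [0.0, 0.5]])
cloud = np.array([[True, True], [False, True]])
effective = np.array([[True, True], [True, False]])
print(cloud_area_ratio(w, cloud, effective))
```

Here the cloud pixel outside the effective area is ignored, so the result is (0.2 + 0.8) / 3.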

3.1.3 Cloud dispersion

Cloud dispersion (CD) is proposed mainly to indicate how strongly the distance between the cloud and the center of the ASC image influences astronomical observation results: the closer the cloud is to the center, the greater the influence, and cloud dispersion quantifies this effect. Generally speaking, cloud dispersion has three determinants: cloud area, cloud thickness and the distance between the cloud and the center of the image. Therefore, we divide the image into N regions, namely x1, x2, …, xN. The area of xi is denoted as si, the average cloud thickness is denoted as wi and the absolute distance between the center of the region and the center of the ASC image is di. To facilitate the calculation, we convert the absolute distance into the relative distance di/R, where R represents the radius of the effective area of the image. The cloud dispersion of an ASC image can then be represented using Eq. (11):

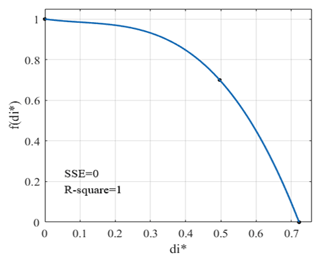

where the influence degree function takes the relative distance as its independent variable. The smaller the relative distance, the greater the influence on the observation results and the larger the value of the influence degree function. The influence degree function therefore decreases monotonically with the relative distance, and the influence on the observation results is greatest when the cloud is located at the image center. Since the classification of ASC images in this paper follows the TMT classification criteria, the influence of the inner and outer circles must be considered when setting the parameters. After many experiments and comparisons with the results of manual observation, we set the influence degree to 1 at the center of the ASC image, 0.7 on the inner circle and 0 on the outer circle. The influence degree function obtained by fitting these values with the MATLAB Curve Fitting Toolbox is shown in Fig. 5. Its expression is given in Eq. (12), where a sine term corrects the fitted quadratic function so that it satisfies the monotonically decreasing condition.

Figure 5. Curve fitting between the relative distance and the influence degree. The relative distance is the distance between the center of the cloud and the center of the ASC image, and the influence degree function takes the relative distance as its independent variable.

In actual calculations, this paper takes each pixel as the calculation unit, which can make the measurement of cloud dispersion accurate to the pixel level. Then the region xi represents the individual image pixel whose area si is 1, and the cloud thickness wi can be expressed by the cloud weight in Sect. 3.1.1. According to Eqs. (11)–(12), the cloud dispersion calculation model based on pixels can be obtained as
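The fitted expression for the influence degree is not reproduced here, so the sketch below substitutes a piecewise-linear function through the three anchor values stated above (1 at the center, 0.7 on the inner circle, 0 on the outer circle). It is a stand-in for the fitted curve, not a copy of it. It further assumes that the pixel-level sum is divided by Nall, as in the cloud area ratio, and that the inner and outer circles lie at relative distances 44.7/90 and 65/90 (an equidistant-projection assumption with R at the horizon). Function names are hypothetical.

```python
import numpy as np

def influence(rho, rho_inner, rho_outer):
    """Stand-in influence-degree function (NOT the fitted Eq. (12)).

    Piecewise linear through the anchor values given in the text:
    1 at the centre, 0.7 on the inner circle and 0 on the outer circle;
    np.interp holds the last value (0) beyond the outer circle.
    """
    return np.interp(rho, [0.0, rho_inner, rho_outer], [1.0, 0.7, 0.0])

def cloud_dispersion(weights, rel_dist, rho_inner, rho_outer, n_all):
    """Pixel-level CD: sum of w_i * influence(d_i / R) over cloud pixels.

    Dividing by n_all (pixels in the effective area) mirrors the cloud
    area ratio and is an assumption, not the paper's stated normalisation.
    """
    weights = np.asarray(weights, dtype=float)
    f = influence(np.asarray(rel_dist, dtype=float), rho_inner, rho_outer)
    return float((weights * f).sum() / n_all)

# Assumed circle positions: equidistant projection, R at the horizon (90 deg).
rho_in, rho_out = 44.7 / 90.0, 65.0 / 90.0
# Two equally thick cloud pixels: one at the zenith, one on the inner circle.
cd = cloud_dispersion([1.0, 1.0], [0.0, rho_in], rho_in, rho_out, n_all=2)
print(cd)
```

Any monotonically decreasing function through the same anchors could be swapped into `influence` without changing `cloud_dispersion`.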

3.2 Classification of ASC images

3.2.1 Pre-processing

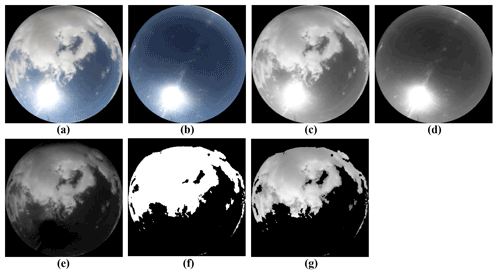

Since the cloud cover features are extracted from grayscale images of the cloud region, the cloud region must first be extracted from the ASC images. Sunlight has a high grayscale value and is easily mistaken for cloud during cloud detection in ASC images, so removing its influence is essential. In this paper, we use a difference method to filter out the sunlight. For a given ASC image, the shooting time and the latitude and longitude of the shooting location are known, so an image taken at the same time of day on a different date can serve as a clear-sky background image with the same sun elevation angle as the original image. Both images are first converted to grayscale, and the difference operation is performed to obtain an image with the sun removed. The cloud detection result is then obtained by binarization. Finally, the cloud region is extracted by multiplying the binary detection result with the grayscale image.
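The difference, binarization and multiplication steps can be sketched in NumPy as follows. The grayscale weights (ITU-R BT.601 luma) and the fixed threshold of 20 gray levels are assumptions, since the paper does not specify its grayscale conversion or binarization rule. Negative differences are clipped to zero so that regions darker than the background are not treated as cloud. Function names are hypothetical.

```python
import numpy as np

def to_gray(rgb):
    """Luma conversion with ITU-R BT.601 weights (an assumed choice)."""
    rgb = np.asarray(rgb, dtype=float)
    return rgb[..., 0] * 0.299 + rgb[..., 1] * 0.587 + rgb[..., 2] * 0.114

def extract_cloud(image_rgb, background_rgb, threshold=20.0):
    """Difference -> binarise -> multiply, following the steps in the text.

    threshold is a hypothetical value; the paper does not state the
    binarisation rule it uses.
    """
    gray = to_gray(image_rgb)
    background = to_gray(background_rgb)
    diff = np.clip(gray - background, 0.0, None)  # sun and static bright spots cancel
    cloud_mask = diff > threshold                 # cloud detection result
    cloud_region = gray * cloud_mask              # keep grayscale only where cloud
    return diff, cloud_mask, cloud_region

image = np.full((2, 2, 3), 50, dtype=np.uint8)
image[0, 0] = 200        # a cloud pixel
image[1, 1] = 250        # the sun, also present in the background image
background = np.full((2, 2, 3), 50, dtype=np.uint8)
background[1, 1] = 250
diff, cloud_mask, cloud_region = extract_cloud(image, background)
print(cloud_mask)
```

Converting to float before subtracting avoids the wrap-around that direct `uint8` subtraction would cause.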

Figure 6. Cloud extraction result. (a) Original ASC image, (b) real clear-sky background image with the same solar elevation angle as (a), (c) grayscale image of (a), (d) grayscale image of (b), (e) difference of (c) and (d), (f) cloud detection result, and (g) cloud extraction result.

Figure 6 illustrates the steps of extracting the cloud region. Figure 6a is the original ASC image, and Fig. 6b is the clear-sky background image with the same sun elevation angle. Figure 6c and d are the grayscale images of Fig. 6a and b, respectively, which have very similar brightness distributions. Figure 6e is the result of subtracting Fig. 6d from Fig. 6c, in which the influence of the sun has been completely eliminated. The cloud region shown in Fig. 6f is then obtained by binarization, and the final extracted cloud region (Fig. 6g) is obtained by multiplying Fig. 6f with Fig. 6c. Traditional methods generally have relatively low cloud detection accuracy around the sun and near the horizon, but the method used in this paper performs better in these regions and also identifies thin cloud regions accurately. In addition, bright noise caused by light refraction is excluded, because it appears at the same position with similar brightness in Fig. 6c and d.

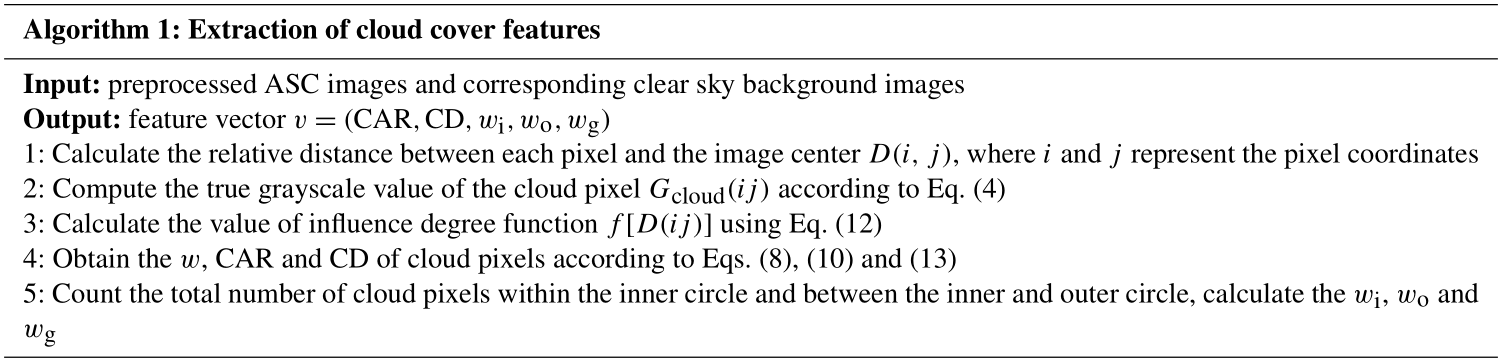

3.2.2 Extraction of cloud cover features

In this paper, we propose three cloud cover features, cloud weight, cloud area ratio and cloud dispersion, which together capture information on the cloud coverage area, thickness and location. The computational models of the three features are designed to match the TMT classification criteria. To improve the accuracy of ASC image classification, we extract five features from each image: cloud area ratio (CAR), cloud dispersion (CD), inner circle cloud weight (wi), outer circle cloud weight (wo) and global cloud weight (wg). Here wi represents the average cloud weight within the inner circle, wo denotes the average cloud weight between the inner and outer circles and wg indicates the average cloud weight within the outer circle. In addition, both CAR and CD are computed within the outer circle. The steps of the feature extraction algorithm are shown in Algorithm 1.
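The three regional weights can be computed from a normalized cloud-weight map and a per-pixel distance-from-center map. This sketch assumes each average is taken over the cloud pixels in the zone, returning 0 for a cloud-free zone, which the text does not state. The radii 91.9 and 133.6 pixels in the example come from an assumed equidistant fisheye projection, and the function name is hypothetical. The five-element feature vector for one image would then be [CAR, CD, wi, wo, wg].

```python
import numpy as np

def regional_weights(wn, cloud_mask, dist, r_inner, r_outer):
    """Return (wi, wo, wg): mean normalised cloud weight per zone.

    wi: inside the inner circle; wo: between the inner and outer circles;
    wg: inside the outer circle. Averaging over cloud pixels only, and
    returning 0 for a cloud-free zone, are assumptions.
    """
    wn = np.asarray(wn, dtype=float)
    dist = np.asarray(dist, dtype=float)
    zones = (
        dist <= r_inner,                       # inner circle
        (dist > r_inner) & (dist <= r_outer),  # annulus between the circles
        dist <= r_outer,                       # whole outer circle
    )
    means = []
    for zone in zones:
        sel = zone & cloud_mask
        means.append(float(wn[sel].mean()) if sel.any() else 0.0)
    return tuple(means)

# Toy input: four cloud pixels at increasing distance (pixels) from the centre.
wn = np.array([0.2, 0.6, 1.0, 0.4])
dist = np.array([10.0, 50.0, 120.0, 150.0])
cloud = np.ones(4, dtype=bool)
wi, wo, wg = regional_weights(wn, cloud, dist, r_inner=91.9, r_outer=133.6)
print(wi, wo, wg)
```

The pixel at 150 pixels lies outside the outer circle and contributes to none of the three averages.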

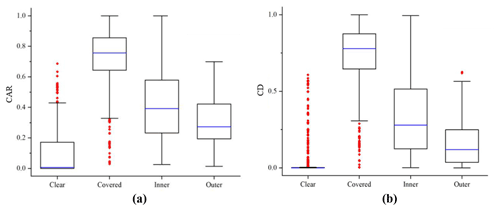

Table 2 shows example values of the cloud cover features for each class of ASC image, normalized for ease of comparison. As the table shows, the cloud weight is determined by the thickness of the cloud in the specified area, so it does not differ markedly between classes. However, cloud weight strongly influences CAR and CD, and the cloud weight in the inner and outer circles affects the classification of ASC images under the TMT classification criteria, so wi, wo and wg are used as classification features in this paper. CAR and CD are both derived from cloud weight and are positively correlated overall, and their values differ clearly between classes. Figure 7 shows the distribution of CAR and CD for the four classes of ASC image. As Fig. 7 illustrates, the feature values of each class are concentrated in a certain range. For the clear and covered classes, CAR and CD are clearly separated, whereas the inner and outer classes overlap. The distribution ranges of CAR and CD are similar for each class, but the overlap between inner and outer is smaller for CD than for CAR, which indicates that including information on the location of clouds distinguishes the image classes more effectively. Therefore, CAR and CD can be used as features to classify the ASC images.

3.2.3 Selection of classifier and training sample

The above features are combined into a feature set and fed into the classifier for training. To test the effectiveness of the proposed cloud cover features for classification, we selected four classifiers: decision tree (DT), support vector machine (SVM), K nearest neighbor (KNN) and random forest (RF). The dataset contains 5000 ASC images of the four classes, of which 4000 were used to train the classifiers and 1000 to test them.
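The paper does not say how the 4000/1000 split was drawn. For a roughly balanced four-class dataset, one reasonable choice is a stratified split, which keeps the class proportions equal in the training and test sets; the sketch below does this with NumPy only, using hypothetical balanced labels and a hypothetical helper `stratified_split`. The resulting feature rows could then be passed to any of the four classifiers, for example scikit-learn's `RandomForestClassifier`.

```python
import numpy as np

def stratified_split(labels, test_fraction=0.2, seed=0):
    """Split indices so every class keeps the same train/test proportion.

    A stratified split is an assumption; the paper only states that
    4000 images were used for training and 1000 for testing.
    """
    labels = np.asarray(labels)
    rng = np.random.default_rng(seed)
    train, test = [], []
    for cls in np.unique(labels):
        idx = rng.permutation(np.flatnonzero(labels == cls))
        n_test = int(round(len(idx) * test_fraction))
        test.extend(idx[:n_test])
        train.extend(idx[n_test:])
    return np.array(train), np.array(test)

# 5000 images in four balanced classes (clear, inner, outer, covered): hypothetical labels.
labels = np.repeat(np.arange(4), 1250)
train_idx, test_idx = stratified_split(labels)
print(len(train_idx), len(test_idx))  # 4000 1000
```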

In this section we evaluate the effectiveness of cloud cover features for the classification of ASC images. Then we analyze and discuss the classification results.

4.1 Experiment results

The classifiers are trained and tested as described in the previous section. To evaluate the classification performance comprehensively, we use accuracy, precision and recall as evaluation metrics. For a given class, the accuracy is calculated from the positive and negative classes as

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

where TP (true positive) is the number of instances of the given class that are correctly classified, TN (true negative) is the number of instances of the remaining classes that are not assigned to the given class, FP (false positive) is the number of instances of the remaining classes misclassified as the given class and FN (false negative) is the number of instances of the given class misclassified as another class. The precision and recall are expressed as

$$\mathrm{Precision} = \frac{TP}{TP + FP}, \qquad \mathrm{Recall} = \frac{TP}{TP + FN}$$

In addition, we use the F1_score, which is expressed as

$$F1\_score = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$$

The final test results of the four classifiers are shown in Table 3. The average accuracy of every classifier exceeds 95 %, indicating that the proposed cloud weight, cloud area ratio and cloud dispersion are effective cloud cover features for classifying ASC images under the TMT classification criteria, which supports automatic processing of the images. The precision and recall of clear and covered exceed 95 %, while those of outer and inner are both below 95 %, indicating that outer and inner images are more easily misclassified. Among the four classifiers, RF performs best, with an average accuracy of 96.58 % and an F1_score of 96.24 %.
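Per-class values such as those in Table 3, and the misclassification rates discussed for Table 5, can be derived from a confusion matrix. The sketch below computes one-vs-rest accuracy, precision, recall and F1_score per class and row-normalizes the matrix. Taking the unweighted mean over classes (macro-averaging) for the "average" figures is an assumption, since the averaging scheme is not stated, and the 2×2 matrix is a toy example rather than data from the paper. Function names are hypothetical.

```python
import numpy as np

def per_class_metrics(cm):
    """One-vs-rest accuracy, precision, recall and F1 for each class.

    cm[i, j] counts images of true class i predicted as class j.
    A class that is never predicted gives a 0/0 precision (NaN).
    """
    cm = np.asarray(cm, dtype=float)
    total = cm.sum()
    tp = np.diag(cm)
    fp = cm.sum(axis=0) - tp  # other classes predicted as this class
    fn = cm.sum(axis=1) - tp  # this class predicted as another class
    tn = total - tp - fp - fn
    accuracy = (tp + tn) / total
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    return accuracy, precision, recall, f1

def row_normalize(cm):
    """Rows sum to 1, so off-diagonal entries are misclassification rates."""
    cm = np.asarray(cm, dtype=float)
    return cm / cm.sum(axis=1, keepdims=True)

cm = np.array([[8, 2], [1, 9]])
acc, prec, rec, f1 = per_class_metrics(cm)
print(acc.mean(), f1.mean())  # macro-averaged accuracy and F1 (assumed convention)
```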

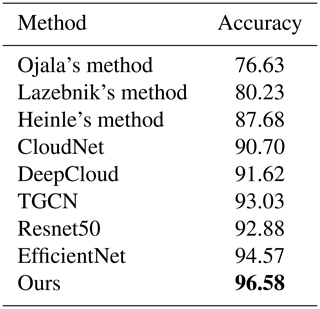

4.2 Comparison experiments

To verify the effectiveness of the method, we compare it with other methods, including traditional and CNN-based methods. Ojala et al. (2002) generate cloud features from the grayscale differences between the center pixel and its neighboring pixels in local regions of the cloud image. Lazebnik et al. (2006) divide the image into many sub-regions, compute a histogram of local features for each one, and finally concatenate them to obtain the spatial feature information of the image. Heinle et al. (2010) extract and combine the color and texture features of the image and classify them with KNN. In addition, we selected relatively recent CNN-based methods: CloudNet (Zhang et al., 2018), DeepCloud (Ye et al., 2017), TGCN (Liu et al., 2020), ResNet50 and EfficientNet.

The classification accuracies of our method and the other methods are presented in Table 4. The CNN-based methods perform better than the traditional methods, because CNNs extract a wide range of image features through successive convolutional operations, which helps distinguish different kinds of images. Our method outperforms all other methods, which indicates that extracting cloud cover features tailored to the classification criteria enhances the discrimination between classes of cloud images.

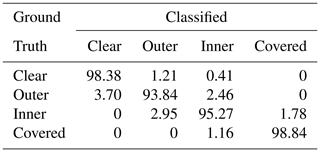

4.3 Performance discussion

To further analyze the misclassifications, we obtained the confusion matrix of the random forest classifier, shown in Table 5. The classification accuracy is higher for covered and clear and lower for outer and inner. The non-zero off-diagonal elements in the table represent the probability of misclassification between classes. Inspection of the misclassified images shows that some outer images are misclassified as clear or inner: outer images with extremely thin clouds in the inner circle are misclassified as inner, and outer images with only scattered thin clouds in the outer circle are misclassified as clear. Inner images are likewise misclassified as outer or covered: in some, the thickness of the clouds in the inner circle leads to an incorrect classification, and in others the clouds are widely distributed but thin, which makes misjudgment likely. Figure 8 displays some misclassified ASC images. These misclassifications arise because the thickness of some cloud regions is incorrectly identified. Although the proposed cloud cover features take cloud thickness into account, there is still room for further improvement.

This paper proposes three cloud cover features based on the TMT classification criteria, namely cloud weight, cloud area ratio and cloud dispersion, and classifies ASC images using these features. The cloud weight indicates the thickness of the clouds, the cloud area ratio represents the extent of cloud coverage and the cloud dispersion reflects the degree to which the clouds influence astronomical observation results. We quantify these features and then use a classifier to identify the classes of ASC images. The method was evaluated on a large dataset of ASC images taken by the all-sky camera located in Xinjiang, China (38.19° N, 74.53° E). The experimental results show that the cloud cover features achieve a highest classification accuracy of 96.58 % and an F1_score of 96.24 %. With this method, experts in astronomical observatory site selection can greatly reduce the time needed to classify ASC images, which greatly improves the efficiency of image processing, and with comprehensive statistical data they can choose the best site.

However, this method still has some shortcomings that need to be improved. The classification accuracy of inner and outer images needs to be increased, and better classification algorithms can be studied for these two types. The sunlight in ASC images affects the brightness of clouds, and how to better eliminate the influence of the sunlight is also a problem that needs further research. In addition, this paper does not study the classification of images in bad weather such as rain and fog, and we will study new classification algorithms with such images in the future.

The data are provided by the National Astronomical Observatories, Chinese Academy of Sciences (NAO-CAS), and publishing the dataset requires prior approval from NAO-CAS. The data will be made available by author Xiaotong Li (865517454@qq.com) and corresponding author Bo Qiu (qiubo@hebut.edu.cn) upon request.

XL and BQ conceived and designed the study. XL performed the experiments and drafted the manuscript. BQ and CW provided constructive suggestions for revising the manuscript.

The contact author has declared that neither they nor their co-authors have any competing interests.

Publisher's note: Copernicus Publications remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

This research has been supported by the National Natural Science Foundation of China (grant no. U1931134).

This paper was edited by Dmitry Efremenko and reviewed by two anonymous referees.

Calbo, J. and Sabburg, J.: Feature extraction from whole-sky ground-based images for cloud-type recognition, J. Atmos. Ocean. Tech., 25, 3–14, https://doi.org/10.1175/2007JTECHA959.1, 2008.

Cao, Z. H., Hao, J. X., Feng, L., Jones, H. R. A., Li, J., and Xu, J.: Data processing and data products from 2017 to 2019 campaign of astronomical site testing at Ali, Daocheng and Muztagh-ata, Res. Astron. Astrophys., 20, 082, https://doi.org/10.1088/1674-4527/20/6/82, 2020.

Cristianini, N. and Shawe-Taylor, J.: An introduction to support vector machines and other kernel-based learning methods, Cambridge University Press, Cambridge, UK, https://doi.org/10.1017/CBO9780511801389, 2000.

Dev, S., Lee, Y. H., and Winkler, S.: Categorization of cloud image patches using an improved texton-based approach, in: 2015 IEEE International Conference on Image Processing (ICIP), Quebec City, QC, Canada, 27–30 September 2015, 422–426, https://doi.org/10.1109/ICIP.2015.7350833, 2015.

Esteves, J., Cao, Y., Silva, N. P. D., Pestana, R., and Wang, Z.: Identification of clouds using an all-sky imager, in: 2021 IEEE Madrid PowerTech, Madrid, Spain, 28 June–2 July 2021, 1–5, https://doi.org/10.1109/PowerTech46648.2021.9494868, 2021.

Evan, A. T., Heidinger, A. K., and Vimont, D. J.: Arguments against a physical long-term trend in global ISCCP cloud amount, Geophys. Res. Lett., 34, 290–303, https://doi.org/10.1029/2006GL028083, 2007.

Fa, T., Xie, W. Y., Wang, Y. R., and Xia, Y. W.: Development of an all-sky imaging system for cloud cover assessment, Appl. Optics, 58, 5516–5524, https://doi.org/10.1364/AO.58.005516, 2019.

Gan, J. R., Lu, W. T., Li, Q. Y., Zhang, Z., Ma, Y., and Yao, W.: Cloud type classification of total-sky images using duplex norm-bounded sparse coding, IEEE J. Sel. Top. Appl., 10, 3360–3372, https://doi.org/10.1109/JSTARS.2017.2669206, 2017.

Heinle, A., Macke, A., and Srivastav, A.: Automatic cloud classification of whole sky images, Atmos. Meas. Tech., 3, 557–567, https://doi.org/10.5194/amt-3-557-2010, 2010.

Kim, C. K., Kim, H. G., Kang, Y. H., Yun, C. Y., and Lee, S. N.: Evaluation of Global Horizontal Irradiance Derived from CLAVR-x Model and COMS Imagery Over the Korean Peninsula, New & Renewable Energy, 12, 13–20, https://doi.org/10.7849/ksnre.2016.10.12.S2.13, 2016.

Lazebnik, S., Schmid, C., and Ponce, J.: Beyond bags of features: Spatial pyramid matching for recognizing natural scene categories, in: Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, New York, NY, USA, 17–22 June 2006, 2169–2178, https://doi.org/10.1109/CVPR.2006.68, 2006.

Li, Q., Zhang, Z., Lu, W., Yang, J., Ma, Y., and Yao, W.: From pixels to patches: a cloud classification method based on a bag of micro-structures, Atmos. Meas. Tech., 9, 753–764, https://doi.org/10.5194/amt-9-753-2016, 2016.

Liu, S., Li, M., Zhang, Z., Cao, X., and Durrani, T. S.: Ground-based cloud classification using task-based graph convolutional network, Geophys. Res. Lett., 47, 1–12, https://doi.org/10.1029/2020GL087338, 2020.

Liu, S., Duan, L. L., Zhang, Z., Cao, X. Z., and Durrani, T. S.: Ground-Based Remote Sensing Cloud Classification via Context Graph Attention Network, IEEE T. Geosci. Remote, 60, 1–11, https://doi.org/10.1109/TGRS.2021.3063255, 2021.

Nouri, B., Kuhn, P., Wilbert, S., Hanrieder, N., Prahl, C., Zarzalejo, L., Kazantzidis, A., Blanc, P., and Pitz-Paal, R.: Cloud height and tracking accuracy of three all sky imager systems for individual clouds, Sol. Energy, 177, 213–228, https://doi.org/10.1016/j.solener.2018.10.079, 2018.

Nouri, B., Wilbert, S., Segura, L., Kuhn, P., Hanrieder, N., Kazantzidis, A., Schmidt, T., Zarzalejo, L., Blanc, P., and Pitz-Paal, R.: Determination of cloud transmittance for all sky imager based solar nowcasting, Sol. Energy, 181, 251–263, https://doi.org/10.1016/j.solener.2019.02.004, 2019.

Ojala, T., Pietikainen, M., and Maenpaa, T.: Multiresolution gray-scale and rotation invariant texture classification with local binary patterns, IEEE Trans. Pattern Anal. Mach. Intell., 24, 971–987, https://doi.org/10.1109/TPAMI.2002.1017623, 2002.

Ryu, A., Ito, M., Ishii, H., and Hayashi, Y.: Preliminary Analysis of Short-term Solar Irradiance Forecasting by using Total-sky Imager and Convolutional Neural Network, in: 2019 IEEE PES GTD Grand International Conference and Exposition Asia (GTD Asia), Bangkok, Thailand, 19–23 March 2019, 627–631, https://doi.org/10.1109/GTDAsia.2019.8715984, 2019.

Shi, C. Z., Wang, C. H., Wang, Y., and Xiao, B. H.: Deep convolutional activations based features for ground-based cloud classification, IEEE Geosci. Remote S., 14, 816–820, https://doi.org/10.1109/LGRS.2017.2681658, 2017.

Skidmore, W., Schock, M., Magnier, E., Walker, D., Feldman, D., Riddle, R., and Els, S.: Using All Sky Cameras to determine cloud statistics for the Thirty Meter Telescope candidate sites, in: SPIE Astronomical Telescopes + Instrumentation, Marseille, France, 27 August 2008, 862–870, https://doi.org/10.1117/12.788141, 2008.

Sneha, S., Padmakumari, B., Pandithurai, G., Patil, R. D., and Naidu, C. V.: Diurnal (24 h) cycle and seasonal variability of cloud fraction retrieved from a Whole Sky Imager over a complex terrain in the Western Ghats and comparison with MODIS, Atmos. Res., 248, 105180, https://doi.org/10.1016/j.atmosres.2020.105180, 2021.

Sodergren, A. H., McDonald, A. J., and Bodeker, G. E.: An energy balance model exploration of the impacts of interactions between surface albedo, cloud cover and water vapor on polar amplification, Clim. Dynam., 51, 1639–1658, https://doi.org/10.1007/s00382-017-3974-5, 2017.

Sun, X. J., Liu, L., Gao, T. C., and Zhao, S. J.: Classification of Whole Sky Infrared Cloud Image Based on the LBP Operator, Daqi Kexue Xuebao, 32, 490–497, https://doi.org/10.13878/j.cnki.dqkxxb.2009.04.010, 2009.

Svetnik, V., Liaw, A., Tong, C., Culberson, J. C., Sheridan, R. P., and Feuston, B. P.: Random Forest: A Classification and Regression Tool for Compound Classification and QSAR Modeling, J. Chem. Inf. Comput. Sci., 43, 1947–1958, https://doi.org/10.1021/ci034160g, 2003.

Wan, X. and Du, J.: Cloud Classification For Ground-Based Sky Image Using Random Forest, Int. Arch. Photogramm. Remote Sens. Spatial Inf. Sci., XLIII-B3-2020, 835–842, https://doi.org/10.5194/isprs-archives-XLIII-B3-2020-835-2020, 2020.

Wang, L. X., Xiao, P. F., Feng, X. Z., Li, H. X., Zhang, W. B., and Lin, J. T.: Effective Compositing Method to Produce Cloud-Free AVHRR Image, IEEE Geosci. Remote S., 11, 328–332, https://doi.org/10.1109/LGRS.2013.2257672, 2013.

Westerhuis, S., Fuhrer, O., Bhattacharya, R., Schmidli, J., and Bretherton, C.: Effects of terrain-following vertical coordinates on simulation of stratus clouds in numerical weather prediction models, Q. J. Roy. Meteor. Soc., 147, 94–105, https://doi.org/10.1002/qj.3907, 2020.

Ye, L., Cao, Z. G., and Xiao, Y.: DeepCloud: Ground-based cloud image categorization using deep convolutional features, IEEE T. Geosci. Remote, 55, 5729–5740, https://doi.org/10.1109/TGRS.2017.2712809, 2017.

Young, A. H., Knapp, K. R., Inamdar, A., Hankins, W., and Rossow, W. B.: The International Satellite Cloud Climatology Project H-Series climate data record product, Earth Syst. Sci. Data, 10, 583–593, https://doi.org/10.5194/essd-10-583-2018, 2018.

Zhang, J., Liu, P., Zhang, F., and Song, Q.: CloudNet: Ground-based cloud classification with deep convolutional neural network, Geophys. Res. Lett., 45, 8665–8672, https://doi.org/10.1029/2018GL077787, 2018.

Zhao, M. Y., Chang, C. H., Xie, W. B., Xie, Z., and Hu, J. Y.: Cloud shape classification system based on multi-channel CNN and improved FDM, IEEE Access, 8, 44111–44124, https://doi.org/10.1109/ACCESS.2020.2978090, 2020.

Zhao, X., Wei, H. K., Wang, H., Zhu, T. T., and Zhang, K. J.: 3D-CNN-based feature extraction of ground-based cloud images for direct normal irradiance prediction, Sol. Energy, 181, 510–518, https://doi.org/10.1016/j.solener.2019.01.096, 2019.

Zhong, B., Chen, W. H., Wu, S. L., Hu, L. F., Luo, X. B., and Liu, Q. H.: A cloud detection method based on relationship between objects of cloud and cloud-shadow for Chinese moderate to high resolution satellite imagery, IEEE J. Sel. Top. Appl., 10, 4898–4908, https://doi.org/10.1109/JSTARS.2017.2734912, 2017.