the Creative Commons Attribution 4.0 License.

Validating physical and semi-empirical satellite-based irradiance retrievals using high- and low-accuracy radiometric observations in a monsoon-influenced continental climate

Yun Chen

Chunlin Huang

Hongrong Shi

Adam R. Jensen

Xiang'ao Xia

Yves-Marie Saint-Drenan

Christian A. Gueymard

Martin János Mayer

Yanbo Shen

Are high-accuracy radiometric observations strictly indispensable for the validation of satellite-based irradiance retrievals, or might low-accuracy observations serve as adequate substitutes? Owing to the scarcity of sites with redundant radiometers, such inquiries have seldom been contemplated, much less subjected to systematic examination; rather, it has been customary to employ all accessible observations during validation, frequently with only minimal quality control. In this investigation, we address this question by validating two distinct sets of satellite-retrieved irradiance – one derived through physical methods, the other through statistical means – against collocated high- and low-accuracy observations. Departing from the majority of validation studies, which rely exclusively upon an array of performance measures, we advocate and implement a rigorous distribution-oriented validation framework, yielding more profound insights and more comprehensive conclusions. Beyond the validation methodology itself, the dataset utilized in this study is noteworthy in its own regard: It incorporates radiometric observations from the newly established and first-ever Baseline Surface Radiation Network (BSRN) station situated within a monsoon-influenced continental climate (specifically, the Dwa Köppen classification), in conjunction with irradiance retrievals from the Fengyun-4B geostationary satellite, which are likewise new to the community. The accumulated evidence strongly suggests that the use of low-accuracy observations as a reference in validating irradiance retrievals may entail significant risks, because the discrepancies they introduce can be of a magnitude comparable to the commonly accepted margins of error or improvement (approximately several W m−2 or a few percent) upon which numerous scientific assertions depend.

Gridded irradiance retrieved from onboard imagers of geostationary satellites constitutes the foundation of numerous endeavors in solar energy meteorology, among which solar resource assessment and forecasting stand out as the most representative (Yang and Kleissl, 2024). Solar resource assessment aims to quantify the long-term (over years or decades) availability and variability of irradiance, thereby enabling the estimation of the energy yield and the evaluation of the economic feasibility and bankability of solar energy projects prior to their deployment. Given that the establishment and maintenance of dense radiometer networks over extensive regions and prolonged durations has never been a practical undertaking, and given that modeled irradiance (such as that derived from numerical weather predictions and reanalysis) remains constrained by its limited accuracy, the use of satellite-retrieved irradiance is the industry-standard practice for such assessments (Sengupta et al., 2024; Yang et al., 2022b). Solar forecasting – across varying short-term scales (from seconds to days) – is indispensable for grid operators, who must reliably formulate generation schedules accounting for the variable and intermittent nature of solar power output. In particular, within the intra-day window of 0–4 h, the advection of (normalized) satellite-retrieved irradiance fields has been consistently demonstrated to be the most reliable strategy (Yang et al., 2022a; Sweeney et al., 2020). In these applications of solar energy meteorology, the assurance of quality in satellite-retrieved irradiance products is of paramount importance – a necessity that has given rise to a substantial body of validation studies (e.g., Elias et al., 2024; Wandji Nyamsi et al., 2023; Qin et al., 2022; Kosmopoulos et al., 2018).

Irradiance retrieval from top-of-the-atmosphere reflectance and brightness temperature measurements can be categorized into physical and data-driven types, depending on whether the retrieval process incorporates radiative transfer (Huang et al., 2019). Since executing line-by-line radiative transfer calculations for each individual pixel and time stamp is computationally prohibitive, accelerated calculations, such as lookup tables (e.g., Huttunen et al., 2016; Lefèvre et al., 2013) or parameterizations (e.g., Huang et al., 2018; Xie et al., 2016), are invariably adopted in practice. Although physical retrieval methods are firmly grounded in established theory (Liou, 2002), they are not without difficulties; for instance, persistent challenges that lead to the geographic displacement of clouds (e.g., parallax) remain unresolved. On the other hand, data-driven retrieval techniques, which can be further subdivided into semi-empirical (e.g., Huang et al., 2025; Chen et al., 2022; Perez et al., 2002) and machine learning (e.g., Shi et al., 2025, 2023; Verbois et al., 2023) methods, offer ease of implementation and can, under favorable conditions, achieve accuracy rivaling that of physical methods. However, such accuracy is contingent upon the quantity and quality of ground-based observations and cannot be assured a priori. Indeed, data-driven techniques often leverage large datasets of lower quality owing to limited instrument maintenance, so that the model may learn measurement errors, such as those caused by soiling or calibration drift. Documented instances also exist where machine learning models, owing to overfitting, have produced retrievals exhibiting unphysical values or behavior (Yang et al., 2022c). In any case, these inherent limitations of existing retrieval techniques underscore the necessity of formal validation of satellite-derived irradiance.

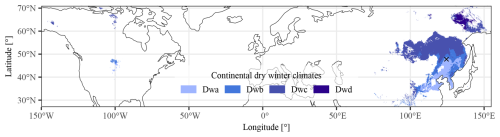

Figure 1. The geographical distribution of the continental dry winter climates. The location of the Qiqihar station is marked with a black cross.

The quality of satellite-derived irradiance varies with location, time period, and atmospheric conditions. Consequently, numerous validation efforts partition data samples into groups representative of different climate classes, seasons, and sky conditions. This practice requires extensive data support, for which the Baseline Surface Radiation Network (BSRN; Driemel et al., 2018; Ohmura et al., 1998), the world's largest research-grade surface radiation monitoring network, has hitherto been an indispensable and reliable source (Bright, 2019). BSRN began collecting surface radiation data in 1992 with nine initial stations. Since then, the station list has been dynamic, with new stations added and older ones closed; the present tally of active stations stands at 43. One of the initial aims of the network was to achieve coverage across major climate zones (Ohmura et al., 1998). However, as revealed in a global overview of multi-component solar radiometer monitoring stations (Jensen et al., 2025), the geographical distribution of climate zones, and thus of radiometric stations, is highly uneven. Consequently, establishing BSRN stations in rarer climate zones has proven particularly challenging. For instance, Fig. 1 shows the geographical distribution of the continental dry winter climates, denoted by the Köppen symbol “Dw.” Until recently, its four subzones (Dwa to Dwd) remained entirely unrepresented within BSRN, which precluded systematic investigation under such climatic regimes. This gap has now been partially filled by the establishment of a new BSRN station in Qiqihar, China, in October 2023, a development that permits, for the first time, rigorous inquiry into surface radiation characteristics within continental dry winter climates, including the validation of satellite-retrieved irradiance products.

Indeed, ground-based radiometric observations form an indispensable element in the validation of satellite-retrieved irradiance. The conventional validation methodology consists of computing aggregate performance measures, such as mean bias error (MBE), root mean square error (RMSE), or correlation, between retrievals and observations. However, these overall performance statistics suffer from well-documented limitations, such as interdependence or incompleteness, rendering conclusions drawn therefrom inherently questionable (Tian et al., 2016). To give perspective, two distinct sets of retrievals may yield identical MBE or RMSE values while exhibiting radically divergent temporal characteristics; this phenomenon is known as underdetermination (Tian et al., 2016) and has been more extensively documented in the forecasting literature (e.g., Yang and Perez, 2019; Vallance et al., 2017). Therefore, some have recommended using a more rigorous and systematic approach known as the distribution-oriented validation framework (Murphy and Winkler, 1987), which is receiving increasing acceptance in the solar community (Yang and Bright, 2020). The key philosophy of the distribution-oriented validation framework is to scrutinize the joint, marginal, and conditional distributions of prediction and observation, which allows visual and quantitative assessments of different aspects of quality, such as bias, association, calibration, refinement, or discrimination, through error decompositions (Yang et al., 2020). These aspects of quality possess clearly defined statistical complementarity, thereby offering more substantive insight than traditional measure-oriented validation, which often proves both subjective and inconclusive. To that end, this work employs the distribution-oriented validation framework, thereby furnishing a more comprehensive and analytically sound assessment of the irradiance products under examination.
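The underdetermination problem can be illustrated with a small synthetic experiment. The sketch below is hypothetical (the series, the ±40 W m−2 errors, and the helper names are invented for illustration): two sets of "retrievals" share exactly the same collection of errors, arranged in different temporal orders. Because MBE and RMSE depend only on the collection of errors, not on their order, both sets score identically, whereas the lag-1 autocorrelation of the errors, a simple temporal property, differs completely.

```python
import numpy as np

def mbe(x, y):
    """Mean bias error of retrievals x against observations y."""
    return float(np.mean(x - y))

def rmse(x, y):
    """Root mean square error of retrievals x against observations y."""
    return float(np.sqrt(np.mean((x - y) ** 2)))

def lag1_error_autocorrelation(x, y):
    """Lag-1 autocorrelation of the error series x - y (a temporal property)."""
    err = x - y
    return float(np.corrcoef(err[:-1], err[1:])[0, 1])

# Synthetic (hypothetical) observations: 48 hourly values following a daily cycle.
t = np.arange(48)
y = 500.0 + 300.0 * np.sin(2.0 * np.pi * t / 24.0)

# Identical set of errors, arranged in two different temporal orders.
errors = np.tile([40.0, -40.0], 24)   # sign flips every hour
x_alternating = y + errors
x_persistent = y + np.sort(errors)    # 24 h of underestimation, then 24 h of overestimation

results = {
    name: (mbe(x, y), rmse(x, y), lag1_error_autocorrelation(x, y))
    for name, x in [("alternating", x_alternating), ("persistent", x_persistent)]
}
for name, (b, r, rho) in results.items():
    print(f"{name:12s} MBE={b:6.2f}  RMSE={r:6.2f}  lag-1 error autocorrelation={rho:5.2f}")
```

Both rows report the same MBE and RMSE, yet one error series is strongly anti-persistent and the other strongly persistent, which is exactly the kind of difference that a distribution-oriented (or at least time-aware) assessment is meant to expose.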

Beyond questions of validation methodology lies a more fundamental concern regarding the quality of the ground-based radiometric observations themselves. Since these observations serve as the ground truth, their uncertainties and biases, which are typically unaccounted for, inevitably undermine confidence in any derived conclusions. Although the manufacturer-reported daily uncertainty of secondary-standard (i.e., Class A) radiometers is below 2 %, such a single number cannot fully represent the uncertainties encountered under actual field conditions. It has been reported that the differences among regularly calibrated thermopile radiometers can reach up to ±17 % at high zenith angles and under cloudy skies (Habte et al., 2016). Moreover, because deploying redundant radiometers for repeated measurements is impractical, rigorous quality control (QC) procedures are the sole means of ensuring the validity of the ground truth. However, most operational radiometric sites measure only the global irradiance component, not the individual diffuse and beam components. This limitation renders certain QC tests inapplicable, most notably the three-component closure test, which verifies the agreement between the measured global irradiance and the sum of its diffuse and beam constituents. These considerations lead to a critical epistemological question: To what extent can observed validation deviations be attributed to limitations in the ground-based observations themselves, rather than to deficiencies in the satellite retrievals? This distinction is not merely technical but fundamental to the proper interpretation of validation results (see Sect. 10.4 of Sengupta et al., 2024).

Guided by the above preliminaries, the present investigation has three principal objectives: (1) to conduct a systematic validation of physical and semi-empirical satellite irradiance retrievals under the monsoon-influenced dry-winter hot-summer continental climate (i.e., the Dwa Köppen climate), a climatic regime markedly underrepresented in existing studies; (2) to demonstrate the methodological superiority of distribution-oriented validation over conventional measure-oriented approaches; and (3) to quantitatively assess the propagation of observational uncertainties from ground-based radiometers into validation outcomes. The paper is organized as follows: Sect. 2 describes the datasets, their quality control procedures, and the formal validation methodology. Section 3 presents the validation outcomes and discusses the implications of ground-truth quality for gridded irradiance validation. Section 4 synthesizes the principal findings and their broader implications.

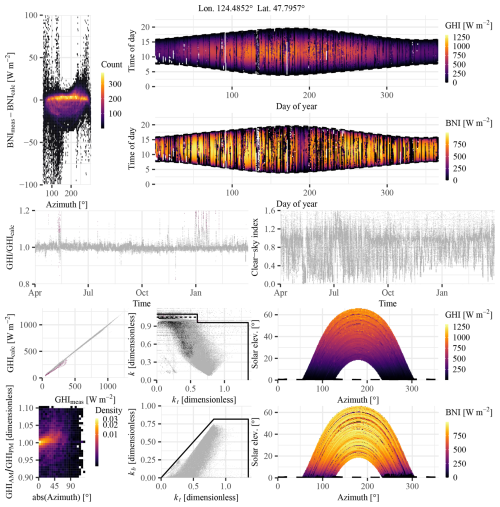

2.1 Data description

A total of four datasets are involved herein, among which two consist of ground-based radiometric observations, and the other two are satellite-retrieved irradiance. The two sets of ground-based observations are collocated. One set of ground-based observations comes from the BSRN Qiqihar (QIQ) station, which employs secondary-standard radiometers and represents high-standard radiometric practices. In contrast, the other set of ground-based observations comes from an operational station of the China Meteorological Administration (CMA), which uses a pyranometer from a Chinese manufacturer that is potentially associated with higher uncertainties than the secondary-standard ones. Notation-wise, we denote the high-accuracy set of observations as yH, and the low-accuracy set of observations as yL.

The two sets of satellite-retrieved irradiance are both derived from images captured by the Advanced Geostationary Radiation Imager (AGRI) on board the Fengyun-4B (FY-4B) satellite, which is the second of the latest-generation Chinese geostationary meteorological satellite series. To exemplify the physical retrieval algorithms, the official FY-4B irradiance product of the National Satellite Meteorological Center (NSMC) of CMA is considered. As for the statistical (or semi-empirical) retrieval, the product of Huang et al. (2023), which is based on a modified version of the Heliosat-2 method of Rigollier et al. (2004), is used without loss of generality. Similarly to the case of ground-based observations, the two sets of satellite-retrieved irradiance are denoted as xP and xS, respectively, with the subscripts denoting “physical” and “statistical.” In short, this work seeks to validate xP and xS using yH and yL, separately. The period of validation is one year from April 2024 to March 2025.

2.1.1 High-accuracy observations from the BSRN QIQ station

The BSRN QIQ station (47.7957° N, 124.4852° E, 170 m) is located in the monsoon-influenced dry-winter hot-summer continental climate, which has a symbol of “Dwa” under the Köppen climate classification. Given the geographical distribution of the Dwa climate, which is mostly in northeastern Asia, the surface radiation characteristics based on high-quality ground-based radiometers have rarely, if ever, been formally reported, thereby making this report unique and valuable.

Following the BSRN requirements, QIQ measures all three downward surface shortwave radiation components, as well as the downward longwave radiation, with a sampling rate of 1 s. For the global horizontal irradiance (GHI) and diffuse horizontal irradiance (DHI), CMP22 pyranometers from Kipp & Zonen are used, whereas a CHP1 pyrheliometer mounted on a SOLYS2 sun tracker is used to measure the beam normal irradiance (BNI). All radiometers are calibrated and regularly maintained. For more technical details of the QIQ station, the reader is referred to the recent work by Liu et al. (2025). It should be highlighted that the CMP22 pyranometers are ventilated but not heated, due to the high thermal offset observed during the winter of 2023 (the heaters have been switched off since then).

2.1.2 Low-accuracy observations from the CMA operational station

Compared to basic meteorological variables, such as temperature or precipitation, solar irradiance does not have the same degree of impact on daily human activities. Consequently, radiometers have hitherto been regarded as an additional feature in weather stations. For instance, CMA maintains more than 90 000 manned and unmanned weather stations, but only about 100 of them have radiometers installed (Yang et al., 2022b). Among these CMA operational radiometric stations, only a handful measure all three shortwave components, whereas most only measure GHI, including the Fuyu County Meteorological Bureau, within which the QIQ station is located. This GHI dataset, therefore, serves as an alternative version of ground truth for validating gridded irradiance retrievals.

The radiometer employed by the Fuyu Bureau is a DFN1 thermopile pyranometer by Huatron Environment, a Chinese brand. DFN1 is a first-class (i.e., Class B) pyranometer that has acquired the CMA's special technical equipment license for meteorological observation. According to the manufacturer, DFN1 has a spectral range of 300–3000 nm (200–3600 nm for CMP22), a response time of < 20 s (< 5 s for CMP22), and a temperature response of 2 % (0.5 % for CMP22). The data are sampled every 2 s but logged only as 1 min averages. The DFN1 at the Fuyu Bureau is also equipped with an SRC228 ventilation unit. Through the CMA standard data and communication protocol, the 1 min data are transmitted to the central server in near real time.

2.1.3 Physical irradiance retrievals from NSMC

The FY-4B satellite was successfully launched on 3 June 2021; it was located at 123.5° E on 10 June 2021, relocated to 133° E from 11 April 2022 to 31 January 2024, and moved to its final position of 105° E on 5 March 2024. The NSMC official FY-4B irradiance product is developed by the Algorithm Working Group and consists of all three surface shortwave components. The retrieval algorithm is of a physical type which, as mentioned in the introduction, is based on radiative transfer: a lookup table is built from radiative transfer calculations that account for all important physical processes in the shortwave range, including multiple scattering, absorption, and thermal radiation. More specifically, the observations from channels 1 to 6 of the FY-4B AGRI and the FY-4B L2 snow cover product are used to obtain the instantaneous state variables of the atmosphere and the surface, mainly atmospheric extinction parameters and surface reflectance. After determining the instantaneous state of the atmosphere and the surface, irradiance is estimated from the pre-established lookup table, taking into account the sun–surface–satellite geometry.

The FY-4B AGRI has 15 channels with native resolutions ranging from 0.5 to 4 km at nadir, depending on the channel. Since the retrieval algorithm uses multiple channels, the spatial resolution of the final product follows that of the lowest-resolution channel, which is 4 km. Temporally, AGRI completes a full-disc scan every 15 min. The exact instant at which AGRI scans the pixel corresponding to the QIQ station is not considered in this work; instead, the time stamps follow the scan intervals. This slight asynchrony is unlikely to cause major offsets during validation, especially after hourly averages are formed.

2.1.4 Statistical irradiance retrievals using the modified Heliosat-2 method

Heliosat-2 is a well-known statistical irradiance retrieval technique that rests primarily on three components: (1) a clear-sky model, (2) a method to estimate the cloud index from satellite reflectance observations, and (3) a mapping function from cloud index (ν) to clear-sky index (κ). Once κ is obtained, GHI is reconstructed by multiplying κ by the clear-sky GHI. The reader is referred to Appendix A for an extended explanation of the algorithm. In the work of Huang et al. (2023), the clear-sky GHI is estimated via the REST2 model, the cloud index is estimated from the Fengyun-4A (FY-4A) level-1 reflectance of the visible channel (0.65 µm), and the coefficients of the ν-to-κ mapping are determined based on 38 stations operated by the Chinese Ecosystem Research Network. Since only visible-channel observations are involved, the spatial resolution of the product is 0.5 km, which reveals more granular spatial features without sacrificing much accuracy relative to a benchmark product, namely the Himawari-8 product developed by the Japan Aerospace Exploration Agency (Huang et al., 2023). On the other hand, cloud shadows and cloud-edge reflectances are more pronounced in high-resolution retrievals and can disturb the calculation of the cloud index.
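The three Heliosat-2 components can be sketched as follows. This is a minimal illustration under stated assumptions, not the modified method of Huang et al. (2023): the cloud index is written in its usual Heliosat form, ν = (ρ − ρg)/(ρc − ρg), with apparent albedo ρ, ground albedo ρg, and cloud albedo ρc (all hypothetical inputs here), and the piecewise ν-to-κ mapping uses the original coefficients of Rigollier et al. (2004). The modified method refits the mapping to the 38 CERN stations, and those fitted coefficients are not reproduced here. The clear-sky GHI is simply passed in; in the actual product it comes from REST2.

```python
import numpy as np

def cloud_index(rho, rho_ground, rho_cloud):
    """Heliosat-type cloud index: position of the apparent albedo between ground and cloud albedo."""
    return (np.asarray(rho, dtype=float) - rho_ground) / (rho_cloud - rho_ground)

def clear_sky_index(nu):
    """Piecewise nu-to-kappa mapping with the ORIGINAL Heliosat-2 coefficients
    (Rigollier et al., 2004); the modified method refits these coefficients."""
    nu = np.asarray(nu, dtype=float)
    quadratic = 2.0667 - 3.6667 * nu + 1.6667 * nu ** 2
    return np.where(nu < -0.2, 1.2,
                    np.where(nu < 0.8, 1.0 - nu,
                             np.where(nu < 1.1, quadratic, 0.05)))

def heliosat2_ghi(rho, rho_ground, rho_cloud, ghi_clear):
    """GHI = kappa * clear-sky GHI; ghi_clear would come from a clear-sky model such as REST2."""
    return clear_sky_index(cloud_index(rho, rho_ground, rho_cloud)) * ghi_clear

# Hypothetical albedos: clear (nu = 0), partly cloudy (nu = 0.5), overcast (nu = 1.2).
print(heliosat2_ghi(rho=[0.10, 0.35, 0.70], rho_ground=0.10, rho_cloud=0.60, ghi_clear=800.0))
```

The three branches give κ ≈ 1, 0.5, and 0.05, i.e., roughly 800, 400, and 40 W m−2. The sketch also makes the calibration argument of the next paragraph concrete: because ν is a ratio of albedo differences, a multiplicative calibration error in ρ, ρg, and ρc cancels out.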

In this work, the same retrieval technique is used to obtain GHI for the grid cell collocated with the QIQ station, the only difference being the raw data source, which is FY-4B instead of FY-4A. As noted by Huang et al. (2025), one advantage of semi-empirical retrieval methods is their independence from satellite calibration information, which means that the previous algorithm can be applied “as is” without modification. It should be noted, however, that FY-4A is an experimental satellite with known fluctuations in radiometric performance and an irregular observation schedule (Zhong et al., 2021). In contrast, FY-4B demonstrates superior calibration stability, comparable to that of the GOES-R and Himawari-8/-9 satellites. The statistically retrieved irradiance also follows the observation schedule of FY-4B, which has a regular 15 min interval.

2.2 Data quality control and temporal alignment

To ensure the soundness of the conclusions drawn from the validation exercise, the ground-based observations must first undergo quality control. Additionally, given the different temporal resolutions of the datasets, they must be aggregated and aligned in time. These two aspects are described in this section.

2.2.1 Quality control routine for irradiance

The optimal QC procedure strikes a balance between the number of valid data points wrongly rejected and the number of anomalous data points wrongly retained. In this work, the QC checks used by the International Energy Agency (IEA) PVPS Task 16 team are adopted (Forstinger et al., 2021); these are based on the BSRN recommended routine (Long and Shi, 2008) with notable additions. Several well-known normalized indexes (or k indexes) are first defined: the clearness index $k_t = G_h/E_0$, the beam transmittance $k_b = B_n/E_{0n}$, the clear-sky index $k_c = G_h/G_{hc}$, and the diffuse fraction $k = D_h/G_h$, where $G_h$, $D_h$, and $B_n$ denote GHI, DHI, and BNI, respectively; $G_{hc}$ is the clear-sky GHI; and $E_0 = E_{0n}\cos Z$ is the extraterrestrial GHI, with $E_{0n}$ being the extraterrestrial normal irradiance and $Z$ the solar zenith angle.

The k indexes are normalized versions of the various irradiance components. They are also commonly used in radiation modeling, where normalization removes the yearly and diurnal cycles of irradiance that would otherwise interfere with model fitting (Yang and Kleissl, 2024). Based on the k indexes, several QC tests can be formulated:

- $k_b < k_t$, for $G_h > 50$ W m−2, $k_t > 0$, and $k_b > 0$
- , for $G_h > 50$ W m−2 and $k_b > 0$
- $k_t < 1.35$, for $G_h > 50$ W m−2
- $k < 1.05$, for $Z < 75^\circ$ and $G_h > 50$ W m−2
- $k < 1.10$, for $Z \geq 75^\circ$ and $G_h > 50$ W m−2
- $k < 0.96$, for $k_t > 0.6$, $Z < 85^\circ$, and $G_h > 50$ W m−2

When the conditions specified by these tests are not met, the corresponding data points are flagged as “anomalous.”
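The k indexes and the k-index tests listed above can be sketched in a few lines of NumPy. This is a minimal, hypothetical sketch: the function names `k_indexes` and `k_index_flags` are illustrative and not taken from Forstinger et al. (2021); the usage example assumes a constant extraterrestrial normal irradiance of 1361 W m−2, whereas $E_{0n}$ actually varies with the Earth–Sun distance; and the second test is omitted because its threshold is not given in the list above. The simple clear-sky GHI $G_{hc} = 0.8E_0$ adopted in this section is used for $k_c$.

```python
import numpy as np

def k_indexes(ghi, dhi, bni, e0n, zenith_deg):
    """Clearness index kt, beam transmittance kb, clear-sky index kc, diffuse fraction k."""
    ghi, dhi, bni = (np.asarray(a, dtype=float) for a in (ghi, dhi, bni))
    e0 = e0n * np.cos(np.radians(zenith_deg))   # extraterrestrial GHI, E0 = E0n cos Z
    ghi_clear = 0.8 * e0                         # simple clear-sky GHI, Ghc = 0.8 E0
    with np.errstate(divide="ignore", invalid="ignore"):
        return {"kt": ghi / e0, "kb": bni / e0n, "kc": ghi / ghi_clear, "k": dhi / ghi}

def k_index_flags(ghi, dhi, bni, e0n, zenith_deg):
    """True where any implemented k-index test fails (second test omitted, see text)."""
    ki = k_indexes(ghi, dhi, bni, e0n, zenith_deg)
    kt, kb, k = ki["kt"], ki["kb"], ki["k"]
    z = np.asarray(zenith_deg, dtype=float)
    bright = np.asarray(ghi, dtype=float) > 50.0   # all tests apply only for Gh > 50 W m-2
    fail = bright & (kt > 0) & (kb > 0) & ~(kb < kt)
    fail |= bright & ~(kt < 1.35)
    fail |= bright & (z < 75.0) & ~(k < 1.05)
    fail |= bright & (z >= 75.0) & ~(k < 1.10)
    fail |= bright & (kt > 0.6) & (z < 85.0) & ~(k < 0.96)
    return fail

# Hypothetical 1 min samples: consistent, diffuse exceeding global, and a dim sample.
flags = k_index_flags(ghi=[706.2, 706.2, 30.0], dhi=[100.0, 800.0, 30.0],
                      bni=[700.0, 700.0, 0.0], e0n=1361.0, zenith_deg=[30.0, 30.0, 80.0])
print(flags)
```

Only the second sample is flagged, because its diffuse fraction exceeds 1.05; the third is exempt because $G_h \le 50$ W m−2. Writing each test as "condition applies and check does not hold" keeps missing values (NaN) flagged, since any comparison with NaN is false.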

Besides the k index tests, the extremely rare limits (ERL) test and the three-component closure test are also part of the QC routine. They are:

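- $-2 < G_h < 1.2\,E_{0n}\cos^{1.2} Z + 50$ (W m−2)
- $-2 < D_h < 0.75\,E_{0n}\cos^{1.2} Z + 30$ (W m−2)
- $-2 < B_n < 0.95\,E_{0n}\cos^{0.2} Z + 10$ (W m−2)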

and

- $|\delta| \leq 8$ %, for $Z < 75^\circ$ and $G_h > 50$ W m−2
- $|\delta| \leq 15$ %, for $Z \geq 75^\circ$ and $G_h > 50$ W m−2

where δ is the fractional (decimal) difference of the closure relationship $G_h = D_h + B_n\cos Z$. Last but not least, the tracker-off test is given by:

-

, for Z<85°

-

, for Z<85°

It should be noted that although Ghc and Bnc can be estimated via a clear-sky model, they are defined as Ghc=0.8E0 and herein, with Dhc=0.165Ghc.
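The three-component closure test can be sketched in the same style. The exact expression for δ is not spelled out above; this sketch assumes δ = $G_h/(D_h + B_n\cos Z) - 1$, a common way of writing the relative closure error, and applies the 8 % and 15 % limits for $Z < 75^\circ$ and $Z \geq 75^\circ$, respectively. The function name `closure_flags` and the sample values are hypothetical.

```python
import numpy as np

def closure_flags(ghi, dhi, bni, zenith_deg):
    """True where the three-component closure test fails.

    delta is ASSUMED here to be GHI / (DHI + BNI cos Z) - 1; limits are
    8 % for Z < 75 deg and 15 % otherwise, applied only when GHI > 50 W m-2.
    """
    ghi, dhi, bni, z = (np.asarray(a, dtype=float) for a in (ghi, dhi, bni, zenith_deg))
    component_sum = dhi + bni * np.cos(np.radians(z))
    with np.errstate(divide="ignore", invalid="ignore"):
        delta = ghi / component_sum - 1.0
    limit = np.where(z < 75.0, 0.08, 0.15)
    return (ghi > 50.0) & ~(np.abs(delta) <= limit)

# Hypothetical samples: closed at Z = 30 deg, 27 % excess at Z = 30 deg, 12 % excess at Z = 80 deg.
print(closure_flags(ghi=[706.2, 900.0, 210.0], dhi=[100.0, 100.0, 100.0],
                    bni=[700.0, 700.0, 500.0], zenith_deg=[30.0, 30.0, 80.0]))
```

The third sample shows why the limit is relaxed at low sun: a 12 % mismatch would fail the 8 % criterion but passes the 15 % one, reflecting the larger cosine-response and pointing uncertainties at high zenith angles.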

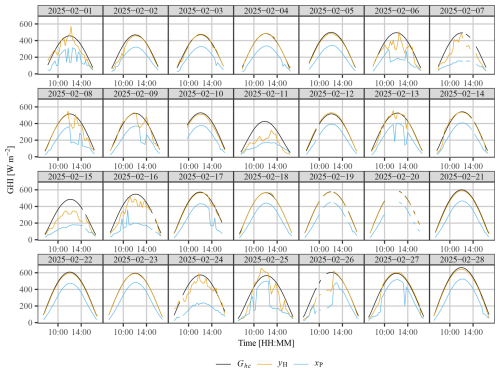

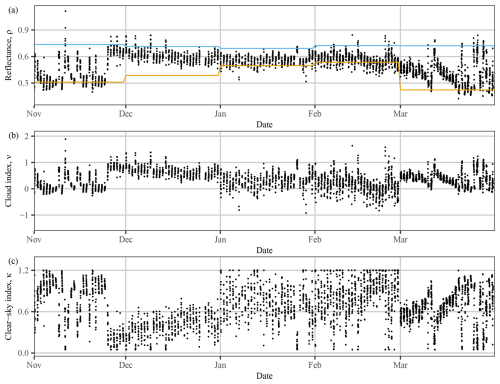

Figure 2. A multiplot depicting the quality of QIQ observations. Each subplot shows the result of a particular quality control test. For the detailed interpretation of each test involved here, the reader is referred to Forstinger et al. (2021).

The above QC routine requires all three irradiance components and thus can only be applied to the QIQ observations. For the CMA observations, only the first line of the ERL test is applicable. All tests are performed on 1 min data, and the outcome is displayed in Fig. 2 as a multiplot, a display format named by Forstinger et al. (2021), to whom the reader is referred for detailed interpretation. (Several quantities in this plot carry the subscript “calc”; these are all computed from the closure equation.) In short, the 1 min QIQ observations are of good quality, with very few missing and rejected data points. Most of the rejected data points are due to (1) frost deposition on the glass cover of the pyrheliometer, (2) elevated thermal offsets in the pyranometers, and (3) horizon shading by nearby buildings during winter sunsets. Although regular maintenance is conducted by the on-site staff, it remains difficult to avoid data issues entirely, which again highlights the importance of QC.

2.2.2 Data aggregation and temporal alignment

One year of 1 min observations corresponds to 525 600 samples, among which 239 879 are daytime samples – considering the higher directional uncertainty of pyranometers at low-sun conditions, daytime samples are defined as those with zenith angles ≤85°. The daytime samples from QIQ are passed through the above-mentioned QC routine, and 1510 (or 0.64 %) data points are flagged and thus rejected before aggregation. The non-flagged data are aggregated to a 15 min data interval using right-labeled timestamps, i.e., the time stamps correspond to the end of the aggregation interval. To ensure that each aggregated value can sufficiently represent the average condition of the corresponding intervals, only intervals with more than seven 1 min data points are considered valid. After aggregation, a total of 15 576 valid 15 min data points remain.
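The aggregation rule described above can be sketched with pandas. This is a minimal sketch under stated assumptions: rejected and night-time minutes are assumed to be already set to NaN, right-labeled intervals are assumed to be closed on the right (so the value stamped 00:15 averages 00:01 to 00:15), and the function name `aggregate_15min` is hypothetical.

```python
import numpy as np
import pandas as pd

def aggregate_15min(series_1min, more_than=7):
    """Average 1 min data into right-labelled 15 min blocks.

    Rejected (QC-flagged) or night-time minutes are expected to be NaN already.
    A block is kept only if it contains more than `more_than` valid minutes.
    """
    blocks = series_1min.resample("15min", label="right", closed="right")
    means, counts = blocks.mean(), blocks.count()
    return means[counts > more_than]

# Hypothetical example: 30 minutes of data, with 10 minutes rejected in the second block.
index = pd.date_range("2024-04-01 00:01", periods=30, freq="min")
ghi = pd.Series(np.arange(1.0, 31.0), index=index)
ghi.iloc[15:25] = np.nan
print(aggregate_15min(ghi))
```

The first block (15 valid minutes) is kept and stamped 00:15; the second block retains only 5 valid minutes and is discarded, mirroring the requirement of more than seven valid 1 min values per interval.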

Figure 3. Scatterplots of (a) the low-accuracy CMA observations (yL) against the high-accuracy QIQ observations (yH) and (b) the Heliosat-2 statistical retrieval (xS) against the NSMC's physical retrieval (xP). Brighter colors signify higher sample density.

After processing the QIQ data (i.e., yH), the 1 min CMA observations (i.e., yL) are aggregated in the same fashion and appended to the QIQ data frame, alongside the two sets of 15 min retrievals (i.e., xP and xS). Both the CMA observations and the retrievals contain missing instances. A row-wise filter is thus applied to the data frame to remove incomplete timestamps, resulting in 14 595 final samples. Stated differently, all subsequent validation exercises are based on this 14 595×4 data frame, with the four columns being yH, yL, xP, and xS. Figure 3a shows the scatterplot between yH and yL: the high- and low-accuracy observations agree exceptionally well, with a Pearson correlation of 0.997 and a mean difference of 3 W m−2. In contrast, the scatterplot between xP and xS, as depicted in Fig. 3b, shows substantial discrepancies and noticeable nonlinearity, with a correlation of 0.926 and a mean difference of 40 W m−2, suggesting that at least one of these products has major technical issues that warrant further investigation.
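The row-wise completeness filter and the agreement statistics quoted above amount to a `dropna` followed by a correlation and a mean difference. The sketch below is hypothetical: the column labels simply reuse the notation yH, yL, xP, and xS, the numbers are invented, and the mean difference is taken as the first series minus the second.

```python
import numpy as np
import pandas as pd

def complete_cases(frame, columns=("yH", "yL", "xP", "xS")):
    """Keep only time stamps at which every listed column has a value."""
    return frame.loc[:, list(columns)].dropna(how="any")

def agreement(a, b):
    """Pearson correlation and mean difference (a - b) of two aligned series."""
    return a.corr(b), (a - b).mean()

# Hypothetical 15 min frame with one missing low-accuracy observation.
frame = pd.DataFrame({
    "yH": [100.0, 200.0, 300.0, 400.0],
    "yL": [103.0, 203.0, 303.0, np.nan],
    "xP": [90.0, 210.0, 290.0, 380.0],
    "xS": [80.0, 150.0, 260.0, 370.0],
})
data = complete_cases(frame)
r, mean_diff = agreement(data["yL"], data["yH"])
print(len(data), round(r, 3), mean_diff)
```

Filtering before any statistic is computed guarantees that all four columns are evaluated on exactly the same time stamps, so differences between validation results cannot stem from different sample sets.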

2.3 An overview of the validation methodology

Validation of gridded products can be categorized into measure- and distribution-oriented approaches. Whereas the former employs a suite of performance measures and statistics to gauge the product quality, the latter examines the joint (or equivalently, the marginal and conditional) distributions of retrieval and observation. However, these two approaches are not mutually exclusive, as many performance measures can be written in terms of distributions, see Eqs. (1) and (2) below. Therefore, both approaches are introduced next and their outcomes reported in Sect. 3.

2.3.1 Measure-oriented validation

A majority of existing works on gridded irradiance validation use the measure-oriented approach, among which the MBE and RMSE are the most popular choices of performance measures (e.g., Yang and Bright, 2020; Sengupta et al., 2018). MBE is often reported because a low bias is of utmost importance in solar resource assessment, whereas high accuracy is less critical (Perez et al., 2013). RMSE, on the other hand, is a measure of accuracy that penalizes larger errors, which are particularly undesirable in solar forecasting (Yang et al., 2020); it is thus by far the most popular measure (Blaga et al., 2019). Other metrics, such as the mean absolute error or the root mean square percentage error, could also be considered for validation, but, as noted by Gneiting (2011), they reward different point summaries than RMSE does (e.g., the mean absolute error is minimized by the median rather than the mean), so conclusions based on them can be incompatible with those based on RMSE. Thus, without loss of generality, only MBE and RMSE are used in this work.

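Denoting the random variable representing the gridded retrieval as X, and that representing the observation as Y, MBE and RMSE can be written as:

$$\mathrm{MBE} = \mathbb{E}(X - Y) = \iint (x - y)\, f(x, y)\, \mathrm{d}x\, \mathrm{d}y, \tag{1}$$

$$\mathrm{RMSE} = \sqrt{\mathbb{E}\big[(X - Y)^2\big]} = \sqrt{\iint (x - y)^2\, f(x, y)\, \mathrm{d}x\, \mathrm{d}y}, \tag{2}$$

where 𝔼 is the expectation operator, which can be evaluated with n samples, e.g., $\mathbb{E}(X - Y) \approx \frac{1}{n}\sum_{i=1}^{n}(x_i - y_i)$, and f(x,y) is the joint probability density function (PDF) of X and Y.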
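With n samples, the expectations in Eqs. (1) and (2) become plain averages. The following sketch computes MBE, RMSE, and their normalized counterparts; normalizing by the mean of the observations is an assumption of this sketch, and the function name `measure_oriented` and the four-sample example are hypothetical.

```python
import numpy as np

def measure_oriented(x, y):
    """MBE and RMSE (sample versions of Eqs. 1 and 2) plus their normalized forms.

    Normalization by the mean of the observations y is an assumption of this sketch.
    """
    x, y = np.asarray(x, dtype=float), np.asarray(y, dtype=float)
    err = x - y
    mbe = err.mean()
    rmse = np.sqrt(np.mean(err ** 2))
    y_mean = y.mean()
    return {"MBE": mbe, "RMSE": rmse,
            "nMBE_pct": 100.0 * mbe / y_mean, "nRMSE_pct": 100.0 * rmse / y_mean}

print(measure_oriented(x=[90.0, 210.0, 290.0, 390.0], y=[100.0, 200.0, 300.0, 400.0]))
```

Here the errors are −10, +10, −10, and −10 W m−2, giving an MBE of −5 W m−2 (−2 %) and an RMSE of 10 W m−2 (4 %) relative to a mean observation of 250 W m−2.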

2.3.2 Distribution-oriented validation

Distribution-oriented validation allows both visual and quantitative assessments. Apart from the temporal ordering of the samples, the joint distribution of retrieval and observation contains all information relevant to validation. According to Bayes' theorem, the joint PDF of retrieval X and observation Y may be factorized in two ways:

$$f(x, y) = f(y \mid x)\, f(x), \tag{3}$$

$$f(x, y) = f(x \mid y)\, f(y), \tag{4}$$

where f(y|x) and f(x|y) are the conditional PDFs of Y given X and X given Y, respectively; f(x) and f(y) are marginal PDFs. Equation (3) is known as the calibration–refinement factorization, and Eq. (4) as the likelihood–base rate factorization.

The above naming convention reveals how each conditional or marginal distribution is linked to certain characteristics of the validation samples. For instance, f(y|x) signifies the calibration of the retrievals, since it describes whether the observations reliably materialize according to a particular retrieved value, say x0; the word “reliable,” in this context, means that the expectation of the materialized observations agrees with the retrieved value, or mathematically, $\mathbb{E}(Y \mid X = x_0) = x_0$. Next, f(x) is the probability density of retrieval, which describes how refined the retrievals are: if the same value results each time, the retrievals are not at all refined. As for the f(x|y) term, it describes the likelihood of the different retrieved values that would have been issued before a particular observation value, say y0, materialized. Analogous to the calibration term, one seeks the property $\mathbb{E}(X \mid Y = y_0) = y_0$ when assessing the likelihood. Last, the probability density of observation, that is, f(y), is referred to as the base rate in meteorology and is a characteristic of the atmospheric process itself.

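The visual assessment of the calibration–refinement and likelihood–base rate factorizations can be performed by plotting the densities; e.g., one may overlay f(x) with f(y) and observe the discrepancy between them. This type of analysis is intuitive. For quantitative assessments, the strategy is to decompose the mean square error (MSE) into several terms, each with a statistical interpretation. Specifically, MSE has the following well-known decompositions:

$$\mathrm{MSE} = \mathbb{V}(X) + \mathbb{V}(Y) - 2\,\mathrm{Cov}(X, Y) + \big[\mathbb{E}(X) - \mathbb{E}(Y)\big]^2, \tag{5}$$

$$\mathrm{MSE} = \mathbb{V}(Y) + \mathbb{E}_X\big\{[X - \mathbb{E}(Y \mid X)]^2\big\} - \mathbb{E}_X\big\{[\mathbb{E}(Y \mid X) - \mathbb{E}(Y)]^2\big\}, \tag{6}$$

$$\mathrm{MSE} = \mathbb{V}(X) + \mathbb{E}_Y\big\{[Y - \mathbb{E}(X \mid Y)]^2\big\} - \mathbb{E}_Y\big\{[\mathbb{E}(X \mid Y) - \mathbb{E}(X)]^2\big\}, \tag{7}$$

where 𝕍 denotes variance and Cov covariance.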

Equation (5) is the bias–variance decomposition of MSE, which gives rise to two variance terms, summarizing the variability in f(x) and f(y); a covariance term, which reflects the linear correlation (i.e., association) between X and Y; and a squared unconditional bias term, which quantifies the bias of X with respect to Y. Equation (6) is closely tied to the calibration–refinement factorization. The second term of Eq. (6) computes the expected value of calibration; recall that a set of retrievals is said to be calibrated if $\mathbb{E}(Y \mid X = x) = x$ for all x. The third term calculates the mean square difference between the conditional and unconditional expectations of observation. When a set of retrievals possesses resolving power (i.e., resolution), the difference between 𝔼(Y|X) and 𝔼(Y) should be large; in other words, one expects the observations to materialize differently after different retrieved values are issued. Equation (7) is related to the likelihood–base rate factorization. Its second term is the mean square difference between Y and 𝔼(X|Y), which should be minimized. If, for a particular observation y0, the retrievals behave as $\mathbb{E}(X \mid Y = y_0) = y_0 + b$, then the retrieval technique is said to have an additive bias b at y0. The third term of Eq. (7) gauges the ability of the retrieval technique to discriminate among diverse atmospheric conditions: if the atmospheric condition as specified by Y has no bearing on how X is generated, the retrieval technique has no discrimination. Finally, the computation of the expectations in Eqs. (6) and (7) deserves some attention; the detailed procedure is given in Appendix B.
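The three MSE decompositions can be checked numerically. This sketch is not the procedure of Appendix B: it estimates each conditional expectation by grouping samples with identical values of the conditioning variable, which is exact only for discrete data. The example therefore rounds hypothetical irradiance values to 10 W m−2. Continuous irradiance would require binning, in which case the identities hold only approximately. The helper names `conditional_mean` and `mse_decompositions` are illustrative.

```python
import numpy as np

def conditional_mean(target, given):
    """E(target | given) at every sample, grouping samples with identical `given` values.

    Exact only when `given` is discrete; continuous irradiance would need binning.
    """
    _, inverse = np.unique(given, return_inverse=True)
    inverse = inverse.ravel()
    group_means = np.bincount(inverse, weights=target) / np.bincount(inverse)
    return group_means[inverse]

def mse_decompositions(x, y):
    """Return MSE and its value rebuilt from Eqs. (5), (6), and (7)."""
    x, y = np.asarray(x, dtype=float), np.asarray(y, dtype=float)
    mse = np.mean((x - y) ** 2)
    cov = np.mean((x - x.mean()) * (y - y.mean()))
    eq5 = x.var() + y.var() - 2.0 * cov + (x.mean() - y.mean()) ** 2
    ey_given_x = conditional_mean(y, x)   # E(Y|X)
    ex_given_y = conditional_mean(x, y)   # E(X|Y)
    calibration = np.mean((x - ey_given_x) ** 2)           # second term of Eq. (6)
    resolution = np.mean((ey_given_x - y.mean()) ** 2)     # third term of Eq. (6)
    conditional_bias = np.mean((y - ex_given_y) ** 2)      # second term of Eq. (7)
    discrimination = np.mean((ex_given_y - x.mean()) ** 2) # third term of Eq. (7)
    eq6 = y.var() + calibration - resolution
    eq7 = x.var() + conditional_bias - discrimination
    return mse, eq5, eq6, eq7

# Hypothetical data rounded to 10 W m-2 so that conditional means are exact group means.
rng = np.random.default_rng(0)
y = np.round(rng.uniform(0.0, 1000.0, 2000), -1)
x = np.round(0.9 * y + rng.normal(0.0, 50.0, 2000), -1)
print(mse_decompositions(x, y))
```

All four returned numbers agree to floating-point precision, confirming that Eqs. (5) to (7) are exact rearrangements of the same MSE; the diagnostic value lies in the individual terms, not in their sum.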

This section comprises two distinct analytical components. The first component examines all-sample validation, presenting comprehensive results from both the measure-oriented and distribution-oriented validation approaches in Sect. 3.1 and 3.2, respectively. Its primary objective is to resolve the fundamental question underlying this investigation: To what measurable extent does the selection between low- and high-accuracy radiometric observations influence validation outcomes? The second component addresses three specific analytical considerations pertaining to the satellite-retrieved irradiance products under examination: (1) the relationship between temporal averaging intervals and validation metrics, (2) the implications of simple bias correction on validation results, and (3) identifiable algorithmic limitations that demonstrably compromise retrieval quality. These discussions are allocated to Sect. 3.3–3.5.

3.1 Overall results of measure-oriented validation

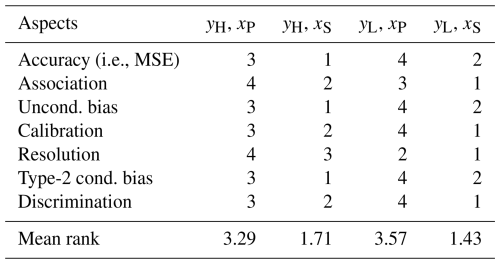

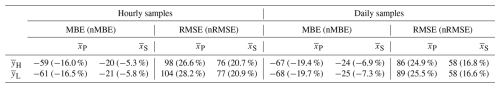

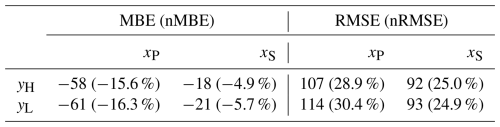

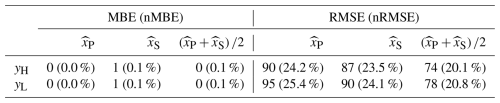

Table 1 shows the overall bias and accuracy of the two satellite-retrieved products at the validation location. Both MBE and RMSE are presented in the same unit as GHI, which is W m−2. Their relative counterparts, i.e., normalized MBE (nMBE) and normalized RMSE (nRMSE), prefixed with “n” and noted in parentheses, are normalized with respect to the mean instead of the maximum. Several interesting observations can already be made from Table 1.
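For reference, the four measure-oriented metrics can be computed as in the sketch below. It assumes that "normalized" means division by the mean of the observations, expressed in percent; `measure_oriented` is a hypothetical helper name.

```python
import numpy as np


def measure_oriented(x, y):
    """MBE and RMSE in W m-2, plus nMBE and nRMSE in %, normalized by the mean observation."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    err = x - y
    mbe = err.mean()
    rmse = np.sqrt(np.mean(err ** 2))
    return {
        "MBE": mbe,
        "RMSE": rmse,
        "nMBE": 100.0 * mbe / y.mean(),
        "nRMSE": 100.0 * rmse / y.mean(),
    }


m = measure_oriented([90.0, 380.0, 610.0], [100.0, 400.0, 600.0])
# MBE ≈ -6.67 W m-2, RMSE ≈ 14.14 W m-2, nMBE ≈ -1.82 %, nRMSE ≈ 3.86 %
```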

Table 1. Measure-oriented validation results of xP and xS, with respect to yH and yL. Both MBE and RMSE are in W m−2, with the normalized metrics (%) noted in parentheses.

First, although all cases show negative MBEs, indicating that both products underestimate GHI, the MBEs referenced to the high-accuracy ground-based observations are smaller in magnitude than those referenced to the low-accuracy observations. This reveals that bias in the observations propagates into the validation results. However, this effect is directional: The apparent bias exceeds the true bias in magnitude only if the biases of the observations and the retrievals have opposite signs. Second, the differences in bias do not fully propagate into accuracy. The MBEs of xS with respect to yH and yL are −18 and −21 W m−2, respectively, but the difference between the corresponding RMSEs is smaller. This result can be explained through the bias–variance decomposition of MSE, in which bias contributes only partially to the overall accuracy. Third, the RMSEs of xS evaluated against yH and yL are nearly indistinguishable, which highlights the main deficiency of measure-oriented validation: The results are often ambiguous and therefore potentially misleading. More specifically, if RMSE is used as the sole performance measure, one may conclude that “yH and yL do not make a difference in validating xS”.
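The directional effect of observation bias, and why it only partially reaches RMSE, can be illustrated with synthetic data. The sketch assumes, purely for illustration, that the low-accuracy reference equals the high-accuracy one plus a constant 3 W m−2 offset; real radiometer errors are not constant, so the numbers are not meant to reproduce Table 1.

```python
import numpy as np


def mbe(x, y):
    return float(np.mean(np.asarray(x) - np.asarray(y)))


def rmse(x, y):
    return float(np.sqrt(np.mean((np.asarray(x) - np.asarray(y)) ** 2)))


rng = np.random.default_rng(2)
y_high = rng.uniform(50.0, 1000.0, size=2000)                # stand-in high-accuracy reference
x = y_high - 18.0 + rng.normal(0.0, 80.0, size=y_high.size)  # retrieval that underestimates
obs_bias = 3.0                                               # ASSUMED constant positive bias of the low-accuracy sensor
y_low = y_high + obs_bias

# The apparent MBE moves by exactly -obs_bias (more negative here, since the signs differ) ...
shift_mbe = mbe(x, y_low) - mbe(x, y_high)
# ... whereas RMSE moves by at most |obs_bias|, because RMSE^2 = MBE^2 + SD(error)^2
# and a constant offset leaves SD(error) unchanged.
shift_rmse = rmse(x, y_low) - rmse(x, y_high)
print(f"MBE shift: {shift_mbe:.3f} W m-2, RMSE shift: {shift_rmse:.3f} W m-2")
```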

In any case, the present measure-oriented validation suggests that the statistically retrieved GHI is superior to the physically retrieved one. This is not surprising, because the quality of physical irradiance retrieval algorithms depends strongly on the quality of the atmospheric state inputs, such as aerosols or water vapor. However, the NSMC has yet to establish a mature retrieval system for FY-4B (Xia et al., 2025), and directly feeding imprecise atmospheric states to the retrieval algorithm can lead to unphysical or unrealistic irradiance values, which demand further investigation. Moreover, measure-oriented validation offers only an overview of product quality and lacks diagnostic ability in general. To that end, distribution-oriented validation ought to be employed if one aims to analyze and improve product quality.

3.2 Overall results of distribution-oriented validation

As previewed in Sect. 2.3, distribution-oriented validation allows both visual and quantitative assessments of the joint distribution of retrieval (X) and observation (Y), which are both considered in the present context as random variables. Since the joint distribution f(x,y) can be factorized into f(x), f(y|x), f(y), and f(x|y) via Bayes' theorem, visual assessment is facilitated by plotting out the joint, marginal, and conditional densities. On the other hand, quantitative assessment under the distribution-oriented validation mainly revolves around the MSE decompositions, e.g., Eqs. (5)–(7), from which a comprehensive collection of aspects of retrieval product quality can be gauged.

3.2.1 Visual validation

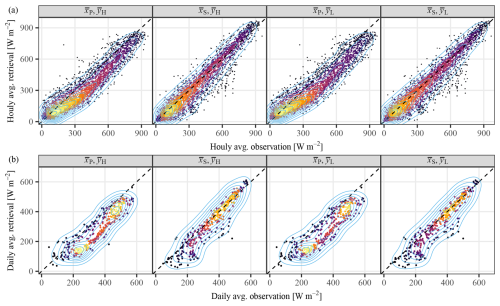

Given two sets of irradiance retrievals, namely, xP and xS, alongside two sets of ground-based observations, namely, yH and yL, four groups of retrieval–observation pairs can be formed: (xP,yH), (xS,yH), (xP,yL), and (xS,yL). Each of these combinations results in a set of joint, marginal, and conditional densities, which are depicted in Figs. 4–6.

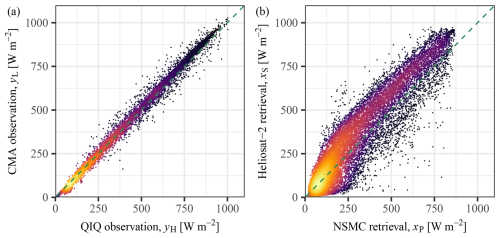

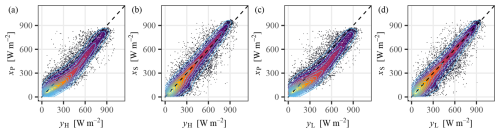

Figure 4. The joint PDFs of retrievals and observations. Panels (a)–(d) correspond to the different combinations of xP, xS, yH, and yL. The contours show the 2D kernel densities.

The joint PDFs in Fig. 4 are represented through contour lines superimposed upon x–y scatter plots, in which brighter colors correspond to higher point density. The most striking characteristic of the physical retrieval product is its systematic underestimation of irradiance, which produces a distinctly nonlinear relationship with both the high- and low-accuracy observations. Ideally, scatter points should cluster tightly about the identity line; however, neither the (xP,yH) nor the (xP,yL) distribution exhibits this behavior. The underestimation of xP is systematic, occurring over almost the entire irradiance range of 100–1000 W m−2, which strongly suggests an inherent algorithmic deficiency. Under normal circumstances, clear-sky conditions should yield highly accurate retrievals, resulting in dense point clusters along the identity line, as exemplified by the scatter plots in Fig. 4b and d. The deviation from this expected pattern in Fig. 4a and c leads us to hypothesize that the physical algorithm employed by NSMC retrieves clear-sky irradiance poorly, possibly owing to the low and inconsistent quality of the aerosol and water vapor inputs, which are essential for clear-sky irradiance computation. In fact, improving individual stages of the physical irradiance retrieval process has been identified as the foremost scientific challenge for the current FY-4B products (Xia et al., 2025).

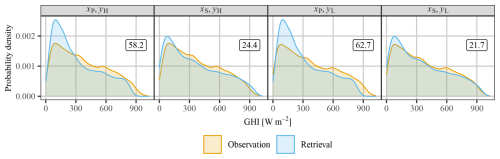

Figure 5. The marginal PDFs of retrievals (xP or xS) and observations (yH or yL). The number in the top-right corner of each subplot is the Wasserstein distance between the corresponding marginal densities.

Figure 5 shows the marginal densities of retrieval and observation under the four combinations. The number in the top-right corner of each subplot indicates the Wasserstein distance between the two PDFs, which gauges their similarity (the smaller, the better). The disagreements of the physical retrievals with both the high- and low-accuracy observations are larger than those of the statistical retrievals, which is consistent with the finding from Fig. 4. It can be seen that the PDF of xP is higher in the low-irradiance range but lower in the high-irradiance range than the PDFs of the two sets of observations, again indicating underestimation. In contrast, the PDFs of xS and the observations are much more closely aligned. Interestingly, in the case of xS, the validation against yL returns a lower Wasserstein distance than that against yH, which signals the potential danger of overconfident validation when low-accuracy observations are used as the reference.
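A Wasserstein distance between two marginal samples can be computed directly from their ECDFs. The sketch below assumes the first-order distance (W1), i.e., the integral of the absolute difference between the two ECDFs; that Fig. 5 uses this order is an assumption. If SciPy is available, `scipy.stats.wasserstein_distance` computes the same W1 quantity.

```python
import numpy as np


def wasserstein_1(u, v):
    """First-order Wasserstein distance between two empirical 1-D distributions,
    computed as the integral of |F_u(t) - F_v(t)| over t."""
    u = np.sort(np.asarray(u, dtype=float))
    v = np.sort(np.asarray(v, dtype=float))
    grid = np.sort(np.concatenate([u, v]))
    widths = np.diff(grid)                                    # lengths of the step intervals
    cdf_u = np.searchsorted(u, grid[:-1], side="right") / u.size
    cdf_v = np.searchsorted(v, grid[:-1], side="right") / v.size
    return float(np.sum(np.abs(cdf_u - cdf_v) * widths))


# Shifting a sample by 5 units gives a W1 distance of 5.
print(round(wasserstein_1([0.0, 1.0, 3.0], [5.0, 6.0, 8.0]), 6))  # 5.0
```

For samples of equal size, W1 also equals the mean absolute difference between the sorted samples, which is a convenient cross-check.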

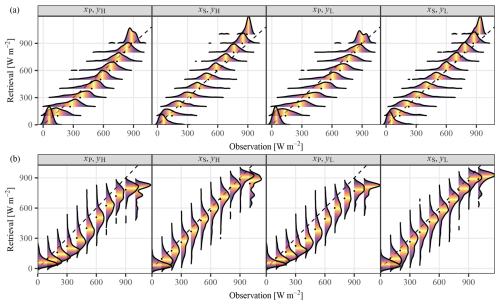

Figure 6. The conditional PDFs of (a) observation given retrieval and (b) retrieval given observation, for different xP, xS, yH, and yL combinations. The colors indicate quantiles of the respective conditional PDF, with brighter ones being closer to the median.

Finally, we examine the conditional PDFs presented in Fig. 6, where the upper row displays f(y|x) and the lower row f(x|y). The color gradations within these conditional distributions correspond to quantile values, whereas the black dots denote conditional means. Proper calibration requires that 𝔼(Y|X=x) = x hold for all x. This condition permits direct evaluation of both xP and xS through inspection of Fig. 6a, where each panel exhibits numerous distinct conditional distributions. The sequence of black dots represents 𝔼(Y|X=x). Consequently, the proximity of these markers to the identity line provides a visual aid for assessing calibration quality: The closer the black dots are to the identity line, the smaller the average deviation of 𝔼(Y|X=x) from x, which in turn implies better calibration. Clearly, then, xP is less calibrated than xS. In an analogous fashion, the type-2 conditional bias can be assessed through Fig. 6b, in which the black dots correspond to 𝔼(X|Y=y). It is evident that the conditional biases, i.e., 𝔼(X|Y=y) − y, for all y, are larger for xP than for xS, confirming the superiority of the latter. Note that the deviations 𝔼(Y|X=x) − x and 𝔼(X|Y=y) − y are directional. Therefore, during quantitative evaluation, taking the absolute value or squaring the term before averaging is necessary, as shown below. The final observation from Fig. 6 is that the conditional densities of xP are often asymmetrical, which can be attributed to two possible reasons. First, the asymmetry may originate from misclassification of the sky condition, e.g., due to the parallax effect, where a clear pixel is misidentified as cloudy. Second, the asymmetry may simply be the effect of poor-quality inputs to the retrieval algorithm.
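The conditional quantiles and means shown in Fig. 6 can be approximated by binning. The following sketch assumes fixed-width bins and a minimum bin population, both illustrative choices (this work estimates conditional means with the kernel approach of Appendix B instead); `conditional_summary` is a hypothetical name.

```python
import numpy as np


def conditional_summary(cond, target, edges, probs=(0.1, 0.25, 0.5, 0.75, 0.9), min_count=10):
    """Bin `cond` and summarize `target` within each bin.

    With cond = retrieval x and target = observation y this approximates f(y|x)
    (Fig. 6a); swapping the arguments approximates f(x|y) (Fig. 6b).
    Returns bin centers, conditional means, and conditional quantiles.
    """
    cond = np.asarray(cond, dtype=float)
    target = np.asarray(target, dtype=float)
    idx = np.digitize(cond, edges) - 1   # bin index; -1 or len(edges)-1 means out of range
    centers, means, quants = [], [], []
    for b in range(len(edges) - 1):
        sel = idx == b
        if sel.sum() < min_count:        # skip sparsely populated bins
            continue
        centers.append(0.5 * (edges[b] + edges[b + 1]))
        means.append(target[sel].mean())
        quants.append(np.quantile(target[sel], probs))
    return np.array(centers), np.array(means), np.array(quants)


y = np.linspace(0.0, 1000.0, 4001)       # synthetic observations
x = y - 50.0                             # hypothetical retrieval with a constant -50 offset
edges = np.arange(0.0, 1000.0, 50.0)
c, m, q = conditional_summary(x, y, edges)
# For a calibrated retrieval, m would follow c (the identity line); here m - c is about +50.
```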

3.2.2 Quantitative validation

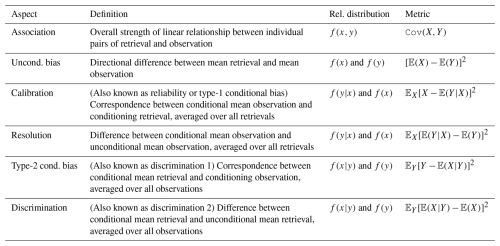

Distribution-oriented validation also allows for quantitative assessment of various aspects of product quality. A total of six aspects of product quality, which are based on Eqs. (5)–(7), are considered herein. Given a set of validation samples, with X denoting the retrieval and Y the observation, the six aspects of quality are association, unconditional bias, calibration, resolution, type-2 conditional bias, and discrimination. They can be quantified through 𝙲𝚘𝚟(X,Y), [𝔼(X)−𝔼(Y)]², 𝔼X{[X−𝔼(Y|X)]²}, 𝔼X{[𝔼(Y|X)−𝔼(Y)]²}, 𝔼Y{[Y−𝔼(X|Y)]²}, and 𝔼Y{[𝔼(X|Y)−𝔼(X)]²}, respectively. These aspects of product quality are summarized and defined in Table 2, alongside their relevance to distribution-oriented validation.
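The six aspects can be estimated as in the sketch below, which plugs Nadaraya–Watson estimates of 𝔼(Y|X) and 𝔼(X|Y) into the six terms. The Gaussian kernel and a bandwidth of 10 follow the settings described in Appendix B, but the chunking, the function names, and the synthetic data are assumptions of this illustration.

```python
import numpy as np


def nw_mean(cond, target, h=10.0, chunk=2000):
    """Nadaraya-Watson estimate (Gaussian kernel, bandwidth h) of E(target | cond),
    evaluated at every sample of cond. Processed in chunks to bound memory."""
    cond = np.asarray(cond, dtype=float)
    target = np.asarray(target, dtype=float)
    out = np.empty_like(cond)
    for s in range(0, cond.size, chunk):
        c = cond[s:s + chunk]
        w = np.exp(-0.5 * ((c[:, None] - cond[None, :]) / h) ** 2)  # kernel weights
        out[s:s + chunk] = (w @ target) / w.sum(axis=1)             # self-weight keeps the sum > 0
    return out


def quality_aspects(x, y, h=10.0):
    """Six aspects of quality built from Eqs. (5)-(7), in W^2 m^-4."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    ey_x = nw_mean(x, y, h)  # E(Y | X = x_i)
    ex_y = nw_mean(y, x, h)  # E(X | Y = y_i)
    return {
        "association": np.mean((x - x.mean()) * (y - y.mean())),
        "unconditional_bias": (x.mean() - y.mean()) ** 2,
        "calibration": np.mean((x - ey_x) ** 2),
        "resolution": np.mean((ey_x - y.mean()) ** 2),
        "type2_conditional_bias": np.mean((y - ex_y) ** 2),
        "discrimination": np.mean((ex_y - x.mean()) ** 2),
    }


rng = np.random.default_rng(4)
y = rng.uniform(0.0, 1000.0, size=1500)
good = quality_aspects(y + rng.normal(0.0, 20.0, y.size), y)
biased = quality_aspects(y - 50.0 + rng.normal(0.0, 20.0, y.size), y)
```

As expected, the offset retrieval has a much larger calibration term (close to 50² W² m−4) than the unbiased one.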

Table 2. The definitions and relevance of different aspects of product quality to distribution-oriented validation (Murphy, 1993).

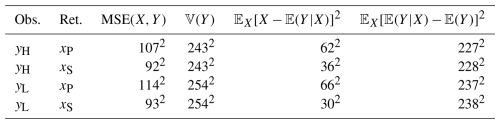

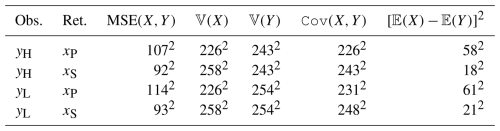

Table 3. Results of the bias–variance decomposition of MSE, cf. Eq. (5), based on different combinations of xP, xS, yH, and yL. The metrics are written as exponentiations, such that all bases have the unit of W m−2.

Table 3 lists the results of the bias–variance decomposition of MSE, based on different combinations of xP, xS, yH, and yL. In terms of the variances, the standard deviation of yH is 243 W m−2; compared to that, yL is overdispersed, with a standard deviation of 254 W m−2. As for the retrievals, xS is even more overdispersed, whereas xP is underdispersed. On this point, the variance of xS is closer to that of yL and the variance of xP is closer to that of yH, which implies that yL exaggerates the performance of xS but understates that of xP. In terms of association, i.e., the 𝙲𝚘𝚟(X,Y) column, the highest value (248² W² m−4) is seen when the statistical retrievals are validated using low-accuracy observations, whereas the lowest value (226² W² m−4) is seen when the physical retrievals are validated using high-accuracy observations. For both physical and statistical retrievals, the results are consistent: The low-accuracy observations tend to yield overconfident results by exaggerating the association. In terms of the unconditional bias, i.e., the [𝔼(X)−𝔼(Y)]² column, the opposite is true, in that the low-accuracy observations return larger biases, making the products appear worse than they actually are by about 3 W m−2, which is quite significant in light of the accuracy of currently available high-accuracy satellite-retrieved irradiance datasets (Yang and Bright, 2020). Overall, Table 3 provides strong evidence that low-accuracy observations should not be used to validate gridded irradiance.

Table 4, which shows the results of the calibration–refinement decomposition of MSE, offers further insights. First, the physically retrieved product is less calibrated than the statistical one, which agrees with the earlier visual assessment. Recall that, for two sets of retrievals, the one with the smaller calibration term should be preferred. On this point, the calibration terms corresponding to xP (see the 𝔼X{[X−𝔼(Y|X)]²} column) are higher than those of xS, rendering xP inferior. In terms of resolution, i.e., the 𝔼X{[𝔼(Y|X)−𝔼(Y)]²} column, the choice of observations appears to have a larger impact on the validation results than the choice of product, which is somewhat surprising. The resolutions of both xP and xS are ∼227² W² m−4 when evaluated against yH and ∼237² W² m−4 when evaluated against yL. Since 𝔼(Y) takes a fixed value for a given set of observations, the similarity must be attributed to 𝔼(Y|X), and one can conclude that the resolving power of xP and xS is comparable.

The results of the likelihood–base rate decomposition of MSE are tabulated in Table 5. In terms of the type-2 conditional bias, the statistically retrieved product again shows superiority, regardless of whether the high- or low-accuracy observations are used for validation. It is worth noting that the type-2 conditional bias of xS appears higher when validated using yL than using yH, which contrasts with Table 4, where the calibration (i.e., type-1 conditional bias) of xS appears higher when validated using yH than using yL. As for discrimination, the difference in validation results across products is now larger than the difference across observation choices, which is also the opposite of the finding for resolution in Table 4.

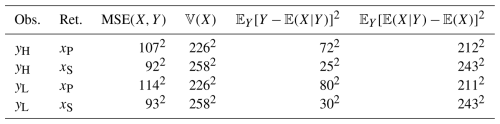

To summarize the quantitative assessment, a linear ranking method (see Alvo and Yu, 2014) is applied to the above results. There are four combinations of xP, xS, yH, and yL, and the mean rank of the ith combination, denoted by mi, is:

$$m_i = \frac{1}{N}\sum_{j} n_j\, v_j(i), \qquad N = \sum_{j} n_j, \tag{8}$$

where vj, with j = 1, …, 24 (= 4!), represent all possible rankings of the four combinations, nj is the frequency of occurrence of ranking vj, N is the total number of ranking exercises, and vj(i) denotes the score of the ith combination in ranking j. Table 6 shows the ranking of the different combinations, together with their mean ranks computed using Eq. (8). It can be seen that four distinct rankings occur, with corresponding nj's of 3, 1, 2, and 1, respectively (i.e., N = 7). The foremost finding is that the rankings based on various quantification metrics are not unified, which again highlights the pitfall of measure-oriented validation. Next, the statistically retrieved irradiance, in this case, has lower mean ranks and thus outperforms the physically retrieved one, which is further investigated below. Last but not least, using low-accuracy observations to validate satellite-retrieved products can shift the results in both directions, i.e., yL exaggerates the performance of xS (yL results in a lower mean rank of 1.43 than the 1.71 of yH) but understates that of xP (yL results in a higher mean rank of 3.57 than the 3.29 of yH), which is undesirable in any case. Before we end this section, it is worth mentioning that variance plays an important role in validation. The variance ratio between observation and retrieval is further linked to calibration, type-2 conditional bias, and discrimination; interested readers are referred to Mayer and Yang (2023) for details.
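The mean rank amounts to a frequency-weighted average of rank vectors. The sketch below assumes that ranks are coded from 1 (best) to K; the three-item rankings and their frequencies are hypothetical and are not those of Table 6.

```python
import numpy as np


def mean_ranks(rankings, counts):
    """m_i = (1/N) sum_j n_j v_j(i), with N = sum_j n_j.

    rankings[j][i] is the rank (1 = best) of combination i in ranking j;
    counts[j] is n_j, the number of times ranking j occurred.
    """
    v = np.asarray(rankings, dtype=float)
    n = np.asarray(counts, dtype=float)
    return (n @ v) / n.sum()


# HYPOTHETICAL rankings of three items (not the rankings of Table 6).
rankings = [[1, 2, 3],
            [2, 1, 3],
            [1, 3, 2]]
counts = [4, 1, 1]
mr = mean_ranks(rankings, counts)  # ≈ [1.167, 2.0, 2.833]
```

Because each ranking is a permutation, the mean ranks always sum to 1 + 2 + … + K. In the four-combination case above, the reported 1.43, 1.71, 3.29, and 3.57 indeed sum to 10.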

3.3 Validation of hourly and daily averages

Among the various factors that influence the quality of satellite-retrieved irradiance, the three-dimensional (3D) effects of clouds, including the parallax effect, arguably constitute the most significant source of error. These effects arise when the cloud presence along the sun-to-surface path does not coincide with that along the surface-to-satellite path, leading to erroneous sky-condition identification (Huang et al., 2019). Such misidentification poses substantial contamination risks to retrieval accuracy and thus to validation outcomes, prompting some researchers to advocate excluding retrieval–observation pairs whose differences deviate from the mean by more than three standard deviations (Wang and Pinker, 2009). An alternative approach is based on the well-documented fact that the 3D effects of clouds diminish rapidly as the temporal resolution of the retrievals becomes coarser (Huang et al., 2016). This has led to widespread adoption of hourly and daily product validation in the literature (e.g., Shi et al., 2023; Huang et al., 2023). In accordance with this methodological convention, we aggregate the original 15 min data to hourly and daily resolutions in this section, yielding 3740 and 355 samples, respectively.
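The three-standard-deviation exclusion rule can be sketched as a boolean mask. This is a minimal reading of the rule (the mean and standard deviation of the retrieval-minus-observation differences are computed once, without iteration), which may differ in detail from Wang and Pinker (2009); `within_k_sigma` is a hypothetical name.

```python
import numpy as np


def within_k_sigma(x, y, k=3.0):
    """Boolean mask keeping retrieval-observation pairs whose difference lies
    within k standard deviations of the mean difference."""
    d = np.asarray(x, dtype=float) - np.asarray(y, dtype=float)
    return np.abs(d - d.mean()) <= k * d.std()


n = 1000
y = np.linspace(100.0, 900.0, n)
x = y + 30.0 * np.sin(np.arange(n))  # bounded, well-behaved errors
x[0] += 2000.0                       # one pair contaminated, e.g., by a 3D cloud effect
keep = within_k_sigma(x, y)
# keep[0] is False; all other pairs are retained.
```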

Following the presentation style of Table 1, the overall biases and accuracies of the hourly and daily samples are listed in Table 7. Comparing these tables reveals that the accuracy improves as the temporal resolution of the samples becomes coarser. In contrast, the bias becomes larger after averaging. Particularly for the daily samples, the underestimation by xP exceeds 19 %, unequivocally demonstrating fundamental algorithmic deficiencies, which motivates site-specific bias correction. That said, given that aggregation should normally not affect bias, the differences between the 15 min and daily MBEs arise because days are shorter in winter, i.e., the proportion of winter samples among the 15 min samples is lower than the proportion of winter samples among the daily samples. The stronger underestimation for the daily mean GHI therefore itself indicates that the underestimation is larger in winter than in summer, which is shown in more detail in Sect. 3.5.
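The weighting argument can be checked with a toy calculation. The sketch assumes that each daily value is the mean of that day's available daytime 15 min samples, so that pooled 15 min statistics weight each day by its number of samples, whereas daily statistics weight each day equally. The sample counts and error levels are invented for illustration.

```python
import numpy as np


def nmbe(x, y):
    """Normalized MBE in %, normalized by the mean observation."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    return 100.0 * (x.mean() - y.mean()) / y.mean()


# Hypothetical daytime 15 min samples: a short winter day and a long summer day.
winter_obs = np.full(32, 300.0)  # 8 h of daylight
winter_ret = 0.7 * winter_obs    # 30 % underestimation in winter
summer_obs = np.full(56, 600.0)  # 14 h of daylight
summer_ret = 0.9 * summer_obs    # 10 % underestimation in summer

# Pooled 15 min samples: each day is weighted by its number of samples.
pooled = nmbe(np.concatenate([winter_ret, summer_ret]),
              np.concatenate([winter_obs, summer_obs]))
# Daily means (ASSUMED to be averages of each day's daytime samples): each day weighted equally.
daily = nmbe([winter_ret.mean(), summer_ret.mean()],
             [winter_obs.mean(), summer_obs.mean()])
print(round(pooled, 2), round(daily, 2))  # -14.44 -16.67
```

The retrieval errors are identical in both cases; only the relative weight of the winter day changes, which is enough to make the daily nMBE more negative.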

Figure 7. The joint PDFs of (a) hourly averages and (b) daily averages of retrievals and observations.

Figure 7 shows the joint PDFs of the hourly and daily samples under different combinations of x̄P, x̄S, ȳH, and ȳL, with bars denoting averages. The joint PDFs of the hourly samples are notably consistent with those of the 15 min samples in Fig. 4; e.g., the consistent underestimation and nonlinearity in x̄P seen earlier are again visible. The joint PDFs of the daily samples reveal a pronounced density peak near 450 W m−2, indicative of prevailing clear-sky conditions at the validation location. Of particular concern is that the daily samples of x̄S scatter only very loosely around the identity line, especially over the low-irradiance range of 100–300 W m−2, which contradicts earlier reports on similar Fengyun irradiance products, cf. Fig. 5 in Huang et al. (2023) and Fig. 5 in Shi et al. (2023). This discrepancy potentially suggests an influence of winter snow cover on retrieval accuracy within the Dwa Köppen climate regime, which is further examined in Sect. 3.5.

3.4 Validation results after bias correction

Within the domain of solar energy meteorology, the need for bias correction of satellite-retrieved irradiance arises from a fundamental economic consideration: The accuracy of mean irradiance estimates has a direct bearing on the financial viability and projected returns of solar energy projects (Yang et al., 2022b). This location-specific bias correction procedure is better known as site adaptation, which has attracted much research effort in the past decade (e.g., Zainali et al., 2024; Yang and Gueymard, 2021; Polo et al., 2016). Among the various statistical and machine learning site-adaptation methods, quantile mapping is a classic option. More importantly, quantile mapping is better suited than linear regression when nonlinearity is present in the data, as is the case for xP. Mathematically, quantile mapping is simply

$$\tilde{x} = \hat{F}_Y^{-1}\!\left(\hat{F}_X(x)\right), \tag{9}$$

where F̂Y and F̂X are the empirical cumulative distribution functions (ECDFs) of observation and retrieval, respectively; F̂Y⁻¹ is the inverse function of F̂Y, also known as the quantile function; x is an arbitrary retrieval value; and x̃ is the corrected value.

Table 8. Same as Table 1, but for xP and xS after bias correction via quantile mapping. Additionally, the results of a simple combination (arithmetic mean) of the two bias-corrected products are shown in separate columns, demonstrating the complementarity of the two products.

Naturally, the two ECDFs need to be estimated (trained) before quantile mapping is applied to new data points. To that end, the 15 min samples are randomly split into two groups, namely, A and B. After the samples in B are quantile mapped using the ECDFs estimated from A, the roles of the two groups are switched, such that the bias-corrected versions of xP and xS have the same number of samples as the original data. The updated MBEs and RMSEs are provided in Table 8. It can be seen that the bias has been effectively eliminated in all cases, which translates into improved accuracy. We further test the effect of combining the physical and statistical products by taking the arithmetic mean of the two time series, which is a fairly common strategy in forecasting science that leverages the “wisdom of the crowd” (Wang et al., 2023; Atiya, 2020). A substantial reduction in RMSE is observed, which implies that the two products are complementary. Nonetheless, although the bias-corrected samples have no bias with respect to either yH or yL, the RMSEs against yL are higher across the board, indicating that using low-accuracy observations as the reference during validation may return underconfident results, by about 0.8 % in this particular case.
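The two-fold quantile-mapping scheme and the simple combination can be sketched as follows. The ECDF of the retrieval is evaluated with `numpy.searchsorted`, and the inverse ECDF of the observation is approximated by `numpy.quantile` with its default linear interpolation, which is one of several possible choices; the data and function names are hypothetical.

```python
import numpy as np


def quantile_map(x_new, x_train, y_train):
    """x_tilde = F_Y^{-1}(F_X(x)) with ECDFs estimated from the training pairs."""
    xs = np.sort(np.asarray(x_train, dtype=float))
    p = np.searchsorted(xs, np.asarray(x_new, dtype=float), side="right") / xs.size  # ECDF of retrieval
    return np.quantile(np.asarray(y_train, dtype=float), p)  # empirical quantile function of observation


def two_fold_quantile_map(x, y, seed=0):
    """Random split into groups A and B; map B with ECDFs from A, then swap roles."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    in_a = np.random.default_rng(seed).permutation(x.size) < x.size // 2
    out = np.empty_like(x)
    out[~in_a] = quantile_map(x[~in_a], x[in_a], y[in_a])
    out[in_a] = quantile_map(x[in_a], x[~in_a], y[~in_a])
    return out


def rmse(a, b):
    return float(np.sqrt(np.mean((a - b) ** 2)))


rng = np.random.default_rng(5)
y = rng.uniform(0.0, 1000.0, size=4000)          # stand-in observations
x_p = 0.8 * y + rng.normal(0.0, 30.0, y.size)     # hypothetical "physical" retrieval, underestimating
x_s = y - 10.0 + rng.normal(0.0, 60.0, y.size)    # hypothetical "statistical" retrieval
x_p_qm = two_fold_quantile_map(x_p, y)
x_s_qm = two_fold_quantile_map(x_s, y)
x_comb = 0.5 * (x_p_qm + x_s_qm)                  # simple arithmetic-mean combination
```

Because RMSE is a norm, the RMSE of the averaged series can never exceed the larger of the two individual RMSEs; it drops well below both when the two error series are weakly correlated.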

3.5 Noticeable algorithmic limitations during winter

Hitherto, our validation has proceeded through analyses of all available samples. While these aggregate results adequately characterize the general performance of both products, they necessarily obscure certain extreme cases where algorithmic performance degrades substantially. A closer examination of these specific conditions promises to reveal important limitations of the underlying retrieval algorithms that might otherwise escape notice. For instance, all of the severely underestimated daily samples of x̄S in Fig. 7b come from winter months, which indicates a potential algorithmic limitation of Heliosat-2 during winter, when prolonged snow cover from November to March is typical of the Dwa climate.

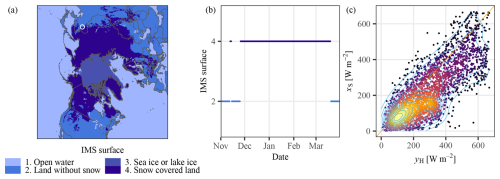

Figure 8. (a) Observed reflectance from the 0.65 µm visible channel of AGRI onboard FY-4B (black dots) and dynamic range (colored lines) from November 2024 to March 2025, with the corresponding (b) cloud index, ν, and (c) clear-sky index, κ.

The most transparent way to diagnose temporal data is direct visual examination of time series plots. In this regard, Fig. 8 shows the satellite-observed reflectance (ρ), the corresponding dynamic range (ρH and ρL), the cloud index (ν), and the clear-sky index (κ) over the winter months; the reader is referred to Appendix A for the relationships among these variables under the Heliosat-2 framework. Several insights are revealed. First, the satellite-observed reflectance exhibits an abrupt jump in late November and stays high until mid-March, which may be attributed to long-lasting snow cover at the QIQ station. To verify this hypothesis, data from the United States National Ice Center's (NIC's) Interactive Multisensor Snow and Ice Mapping System (IMS) are considered. Figure 9a exemplifies the original data, which are provided in NetCDF format on a polar stereographic projection. Figure 9b displays the time series of the IMS surface value at the QIQ station over November and December 2024, which matches well with the elevated ρ time series, thus confirming the hypothesis.

Figure 9. (a) The NIC IMS map (in polar stereographic projection) of 24 November 2024, with the QIQ station marked with a white circle. (b) The time series of the IMS surface value at the QIQ station, from November 2024 to March 2025. (c) The scatter plot of xS against yH for all days with snow cover.

The next insight concerns the monthly constant dynamic range employed by this version of the Heliosat-2 algorithm. In December, for instance, the lower bound ρL is too low, resulting in overestimated ν and underestimated κ, as depicted in Fig. 8b and c. In contrast, ρL in February is too high, resulting in numerous cases with negative ν (i.e., ρ < ρL), which are unphysical. According to the Heliosat-2 computation, these cases would return κ=1.2, which is, again, extremely rare in reality. Overall, as evidenced by Fig. 9c, which plots the (xS,yH) scatter for all days with snow cover, snow cover causes this version of the Heliosat-2 algorithm to severely underestimate the irradiance. In other words, one may interpret this phenomenon as a case of cloud misidentification, in that the algorithm is unable to distinguish the bright snow-covered surface from clouds. To remedy this algorithmic pitfall, the bounds of the dynamic range ought to be determined in a rolling fashion, as emphasized by Perez et al. (2002) in an early publication. The difficulty, however, is computational: The iterative method for dynamic range determination is too demanding for the full-disk area, which motivates investigation into more efficient sorting algorithms.

Whereas the algorithmic limitations of the statistical product can be identified, the physical product is much more difficult to diagnose, given the complexity of the physical retrieval process. Therefore, only a simple visualization that reveals some product quality issues in winter is made, alongside a discussion of potential causes. Figure 10 shows the time series of xP, yH, and the McClear clear-sky irradiance in February 2025. Although the underestimation by the product is evident, it is fairly consistent throughout the month. From the curves for 8, 9, and 13 February, one may conclude that the product captures the effect of clouds on irradiance to a good extent, resulting in ramp rates similar to those of the observations. Instead, it is the underestimation of the clear-sky irradiance (see, e.g., 4, 12, and 22 February) that degrades the product quality. As clear-sky irradiance is primarily determined by the extraterrestrial irradiance, the sun–earth geometry, aerosols, and water vapor, with the former two being trivial to obtain, the underestimation is likely due to imprecise information on the latter two, which echoes our earlier hypothesis in Sect. 3.2. This version of the NSMC's algorithm adopts an “indirect” approach that takes the AGRI-observed channel reflectances as main inputs, with little reliance on level-2 products such as aerosol or water vapor. Hence, the inaccurate aerosol climatology used during retrieval might be the root cause of the systematic underestimation.

Validation of satellite-retrieved irradiance is ubiquitous in comparative studies concerning the quality of several products (e.g., Salazar et al., 2020; Marchand et al., 2018), as well as in studies investigating changes in the earth's radiation budget (e.g., Sanchez-Lorenzo et al., 2017; Zhang et al., 2015). Based on the validation results, various scientific assertions and conclusions are made. A critical yet frequently overlooked consideration concerns the reliability of observed inter-product variations or temporal radiation trends, which may be systematically distorted by the inherent limitations of the ground-based reference data employed in the validation process. To that end, this work formally investigates this issue by leveraging both high- and low-accuracy radiometric observations during the validation of two satellite-retrieved irradiance products for a location in a monsoon-influenced continental climate.

The contribution of this work is threefold. First, irradiance observations from a rarely reported climate regime (i.e., the Dwa Köppen climate) are presented, alongside two gridded irradiance products from the latest Chinese FY-4B weather satellite. Through rigorous analysis, we have identified a fundamental limitation of the operational physical retrieval algorithm implemented by NSMC: Its systematic underestimation of irradiance under clear-sky conditions reveals significant shortcomings in algorithmic maturity and implementation, particularly in terms of the quality of the atmospheric parameters intermediate to irradiance retrieval, such as aerosol optical depth or water vapor. Conversely, the statistical retrieval algorithm yields demonstrably superior results compared to the official NSMC product, at least for the geographical location under examination. However, the Heliosat-2 algorithm suffers substantial performance degradation under snow-covered conditions, in which the high surface albedo observed by the imager might be wrongly attributed to clouds.

The second contribution resides in the novel application of the distribution-oriented validation framework, which was originally proposed to verify weather forecasts. In contrast to measure-oriented validation, which is limited by the choice of often confounding performance measures, the visual and quantitative assessments of the joint, marginal, and conditional distributions of retrieval and observation allow a more comprehensive understanding of various aspects of product quality, including bias, accuracy, association, calibration, resolution, and discrimination (cf. Tables 3–5). The main takeaway is that even if a certain product is found to be strictly inferior in terms of bias and accuracy, it may still possess advantages in other aspects of quality (cf. Table 6). Such complementarity between two or more products motivates combining or merging them, as demonstrated in Table 8, which led to a 4 % improvement in accuracy in this particular case.

Third, in regard to the quality of ground truth, the accumulated empirical evidence demonstrates conclusively that employing low-accuracy observations for irradiance retrieval validation introduces measurable deviations from what may be properly regarded as the “true” values, that is to say, those values obtained through high-accuracy observational methods. Given the large sample size, these discrepancies represent actual differences in the validation outcomes rather than artifacts of measurement uncertainty. It must be highlighted that the deviations from the “true” value are not unidirectional, i.e., using the low-accuracy observations can yield both overconfident and underconfident results, depending on the particular metric under consideration. Moreover, the magnitude of the deviations caused by the low-accuracy observations is quite substantial, reaching several W m−2 or a few percent. Indeed, numerous prior conclusions, such as the assertion that “satellite products overestimated radiation by approximately 10 W m−2” by Zhang et al. (2015), rest upon differentials of comparable scale. The present finding thus necessitates rigorous methodological caution: Any validation exercise employing low-accuracy observations must interpret apparent results with care, recognizing the substantial uncertainty introduced by the observational limitations themselves.

In a nutshell, the Heliosat-2 algorithm (Rigollier et al., 2004) first estimates the cloud index, ν, from the satellite-observed reflectance, and then converts it to the clear-sky index, κ, via

where model parameters α=0.9, a0=1.008, , b0=2.8935, , and b2=2.3499 are determined empirically from data, in this case, observations from 38 ground-based stations in China (Huang et al., 2023). On the other hand, the determination of ν follows

where ρ is the satellite-observed reflectance after sun-to-surface and surface-to-satellite geometric corrections, and ρL and ρH are the lower and upper bounds of the dynamic range, derived from a sufficiently large collection of ρ values (e.g., over a time period or over an area; see below). Physically, ρL corresponds to the expected clear-sky reflectance, whereas ρH corresponds to the expected reflectance of the brightest clouds.
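In the standard Heliosat-2 formulation (Rigollier et al., 2004), the cloud index scales ρ linearly between the two bounds, so that ν≈0 for clear-sky reflectance and ν≈1 for the brightest clouds. A minimal vectorized sketch, assuming the reflectances have already been geometrically corrected; the function name is hypothetical:

```python
import numpy as np


def cloud_index(rho, rho_l, rho_h):
    """Heliosat-2-style cloud index: linear position of rho between rho_l and rho_h.

    rho may be a scalar or an array of geometrically corrected reflectances; rho_l
    (clear-sky bound) and rho_h (brightest-cloud bound) may be scalars or arrays
    that broadcast against rho. Values outside [0, 1] are returned unclipped.
    """
    rho = np.asarray(rho, dtype=float)
    return (rho - rho_l) / (rho_h - rho_l)
```

No clipping is applied here, because how out-of-range ν values are handled is governed by the κ(ν) relation used in the conversion step.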

In this version of the Heliosat-2 algorithm, ρH is determined first. More specifically, for each month, all samples of ρ over China with a zenith angle Z<80° are gathered and sorted, and their 95 % quantile is set as ρH. In other words, a single ρH value applies to all of China in a given month. In contrast, ρL is determined pixel by pixel using an iterative algorithm. Denoting the sorted samples of ρ from a specific pixel and a specific month with Z<75° as ρi, with i=1, …, n, a threshold t(1) is obtained via

Subsequently, all ρi's that exceed threshold t(1) are removed, which results in n(1) remaining samples. In the second iteration, one proceeds with calculating

and ρi's that exceed threshold t(2) are removed. The iteration continues in this manner until all remaining samples ρi are below the threshold, at which point their average is taken as ρL.
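The two bound-estimation steps can be sketched as follows. The ρH step follows the text directly (monthly 95 % quantile of all samples with Z<80°). For ρL, the threshold formula t(k) is not reproduced here, so the sketch takes the threshold as a user-supplied function; whatever function is passed in (for instance, the mean plus one standard deviation of the retained samples) is a placeholder assumption, not the rule used in this version of Heliosat-2. All function names are hypothetical.

```python
from typing import Callable

import numpy as np


def monthly_rho_h(rho, zenith_deg, quantile=0.95, max_zenith=80.0):
    """Upper bound rho_H: a quantile of all samples (one month, whole domain) with Z < max_zenith."""
    rho = np.asarray(rho, dtype=float)
    zenith_deg = np.asarray(zenith_deg, dtype=float)
    return float(np.quantile(rho[zenith_deg < max_zenith], quantile))


def pixel_rho_l(
    rho,
    zenith_deg,
    threshold: Callable[[np.ndarray], float],
    max_zenith=75.0,
    max_iter=100,
):
    """Lower bound rho_L for one pixel and one month via iterative trimming.

    `threshold` maps the currently retained samples to t(k) (a placeholder for the
    paper's rule). Samples exceeding t(k) are discarded, and the loop stops once no
    retained sample exceeds the threshold; the mean of what remains is returned.
    """
    rho = np.asarray(rho, dtype=float)
    zenith_deg = np.asarray(zenith_deg, dtype=float)
    samples = np.sort(rho[zenith_deg < max_zenith])
    if samples.size == 0:
        raise ValueError("no samples satisfy the zenith-angle criterion")
    for _ in range(max_iter):
        t = threshold(samples)
        exceeding = samples > t
        if not exceeding.any():  # no remaining sample exceeds t(k): stop
            break
        if exceeding.all():  # guard against a threshold that would discard everything
            raise ValueError("threshold removed all samples")
        samples = samples[~exceeding]
    return float(samples.mean())
```

A call such as `pixel_rho_l(rho, zenith, lambda s: s.mean() + s.std())` runs the loop with a purely illustrative threshold; substituting the actual t(k) expression is all that is needed to match the algorithm described above.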

The expectation terms in Eqs. (6) and (7) are not straightforward to compute, because they involve estimating conditional means over the support, i.e., all possible x values with f(x)>0. In this work, kernel conditional density estimation (KCDE) is used to estimate 𝔼(Y|X) and 𝔼(X|Y). Denoting the conditional distribution of observation given retrieval as f(y|x), its kernel conditional density estimator is

$$\hat{f}(y \mid x) = \sum_{i=1}^{n} w_i(x)\,\frac{1}{h_y}\,K\!\left(\frac{y - y_i}{h_y}\right),$$

where K(⋅) is the kernel function of choice, hy is the unknown bandwidth of the kernel, and wi(x) is given by
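
$$w_i(x) = \frac{K\!\left(\frac{x - x_i}{h_x}\right)}{\sum_{j=1}^{n} K\!\left(\frac{x - x_j}{h_x}\right)},$$
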
where hx is another unknown bandwidth. Following Hyndman et al. (1996), the conditional mean estimator at arbitrary x0 is
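
$$\hat{\mathbb{E}}(Y \mid X = x_0) = \int y\,\hat{f}(y \mid x_0)\,\mathrm{d}y = \sum_{i=1}^{n} w_i(x_0)\,y_i.$$
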
In this work, the Gaussian kernel is used without loss of generality, and hx and hy are both set to 10, following some empirical trial-and-error. Once is computed for all xi's, can be computed via

The other expectations can be computed in a similar fashion.
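A minimal sketch of the conditional-mean step, assuming the Gaussian kernel and hx=10 stated above. For a symmetric, zero-mean kernel, integrating y against the kernel conditional density estimate reduces the conditional mean to a kernel-weighted average of the yi (a Nadaraya–Watson-type estimator), so hy does not enter this particular expectation. The function names are hypothetical, and the pairwise implementation uses O(n²) memory, so large samples would need to be processed in chunks.

```python
import numpy as np


def gaussian_kernel(u):
    """Standard normal density used as the kernel K(.)."""
    return np.exp(-0.5 * np.square(u)) / np.sqrt(2.0 * np.pi)


def conditional_mean_at(x_eval, x, y, h_x=10.0):
    """Kernel estimate of E(Y | X = x_eval) for each point in x_eval.

    Row j of the weight matrix holds K((x_eval[j] - x_i) / h_x), normalized over i
    so that each row sums to one; the estimate is the weighted average of y.
    If an evaluation point lies many bandwidths away from every x_i, the row sum
    underflows to zero and the result for that point is NaN.
    """
    x_eval = np.atleast_1d(np.asarray(x_eval, dtype=float))
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    k = gaussian_kernel((x_eval[:, None] - x[None, :]) / h_x)
    weights = k / k.sum(axis=1, keepdims=True)
    return weights @ y
```

With retrievals `x` and observations `y`, `conditional_mean_at(x, x, y)` gives the estimates at every xi; swapping the roles, `conditional_mean_at(y, y, x, h_x=10.0)` gives the corresponding estimates of 𝔼(X|Y) at every yi.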

Four datasets are used in this work: two sets of ground-based radiometric observations and two sets of satellite-retrieved irradiance derived from L1 Fengyun-4B products. The high-accuracy ground-based radiometric observations can be obtained from the official website of the Baseline Surface Radiation Network (https://bsrn.awi.de/data/data-retrieval-via-ftp/, last access: 7 May 2025). The low-accuracy ground-based radiometric observations are not publicly available; they were obtained from the China Meteorological Administration upon request. L1 Fengyun-4B products are available from the FENGYUN Satellite Data Service (https://satellite.nsmc.org.cn/DataPortal/en/data/structure.html, last access: 7 May 2025). All code will be made available upon request.

Yu Chen: Conceptualization, Methodology, Software, Validation, Investigation, Writing – original draft. Dazhi Yang: Conceptualization, Methodology, Software, Resources, Writing – original draft, Visualization, Supervision, Project administration, Funding acquisition. Chunlin Huang: Data curation, Formal analysis, Investigation, Writing – review & editing. Hongrong Shi: Data curation, Formal analysis, Writing – review & editing. Adam R. Jensen: Formal analysis, Visualization, Writing – review & editing. Xiang'ao Xia: Validation, Project administration, Writing – review & editing. Yves-Marie Saint-Drenan: Methodology, Visualization, Validation, Writing – review & editing. Christian A. Gueymard: Validation, Writing – review & editing. Martin János Mayer: Formal analysis, Investigation, Writing – review & editing. Yanbo Shen: Methodology, Supervision, Writing – review & editing.

The contact author has declared that none of the authors has any competing interests.

Publisher's note: Copernicus Publications remains neutral with regard to jurisdictional claims made in the text, published maps, institutional affiliations, or any other geographical representation in this paper. While Copernicus Publications makes every effort to include appropriate place names, the final responsibility lies with the authors. Views expressed in the text are those of the authors and do not necessarily reflect the views of the publisher.

This work is supported in part by the National Natural Science Foundation of China (No. 42375192) and in part by the China Meteorological Administration Innovation and Development Special Project (No. CXFZ2025J045).

This research has been supported by the National Natural Science Foundation of China (grant no. 42375192) and China Meteorological Administration (grant no. CXFZ2025J045).

This paper was edited by Yuanjian Yang and reviewed by Wenjun Tang and two anonymous referees.

Alvo, M. and Yu, P. L. H.: Exploratory Analysis of Ranking Data, in: Statistical Methods for Ranking Data, Frontiers in Probability and the Statistical Sciences, Springer New York, 7–21, https://doi.org/10.1007/978-1-4939-1471-5, 2014.

Atiya, A. F.: Why does forecast combination work so well?, International Journal of Forecasting, 36, 197–200, https://doi.org/10.1016/j.ijforecast.2019.03.010, 2020.

Blaga, R., Sabadus, A., Stefu, N., Dughir, C., Paulescu, M., and Badescu, V.: A current perspective on the accuracy of incoming solar energy forecasting, Progress in Energy and Combustion Science, 70, 119–144, https://doi.org/10.1016/j.pecs.2018.10.003, 2019.

Bright, J. M.: Solcast: Validation of a satellite-derived solar irradiance dataset, Solar Energy, 189, 435–449, https://doi.org/10.1016/j.solener.2019.07.086, 2019.

Chen, S., Liang, Z., Guo, S., and Li, M.: Estimation of high-resolution solar irradiance data using optimized semi-empirical satellite method and GOES-16 imagery, Solar Energy, 241, 404–415, https://doi.org/10.1016/j.solener.2022.06.013, 2022.

Driemel, A., Augustine, J., Behrens, K., Colle, S., Cox, C., Cuevas-Agulló, E., Denn, F. M., Duprat, T., Fukuda, M., Grobe, H., Haeffelin, M., Hodges, G., Hyett, N., Ijima, O., Kallis, A., Knap, W., Kustov, V., Long, C. N., Longenecker, D., Lupi, A., Maturilli, M., Mimouni, M., Ntsangwane, L., Ogihara, H., Olano, X., Olefs, M., Omori, M., Passamani, L., Pereira, E. B., Schmithüsen, H., Schumacher, S., Sieger, R., Tamlyn, J., Vogt, R., Vuilleumier, L., Xia, X., Ohmura, A., and König-Langlo, G.: Baseline Surface Radiation Network (BSRN): structure and data description (1992–2017), Earth Syst. Sci. Data, 10, 1491–1501, https://doi.org/10.5194/essd-10-1491-2018, 2018.

Elias, T., Ferlay, N., Chesnoiu, G., Chiapello, I., and Moulana, M.: Regional validation of the solar irradiance tool SolaRes in clear-sky conditions, with a focus on the aerosol module, Atmos. Meas. Tech., 17, 4041–4063, https://doi.org/10.5194/amt-17-4041-2024, 2024.

Forstinger, A., Wilbert, S., Kraas, B., Peruchena, C. F., Gueymard, C. A., Collino, E., Ruiz-Arias, J. A., Polo Martinez, J., Saint-Drenan, Y.-M., Ronzio, D., Hanrieder, N., Jensen, A. R., and Yang, D.: Expert quality control of solar radiation ground data sets, in: Solar World Congress 2021, 1–12, International Solar Energy Society, Virtual conference, https://doi.org/10.18086/swc.2021.38.02, 2021.

Gneiting, T.: Making and Evaluating Point Forecasts, Journal of the American Statistical Association, 106, 746–762, https://doi.org/10.1198/jasa.2011.r10138, 2011.

Habte, A., Sengupta, M., Andreas, A., Wilcox, S., and Stoffel, T.: Intercomparison of 51 radiometers for determining global horizontal irradiance and direct normal irradiance measurements, Solar Energy, 133, 372–393, https://doi.org/10.1016/j.solener.2016.03.065, 2016.

Huang, C., Shi, H., Yang, D., Gao, L., Zhang, P., Fu, D., Xia, X., Chen, Q., Yuan, Y., Liu, M., Hu, B., Lin, K., and Li, X.: Retrieval of sub-kilometer resolution solar irradiance from Fengyun-4A satellite using a region-adapted Heliosat-2 method, Solar Energy, 264, 112038, https://doi.org/10.1016/j.solener.2023.112038, 2023.

Huang, C., Shi, H., Yang, G., Xia, X., Yang, D., Fu, D., Gao, L., Zhang, P., Hu, B., Chen, Y., and Chen, Q.: Autonomous solar resourcing in China with Fengyun-2, Solar Energy, 296, 113593, https://doi.org/10.1016/j.solener.2025.113593, 2025.

Huang, G., Li, X., Huang, C., Liu, S., Ma, Y., and Chen, H.: Representativeness errors of point-scale ground-based solar radiation measurements in the validation of remote sensing products, Remote Sensing of Environment, 181, 198–206, https://doi.org/10.1016/j.rse.2016.04.001, 2016.

Huang, G., Liang, S., Lu, N., Ma, M., and Wang, D.: Toward a broadband parameterization scheme for estimating surface solar irradiance: Development and preliminary results on MODIS products, Journal of Geophysical Research: Atmospheres, 123, 12180–12193, https://doi.org/10.1029/2018JD028905, 2018.

Huang, G., Li, Z., Li, X., Liang, S., Yang, K., Wang, D., and Zhang, Y.: Estimating surface solar irradiance from satellites: Past, present, and future perspectives, Remote Sensing of Environment, 233, 111371, https://doi.org/10.1016/j.rse.2019.111371, 2019.

Huttunen, J., Kokkola, H., Mielonen, T., Mononen, M. E. J., Lipponen, A., Reunanen, J., Lindfors, A. V., Mikkonen, S., Lehtinen, K. E. J., Kouremeti, N., Bais, A., Niska, H., and Arola, A.: Retrieval of aerosol optical depth from surface solar radiation measurements using machine learning algorithms, non-linear regression and a radiative transfer-based look-up table, Atmos. Chem. Phys., 16, 8181–8191, https://doi.org/10.5194/acp-16-8181-2016, 2016.

Hyndman, R. J., Bashtannyk, D. M., and Grunwald, G. K.: Estimating and visualizing conditional densities, Journal of Computational and Graphical Statistics, 5, 315–336, https://doi.org/10.1080/10618600.1996.10474715, 1996.

Jensen, A. R., Sifnaios, I., Anderson, K. S., and Gueymard, C. A.: SolarStations.org – A global catalog of solar irradiance monitoring stations, Solar Energy, 295, 113457, https://doi.org/10.1016/j.solener.2025.113457, 2025.

Kosmopoulos, P. G., Kazadzis, S., Taylor, M., Raptis, P. I., Keramitsoglou, I., Kiranoudis, C., and Bais, A. F.: Assessment of surface solar irradiance derived from real-time modelling techniques and verification with ground-based measurements, Atmos. Meas. Tech., 11, 907–924, https://doi.org/10.5194/amt-11-907-2018, 2018.

Lefèvre, M., Oumbe, A., Blanc, P., Espinar, B., Gschwind, B., Qu, Z., Wald, L., Schroedter-Homscheidt, M., Hoyer-Klick, C., Arola, A., Benedetti, A., Kaiser, J. W., and Morcrette, J.-J.: McClear: a new model estimating downwelling solar radiation at ground level in clear-sky conditions, Atmos. Meas. Tech., 6, 2403–2418, https://doi.org/10.5194/amt-6-2403-2013, 2013.

Liou, K.-N.: An Introduction to Atmospheric Radiation, vol. 84, Elsevier, https://doi.org/10.1016/S0074-6142(13)62908-3, 2002.

Liu, B., Yang, D., Wang, Z., Xia, X., Qiu, H., and Shen, Y.: On the closure relationship among shortwave radiometric measurements under a cold climate during winter, Solar Energy, 285, 113119, https://doi.org/10.1016/j.solener.2024.113119, 2025.

Long, C. N. and Shi, Y.: An automated quality assessment and control algorithm for surface radiation measurements, The Open Atmospheric Science Journal, 2, https://doi.org/10.2174/1874282300802010023, 2008.

Marchand, M., Ghennioui, A., Wey, E., and Wald, L.: Comparison of several satellite-derived databases of surface solar radiation against ground measurement in Morocco, Adv. Sci. Res., 15, 21–29, https://doi.org/10.5194/asr-15-21-2018, 2018.

Mayer, M. J. and Yang, D.: Calibration of deterministic NWP forecasts and its impact on verification, International Journal of Forecasting, 39, 981–991, https://doi.org/10.1016/j.ijforecast.2022.03.008, 2023.

Murphy, A. H.: What is a good forecast? An essay on the nature of goodness in weather forecasting, Weather and Forecasting, 8, 281–293, https://doi.org/10.1175/1520-0434(1993)008<0281:WIAGFA>2.0.CO;2, 1993.

Murphy, A. H. and Winkler, R. L.: A general framework for forecast verification, Monthly Weather Review, 115, 1330–1338, https://doi.org/10.1175/1520-0493(1987)115<1330:AGFFFV>2.0.CO;2, 1987.

Ohmura, A., Dutton, E. G., Forgan, B., Fröhlich, C., Gilgen, H., Hegner, H., Heimo, A., König-Langlo, G., McArthur, B., Müller, G., Philipona, R., Pinker, R., Whitlock, C. H., Dehne, K., and Wild, M.: Baseline Surface Radiation Network (BSRN/WCRP): New precision radiometry for climate research, Bulletin of the American Meteorological Society, 79, 2115–2136, https://doi.org/10.1175/1520-0477(1998)079<2115:BSRNBW>2.0.CO;2, 1998.

Perez, R., Ineichen, P., Moore, K., Kmiecik, M., Chain, C., George, R., and Vignola, F.: A new operational model for satellite-derived irradiances: Description and validation, Solar Energy, 73, 307–317, https://doi.org/10.1016/S0038-092X(02)00122-6, 2002.

Perez, R., Aguiar, R., Collares-Pereira, M., Dumortier, D., Estrada-Cajigal, V., Gueymard, C. A., Ineichen, P., Littlefair, P., Lund, H., Michalsky, J., Olseth, J. A., Renné, D., Rymes, M., Skartveit, A., Vignola, F., and Zelenka, A.: Solar resource assessment: A review, in: Solar Energy: The State of the Art, 1st edn., edited by: Gordon, J. M., Routledge, p. 79, https://doi.org/10.4324/9781315074412, 2013.

Polo, J., Wilbert, S., Ruiz-Arias, J. A., Meyer, R., Gueymard, C., Šúri, M., Martín, L., Mieslinger, T., Blanc, P., Grant, I., Boland, J., Ineichen, P., Remund, J., Escobar, R., Troccoli, A., Sengupta, M., Nielsen, K. P., Renne, D., Geuder, N., and Cebecauer, T.: Preliminary survey on site-adaptation techniques for satellite-derived and reanalysis solar radiation datasets, Solar Energy, 132, 25–37, https://doi.org/10.1016/j.solener.2016.03.001, 2016.