the Creative Commons Attribution 4.0 License.

Field intercomparison of prevailing sonic anemometers

Matthias J. Zeeman

Three-dimensional sonic anemometers are the core component of eddy covariance systems, which are widely used for micrometeorological and ecological research. In order to characterize the measurement uncertainty of these instruments we present and analyse the results from a field intercomparison experiment of six commonly used sonic anemometer models from four major manufacturers. These models include Campbell CSAT3, Gill HS-50 and R3, METEK uSonic-3 Omni, R. M. Young 81000 and 81000RE. The experiment was conducted over a meadow at the TERENO/ICOS site DE-Fen in southern Germany over a period of 16 days in June of 2016 as part of the ScaleX campaign. The measurement height was 3 m for all sensors, which were separated by 9 m from each other, each on its own tripod, in order to limit contamination of the turbulence measurements by adjacent structures as much as possible. Moreover, the high-frequency data from all instruments were treated with the same post-processing algorithm. In this study, we compare the results for various turbulence statistics, which include mean horizontal wind speed, standard deviations of vertical wind velocity and sonic temperature, friction velocity, and the buoyancy flux. Quantitative measures of uncertainty, such as bias and comparability, are derived from these results. We find that biases are generally very small for all sensors and all computed variables, except for the sonic temperature measurements of the two Gill sonic anemometers (HS and R3), confirming a known transducer-temperature dependence of the sonic temperature measurement. The best overall agreement between the different instruments was found for the mean wind speed and the buoyancy flux.

Although sonic anemometers have been used extensively for several decades in micrometeorological and ecological research, there is still some scientific debate about the measurement uncertainty of these instruments. This is due to the fact that an absolute reference for the measurement of turbulent wind fluctuations in the free atmosphere does not exist. Traditionally, two approaches have been applied to evaluate the performance of sonic anemometers, either by placing them in a wind tunnel and testing them for different flow angles or by putting different instruments next to each other in the field over a homogeneous surface, so that all of them can be expected to measure the same wind velocities and turbulence statistics. The first approach has the advantage that the true flow characteristics are well known; however, the characteristics of the flow deviate far from those in the turbulent atmospheric surface layer where sonic anemometers are typically deployed. Reynolds numbers in a wind tunnel, for instance, are several orders of magnitude smaller than under natural conditions. In contrast, the second intercomparison approach has the disadvantage that it lacks an uncontested reference; however, such field experiments allow the simultaneous evaluation of several instruments under real-world conditions. In other words, the first approach has a high internal validity while the second approach has a high external validity.

Wind-tunnel experiments have been an important milestone towards revealing and quantifying probe-induced flow distortion effects. One of the first wind-tunnel tests including a correction equation for flow distortion effects is reported by Kaimal (1979). Considering the results of another wind-tunnel study about a three-dimensional hot-wire anemometer, Högström (1982) stressed the importance of such tests for all turbulence sensors, and wind-tunnel experiments soon became a standard method for optimizing and calibrating sonic anemometers. Subsequently, Zhang et al. (1986) developed a new sonic anemometer based on measurements from the wind tunnel, which inspired the design of the Campbell CSAT3. A further wind-tunnel calibration for the Gill Solent R2 sonic anemometer is presented by Grelle and Lindroth (1994).

However, researchers soon realized that the transferability of wind-tunnel experiments to field conditions is limited. A very interesting comparative wind-tunnel study about several sonic anemometers (Gill Solent, METEK USA-1, Kaijo Denki TR-61A, TR-61B, and TR-61C) is conducted by Wieser et al. (2001). They evaluate flow distortion correction algorithms provided by the respective manufacturers and come to the following conclusion: “Because of the very low level of turbulence in the wind tunnel (no fences or trip devices have been used), the size and stability of vortices set up behind struts may be increased in comparison with field measurements” (Wieser et al., 2001). Moreover, Högström and Smedman (2004) present a critical assessment of laminar wind-tunnel calibrations by using a hot-film instrument as reference during a field experiment over a flat and level coastal area with very low vegetation. Their results indicate that wind-tunnel-based corrections might be overcorrecting, or at least do not improve the comparison with the reference measurement of turbulence statistics.

Despite these known limitations, more extensive wind-tunnel calibration studies were conducted, which led to the publication of the so-called angle-of-attack correction for Gill Solent R2 and R3 (van der Molen et al., 2004; Nakai et al., 2006). However, it is often overlooked that angle-of-attack-dependent errors might partially be an artefact of wind-tunnel experiments, because in quasi-laminar wind-tunnel flows the angle-of-attack remains constant. In contrast, the flow distortion caused by the same geometrical structure is much smaller under turbulent conditions, when the three-dimensional wind vector and the corresponding flow angles fluctuate constantly (Huq et al., 2017).

In order to address concerns about the validity of these wind-tunnel-based calibrations, the angle-of-attack-based flow distortion concept was investigated in the field under natural turbulent conditions. Nakai and Shimoyama (2012) mounted several Gill WindMaster instruments at different angles next to each other above a short grass canopy, and Kochendorfer et al. (2012) conducted a very similar field experiment focusing on RM Young Model 81000 anemometers, while the Campbell CSAT3 was only briefly examined. It has to be noted that the results of these two studies were interpreted under the false assumption that the instantaneous wind vector remains unchanged between different instruments that are mounted more than 1 m apart, which contradicts the concept of a fluctuating turbulent flow with a certain decay of the spatial autocorrelation function (Kochendorfer et al., 2013; Mauder, 2013).

However, such side-by-side comparisons with different alignment of the same instrument can be quite instructive, as long as only turbulence statistics are analysed, which can indeed be considered to be similar across several metres over homogeneous surfaces. Although their study site is less than ideal for a field intercomparison (over a sloped forest canopy within the roughness sublayer), Frank et al. (2013) found that non-orthogonally positioned transducers can underestimate vertical wind velocity (w) and sensible heat flux (H), by comparing the output of two pairs of CSAT3 anemometers while one pair was rotated by 90∘. This finding was substantiated in a follow-up study (Frank et al., 2016), which also covers a side-by-side comparison of two CSAT3 mounted at different alignment angles plus two sonic anemometers with an orthogonal transducer array and a CSAT3 with one vertical path. An elaborate statistical analysis leads them to the following conclusion: “Though we do not know the exact functional form of the shadow correction, we determined that the magnitude of the correction is probably somewhere between the Kaimal and double-Kaimal correction” (Frank et al., 2016), referring to the original work of Kaimal (1979).

In a parallel chain of events, international turbulence comparison experiments (ITCEs) have been carried out at different places around the world since the early days of sonic anemometry used for micrometeorological field campaigns (Dyer et al., 1982; Miyake et al., 1971; Tsvang et al., 1973, 1985), mostly with the aim of investigating the comparability of different instrumental designs. Typically, relative differences were analysed based on those comparative datasets, which generally suffer from the lack of a “true” reference measurement or etalon, but those experiments have the advantage that many anemometer models can be tested at once under real-world conditions. Nevertheless, absolute biases were also sometimes detected, such as the flow distortion from supporting structures, which was deduced from a non-zero mean vertical wind speed during the 1976 ITCE, especially for geometries with a supporting rod directly underneath the measurement volume (Dyer, 1981).

In those early ITCEs, mostly custom-made instruments were tested. However, since the beginning of the 1990s, a growing number of commercial sonic anemometer models have become available from a number of manufacturers. Based on their field intercomparison experiments, Foken and Oncley (1995) classified all instruments commonly used at the time according to their expected errors into those that are suitable for fundamental turbulence research and those that are sufficient for general flux measurements. About one decade later, several then-popular models were compared in a thorough and comprehensive study by Loescher et al. (2005). They tested eight different probes for the accuracy of their temperature measurement in a climate chamber; they investigated biases of the w measurement in a low-speed wind tunnel, and investigated differences in the turbulence statistics measured in the field. At about the same time, Mauder et al. (2007) conducted a field intercomparison of seven different sonic anemometers as part of the international energy balance closure experiment EBEX-2000 above a cotton field in California. Both studies more or less confirmed the classification of Foken and Oncley (1995), who concluded that only the directional probes without supporting structure directly underneath the measurement volume meet the highest requirements of turbulence research, while no significant deviations between those top-class instruments were detected.

The persisting lack of energy balance closure at many sites around the world (Stoy et al., 2013) and the emerging indications of a general flux underestimation of non-orthogonal sonic arrays (Frank et al., 2013) were the primary motivation of a special field experiment by Horst et al. (2015). They conducted an intercomparison at an almost ideal site, which was flat, even and with a homogeneous fetch. Two CSAT3 representing a typical non-orthogonal sensor were compared against two different orthogonal probes manufactured by Applied Technologies Inc. and one custom-made CSAT3 with one vertical path. Under the assumption that the flow-distortion correction of Kaimal (1979) is correct, they state that the CSAT3 requires a correction of 3 to 5 %. This is in quite good agreement with the conclusion of Frank et al. (2016), who suggest a correction of the magnitude between Kaimal and double Kaimal, and the numerical study of Huq et al. (2017), which found an underestimation of 3 to 7 %. Thus, at least for the CSAT3, some consensus is emerging about the magnitude of the correction required under turbulent conditions in the field.

Although the results on measurement error are converging for the CSAT3 model, less is known about the comparability between different sonic anemometer models available today. As the last comprehensive intercomparison experiments were conducted more than 10 years ago, and some new models have emerged on the market since then and some others have received firmware upgrades, we believed that it was time for another field intercomparison covering commonly used sonic anemometers. We deployed six different models from four different manufacturers next to each other over a short grass canopy. Furthermore, two CSAT3 were tested simultaneously in order to compare the influence of transducer rain guards. An orthogonal regression analysis is applied to the turbulence statistics obtained from the different instruments, and quantitative measures of uncertainty, such as bias and comparability (RMSE), are derived.

2.1 Field experiment

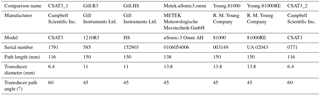

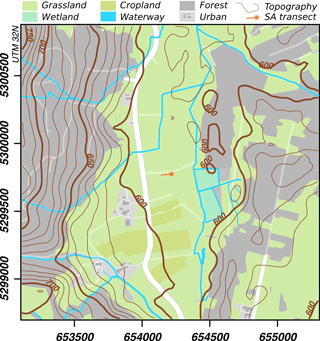

This sonic anemometer intercomparison experiment took place at the Fendt field site in southern Germany (DE-Fen; 47.8329∘ N 11.0607∘ E; 595 m a.s.l.), which belongs to the German Terrestrial Environmental Observatories (TERENO) network. The measurement period was from 6 to 22 June 2016, and the intercomparison was conducted as part of the multi-scale field campaign ScaleX (Wolf et al., 2017), where the sonic anemometers were subsequently deployed at different locations. The landscape surrounding the site comprises gentle hills that are partially covered by forest (Fig. 1), and the land cover within the footprint consisted of grassland with a canopy height of 0.25 m (Zeeman et al., 2017). The aerodynamic roughness length was estimated to be 0.03 m. In this field experiment, we compared seven sonic anemometers from four different manufacturers. A detailed list of all participating instruments is provided in Table 1.

Figure 1. Location of the sonic anemometer (SA) transect at the DE-Fen field. Map modified from Fig. 1 in Zeeman et al. (2017).

Since the dominant wind direction is north for this site on typical summer days due to a thermal circulation between the Alps and the Alpine foreland (Lugauer and Winkler, 2005), we set up all instrumented towers in a row from east to west. The sensors were separated by 9 m from each other in order to avoid flow distortion between neighbouring towers. The measurement height of all sonic anemometers was 3.0 m, and they were oriented towards the west (270∘) for all non-omnidirectional probes (Fig. 2). Data from all instruments were digitally recorded on synchronized single-board computers (BeagleBone Black, BeagleBoard.org Foundation, Oakland Twp, MI, USA), equipped with temperature-compensated clocks (Chronodot, Macetech LLC, Vancouver, WA, USA), using an event-driven protocol for recording data lines, implemented in the Python programming language.

The digital recording minimizes the influence of data cable properties on signal quality and minimizes the impact of loss of resolution by conversion between analogue and digital signals outside the scope of the sensor. Issues stemming from cable properties usually have a more apparent effect on digital than on analogue signal transmissions. In the case of a signal deterioration by oxidation of contacts or loosening cable connections, digitally transmitted data lines will start to show up in a corrupted format, while loss of signal resolution in analogue transmission may go unnoticed for some time. Therefore, the potential for added uncertainty to the observations recorded by analogue data transmission can in part be avoided by digital communications.

The sampling rate was 20 Hz, except for the CSAT3_2, which was sampled at 60 Hz, and the Gill_HS, which was sampled at 10 Hz. All other settings were left at the factory-recommended values, including flow-distortion corrections. The differences due to different firmware versions are quite well documented for the CSAT3. According to Burns et al. (2012), discrepancies between firmware versions 3 and 4 occur mostly for the sonic temperature measurement and they become significant for wind speeds larger than 8 m s−1. During our field campaign, wind speeds were mostly lower than 5 m s−1 (Fig. 4). Therefore, we do not expect large errors. Nevertheless, we used the same firmware version (ver4) for both CSAT3.

Figure 2. (a) Close-up pictures of all seven sonic anemometers; they are presented from left to right in the same order as they are listed in Table 1. (b) A photograph of the field intercomparison experiment; the micrometeorological installations of the TERENO/ICOS site DE-Fen can be seen in the background (left).

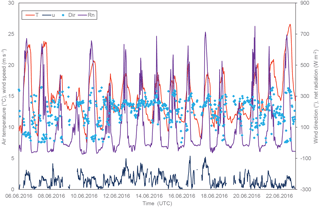

Figure 3 shows the meteorological conditions during the experiment. As expected for this site and for this time of the year, the dominant daytime wind direction was north. Wind speeds ranged between 0 and 5 m s−1. Air temperatures varied between 8 and 24 ∘C. Net radiation reached values up to 700 W m−2. On 8, 9 and 19 June, the cloud cover was rather dense all day. Most of the days were characterized by high net radiation, with maximum values larger than 500 W m−2. Nevertheless, rain also occurred on most days, with the exception of the first two days of the measurement period, 6–7 June, and the last day, 22 June. Overall, the conditions during this experiment can be considered typical of early summer in temperate climate zones.

2.2 Data processing

All data were processed using the TK3 software (Mauder and Foken, 2015) according to the processing scheme of Mauder (2013). More precisely, turbulent statistics were calculated using 30 min block averaging, after applying a spike removal algorithm on the high-frequency raw data. We applied the double-rotation method (Kaimal and Finnigan, 1994) and a spectral correction for path averaging according to Moore (1986). The compared turbulent quantities are defined as follows:

- U, the averaged total wind velocity after alignment of the coordinate system into the mean wind (after double rotation);

- Ts, the averaged sonic temperature;

- σw, the standard deviation of the vertical velocity component;

- σTs, the standard deviation of the sonic temperature;

- u*, the friction velocity calculated from both covariances between the two horizontal wind components and w;

- Hs, the buoyancy flux calculated from the air density ρ, the specific heat capacity at constant pressure cp and the covariance between w and Ts.
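The definitions above can be illustrated with a minimal Python sketch. It is not the TK3 implementation: spike removal and the spectral correction are omitted, the function names are hypothetical, and the default values for air density and heat capacity are placeholder assumptions. The friction velocity is written in the common form that combines both momentum covariances, which is assumed here to match the verbal definition in the list.

```python
import numpy as np

def double_rotation(u, v, w):
    """Rotate the wind components so that the mean of v and w vanishes."""
    # First rotation (yaw): align u with the mean horizontal wind.
    theta = np.arctan2(np.mean(v), np.mean(u))
    u1 = u * np.cos(theta) + v * np.sin(theta)
    v1 = -u * np.sin(theta) + v * np.cos(theta)
    # Second rotation (pitch): remove the mean vertical wind.
    phi = np.arctan2(np.mean(w), np.mean(u1))
    u2 = u1 * np.cos(phi) + w * np.sin(phi)
    w2 = -u1 * np.sin(phi) + w * np.cos(phi)
    return u2, v1, w2

def block_statistics(u, v, w, ts, rho=1.2, cp=1005.0):
    """Statistics for one averaging block (e.g. 30 min of high-frequency data).

    rho (kg m-3) and cp (J kg-1 K-1) are assumed placeholder values.
    """
    u, v, w = double_rotation(u, v, w)
    # Fluctuations around the block mean (block averaging).
    up, vp, wp, tp = (x - np.mean(x) for x in (u, v, w, ts))
    uw = np.mean(up * wp)
    vw = np.mean(vp * wp)
    return {
        "U": np.mean(u),
        "Ts": np.mean(ts),
        "sigma_w": np.std(w),
        "sigma_Ts": np.std(ts),
        "u_star": (uw**2 + vw**2) ** 0.25,
        "Hs": rho * cp * np.mean(wp * tp),
    }
```

After the double rotation, the mean of the rotated u component equals the magnitude of the mean wind vector, which is why U is described as the total wind velocity.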

These quantities were filtered for rain (during the respective half hour or the half hour before as recorded by a Vaisala WXT520 sensor of the nearby TERENO station DE-Fen), obstructed wind directions φ based on 30 min averages (; ) and non-steady-state conditions, i.e. data with Foken et al. (2004) steady-state test flags 4–9, considering the u* flag for all statistics concerning the pure wind measurements (U, σw, u*) and the sensible heat flux flag for all statistics that include sonic temperature (Ts, σTs, Hs).
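A possible way to express these three rejection criteria as a boolean mask over the 30 min intervals is sketched below. The function and argument names are hypothetical, and the default obstructed sector is an arbitrary placeholder, not the sector used in this study.

```python
import numpy as np

def quality_mask(rain, wind_dir, flag, obstructed=(60.0, 120.0)):
    """Return True for half-hour intervals that pass all three filters.

    rain       : True where rain was recorded in that half hour
    wind_dir   : 30 min mean wind direction in degrees
    flag       : steady-state test flag (1-9) relevant for the quantity
    obstructed : (from, to) sector in degrees; placeholder assumption
    """
    rain = np.asarray(rain, dtype=bool)
    wind_dir = np.asarray(wind_dir, dtype=float) % 360.0
    flag = np.asarray(flag)
    # Reject the rainy half hour itself and the one that follows it.
    rain_before = np.concatenate(([False], rain[:-1]))
    wet = rain | rain_before
    lo, hi = obstructed
    if lo <= hi:
        blocked = (wind_dir >= lo) & (wind_dir <= hi)
    else:
        # Sector that wraps through north, e.g. (330, 30).
        blocked = (wind_dir >= lo) | (wind_dir <= hi)
    # Flags 4-9 indicate non-steady-state conditions.
    unsteady = flag >= 4
    return ~(wet | blocked | unsteady)
```

In such a scheme the mask would be built twice, once with the u* flag for U, σw and u*, and once with the sensible heat flux flag for Ts, σTs and Hs.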

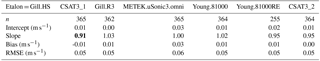

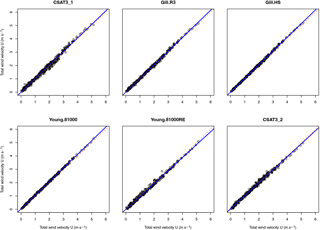

Figure 4. Comparison of the 30 min averaged total wind velocity measurements (etalon = METEK.uSonic.omni).

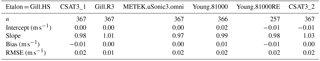

Table 2. Regression results for the comparison of mean total wind velocity U, plus estimates for bias and comparability (RMSE).

The reference instrument (etalon) was chosen for each compared quantity independently according to a principal component analysis (PCA) using the R function princomp. We selected the instrument with the highest loading on the first principal component. Although the Young.81000RE had received the highest loading, we selected the sonic anemometer with the second highest loading as etalon instead because the Young.81000RE time series only starts more than 3 days later on 10 June 2016, 14:00, due to technical issues at the beginning of the field experiment.
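The selection rule can be sketched in Python with NumPy as a rough analogue of the R workflow. This is not the authors' code. It assumes a covariance-based PCA on complete cases, with the etalon taken as the instrument with the largest absolute loading on the first component, because the sign of an eigenvector is arbitrary. The function names are hypothetical.

```python
import numpy as np

def first_pc_loadings(data):
    """Loadings of the first principal component.

    data: array of shape (n_intervals, n_instruments) without missing values.
    """
    centered = data - data.mean(axis=0)
    # Covariance matrix with divisor n.
    cov = centered.T @ centered / data.shape[0]
    # eigh returns eigenvalues in ascending order; the last column of the
    # eigenvector matrix belongs to the largest eigenvalue.
    _, eigvecs = np.linalg.eigh(cov)
    return eigvecs[:, -1]

def choose_etalon(data, names):
    """Pick the instrument with the largest absolute loading on PC1."""
    loadings = first_pc_loadings(data)
    return names[int(np.argmax(np.abs(loadings)))], loadings
```

When seven instruments record nearly the same signal, each loading has a magnitude close to 1/√7 ≈ 0.378, which is consistent with the loadings of about −0.38 reported in the following sections. The differences between candidate etalons are therefore small.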

For the statistical analysis of the intercomparison, an orthogonal Deming regression was applied in order to account for measurement errors in both x and y variables, using the R package mcr (Manuilova et al., 2014; R_Core_Team, 2016). Furthermore, we calculated the values for comparability, which is equivalent to the root mean square error (RMSE), and bias, which is the mean error for a certain measurement quantity.
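For illustration, the three measures can be written in a few lines of Python. This is a sketch and not the mcr implementation: it uses the closed-form solution of Deming regression for an error-variance ratio of one (orthogonal regression) and gives no confidence intervals. The function names are hypothetical.

```python
import numpy as np

def orthogonal_regression(x, y):
    """Slope and intercept of an orthogonal (Deming, ratio 1) regression."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    sxx = np.mean((x - x.mean()) ** 2)
    syy = np.mean((y - y.mean()) ** 2)
    sxy = np.mean((x - x.mean()) * (y - y.mean()))
    if sxy == 0.0:
        raise ValueError("x and y are uncorrelated; slope is undefined")
    slope = (syy - sxx + np.sqrt((syy - sxx) ** 2 + 4.0 * sxy**2)) / (2.0 * sxy)
    intercept = y.mean() - slope * x.mean()
    return slope, intercept

def bias(x, y):
    """Mean error of the tested instrument y relative to the etalon x."""
    return float(np.mean(np.asarray(y) - np.asarray(x)))

def comparability(x, y):
    """Root mean square error between tested instrument and etalon."""
    return float(np.sqrt(np.mean((np.asarray(y) - np.asarray(x)) ** 2)))
```

Unlike ordinary least squares, this slope is symmetric in the sense that swapping x and y gives the reciprocal slope, which suits a comparison in which neither instrument is error-free.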

3.1 Mean total wind velocity

For our comparison of the mean wind velocity measurements, the METEK.uSonic.omni was selected as etalon, because it received the highest loading (−0.3785) on the first principal component of our PCA. However, the loadings of the two Gill instruments and the Young.81000 are not much lower either. Hence, the two Gill anemometers and the Young.81000 compare slightly better with the etalon than the rest. Nevertheless, the agreement between the U measurements by all tested anemometers is generally very good, as can be seen from Fig. 4. This is also indicated by small regression intercepts (< 0.04 m s−1) and slopes close to one (1±0.03). In general, comparability values are smaller than 0.11 m s−1 and biases range between −0.05 and 0.06 m s−1 (Table 2). The agreement between the two CSAT3 is as good as the overall agreement between all tested instruments.

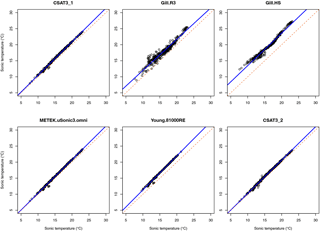

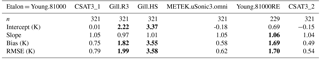

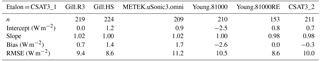

3.2 Mean sonic temperatures

The ultrasound-based temperature measurement is determined from the absolute time of flight as opposed to the differences in time of flight for the velocity measurement. Therefore, inaccuracies in path length due to inadvertent bending or varying electronic delays of the signal processing directly affect the accuracy of the measurement, and it is not surprising that the general agreement between different instruments is much worse for the sonic temperature than for the wind velocity. The Young.81000 received the highest loading (−0.3806) and was therefore chosen as etalon. Good agreement with this reference is found for the two CSAT3 and the METEK.uSonic.omni, which is indicated by values well below 1 K for bias and comparability. However, larger discrepancies occur for the two Gill sonic anemometers and the Young.81000RE. As can be seen from Fig. 5, the Young.81000RE sonic temperatures show a linear relationship with the etalon, so that the error of this instrument could be corrected by a simple regression equation using the coefficients provided in Table 3. In contrast, the sonic temperature measurements of the two Gill sensors show much more scatter and non-linearity in addition to a large bias, which is determined as 1.82 K for the Gill.R3 and 3.55 K for the Gill.HS. Therefore, the comparability values are also large with RMSE = 1.99 K for the Gill.R3 and 3.58 K for the Gill.HS.
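The different sensitivity of the two measurements to a timing error can be illustrated with a simplified single-path model in Python. It is a sketch under several assumptions: crosswind and humidity effects are neglected, dry-air constants are used, and the path length and delay in the usage note are hypothetical values that are not taken from any instrument specification.

```python
# Ratio of specific heats times the gas constant of dry air (J kg-1 K-1).
GAMMA_R = 1.4 * 287.05

def sonic_from_transit_times(t_up, t_down, path_length):
    """Along-path velocity, speed of sound and sonic temperature.

    t_down is the transit time of the pulse travelling with the wind,
    t_up the transit time of the pulse travelling against it (seconds).
    """
    # Velocity follows from the difference of the inverse transit times.
    v = 0.5 * path_length * (1.0 / t_down - 1.0 / t_up)
    # Speed of sound follows from their sum, i.e. from the absolute times.
    c = 0.5 * path_length * (1.0 / t_down + 1.0 / t_up)
    ts = c**2 / GAMMA_R
    return v, c, ts
```

For an assumed path of 0.15 m, a speed of sound of 343 m s−1 and a wind component of 2 m s−1, adding an uncorrected delay of 1 µs to both transit times changes the sonic temperature by more than 1 K in this model, while the velocity changes by only about 0.01 m s−1.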

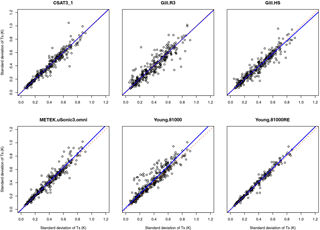

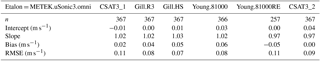

Figure 6. Comparison of the standard deviation of the vertical velocity component σw (etalon = Gill.HS).

3.3 Standard deviation of the vertical velocity component

An accurate and precise measurement of the standard deviation of the vertical velocity component is particularly important because the w fluctuations are required for the determination of any scalar flux by eddy covariance, including those fluxes that require the deployment of an additional sensor, such as an infrared gas analyser or other laser-based fast-response sensors. During our field experiment, σw values ranged between 0 and 0.7 m s−1. The Gill.HS anemometer was chosen as etalon for σw as it received the highest loading from our PCA (−0.3781). All other instruments agree very well with this reference, as can be seen from Fig. 6. Intercepts and biases are very small, ranging from −0.01 to 0.02 m s−1 (Table 4). Values for comparability are better than 0.02 m s−1 and the regression slopes are close to one (1±0.03).

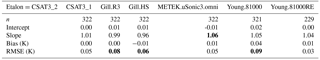

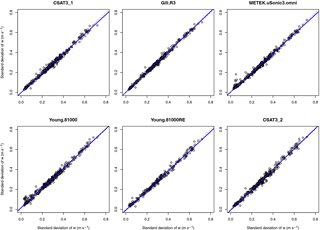

3.4 Standard deviation of the sonic temperature

Despite the large discrepancies of the mean sonic temperature measurements of the Gill instruments, the fluctuations of sonic temperature agree much better (Fig. 7). For this turbulent quantity, the CSAT3_2 was chosen as etalon, although it only had the second-highest loading in our PCA (−0.3816), because the Young.81000RE, which received a slightly higher loading (−0.3824), only started recording data 4 days after the comparison experiment had begun. None of the tested instruments shows a large bias or a large regression intercept for the measurement of σTs. However, the large errors in mean sonic temperature of the two Gill anemometers also led to a larger scatter for σTs, which expresses itself in comparability values larger than 0.06 K for the Gill.HS and 0.08 K for the Gill.R3 (Table 5). Surprisingly, the Young.81000, which was the etalon for the mean sonic temperature measurement, has an even poorer comparability of 0.09 K. In contrast, the Young.81000RE shows a very good agreement with the etalon for σTs despite its large bias when measuring mean sonic temperature. The METEK.uSonic.omni stands out because it has the highest regression slope of 1.06, which is a direct consequence of the almost equally high regression slope of 1.05 for the mean sonic temperature measurement. The agreement between the two CSAT3 is very good except for a few outliers, which were not rejected by our data-screening algorithm.

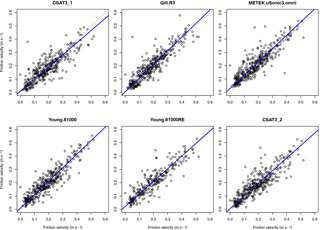

3.5 Friction velocity

Friction velocities ranged between 0 and almost 0.6 m s−1 during our experiment. Although the Young.81000RE has the highest loading (−0.3803) in our PCA, we chose the Gill.HS as etalon due to the above-mentioned data availability issue of the Young.81000RE; its loading is only slightly lower (−0.3801). For u*, generally much larger scatter is observed than for other purely wind-related quantities, such as U and σw (Fig. 8), which manifests itself in comparability values of 0.05 or 0.06 m s−1 (Table 6). However, despite the large scatter, the biases and regression intercepts are generally small, with values lower than 0.02 m s−1 in absolute numbers. Only the METEK.uSonic.omni measures friction velocities consistently larger than the etalon on average, which manifests itself in a bias and regression intercept of 0.03 m s−1. The relatively low regression slope of the CSAT3_1 of 0.91 does not lead to unusually poor error estimates of either comparability (0.05 m s−1) or bias (−0.01 m s−1). Similarly, the CSAT3_2 shows the second lowest regression slope, but its bias and RMSE are very similar to those of the other instruments.

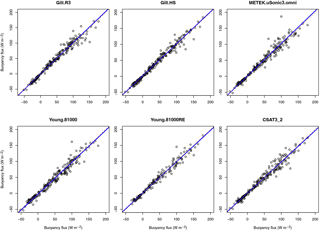

3.6 Buoyancy flux

Quantifying fluxes by eddy covariance is probably the most common application of sonic anemometers. Therefore, the comparison of the buoyancy flux measurements is perhaps the most interesting aspect of this study for many researchers. First, we would like to note that the number of available data is reduced by about one-third compared to the other quantities, which is due to rejection of non-stationary periods by the quality tests of Foken et al. (2004). The CSAT3_1 was chosen as etalon for this quantity because it received the highest loading in our PCA (−0.3786). The overall agreement between all sonic anemometers is excellent, as can be seen from Fig. 9. Biases are generally very small, with values less than 3 W m−2, and all of the regression slopes are very close to one (1±0.02) (Table 7). Some minor scatter that is apparent in the comparison plots of Fig. 9 results in comparability values between 8.6 and 11.2 W m−2 for the different instruments.

In theory, the overall agreement between sonic anemometers cannot be better than the random error, if the seven different measurement systems collect independent samples of a homogeneous turbulence field (Richardson et al., 2012). The stochastic error due to limited sampling of the turbulent ensemble (Finkelstein and Sims, 2001) is 17 % or 0.03 m s−1 on average for u* and 14 % or 5 W m−2 for Hs, based on data from CSAT3_1. The comparability values that we found between different instruments for these two quantities are only slightly larger. This means that a better agreement is hardly physically possible, and the remaining small discrepancies can be explained by slight surface heterogeneities within the footprint area of the different systems and by a very small instrumental error. The agreement between the two CSAT3 is as good as the agreement with other sonic anemometer models.
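The sampling error of a covariance can be estimated from the auto- and cross-covariance functions of the two time series, in the spirit of Finkelstein and Sims (2001). The Python sketch below is not the TK3 implementation. The function names are hypothetical, and the maximum lag is a free parameter that has to be chosen by the user so that it covers the integral timescale of the turbulence.

```python
import numpy as np

def lagged_cov(x, y, lag):
    """Covariance of centered series x(t) and y(t + lag), lag >= 0."""
    n = len(x)
    return np.sum(x[: n - lag] * y[lag:]) / n

def covariance_error_variance(w, c, max_lag):
    """Variance of the sampling error of the covariance of w and c."""
    w = np.asarray(w, dtype=float) - np.mean(w)
    c = np.asarray(c, dtype=float) - np.mean(c)
    n = len(w)
    # Lag-zero terms of the autocovariance and cross-covariance products.
    total = lagged_cov(w, w, 0) * lagged_cov(c, c, 0) + lagged_cov(w, c, 0) ** 2
    # Positive and negative lags contribute equally, hence the factor 2.
    for p in range(1, max_lag + 1):
        total += 2.0 * lagged_cov(w, w, p) * lagged_cov(c, c, p)
        total += 2.0 * lagged_cov(w, c, p) * lagged_cov(c, w, p)
    return total / n
```

The square root of the returned value is the random error of the covariance in the units of the product of w and c. For Hs it would additionally be multiplied by ρ and cp.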

We found a much better agreement between different sonic anemometers, especially for u* and Hs, in comparison to previous intercomparison experiments (Loescher et al., 2005; Mauder et al., 2007). All tested instruments were within the limits that Mauder et al. (2006) classified as type A, i.e. sonic anemometers suitable for fundamental turbulence research. Perhaps this can partially be explained by a consistent digital data acquisition, implemented here with a very high precision clock and event-driven communication using the Python programming language. The implementation of a more efficient spike removal algorithm for the high-frequency data and other additional quality tests in the post-processing scheme of Mauder (2013) probably also helped to improve the data quality of the resulting fluxes and consequently improved the agreement.

On top of that, the filtering for obstructed wind direction sectors and for rain, as described in Sect. 2.2, was crucial to remove poor-quality data. Both additional steps improved the agreement between instruments considerably. For σw, regression slopes ranged between 1.00 and 1.24 and intercepts were between −0.05 and 0.00 m s−1 after processing according to Mauder (2013). After filtering for obstructed wind direction, slopes ranged between 0.98 and 1.22 and intercepts remained between −0.05 and 0.00 m s−1. As can be seen from the results (Table 4), the overall agreement further improved after the filtering for rainy periods. Especially, some outliers of the CSAT3_2, which did not have the rain-guard meshes at the transducer heads, were rejected after this step. The effect of the data filtering on other quantities, such as Hs, was smaller. Here, the slopes ranged already only between 0.97 and 1.00 after processing according to Mauder (2013), which did not change much further after filtering for obstructed wind directions and for rainy periods (Table 7). This can be explained by the fact that the scheme of Mauder (2013) is designed for quality control of fluxes and not necessarily standard deviations. It is therefore much stricter for Hs than for σw.

Considering flow distortion errors of the order of 5 % or more that are reported in the literature (Frank et al., 2016; Horst et al., 2015; Huq et al., 2017), the very good agreement between all sonic anemometers in this field experiment is nevertheless somewhat surprising. A contribution by changes in the firmware of the different sonic anemometers over the last 10 years is likely but not fully documented. According to the manufacturer, the two CSAT3 sonic anemometers have no flow distortion correction at all, while all the other five instruments probably do apply some sort of correction – although the exact details are not publicly available for all of them. This could mean that flow distortion errors are indeed significant for our experiment but perhaps all instruments are afflicted with an error of almost the exact same magnitude and consequently underestimate σw and vertical scalar fluxes similarly, despite the obvious differences in sensor geometry and internal data processing.

Alternatively, one might also suppose that the flow distortion errors were generally small for our experimental setup due to the observed distribution of instantaneous flow angles, since flow-distortion effects tend to be smaller for smaller angles of attack, as indicated by the studies of Grelle and Lindroth (1994) and Gash and Dolman (2003). However, the standard deviation of the angles of attack was about 15∘, which is comparable to other field experiments. For comparison, Gash and Dolman (2003) report about 90 % of their data to be within ±20∘ for the Horstermeer peat bog site, and Grare et al. (2016) report their data to be in a range of ±15∘, most of the time even within ±10∘, measuring at 10 m above shrubland. Horst et al. (2015) report their angles of attack to be mostly within ±8∘ for measurements above low weeds and crop stubble with an aerodynamic roughness length of 0.02 m. Since the spread of angles of attack is at the upper end of the values reported in the literature, our comparison results can be considered as a conservative estimate for the random instrument-related uncertainty of typical applications of eddy covariance measurements over vegetation canopies. A common significant systematic error of all tested instruments is quite possible, as suggested by Frank et al. (2016).

One exception to the overall very good agreement is the sonic temperature measurement by both Gill sonic anemometers, the HS and the R3. This error appears not only as an offset but also as a deviation from a linear functional relationship and as increased scatter. Similar behaviour of other Gill anemometers has been reported before, and a possible explanation has also been provided in the past (Mauder et al., 2007; Vogt, 1995). Apparently, the sonic temperature measurement of Gill anemometers is compromised by a temperature dependence of the transducer delay, i.e. the time delay between the arrival of a sound pulse at the transducer and the registration by the electronics board.
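
To illustrate why an uncorrected transducer delay affects the sonic temperature, the following sketch derives the speed of sound from two opposing transit times along one path and converts it to sonic temperature. It rests on textbook relations and on assumptions that do not come from this study: crosswind effects are neglected, the constant 403 m2 s−2 K−1 is a commonly used approximation for dry air, and the path length, wind speed and delay in the usage note are hypothetical values. It is not the algorithm implemented in any manufacturer's firmware.

```python
GAMMA_RD = 403.0  # m^2 s^-2 K^-1, common dry-air approximation (assumption)

def transit_times(path_length, speed_of_sound, wind_along_path):
    """Ideal transit times (s) of a sound pulse in both directions of one path."""
    t_down = path_length / (speed_of_sound + wind_along_path)
    t_up = path_length / (speed_of_sound - wind_along_path)
    return t_down, t_up

def sonic_temperature(path_length, t_down, t_up, delay_error=0.0):
    """Sonic temperature (K) from two opposing transit times (s).

    delay_error is the hypothetical part of the transducer delay that is
    not removed by the electronics; it is added to both transit times.
    Crosswind effects are neglected in this sketch.
    """
    t1 = t_down + delay_error
    t2 = t_up + delay_error
    # The wind component cancels in the sum of the inverse transit times
    c = 0.5 * path_length * (1.0 / t1 + 1.0 / t2)
    return c * c / GAMMA_RD
```

For a hypothetical path length of 0.15 m, a speed of sound of 340 m s−1 and a wind component of 2 m s−1 along the path, an unremoved delay of 1 µs lowers the computed sonic temperature by roughly 1 K. If such a delay varies with transducer temperature, the resulting error varies with it as well, which is in line with the combination of offset and non-linearity described above.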

Generally, biases and regression intercepts were very small for all sensors and all computed variables, except for the temperature measurements of the two Gill sonic anemometers (HS and R3), which are known to have a transducer-temperature dependence of the sonic temperature measurement (Mauder et al., 2007). Nevertheless, the Gill anemometers show an equally good agreement for other turbulence statistics. The comparability (RMSE) of the instruments is not always as good as the bias, indicating a random error that is slightly larger than any systematic discrepancies. The best overall agreement between the different instruments was found for the quantities U, σw, and Hs, which suggests that the sensors' physical structure and internal signal processing are designed for measuring wind speed and vertical scalar fluxes as accurately as possible. However, the relative random uncertainty of u* measurements is still large, pointing to the particular challenge in measuring the covariance of horizontal and vertical wind components due to the rather small spectral overlap.
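
The relation between bias and comparability discussed above can be sketched in a few lines. The definitions used here (bias as the mean difference between a tested instrument and a reference, comparability as the root mean square of these differences) follow common usage and are stated as assumptions; the function names are illustrative and the code is not taken from the post-processing software used in this study.

```python
import math

def _differences(test, reference):
    """Pairwise differences between two equally long series."""
    if len(test) != len(reference):
        raise ValueError("series must have the same length")
    return [t - r for t, r in zip(test, reference)]

def bias(test, reference):
    """Mean difference between the tested instrument and the reference."""
    diffs = _differences(test, reference)
    return sum(diffs) / len(diffs)

def comparability(test, reference):
    """Root-mean-square difference (RMSE) between the two series."""
    diffs = _differences(test, reference)
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))

def random_part(test, reference):
    """Scatter left after removing the bias: sqrt(RMSE^2 - bias^2)."""
    rmse = comparability(test, reference)
    b = bias(test, reference)
    # max() guards against tiny negative values from rounding
    return math.sqrt(max(rmse * rmse - b * b, 0.0))
```

Because the squared RMSE is the sum of the squared bias and the variance of the differences, a comparability that is clearly larger than the absolute bias points to random scatter rather than a systematic offset as the dominant contribution.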

The uncertainty estimate of Mauder et al. (2006) for the buoyancy flux measurement of 5 % or 10 W m−2 was confirmed, not only for those instruments that were classified in that study as “type A” (CSAT3 and Gill HS) but also for those that were labelled “type B” (Gill R3) back then and all other tested instruments (METEK uSonic-3 Omni, R. M. Young 81000 and 81000RE). Hence, our results do not support a classification of the tested sonic anemometers into different quality levels, which suggests that the anemometers of all major manufacturers have converged in performance over the last decade.

For applications aiming at measuring vertical scalar fluxes, all tested instruments can be considered equally suitable, as long as digital data acquisition is implemented to avoid additional uncertainty and a stringent data quality control procedure is applied to detect malfunction of the eddy covariance system. Moreover, the deviations between instruments of different manufacturers are not larger than between different serial numbers of the same model. Therefore, we do not consider it necessary to agree on one single anemometer model to ensure comparability, e.g. for intensive field campaigns or for networks of ecosystem observatories. Instead, other criteria should be taken into account for the selection of a sonic anemometer, such as climatic conditions of a measurement site (e.g. frost, fog, heat), the distribution of wind directions (omnidirectional or not), the measurement height (path length), the compatibility with an existing data acquisition system or a certain scientific objective. In principle, this conclusion does not contradict the classification of Foken and Oncley (1995) and Mauder et al. (2006), because they also concluded that all instruments under investigation were suitable for general flux measurements. Only for specific questions of fundamental turbulence research was it advised to use certain types of instruments.

Although good agreement between six different sonic anemometer models indicates a high precision of this type of instrument in general, a field intercomparison study can only provide limited insight into the absolute accuracy of these measurements. In particular, a systematic error that is common to all tested instruments can inherently never be detected in this way. In the past, wind-tunnel experiments were conducted for this purpose, although their transferability to real-world conditions was always debated. Numerical simulations of probe-induced flow distortion (Huq et al., 2017) may provide a better way to characterize the suitability of sonic anemometers for turbulence measurements in the future. If systematic errors for one certain instrument are known from these computationally very expensive simulations, then classical field intercomparisons can be used to test other models against such a well-characterized sensor. Moreover, a comparison with a remote-sensing-based system that is free of flow distortion, such as lidar, would be very helpful if it is able to sample a similarly small volume of air at a similar measurement rate as a sonic anemometer.

The underlying data of this sonic anemometer intercomparison field experiment are provided in the Supplement.

The supplement related to this article is available online at: https://doi.org/10.5194/amt-11-249-2018-supplement.

The authors declare that they have no conflict of interest.

We are grateful to Kevin Wolz and Peter Brugger for field assistance and to Sandra Genzel for help with the data preparation. We thank GWU-Umwelttechnik GmbH for generously lending us the Young 81000RE instrument for the duration of the field experiment. The Fendt site is part of the TERENO and ICOS-D ecosystems (Integrated Carbon Observation System, Germany) networks which are funded, in part, by the German Helmholtz Association and the German Federal Ministry of Education and Research (BMBF). We thank the Scientific Team of ScaleX Campaign 2016 for their contribution. This work was conducted within the Helmholtz Young Investigator Group “Capturing all relevant scales of biosphere–atmosphere exchange – the enigmatic energy balance closure problem”, which is funded by the Helmholtz Association through the President's Initiative and Networking Fund.

The article processing charges for this open-access publication were covered by a Research Centre of the Helmholtz Association.

Edited by: Szymon Malinowski

Reviewed by: John Frank and one anonymous referee

Burns, S. P., Horst, T. W., Jacobsen, L., Blanken, P. D., and Monson, R. K.: Using sonic anemometer temperature to measure sensible heat flux in strong winds, Atmos. Meas. Tech., 5, 2095–2111, https://doi.org/10.5194/amt-5-2095-2012, 2012.

Dyer, A. J.: Flow distortion by supporting structures, Bound.-Lay. Meteorol., 20, 243–251, 1981.

Dyer, A. J., Garratt, J. R., Francey, R. J., McIlroy, I. C., Bacon, N. E., Bradley, E. F., Denmead, O. T., Tsvang, L. R., Volkov, Y. A., Koprov, B. M., Elagina, L. G., Sahashi, K., Monji, N., Hanafusa, T., Tsukamoto, O., Frenzen, P., Hicks, B. B., Wesely, M., Miyake, M., Shaw, W., Hyson, P., and Victoria, A.: An international turbulence comparison experiment (ITCE-76), Bound.-Lay. Meteorol., 24, 181–209, 1982.

Finkelstein, P. L. and Sims, P. F.: Sampling error in eddy correlation flux measurements, J. Geophys. Res., 106, 3503–3509, https://doi.org/10.1029/2000JD900731, 2001.

Foken, T. and Oncley, S. P.: Workshop on instrumental and methodical problems of land surface flux measurements, B. Am. Meteorol. Soc., 76, 1191–1193, 1995.

Foken, T., Göckede, M., Mauder, M., Mahrt, L., Amiro, B., and Munger, W.: Post-field data quality control, in Handbook of Micrometeorology, A Guide for Surface Flux Measurement and Analysis, edited by: Lee, X., Massman, W., and Law, B., Kluwer Academic Publishers, Dordrecht, 181–208, 2004.

Frank, J. M., Massman, W. J., and Ewers, B. E.: Underestimates of sensible heat flux due to vertical velocity measurement errors in non-orthogonal sonic anemometers, Agr. Forest Meteorol., 171–172, 72–81, https://doi.org/10.1016/j.agrformet.2012.11.005, 2013.

Frank, J. M., Massman, W. J., Swiatek, E., Zimmerman, H. A., and Ewers, B. E.: All sonic anemometers need to correct for transducer and structural shadowing in their velocity measurements, J. Atmos. Ocean. Tech., 33, 149–167, https://doi.org/10.1175/JTECH-D-15-0171.1, 2016.

Gash, J. H. C. and Dolman, A. J.: Sonic anemometer (co)sine response and flux measurement: I. The potential for (co)sine error to affect sonic anemometer-based flux measurements, Agr. Forest Meteorol., 119, 195–207, https://doi.org/10.1016/S0168-1923(03)00137-0, 2003.

Grare, L., Lenain, L., and Melville, W. K.: The Influence of Wind Direction on Campbell Scientific CSAT3 and Gill R3-50 Sonic Anemometer Measurements, J. Atmos. Ocean. Tech., 33, 2477–2497, https://doi.org/10.1175/JTECH-D-16-0055.1, 2016.

Grelle, A. and Lindroth, A.: Flow Distortion by a Solent Sonic Anemometer: Wind Tunnel Calibration and Its Assessment for Flux Measurements over Forest and Field, J. Atmos. Ocean. Tech., 11, 1529–1542, https://doi.org/10.1175/1520-0426(1994)011<1529:FDBASS>2.0.CO;2, 1994.

Högström, U.: A critical evaluation of the aerodynamical error of a turbulence instrument, J. Appl. Meteorol., 21, 1838–1844, 1982.

Högström, U. and Smedman, A. S.: Accuracy of sonic anemometers: Laminar wind-tunnel calibrations compared to atmospheric in situ calibrations against a reference instrument, Bound.-Lay. Meteorol., 111, 33–54, https://doi.org/10.1023/B:BOUN.0000011000.05248.47, 2004.

Horst, T. W., Semmer, S. R., and Maclean, G.: Correction of a Non-orthogonal, Three-Component Sonic Anemometer for Flow Distortion by Transducer Shadowing, Bound.-Lay. Meteorol., 155, 371–395, https://doi.org/10.1007/s10546-015-0010-3, 2015.

Huq, S., De Roo, F., Foken, T., and Mauder, M.: Evaluation of probe-induced flow distortion of Campbell CSAT3 sonic anemometers by numerical simulation, Bound.-Lay. Meteorol., 164, 9–28, https://doi.org/10.1007/s10546-017-0264-z, 2017.

Kaimal, J.: Sonic Anemometer Measurement of Atmospheric Turbulence, in: Proceedings of the Dynamic Flow Conference 1978 on Dynamic Measurements in Unsteady Flows, edited by: Hanson, B. W., Springer Netherlands, 551–565, 1979.

Kaimal, J. C. and Finnigan, J. J.: Atmospheric Boundary Layer Flows: Their Structure and Measurement, Oxford University Press, New York, NY, 1994.

Kochendorfer, J., Meyers, T. P., Frank, J. M., Massman, W. J., and Heuer, M. W.: How well can we measure the vertical wind speed? Implications for the fluxes of energy and mass, Bound.-Lay. Meteorol., 145, 383–398, https://doi.org/10.1007/s10546-012-9738-1, 2012.

Kochendorfer, J., Meyers, T. P., Frank, J. M., Massman, W. J., and Heuer, M. W.: Reply to the Comment by Mauder on “How Well Can We Measure the Vertical Wind Speed? Implications for Fluxes of Energy and Mass”, Bound.-Lay. Meteorol., 147, 337–345, https://doi.org/10.1007/s10546-012-9792-8, 2013.

Loescher, H. W., Ocheltree, T., Tanner, B., Swiatek, E., Dano, B., Wong, J., Zimmerman, G., Campbell, J., Stock, C., Jacobsen, L., Shiga, Y., Kollas, J., Liburdy, J., and Law, B. E.: Comparison of temperature and wind statistics in contrasting environments among different sonic anemometer-thermometers, Agr. Forest Meteorol., 133, 119–139, https://doi.org/10.1016/j.agrformet.2005.08.009, 2005.

Lugauer, M. and Winkler, P.: Thermal circulation in South Bavaria – climatology and synoptic aspects, Meteorol. Z., 14, 15–30, https://doi.org/10.1127/0941-2948/2005/0014-0015, 2005.

Manuilova, E., Schuetzenmeister, A., and Model, F.: mcr: Method Comparison Regression, available at: https://cran.r-project.org/package=mcr, 2014.

Mauder, M.: A comment on “How well can we measure the vertical wind speed? Implications for fluxes of energy and mass” by Kochendorfer et al., Bound.-Lay. Meteorol., 147, 329–335, https://doi.org/10.1007/s10546-012-9794-6, 2013.

Mauder, M. and Foken, T.: Eddy-Covariance Software TK3, available at: https://doi.org/10.5281/zenodo.20349, 2015.

Mauder, M., Liebethal, C., Göckede, M., Leps, J. P., Beyrich, F., and Foken, T.: Processing and quality control of flux data during LITFASS-2003, Bound.-Lay. Meteorol., 121, 67–88, https://doi.org/10.1007/s10546-006-9094-0, 2006.

Mauder, M., Oncley, S. P., Vogt, R., Weidinger, T., Ribeiro, L., Bernhofer, C., Foken, T., Kohsiek, W., Bruin, H. A. R., and Liu, H.: The energy balance experiment EBEX-2000, Part II: Intercomparison of eddy-covariance sensors and post-field data processing methods, Bound.-Lay. Meteorol., 123, 29–54, https://doi.org/10.1007/s10546-006-9139-4, 2007.

Miyake, M., Stewart, R. W., Burling, H. W., Tsvang, L. R., Koprov, B. M., and Kuznetsov, O. A.: Comparison of acoustic instruments in an atmospheric turbulent flow over water, Bound.-Lay. Meteorol., 2, 228–245, 1971.

Moore, C. J.: Frequency response corrections for eddy correlation systems, Bound.-Lay. Meteorol., 37, 17–35, https://doi.org/10.1007/BF00122754, 1986.

Nakai, T. and Shimoyama, K.: Ultrasonic anemometer angle of attack errors under turbulent conditions, Agr. Forest Meteorol., 162–163, 14–26, https://doi.org/10.1016/j.agrformet.2012.04.004, 2012.

Nakai, T., van der Molen, M. K., Gash, J. H. C., and Kodama, Y.: Correction of sonic anemometer angle of attack errors, Agr. Forest Meteorol., 136, 19–30, https://doi.org/10.1016/j.agrformet.2006.01.006, 2006.

R Core Team: R: A language and environment for statistical computing, available at: https://www.r-project.org/, 2016.

Richardson, A. D., Aubinet, M., Barr, A. G., Hollinger, D. Y., Ibrom, A., Lasslop, G., and Reichstein, M.: Uncertainty quantification, in: Eddy Covariance: A Practical Guide to Measurement and Data Analysis, edited by: Aubinet, M., Vesala, T., and Papale, D., Springer, Dordrecht, 173–210, 2012.

Stoy, P. C., Mauder, M., Foken, T., Marcolla, B., Boegh, E., Ibrom, A., Arain, M. A., Arneth, A., Aurela, M., Bernhofer, C., Cescatti, A., Dellwik, E., Duce, P., Gianelle, D., van Gorsel, E., Kiely, G., Knohl, A., Margolis, H., Mccaughey, H., Merbold, L., Montagnani, L., Papale, D., Reichstein, M., Saunders, M., Serrano-Ortiz, P., Sottocornola, M., Spano, D., Vaccari, F., and Varlagin, A.: A data-driven analysis of energy balance closure across FLUXNET research sites: The role of landscape-scale heterogeneity, Agr. Forest Meteorol., 171–172, 137–152, https://doi.org/10.1016/j.agrformet.2012.11.004, 2013.

Tsvang, L. R., Koprov, B. M., Zubkovskii, S. L., Dyer, A. J., Hicks, B., Miyake, M., Stewart, R. W., and McDonald, J. W.: A comparison of turbulence measurements by different instruments; Tsimlyansk field experiment 1970, Bound.-Lay. Meteorol., 3, 499–521, 1973.

Tsvang, L. R., Zubkovskij, S. L., Kader, B. A., Kallistratova, M. A., Foken, T., Gerstmann, W., Przandka, Z., Pretel, J., Zelenny, J., and Keder, J.: International turbulence comparison experiment (ITCE-81), Bound.-Lay. Meteorol., 31, 325–348, 1985.

van der Molen, M. K., Gash, J. H. C., and Elbers, J. A.: Sonic anemometer (co)sine response and flux measurement, II. The effect of introducing an angle of attack dependent calibration, Agr. Forest Meteorol., 122, 95–109, https://doi.org/10.1016/j.agrformet.2003.09.003, 2004.

Vogt, R.: Theorie, Technik und Analyse der experimentellen Flussbestimmung am Beispiel des Hartheimer Kiefernwaldes, Wepf, Basel, 101 pp., 1995.

Wieser, A., Fiedler, F., and Corsmeier, U.: The influence of the sensor design on wind measurements with sonic anemometer systems, J. Atmos. Ocean. Tech., 18, 1585–1608, https://doi.org/10.1175/1520-0426(2001)018<1585:TIOTSD>2.0.CO;2, 2001.

Wolf, B., Chwala, C., Fersch, B., Gravelmann, J., Junkermann, W., Zeeman, M. J., Angerer, A., Adler, B., Beck, C., Brosy, C., Brugger, P., Emeis, S., Dannenmann, M., De Roo, F., Diaz-Pines, E., Haas, E., Hagen, M., Hajsek, I., Jacobeit, J., Jagdhuber, T., Kalthoff, N., Kiese, R., Kunstmann, H., Kosak, O., Krieg, R., Malchow, C., Mauder, M., Merz, R., Notarnicola, C., Philipp, A., Reif, W., Reineke, S., Rödiger, T., Ruehr, N., Schäfer, K., Schrön, M., Senatore, A., Shupe, H., Völksch, I., Wanninger, C., Zacharias, S., and Schmid, H. P.: The ScaleX campaign: scale-crossing land-surface and boundary layer processes in the TERENO-preAlpine observatory, B. Am. Meteorol. Soc., 98, 1217–1234, https://doi.org/10.1175/BAMS-D-15-00277.1, 2017.

Zeeman, M. J., Mauder, M., Steinbrecher, R., Heidbach, K., Eckart, E., and Schmid, H. P.: Reduced snow cover affects productivity of upland temperate grasslands, Agr. Forest Meteorol., 232, 514–526, https://doi.org/10.1016/j.agrformet.2016.09.002, 2017.

Zhang, S. F., Wyngaard, J. C., Businger, J. A., and Oncley, S. P.: Response characteristics of the U.W. sonic anemometer, J. Atmos. Ocean. Tech., 3, 315–323, https://doi.org/10.1175/1520-0426(1986)003<0315:RCOTUS>2.0.CO;2, 1986.