the Creative Commons Attribution 4.0 License.

Calibration of PurpleAir low-cost particulate matter sensors: model development for air quality under high relative humidity conditions

Martine E. Mathieu-Campbell

Chuqi Guo

Andrew P. Grieshop

Jennifer Richmond-Bryant

The primary source of measurement error from widely used particulate matter (PM) PurpleAir sensors is ambient relative humidity (RH). Recently, the US EPA developed a national correction model for PM2.5 concentrations measured by PurpleAir sensors (Barkjohn model). However, their study included few sites in the southeastern US, the most humid region of the country. To provide high-quality spatial and temporal data and inform community exposure risks in this area, our study developed and evaluated PurpleAir correction models for use in the warm–humid climate zones of the US. We used hourly PurpleAir data and hourly reference-grade PM2.5 data from the EPA Air Quality System database from January 2021 to August 2023. Compared with the Barkjohn model, we found improved performance metrics, with error metrics decreasing by 16 %–23 % when applying a multilinear regression model with RH and temperature as predictive variables. We also tested a novel semi-supervised clustering method and found that a nonlinear effect between PM2.5 and RH emerges around RH of 50 %, with slightly greater accuracy. Therefore, our results suggested that a clustering approach might be more accurate in high humidity conditions to capture the nonlinearity associated with PM particle hygroscopic growth.

In recent years, many communities have started using low-cost particulate matter (PM) sensors to predict community exposure risks (Bi et al., 2020, 2021; Chen et al., 2017; Jiao et al., 2016; Kim et al., 2019; Kramer et al., 2023; Lu et al., 2022; Snyder et al., 2013; Stavroulas et al., 2020), since short-term and long-term exposure to particulate matter with an aerodynamic diameter of 2.5 µm or smaller (PM2.5) is associated with several adverse health effects (Brook et al., 2010; Chen et al., 2016; Cohen et al., 2017; Health Effects Institute, 2020; Landrigan et al., 2018; Olstrup et al., 2019; Pope and Dockery, 2006). These low-cost sensors have been used to inform exposure risks in different applications including environmental justice (Kramer et al., 2023; Lu et al., 2022), wildfire exposure (Kramer et al., 2023), traffic-related exposure (Lu et al., 2022), and indoor exposure (Bi et al., 2021; Lu et al., 2022). The dense monitoring network enabled by deploying low-cost sensors provides the potential to understand the PM2.5 exposure risk at a higher spatial and temporal resolution than the established regulatory air quality monitoring system. Federal Reference Method or Federal Equivalence Method (FRM/FEM) monitors tend to be sparsely sited due to the cost and complexity of this instrumentation.

Several studies have evaluated the performance of low-cost PM sensors for different sources and meteorological conditions, with bias and low precision reported in several cases (Ardon-Dryer et al., 2020; Barkjohn et al., 2021; Bi et al., 2020, 2021; He et al., 2020; Holder et al., 2020; Jayaratne et al., 2018; Kelly et al., 2017; Kim et al., 2019; Magi et al., 2020; Malings et al., 2020; Sayahi et al., 2019; Stavroulas et al., 2020; Tryner et al., 2020; Wallace et al., 2021). A study conducted in 2016 (AQ-SPEC, 2016a) to evaluate low-cost PM2.5 sensors showed overall good agreement between PurpleAir PM sensors and two reference monitors, with R2 of 78 % and 90 % (AQ-SPEC, 2016b). However, an overestimation of 40 % was found for PurpleAir PM2.5 concentrations compared with the reference monitors (AQ-SPEC, 2016b; Wallace et al., 2021). Humidity has been documented as an important parameter that could greatly reduce the performance of low-cost sensors (Rueda et al., 2023; Wallace et al., 2021; Zusman et al., 2020). Most low-cost PM sensors, including the PurpleAir sensor, utilize optical sensors based on the light-scattering principle to estimate PM mass concentration. Thus, they are subject to measurement errors from various factors, including particle size, composition, optical properties, and interactions of particles with atmospheric water vapor (Hagan and Kroll, 2020; Rueda et al., 2023; Zheng et al., 2018; Zusman et al., 2020). In a high-humidity environment, accurate detection of particle size and concentration may be affected by hygroscopic growth of particles (Carrico et al., 2010; Chen et al., 2022; Healy et al., 2014; Jamriska et al., 2008; Wallace et al., 2021). Water vapor may also damage the circuitry of the sensors (Jamriska et al., 2008; Wallace et al., 2021). 
Relative humidity (RH) has therefore been confirmed to be a primary source of measurement error that requires concentration correction in low-cost PM sensors (Barkjohn et al., 2021; Sayahi et al., 2019; Wallace et al., 2021; Zusman et al., 2020).

The PurpleAir PM sensor is one of the most widely used low-cost PM sensors (Bi et al., 2021; Wallace et al., 2021). As of April 2022, there were more than 30 000 networked PurpleAir sensors, providing geolocated real-time air quality information (https://www2.purpleair.com, last access: 29 August 2023; https://www.airnow.gov, last access: 29 August 2023). Recently, the US Environmental Protection Agency (EPA), after an evaluation of the sensors, developed a national correction model for PurpleAir sensors (Barkjohn et al., 2021). However, this evaluation included few sites in the southeastern US (Barkjohn et al., 2021). The study covered 16 states using 39 sites selected according to their collocation with an FRM/FEM monitor. In this study, the southeastern US, the most humid region of the US, characterized by a humid subtropical climate (Konrad et al., 2013), was represented by only five sites and encompassed four states. The EPA correction model used multilinear regression (MLR) (Barkjohn et al., 2021). Some recent studies have used model-based clusters (MBCs) to improve performance metrics compared with their MLR models. McFarlane et al. (2021) and Raheja et al. (2023) applied a Gaussian mixture regression (GMR) bias correction model to PM2.5 PurpleAir sensors in Accra, Ghana. The GMR-based model developed by McFarlane et al. (2021) used daily data from one PurpleAir sensor collocated with one Met One Beta Attenuation Monitor 1020 from March 2020 to March 2021. Raheja et al. (2023) used three different brands of low-cost sensors including PurpleAir PA-II collocated with a Teledyne T640 as the reference-grade monitor at the University of Ghana in Accra, Ghana, from May to September 2021. However, a GMR-based model is not transferable to new settings (Raheja et al., 2023), since the regression function in a GMR is derived from input from modeling the joint probability distribution of the data (Maugis et al., 2009; McFarlane et al., 2021; Shi and Choi, 2011). 
The model is not flexible enough to handle differences in proportions of the input variables observed at different locations.

The objective of this study is to develop and evaluate PurpleAir bias correction models for use in the warm–humid climate zones (2A and 3A) of the US (Antonopoulos et al., 2022). First, we tested an MLR approach with different combinations of predictive variables. To avoid the transferability constraints observed for the GMR, our study then tested a novel semi-supervised clustering method. We used PurpleAir data and the FRM/FEM PM2.5 data from the EPA Air Quality System (AQS) database from January 2021 to August 2023. We tested new correction models developed for the high-humidity southeastern region of the country and compared them with the EPA nationwide PurpleAir data correction model proposed by Barkjohn et al. (2021).

2.1 Study area

The study area includes the “warm–humid and moist” climate zone of the United States, as defined by the International Energy Conservation Code (IECC) in 2021. The 2021 IECC identifies the appropriate climate zone designation for each county in the US (Antonopoulos et al., 2022). The climate zone map comprises eight regions, with seven represented in the continental US (Antonopoulos et al., 2022; Chapter 3, General requirements, 2021 International Energy Conservation Code, IECC). The thermal climate zones are based on meteorological parameters (designated as 1 to 8) including precipitation, temperature and humidity, and a moisture regime (designated as A, B, and C for humid–moist, dry, and marine, respectively). The thermal climate is determined using heating degree days and cooling degree days, and the moisture regime is based on monthly average temperature and precipitation (Antonopoulos et al., 2022; Chapter 3, General requirements, 2021 International Energy Conservation Code, IECC).

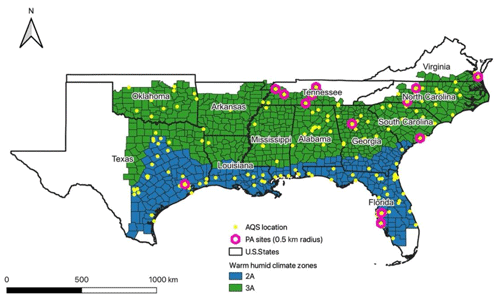

Figure 1. Study area showing the warm–humid climate zone classification. The map also shows the distribution of available AQS monitors and the distribution of the PurpleAir sensors (PA sites) located within a 0.5 km radius of an AQS monitor.

The study area was composed of climate zones and moisture regimes 2A and 3A. The “warm–humid” climate zone designation corresponds to a specific area of the climate zone map that includes Zones 2A and 3A (Fig. 1). Zone 1A is excluded, given that its tropical characteristics are sufficiently different from most of the southeast. A warm and humid climate is characterized by high levels of humidity and high temperatures throughout the year and receives more than 20 in. (50 cm) of precipitation per year (Baechler et al., 2015). This area presents a state average annual humidity varying between 65.5 % and 74.0 % and an average temperature per state varying between 55.1 °F (12.83 °C) and 70.7 °F (21.50 °C). These 12 states have the 12 highest annual average dew-point temperatures in the continental US.

The study area includes 799 counties distributed into the 12 states. Except Kentucky, all of the southeastern US states are partially or entirely characterized by a warm–humid climate zone and included in our study area. The high humidity conditions in this part of the US might affect particle composition and size distribution due to water uptake (Hagan and Kroll, 2020; Jaffe et al., 2023; Patel et al., 2024; Rueda et al., 2023). A study conducted in 2018 (Carlton et al., 2018) found large contributions (50 %) to PM2.5 from biogenic secondary organic aerosol (BSOA) in the southeast US region compared with the rest of the country. The elevated BSOA is attributed to heavily forested areas and large urban areas in the region (Carlton et al., 2018; U.S. EPA, 2018).

2.2 Data collection

The PurpleAir (PA-II-SD) contains two Plantower PMS5003 laser-scattering particle sensors, a pressure–temperature–humidity sensor (BME280), and a Wi-Fi module (Magi et al., 2020). PM2.5 data from the PurpleAir sensors were obtained from the PurpleAir data repository (API PurpleAir, https://api.purpleair.com, last access: 29 August 2023), and PM2.5 data from the State and Local Air Monitoring System (SLAMS) were retrieved from the US EPA AQS (https://www.epa.gov/aqs, last access: 29 August 2023) for the period from 1 January 2021 to 28 August 2023 using their respective application programming interfaces (APIs). To obtain data for the study area, we used a bounding box (longitude: −100.01° W, −75.50° W; latitude: 25.81° N, 37.01° N) that contains all outdoor sensors available for this geographical area. We identified 997 available sensors. We used the PM2.5 dataset related to a standard environment, which was reported in the PurpleAir output as cf_1 (correction factor of 1). This represents a more appropriate raw measurement of PM concentrations without any nonlinear transformation (McFarlane et al., 2021) and has been used for several other studies (Barkjohn et al., 2021; Raheja et al., 2023; Tryner et al., 2020; Wallace et al., 2021). Hourly average PM2.5 concentrations were downloaded for both PurpleAir sensors and AQS monitors.

SLAMS data are collected by local, state, and tribal government agencies and made available via the AirNow API (https://www.airnow.gov, last access: 29 August 2023). To ensure data accuracy, AQS data are collected by FRM or FEM (U.S. EPA, 2023). These methods are primarily maintained to evaluate compliance with the National Ambient Air Quality Standards (NAAQS), although the data are often used for air pollution exposure and epidemiology research. We identified 181 FEM or FRM monitors in our study area.

2.3 Selection of PurpleAir sensors and data quality control criteria

We selected PurpleAir sensors within fixed radii of each FRM or FEM monitor. R Statistical Software (version 4.3.1) was employed for data selection, data quality control, and statistical modeling. We identified outdoor PurpleAir sensors within 2.0, 1.0, and 0.5 km of each FRM or FEM monitor. When a PurpleAir sensor fell within the buffers of two or more AQS monitors, it was assigned to the monitor whose buffer centroid was closest, to ensure better spatial join accuracy.
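The buffer-based selection can be illustrated with a short Python sketch (the study itself used R). It assumes great-circle (haversine) distances and hypothetical `lat`, `lon`, and `id` fields; the actual spatial join in the study may have been implemented differently.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in km between two points given in decimal degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def assign_nearest_monitor(sensor, monitors, radius_km):
    """Return (monitor_id, distance_km) for the closest monitor within radius_km, else None.

    `sensor` and each entry of `monitors` are dicts with hypothetical keys
    "lat" and "lon" (monitors also carry "id").
    """
    best = None
    for monitor in monitors:
        d = haversine_km(sensor["lat"], sensor["lon"], monitor["lat"], monitor["lon"])
        if d <= radius_km and (best is None or d < best[1]):
            best = (monitor["id"], d)
    return best
```

Calling `assign_nearest_monitor` once per sensor with `radius_km` set to 0.5, 1.0, or 2.0 would yield the three buffer datasets; a sensor inside several buffers is paired with the closest monitor only.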

We applied a series of data exclusion criteria for quality control. First, we used a detection limit of 1.5 µg m−3 for the PurpleAir data. This value is equivalent to the average of the values reported by Tryner et al. (2020) and Wallace et al. (2021) for the cf_1 data series. We also excluded all PM2.5 data points that were greater than 1000 µg m−3. Then, we applied data exclusion criteria to clean the PurpleAir data based on agreement between the concentrations reported for the two Plantower PMS5003 sensors provided in the PurpleAir housing, labeled arbitrarily as Channels A and B. We considered low and high concentrations separately. For low PM2.5 concentrations (less than or equal to 25 µg m−3), we removed observations where the concentration difference between Channels A and B was greater than 5 µg m−3 and the percent error deviation was greater than 20 %. For high PM2.5 concentrations (greater than 25 µg m−3), we removed data records when the percent error deviation between Channels A and B was greater than 20 %. Similar cleaning criteria were used for quality assurance by Barkjohn et al. (2021) and Tryner et al. (2020), where data with a difference between Channels A and B less than 5 µg m−3 for low PM2.5 concentration were considered valid. Bi et al. (2020) removed data with the 5 % largest percent error difference between Channels A and B. Additionally, Barkjohn et al. (2021) excluded data points where Channels A and B deviated by more than 61 %. However, we decided to employ a more stringent criterion for our high-concentration data records (20 % deviation) considering that our study only included reported PurpleAir data available via the API and only for one region of the United States. 
Following data cleaning, the final PurpleAir concentration (CPA) dataset used in our study was obtained by averaging Channels A and B and included only hourly average PurpleAir data points that had a spatial (within the calculated radius) correspondence to hourly FEM concentration (CAQS) data. Missing CAQS data points were excluded before applying the radius-related spatial join.
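The channel-agreement rules can be sketched in Python as follows. Two points are assumptions rather than statements from the text: the "percent error deviation" is taken as the absolute A/B difference divided by the A/B mean, and the detection limit, the 1000 µg m−3 cap, and the 25 µg m−3 cutoff are all applied to the A/B mean.

```python
def passes_channel_qc(pm_a, pm_b, detection_limit=1.5, upper_limit=1000.0,
                      low_cutoff=25.0, max_abs_diff=5.0, max_pct_diff=0.20):
    """Return True if an hourly Channel A/B pair survives the exclusion criteria."""
    mean_ab = (pm_a + pm_b) / 2.0
    # Assumption: range checks are applied to the A/B mean.
    if mean_ab < detection_limit or mean_ab > upper_limit:
        return False
    abs_diff = abs(pm_a - pm_b)
    pct_diff = abs_diff / mean_ab  # assumed definition of percent error deviation
    if mean_ab <= low_cutoff:
        # Low range: reject only when BOTH thresholds are exceeded.
        return not (abs_diff > max_abs_diff and pct_diff > max_pct_diff)
    # High range: reject on percent deviation alone.
    return pct_diff <= max_pct_diff


def final_concentration(pm_a, pm_b):
    """Final CPA value: the mean of the two channels, or None if the pair is excluded."""
    return (pm_a + pm_b) / 2.0 if passes_channel_qc(pm_a, pm_b) else None
```

Note that in the low range a pair such as 2 and 3 µg m−3 is retained even though the relative deviation is large, because the absolute difference stays below 5 µg m−3.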

To ensure data quality, the relative humidity measured by the BME280 sensor within the PurpleAir housing was evaluated. We compared hourly RH from the PurpleAir with the corresponding hourly RH from the National Oceanic and Atmospheric Administration (NOAA) Integrated Surface Database (ISD). The NOAA data were downloaded using the R package worldmet (worldmet: Import Surface Meteorological Data from NOAA ISD). The nearest NOAA station to each PurpleAir sensor was considered for the comparison. The average distance between a NOAA station and a PurpleAir sensor was approximately 16.09 km, with a minimum of 2.65 km and a maximum of 41.04 km. All PurpleAir sensors that presented a correlation of less than 0.80 with the corresponding RH from NOAA were excluded.
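A minimal Python sketch of this screening step is given below. It assumes the PurpleAir and NOAA hourly RH series have already been matched by timestamp; the dictionary layout and function names are hypothetical.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient of two equal-length numeric sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def screen_sensors(rh_pairs, min_r=0.80):
    """Keep sensor IDs whose RH correlates with the nearest NOAA station at r >= min_r.

    rh_pairs maps sensor_id -> (purpleair_rh, noaa_rh), two time-aligned lists.
    """
    return [sensor_id for sensor_id, (pa_rh, noaa_rh) in rh_pairs.items()
            if pearson_r(pa_rh, noaa_rh) >= min_r]
```

Because the criterion is a correlation rather than a bias threshold, a sensor that reads consistently drier than the NOAA station still passes as long as it tracks the station's variability.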

2.4 Model correction

2.4.1 Model inputs

Because measurement errors are related to water uptake by particles (Hagan and Kroll, 2020; Rueda et al., 2023; Wallace et al., 2021), temperature (T) and RH are the most commonly found bias correction parameters in the literature (Ardon-Dryer et al., 2020; Bi et al., 2020; Magi et al., 2020; Malings et al., 2020; Wallace et al., 2021) for the PurpleAir sensor. Thus, our meteorological data (hourly T, hourly RH) were taken from the PurpleAir sensor, similar to the analysis conducted by Barkjohn et al. (2021). Barkjohn et al. (2021) included dew-point temperature (DP) in addition to T and RH as input predictors in their modeling process. However, DP was excluded as a predictor in our study. DP exhibited collinearity with both RH and T when testing for variance inflation factor. In fact, a high correlation of 95 % was found between DP and T. Therefore, including it would inflate the goodness of fit of the model. This result is not surprising considering the interdependent atmospheric thermodynamic relationship of DP with RH and T. For data quality assurance, we only included data records within a range of 0–130 °F (−17.78–54.44 °C) for T and 0 %–100 % for RH. Similar quality assurance criteria were employed by Wallace et al. (2021), where data records with abnormal temperature and relative humidity measurements were removed.
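The dependence of DP on T and RH can be made concrete with the Magnus approximation, sketched here in Python. The formula and the coefficient pair used (17.625 and 243.04 °C) are a common choice and an assumption of this sketch; the text does not state how DP was computed.

```python
import math

# Commonly used Magnus coefficients (assumption of this sketch); B is in degrees Celsius.
MAGNUS_A = 17.625
MAGNUS_B = 243.04


def dew_point_c(temp_c, rh_pct):
    """Approximate dew-point temperature (deg C) from air temperature (deg C) and RH (%)."""
    gamma = math.log(rh_pct / 100.0) + MAGNUS_A * temp_c / (MAGNUS_B + temp_c)
    return MAGNUS_B * gamma / (MAGNUS_A - gamma)
```

Since DP is a deterministic function of T and RH, adding it to a model that already contains both predictors contributes no independent information, which is consistent with the collinearity reported above.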

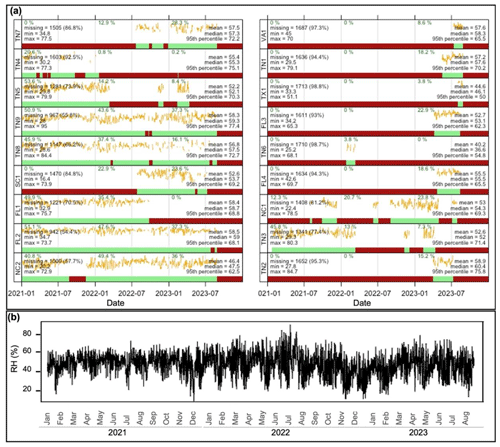

Figure 2. (a) Summary statistics and time series (yellow lines) of daily average RH for each PurpleAir site showing the presence of data (green) and missing data (red). The y axis represents RH scaled from zero to the maximum daily value. The percentage of data captured per year is also provided. (b) Time series of daily average RH for the entire dataset with an SD of 10.56 %.

The final dataset used for our model calibration included CPA, CAQS, RH, and T. Temperature is reported in degrees Celsius in our models. We tested several MLR models, and we defined a semi-supervised clustering approach.

2.4.2 Multilinear regression

Our study tested five MLR models (Eqs. 1–5) including the model proposed by Barkjohn et al. (2021) (Model Bj). Based on the evaluated predictors, we developed Models 1–4. The four proposed models and the Barkjohn model were structured as follows.

For each model, β0 represents the intercept; β1 to β3 are the coefficients of the predictors CPA, RH, and T, respectively; and ε is the error term.
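For reference, the model structures can be written out from the predictor definitions. This is a sketch under stated assumptions, not a reproduction of the published Eqs. (1)–(5): the text confirms that Model 2 uses CPA and RH and that Model 4 uses CPA, RH, and T, whereas the predictor sets shown for Model 1 (CPA alone) and Model 3 (CPA and T) are inferred from the discussion of the RH coefficients. The Model Bj coefficients are those of the published US-wide correction (Barkjohn et al., 2021), with RH in percent.

```latex
\begin{align*}
\text{Model 1 (assumed):}\quad & C_{\mathrm{AQS}} = \beta_0 + \beta_1 C_{\mathrm{PA}} + \varepsilon \\
\text{Model 2:}\quad & C_{\mathrm{AQS}} = \beta_0 + \beta_1 C_{\mathrm{PA}} + \beta_2\,\mathrm{RH} + \varepsilon \\
\text{Model 3 (assumed):}\quad & C_{\mathrm{AQS}} = \beta_0 + \beta_1 C_{\mathrm{PA}} + \beta_3 T + \varepsilon \\
\text{Model 4:}\quad & C_{\mathrm{AQS}} = \beta_0 + \beta_1 C_{\mathrm{PA}} + \beta_2\,\mathrm{RH} + \beta_3 T + \varepsilon \\
\text{Model Bj:}\quad & C_{\mathrm{AQS}} = 5.75 + 0.524\, C_{\mathrm{PA}} - 0.0862\,\mathrm{RH}
\end{align*}
```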

2.4.3 Semi-supervised clustering

Alternative bias correction methods to MLR have been developed (Bi et al., 2020; McFarlane et al., 2021; Raheja et al., 2023) to capture the complex nonlinear hygroscopic growth of particles (Hagan and Kroll, 2020; Rueda et al., 2023). Some of these alternative techniques include MBCs (McFarlane et al., 2021; Raheja et al., 2023). An MBC assumes that the data are composed of more than one subpopulation (Raftery and Dean, 2006). The influence of RH on PurpleAir PM2.5 measurements, specifically at high ambient RH (Wallace et al., 2021), may be nonlinear, suggesting the formation of subgroups in our dataset. Therefore, our study tested a semi-supervised clustering (SSC) approach that combines unsupervised and supervised clustering processes to develop a nonlinear MBC (Raftery and Dean, 2006). Before implementing the SSC, we carried out two pre-processing steps. The first pre-processing step consisted of finding the optimal predictors for the clusters by applying a Gaussian mixture model (GMM) variable selection function (forward–backward) for MBC (Raftery and Dean, 2006). The GMM variable selection process uses the expectation maximization (EM) algorithm to determine the maximum likelihood estimate for GMM (Raftery and Dean, 2006). The optimal variables are then selected using the Bayesian information criterion (BIC). The list of potential variables included RH and T (the variable DP was excluded in this process because of multicollinearity with RH and T). The second pre-processing step was to determine the optimal number of clusters. For this, we used a combination of 26 clustering methods via the NbClust R package (Boehmke and Greenwell, 2019; Charrad et al., 2014). Knowing the optimal variable predictors and the optimal number of clusters, we initiated the unsupervised portion of our SSC using the K-means clustering algorithm. 
K-means, one of the most commonly employed clustering methods, is an unsupervised machine learning partitioning distance-based algorithm that computes the total within-cluster variation as the sum of squared (SS) Euclidean distances between the centroid of a cluster Ck and an observation xi based on the Hartigan–Wong algorithm (Hartigan and Wong, 1979; Yuan and Yang, 2019). Last, we applied a supervised clustering process built upon the results obtained for the unsupervised clustering approach. The supervised process allowed for distribution of the dataset within well-defined subsets. For each subset of the dataset associated with a cluster, an MLR was developed, defining a nonlinear MBC (Eq. 6):

where Ck is the number k of clusters regrouping xi observations for each p explanatory variable.
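In generic notation, the two stages of the approach can be sketched as follows. The symbols $\mu_k$ (centroid of cluster $C_k$), $K$ (number of clusters), and $\beta_{j,k}$ (cluster-specific regression coefficients) are introduced here for illustration; this is an assumed form consistent with the description, not a reproduction of Eq. (6).

```latex
% Unsupervised stage: K-means minimizes the total within-cluster sum of squares
\begin{equation*}
W = \sum_{k=1}^{K} \sum_{x_i \in C_k} \lVert x_i - \mu_k \rVert^2
\end{equation*}

% Supervised stage: a separate MLR is fitted to the observations of each cluster
\begin{equation*}
C_{\mathrm{AQS},i} = \beta_{0,k} + \sum_{j=1}^{p} \beta_{j,k}\, x_{ij} + \varepsilon_i ,
\qquad x_i \in C_k
\end{equation*}
```

The overall correction is piecewise linear: each observation is corrected with the coefficients of the cluster to which it belongs, which is how the approach can represent a nonlinear RH effect.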

2.4.4 Model validation

For each of the evaluated models, the coefficient of determination, R2, was calculated to understand how well the regression model performs with the selected predictors. The predictive performance of each model was evaluated by estimating the root mean square error (RMSE) and mean absolute error (MAE). The RMSE is the standard deviation of the prediction errors. The MAE measures the mean absolute difference between the predicted values and the actual values in a dataset. Standard deviation (SD), R2, and RMSE are EPA's recommended performance metrics to evaluate a sensor's precision, linearity, and uncertainty, respectively (Duvall et al., 2021). We compared EPA's target value for SD, which refers to collocated identical sensors, with the estimated mean deviation or MAE for each paired observation of CAQS and CPA.
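The three metrics can be written out in a few lines of Python. This sketch assumes R2 is computed as one minus the ratio of residual to total sum of squares, which equals the squared Pearson correlation for an ordinary least-squares fit evaluated on its own training data; function names are illustrative.

```python
import math


def rmse(observed, predicted):
    """Root mean square error between reference and predicted concentrations."""
    n = len(observed)
    return math.sqrt(sum((o - p) ** 2 for o, p in zip(observed, predicted)) / n)


def mae(observed, predicted):
    """Mean absolute error between reference and predicted concentrations."""
    n = len(observed)
    return sum(abs(o - p) for o, p in zip(observed, predicted)) / n


def r_squared(observed, predicted):
    """Coefficient of determination, 1 - SS_res / SS_tot (assumed definition)."""
    mean_obs = sum(observed) / len(observed)
    ss_res = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)
    return 1.0 - ss_res / ss_tot
```

Because RMSE squares the errors before averaging, it weights large deviations more heavily than MAE, so reporting both gives a sense of how much of the error comes from a few poorly predicted hours.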

2.4.5 Cross-validation

Building the correction model based on the full dataset could overfit the model (Barkjohn et al., 2021). Therefore, we used leave-one-group-out cross-validation (LOGOCV) methods to evaluate how the model performs for an independent test dataset. LOGOCV involves splitting the dataset into specific or random groups, then predicting each group as testing data with the other groups used for training. We used an automatic LOGOCV, in which a random set of training data was composed to predict PM2.5 concentrations at each iteration. A ratio was defined between the training and test groups, with 25 iterations. Then, we applied a leave-one-state-out cross-validation (LOSOCV) that involves splitting the dataset into specific states to evaluate the performance of the model. In our LOSOCV, every US state was left out successively and used in a validation test, while the remaining states were used to train the model. We used R2, RMSE, and MAE as performance metrics to evaluate the cross-validation results.
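The leave-one-state-out splitting logic can be sketched in Python as below. The record layout (a list of dicts with a hypothetical `state` key) is an assumption, and model fitting and scoring are left to the caller.

```python
from collections import defaultdict


def leave_one_group_out(records, group_key="state"):
    """Yield (held_out_group, train_records, test_records) for each distinct group.

    Each group is held out exactly once as the test set while all remaining
    groups form the training set.
    """
    groups = defaultdict(list)
    for record in records:
        groups[record[group_key]].append(record)
    for held_out in sorted(groups):
        test = groups[held_out]
        train = [r for g, rows in groups.items() if g != held_out for r in rows]
        yield held_out, train, test
```

In use, a model would be fitted on `train` and evaluated on `test` for every fold, and the resulting R2, RMSE, and MAE values summarized across states.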

2.4.6 Sensitivity analysis

Sensitivity analyses were performed to determine how predictions of PM2.5 concentrations would vary under different temporal resolution. The sensitivity analysis applied the models, developed from hourly data at 0.5, 1.0, and 2.0 km buffers, to daily averaged data for the same buffers. We applied a completeness criterion of 90 %, or 21 h, following Barkjohn et al. (2021).
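The daily aggregation with its completeness rule can be sketched as follows in Python. The input layout, a sequence of `(timestamp, value)` pairs with `None` marking missing hours, is an assumption.

```python
from collections import defaultdict
from datetime import datetime


def daily_means(hourly, min_hours=21):
    """Average hourly values by calendar day, keeping days with >= min_hours valid hours.

    hourly: iterable of (datetime, value) pairs; value may be None when missing.
    Returns a dict mapping date -> daily mean.
    """
    by_day = defaultdict(list)
    for timestamp, value in hourly:
        if value is not None:
            by_day[timestamp.date()].append(value)
    return {day: sum(values) / len(values)
            for day, values in by_day.items() if len(values) >= min_hours}
```

The hourly model is then applied to these daily averages of CPA, RH, and T rather than being refitted on daily data.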

After applying all the quality assurance (QA) criteria to the raw datasets, we obtained 159 648 observations (18 PurpleAir sites), 238 047 observations (28 PurpleAir sites), and 394 010 observations (50 PurpleAir sites) for buffers of 0.5, 1.0, and 2.0 km, respectively, all at hourly temporal resolution. The QA process removed about 22 % (Table S1 in the Supplement) of the raw data, with data from three PurpleAir sites completely removed for the 0.5 km radius because RH from the humidity sensors correlated poorly with RH reported by NOAA stations (Fig. S1 in the Supplement). We found that two of these same three PurpleAir sites exhibited poor correlation for temperature as well. Moreover, the slope of the linear regression estimated for each PurpleAir sensor (Fig. 1) shows that RH from these three PurpleAir sites exhibited larger bias metrics. All 18 retained PurpleAir sites presented RH data that strongly correlated with NOAA stations (88 %–96 %), with 16 of them presenting a Pearson correlation R equal to or greater than 90 % (Fig. S1). As reported by recent studies (Barkjohn et al., 2022; Giordano et al., 2021; Magi et al., 2020; Tryner et al., 2020), PurpleAir sensors tend to report drier humidity measurements than ambient conditions. The comparison of our PurpleAir sensors with NOAA stations showed that each of the 18 retained PurpleAir sites reported lower humidity measurements than their corresponding NOAA station. They presented a negative difference in RH varying between 10 % and 20 %, with uncertainty increasing with increased RH (Fig. S2 in the Supplement). In addition to the three PurpleAir sites removed for the 0.5 km radius, one and two additional PurpleAir sites were removed for the 1.0 and 2.0 km buffers, respectively. We did not detect any additional instrument error for temperature. Most of the retained PurpleAir sites had a strong correlation of 95 %–99 % for temperature with NOAA stations.

Summary statistics were explored to describe the main characteristics of our datasets (Figs. 2 and 3). Meteorological parameters for our three buffers (0.5, 1.0, and 2.0 km) exhibit roughly the same distribution (Fig. S3 in the Supplement). Further evaluation of our 0.5 km radius dataset revealed that 63 % of the hourly data for RH are greater than 50 %, with temperatures varying between −17.13 and 38.83 °C. RH for the 0.5 km radius dataset showed some monthly seasonality (Fig. 2b). However, independent of the number of months of data reported by a PurpleAir sensor, the distribution of RH is relatively consistent for individual PurpleAir sites (Fig. 2a). For this same radius, the number of complete months of data per PurpleAir sensor varied from approximately 1 to 29 months, with 11 sensors covering at least 10 months of hourly data (Fig. 2a).

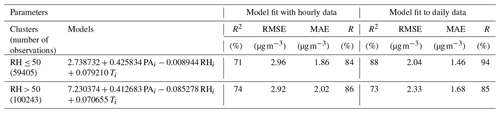

Table 2. Semi-supervised clustering model development (model fit with hourly data) and application of the hourly model to daily data. Temperature is in units of degrees Celsius.

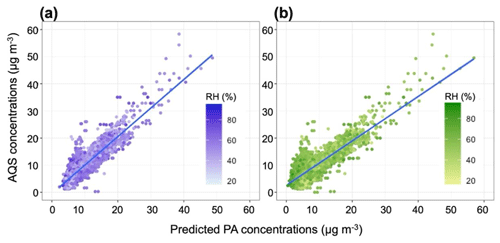

Figure 4. Positive linear correlation between daily AQS and daily predicted PM2.5 concentrations with RH distribution. (a) AQS and predicted PM2.5 concentrations using Model 4 of the MLR process are shown in purple, and (b) AQS and predicted PM2.5 concentrations using the Barkjohn model are shown in green.

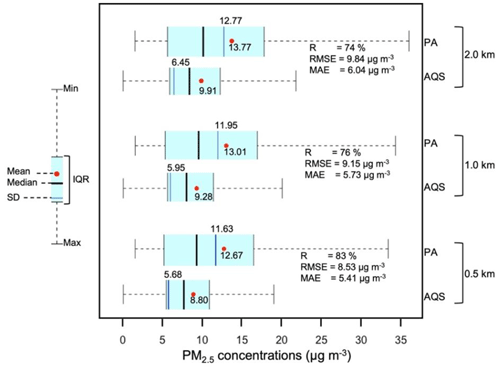

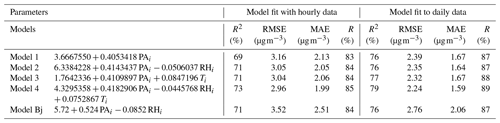

For the PM2.5 concentration data, Fig. 3 displays the mean and SD for the CAQS and CPA data for all three analyzed buffers. The Pearson correlation (R), R2, RMSE, and MAE between CAQS and CPA before fitting any model were also estimated for each radius (Fig. 3). All metrics, including R2 and RMSE, exceeded the target values (R2 ≥ 70 % and RMSE ≤ 7 µg m−3) recommended by the EPA (Duvall et al., 2021). Raw CPA presented greater magnitude and variability than CAQS (Fig. 3). The performance metrics (Tables 1 and 2, Tables S2–S5 in the Supplement) indicated less error with successively smaller buffer size, which suggests that model fit improves with decreased distance between the AQS monitors and PurpleAir sensors. The distance factor might be attributed to spatial variability between AQS monitors and PurpleAir sensors and the effect of various potential PM sources around the air monitors. Therefore, we present only the results for the 0.5 km buffer analysis. Tables S2–S5 contain the results for the 1.0 and 2.0 km buffers, respectively. Wallace et al. (2021) and Bi et al. (2021) also used a 0.5 km buffer around the AQS monitors in their low-cost sensor data calibration studies.

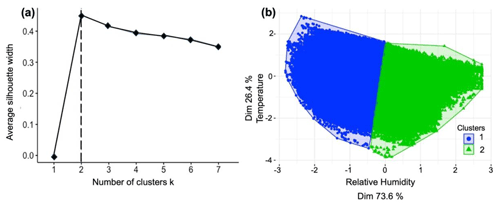

Figure 5. Unsupervised clustering results: (a) number of clusters k using the silhouette algorithm. (b) Clustering subsets based on RH and T showing that RH has a greater influence in the process. The axis values correspond to covariance, and the dimensionality corresponds to how much of each variable participated in the clustering process.

3.1 MLR bias correction model

The bias correction models, including the Barkjohn model (2021), and their performance metrics are presented in Table 1. All four MLR-fitted models exhibited an average concentration of 8.80 µg m−3, with an SD varying between 4.71 and 4.84 µg m−3. The Barkjohn model had a mean of 7.67 µg m−3 and an SD of 6.08 µg m−3. RMSE and MAE, which summarize the error in hourly PM2.5 averages, exhibited relatively low values for the four fitted models when we consider the average CAQS in the dataset and its SD and the EPA's target value (≤ 7 µg m−3) for RMSE. Our dataset illustrates improved predictive performance for our four MLR-fitted models compared with the Barkjohn model (Table 1). The Barkjohn model presented a higher R2, as a measure of the goodness of fit, than Model 1; however, Model 1 is improved with respect to all error metrics. The Barkjohn model resulted in a higher MAE than the four models developed for this study. The best model fit was observed for Model 4, incorporating CPA, T, and RH, with substantially better prediction performance metrics compared with the other models (Table 1). The model would, however, be further improved with use of newer PurpleAir sensors because, over time, the quality of the sensors degrades. This is particularly true in the hot and humid climate zone (deSouza et al., 2023). Similarly, the presence of Teledyne T640 instruments among our AQS monitors may have affected the performance of our models since a positive bias of approximately 20 % has been reported with T640 instruments compared with other FEM or FRM monitors (https://cleanairact.org/wp-content/uploads/2024/03/AAPCA-Comments-Proposed-Update-of-T640-T640X-PM2.5-Data-FINAL-3.15.24.pdf, last access: 11 September 2024). Additionally, a study conducted by Searle et al. (2023) found that 12.9 % of the sensors deployed by PurpleAir between June 2021 and May 2023 reported a negative bias of approximately 3 µg m−3. 
These PurpleAir sensors, specifically deployed between June 2021 and January 2022 and between March and May 2023, used an alternative Plantower PMS5003 that affected the reported particle size distributions and concentrations (Searle et al., 2023). Based on the technique developed by Searle et al. (2023) to identify PMS5003 sensors, we estimated that only one of our sensors (sensor ID: 116559), representing 0.62 % of our data, fell into this category. This may have a slight effect on the performance of our models. Furthermore, unlike our fitted models, Model Bj applied to our dataset displayed some negative values. Model 2 was similar in structure to the selected model from Barkjohn et al. (2021), with CPA and RH as predictors. All predictors for every model were statistically significant. Validation testing using LOGOCV (Table S6 in the Supplement) presented nearly identical results to models using the entire dataset, building confidence in the models. The LOSOCV resulted in an RMSE and an MAE of 3.32 and 2.29 µg m−3, respectively, for Model 4. These values were higher than those for the LOGOCV process, which is not surprising considering the variability between states.
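The negative values produced by Model Bj can be illustrated with a short Python sketch. It uses the published US-wide correction coefficients (Barkjohn et al., 2021) with RH in percent; the input values in the usage note are illustrative and not taken from the study dataset.

```python
def barkjohn_correction(pa_cf1, rh_pct):
    """US-wide PurpleAir correction (Barkjohn et al., 2021).

    pa_cf1: mean of Channels A and B, cf_1 PM2.5 (ug m-3).
    rh_pct: relative humidity reported by the PurpleAir sensor (%).
    """
    return 0.524 * pa_cf1 - 0.0862 * rh_pct + 5.75
```

Because the RH term is subtracted regardless of concentration, a low raw reading at high humidity can push the corrected value below zero: for example, `barkjohn_correction(1.5, 90)` evaluates to about −1.2 µg m−3, whereas a moderate reading such as `barkjohn_correction(20, 60)` gives about 11.1 µg m−3.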

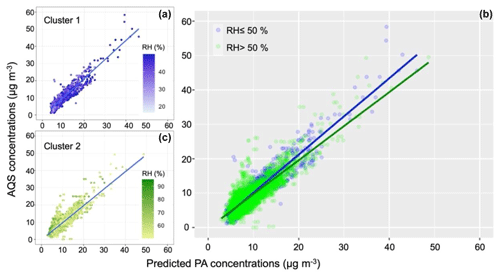

Figure 6. Correlation between daily AQS and daily predicted PM2.5 concentrations using the SSC model. Each cluster is presented separately on the left (a, c), and both clusters are shown on the right (b).

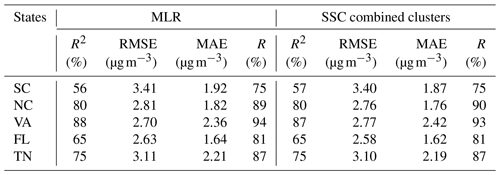

Table 4. Application of MLR Model 4 and the SSC model to individual states. The result for SSC combined clusters is the result obtained after applying each cluster model to the hourly data and then combining the predictions.

Our findings align with some previous low-cost sensor data calibration work (Barkjohn et al., 2021; Magi et al., 2020; Zheng et al., 2018), where relatively simple calibration models provided reasonable bias correction. Zheng et al. (2018), evaluating the performance of Plantower PMS3003, which is similar to the PM2.5 sensor used in PurpleAir, found an R2 value of 66 % for a 1 h averaging period after applying an MLR calibration equation to compare three Plantower sensors against each other and a collocated reference monitor over a period of 30 d. A study conducted by Magi et al. (2020), involving a 16-month PurpleAir PM2.5 data collection in an urban setting in Charlotte, North Carolina, resulted in R2 of 60 % for an MLR including CPA, RH, and T. Barkjohn et al. (2021) estimated an RMSE of 3 µg m−3 (no decimal specified) when fitting a model with RH for a mean concentration of 9 µg m−3 for FRM or FEM monitors. Moreover, the negative coefficient obtained for RH for Model 2 and Model 4 is not surprising considering that high RH can lead to hygroscopic growth of the particles and therefore cause uncertainties and overestimation in PurpleAir PM2.5 concentration readings (Bi et al., 2021; Wallace et al., 2021). The model developed by Barkjohn et al. (2021), as well as the MLR model developed by Raheja et al. (2023) using data in Accra, Ghana, had a negative coefficient for RH.
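The role of the negative RH coefficient can be illustrated with the US-wide correction itself. The sketch below uses the coefficients published by Barkjohn et al. (2021) (slope 0.524 on the PurpleAir cf = 1 reading, −0.0862 per % RH, intercept 5.75 µg m−3); these are not restated in this section and should be checked against that paper before use, and the function name is hypothetical. The example also suggests why a linear model of this form can return negative concentrations when the sensor reading is low and RH is high.

```python
def barkjohn_correction(pa_cf1, rh):
    """US-wide PurpleAir correction reported by Barkjohn et al. (2021).

    pa_cf1: PurpleAir PM2.5 (cf = 1, mean of channels A and B), ug m-3
    rh:     relative humidity reported by the sensor, %
    """
    return 0.524 * pa_cf1 - 0.0862 * rh + 5.75


# Same raw reading, increasing humidity: the corrected value decreases.
dry = barkjohn_correction(20.0, 30.0)      # about 13.64 ug m-3
humid = barkjohn_correction(20.0, 90.0)    # about 8.47 ug m-3

# Very clean, very humid air: the linear form goes below zero.
clean_humid = barkjohn_correction(1.0, 95.0)   # about -1.92 ug m-3
```

The downward adjustment grows linearly with RH, which is the behavior the negative coefficient encodes; it does not by itself represent the nonlinear hygroscopic growth discussed later.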

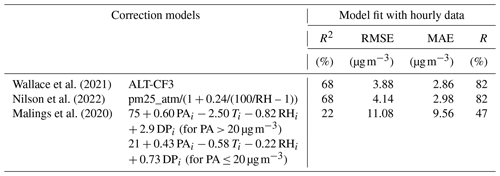

Following removal of data points that did not fit the QA criteria, the 0.5 km daily dataset included 5666 observations for the same 18 sensors when applying the hourly model to daily data. These produced a substantial improvement in the performance metrics compared with those of the hourly models (Table 1). Model 4 presented better performance metrics compared to the other models (Table 1). Figure 4 shows the correlation between the predicted CPA and CAQS for Model 4 and Model Bj along with the distribution of RH. The model developed by Barkjohn et al. (2021) used only daily averaged data; thus, it was directly comparable with our application of the model to daily data. An aggregate of data points can be seen on the left-hand side of the correlation plots (Fig. 4) to deviate from the model fit line. These data probably influenced the performance metrics of the models. An evaluation of Model Bj applied to our warm–humid climate zone daily PurpleAir datasets revealed substantially higher error metrics than the other models (Table 1).

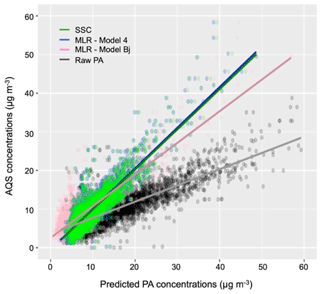

3.2 SSC model predictions

The SSC model included the same predictors as Model 4 (CPA, RH, and T), the best MLR model obtained. The GMM process, which discerns complex relationships between variables, identified RH and T as the optimal predictors to use in the clustering process. Among the 26 indices evaluated, 8 proposed k=2 as the optimal number of clusters (Table S9 in the Supplement). Thus, we set k=2 clusters for the unsupervised aspect of our SSC process. Figure 5a shows the k-cluster result for the silhouette algorithm, which is based on two factors: cohesion (similarity between the object and its own cluster) and separation (comparison with other clusters) (Yuan and Yang, 2019). The unsupervised clustering suggested a distribution of the dataset into two well-defined clusters based on the RH predictor (Fig. 5b). For T, the same range of values was found within each defined cluster. RH was therefore the most important variable determining the clustering subdivision (Fig. 5b); accordingly, we considered only RH for the cluster subdivision and then applied the supervised phase of the SSC process to adjust the random subdivision of the clusters and eliminate overlaps. The two clusters were RH ≤ 50 % (Cluster 1) and RH > 50 % (Cluster 2) (Table 2). This result aligns with Wallace et al. (2021), who showed that the nonlinear effect between PM2.5 and RH emerges around RH of 50 %, similar to our cluster division (Fig. S4 in the Supplement).
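To make the cohesion and separation idea concrete, the following sketch computes a mean silhouette width for one-dimensional RH values split into two groups. It is a simplified stand-in for the index-based selection of k described above, not the procedure used in this study; the RH values and the function name are invented for illustration.

```python
def mean_silhouette(values, labels):
    """Mean silhouette width for 1-D data with at least two clusters.

    For each point, a = mean distance to the other members of its own
    cluster (cohesion) and b = smallest mean distance to the members of
    any other cluster (separation); the width is (b - a) / max(a, b).
    """
    clusters = {}
    for v, lab in zip(values, labels):
        clusters.setdefault(lab, []).append(v)

    widths = []
    for v, lab in zip(values, labels):
        own = clusters[lab]
        if len(own) == 1:
            widths.append(0.0)  # convention for singleton clusters
            continue
        # Distance to itself is zero, so divide by the number of *other* members.
        a = sum(abs(v - u) for u in own) / (len(own) - 1)
        b = min(
            sum(abs(v - u) for u in members) / len(members)
            for other, members in clusters.items()
            if other != lab
        )
        denom = max(a, b)
        widths.append((b - a) / denom if denom > 0 else 0.0)
    return sum(widths) / len(widths)


# Made-up RH values (%), split either at 50 % or arbitrarily.
rh = [20.0, 25.0, 30.0, 80.0, 85.0, 90.0]
by_threshold = [1 if x > 50.0 else 0 for x in rh]
alternating = [0, 1, 0, 1, 0, 1]

good = mean_silhouette(rh, by_threshold)
poor = mean_silhouette(rh, alternating)
```

A partition that follows the RH gap scores close to 1, whereas an arbitrary partition of the same values scores much lower, which is the kind of contrast a silhouette plot is used to reveal.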

The SSC approach provides improved model fits compared with the MLR models for our hourly data. Table 2 presents the modeling results of the RH-based semi-supervised clustering process. The difference between the two models resides primarily in their intercepts and their RH coefficients (Table 2). The RH factor is 10 times greater in Cluster 2 than Cluster 1, and the intercept of Cluster 2 is about 5.5 µg m−3 greater than Cluster 1. All predictors were statistically significant. Models from both clusters are within the range of the EPA's target values for linearity and error performance metrics (Table 2). Except for MAE, which is much lower for Cluster 1, the Cluster 2 model presented better performance metrics compared with the Cluster 1 model (Table 2). Compared with Model 4 from the MLR models, results from Cluster 1 showed equal RMSE and a very low MAE, while estimated metrics from Cluster 2 are greatly improved with the exception of MAE (Table 2). The combined predicted PurpleAir concentrations from the two SSC clusters resulted in an RMSE of 2.94 µg m−3 and an MAE of 1.96 µg m−3. Similar to the MLR validation testing, LOGOCV for SSC (Table S7 in the Supplement) produced similar metrics compared with the models using the entire dataset. LOSOCV for SSC showed improved performance on average compared with the same process for Model 4 (Table S8 in the Supplement), with every state exhibiting lower error metrics than the EPA's target value (≤ 7 µg m−3) for RMSE. Thus, the cluster-based models may be valid for any state in the study area.
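Once the two cluster-specific regressions are available, applying the SSC correction amounts to routing each record by its RH and then pooling the predictions. The sketch below shows that routing only. The coefficient values are placeholders invented for illustration (the fitted values are those reported in Table 2), chosen merely to mimic the qualitative pattern described above, and all names are hypothetical.

```python
RH_THRESHOLD = 50.0  # cluster boundary from the text: RH <= 50 % vs RH > 50 %

# Placeholder coefficients (intercept, CPA, RH, T); NOT the values in Table 2.
CLUSTER_1 = {"b0": 3.0, "cpa": 0.40, "rh": -0.005, "t": 0.02}   # RH <= 50 %
CLUSTER_2 = {"b0": 8.5, "cpa": 0.40, "rh": -0.050, "t": 0.02}   # RH > 50 %


def ssc_predict(cpa, rh, t):
    """Corrected PM2.5 from the linear model of the cluster matching RH."""
    c = CLUSTER_1 if rh <= RH_THRESHOLD else CLUSTER_2
    return c["b0"] + c["cpa"] * cpa + c["rh"] * rh + c["t"] * t


def ssc_predict_all(records):
    """Apply the cluster models record by record and pool the predictions."""
    return [ssc_predict(r["cpa"], r["rh"], r["t"]) for r in records]


records = [
    {"cpa": 15.0, "rh": 40.0, "t": 20.0},   # handled by Cluster 1
    {"cpa": 15.0, "rh": 80.0, "t": 20.0},   # handled by Cluster 2
]
pooled = ssc_predict_all(records)
```

Error metrics for the combined model would then be computed on the pooled predictions against the matching reference values, rather than per cluster.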

Previous studies using an MBC to calibrate low-cost sensors (McFarlane et al., 2021; Raheja et al., 2023) are consistent with our SSC results: their GMR-based models yielded lower MAEs and RMSEs than their MLR models, indicating that an MBC is superior to an MLR approach. McFarlane et al. (2021) found an MAE for their GMR model that was 0.5 µg m−3 lower than the 2.2 µg m−3 obtained with their MLR. Similarly, Raheja et al. (2023), for their GMR model using PurpleAir sensors, found an MAE of 1.93 µg m−3 and an RMSE of 2.58 µg m−3, which were 0.17 and 0.30 µg m−3 lower than those of their MLR model, respectively. However, because of transferability constraints with GMR-based models, Raheja et al. (2023) recommended using their MLR model for future applications, although they obtained an improved model using GMR.

We compared our results with three nonlinear models that were previously tested for PurpleAir sensors. Two of these models were not fit with data from our warm–humid climate zone study area. Malings et al. (2020) developed a two-piecewise linear model based on a threshold of 20 µg m−3 PM2.5 concentrations using 11 PurpleAir sensors at two sites in Pittsburgh. The Malings et al. (2020) model includes DP as one of the predictors (Table 3), which violates the assumption of predictor variable independence in the correction model since a high correlation was found between DP and T. Performance metrics for the Malings et al. (2020) model were inferior to those for our models and for the models developed by other authors (Table 3). Wallace et al. (2021, 2022) estimated correction factors based on the ratio of the mean AQS to the mean PurpleAir for all pairs of PurpleAir–AQS sites from California (Wallace et al., 2021) and from California, Washington, and Oregon (Wallace et al., 2022) in separate models. Using the correction factor of 3 (ALT-CF3) recommended in Wallace et al. (2021), we calculated higher MAE and RMSE (Table 3) than for any of our models and for the Barkjohn model. Similarly, the correction model developed by Nilson et al. (2022) for the cf = Atm data (same type of data used in their model) yielded similar R2 and even higher RMSE and MAE than found with the ALT-CF3 model (Table S9). Nilson et al. (2022) used 35 PurpleAir–FEM sites in the US and Canada, including 2 sites in our study area.

As for the MLR, the SSC hourly model was applied to the daily average dataset. Figure 6 shows the nonlinearity of our dataset, with the slope varying for each cluster for the correlation between CAQS and CPA. The same aggregate of data points seen in Fig. 4 is also observed in the SSC models but only in Cluster 2 (Fig. 6). This may have affected the accuracy of the model (Table 1). Applying the hourly models to daily data resulted in substantial improvement, with lower uncertainties in each cluster of the SSC model compared with the hourly dataset (Table 2). Compared with the fit for Model 4 from the MLR (Table 1) to daily data, we observed that Cluster 1 presented better performance metrics than Cluster 2 (Tables 1 and 2). Compared with Model Bj applied to our daily dataset in Table 1, the daily SSC model displays improved results (lower RMSE and MAE) for each cluster.

To further assess the model performance in subgroups, Model 4 from MLR and the SSC model were applied to daily data from five states of the warm–humid climate zones (Table 4). For SSC, both models (RH ≤ 50 % and RH > 50 %) presented good results for all the metrics compared with the hourly data-fitted models and their application to daily data. Except for VA, where Model 4 produced lower error metric values, the SSC model outperformed MLR for all the states.

3.3 Final model selection

Model 4 from the MLR models and the SSC model align with previous studies, producing low error and high correlation (R2). After comparing NOAA and PurpleAir meteorological data (Fig. S5), we included in the Supplement (Table S10) these two sets of models (Model 4 from the MLR models and the SSC model) using NOAA meteorological data for RH and T that can be applied when meteorological information from PurpleAir sensors is biased or missing. Figure 7 summarizes the results of our study by presenting the correlation fit for MLR (Model 4 from the MLR models), as well as the combined clusters from SSC, Model Bj, and the raw PurpleAir data together. Tables S11 and S12 in the Supplement provide an evaluation of the performance of the models by air quality index (AQI) categories. Our results showed that applying Model Bj to our hourly dataset reduced the RMSE by 58.73 % relative to the raw data. MLR and the SSC model have lower error and higher correlation than Model Bj. A decrease of 15.91 % was obtained for RMSE from Model Bj to Model 4. However, Model 4 PM2.5 concentrations had a higher average mean deviation (1.99 µg m−3) from CAQS than PM2.5 concentrations from the SSC model (1.96 µg m−3). Moreover, Model 4 PM2.5 concentrations from the MLR models tend to be slightly higher than PM2.5 concentrations from the SSC model at high RH and slightly lower at lower RH.
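The percentage improvements quoted here are relative reductions in an error metric. As a small worked example using only the two mean deviations given above (1.99 µg m−3 for Model 4 and 1.96 µg m−3 for the SSC model), the sketch below computes such a reduction; the function name is hypothetical.

```python
def percent_reduction(reference, improved):
    """Relative decrease of an error metric, in percent of the reference."""
    return 100.0 * (reference - improved) / reference


# Mean deviation from CAQS: Model 4 (1.99 ug m-3) versus SSC (1.96 ug m-3).
mlr_to_ssc = percent_reduction(1.99, 1.96)   # roughly 1.5 %
```

Applied to the RMSE values in Table 1, the same calculation would presumably reproduce, up to rounding, the decrease reported from Model Bj to Model 4.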

In conclusion, Model 4 from the MLR and the SSC model improved the error performance metrics by 16 %–23 % compared with the model developed by Barkjohn et al. (2021). The SSC model presented slightly better results than the overall MLR, suggesting that a clustering approach might be more accurate in areas with high humidity conditions to capture the nonlinearity associated with hygroscopic growth of particles in such conditions. Therefore, the SSC model is recommended for bias correction for the southeastern United States. However, Model 4 might be an acceptable alternative for its parsimony. Applying these models to PM2.5 PurpleAir concentrations collected in high-humidity areas will help to inform communities with a high-quality estimation of their exposure. These models might also benefit communities in high-humidity regions outside of the US. The next steps in model development may include evaluation of the transferability of these models to other humid locations in the world.

The processed datasets and programming codes written to pre-process the PurpleAir and AQS data, as well as to perform statistical analyses and visualizations, can be found at https://doi.org/10.5281/zenodo.14109565 (MartineMathieu, 2024). The hourly and daily predicted concentrations are also accessible via the same link. All raw data can be provided by the corresponding author upon request.

The supplement related to this article is available online at: https://doi.org/10.5194/amt-17-6735-2024-supplement.

MEMC, APG, and JRB conceptualized the work and developed the methods. MEMC curated the data, completed the formal analysis, and created figure visualizations. MEMC and CG wrote the original draft. MEMC, CG, APG, and JRB reviewed and edited the manuscript. JRB acquired funding.

The contact author has declared that none of the authors has any competing interests.

Publisher's note: Copernicus Publications remains neutral with regard to jurisdictional claims made in the text, published maps, institutional affiliations, or any other geographical representation in this paper. While Copernicus Publications makes every effort to include appropriate place names, the final responsibility lies with the authors.

We thank the PurpleAir team for their support in obtaining PM2.5 concentrations and meteorological PurpleAir data.

This research has been supported by the National Institute of Environmental Health Sciences (grant nos. P42 ES013638 and P30 ES025128).

This paper was edited by Jessie Creamean and reviewed by two anonymous referees.

Antonopoulos, C., Gilbride, T., Margiotta, E., and Kaltreider, C.: Guide to Determining Climate Zone by County: Building America and IECC 2021 Updates, Richland, WA (United States), https://doi.org/10.2172/1893981, 2022.

API PurpleAir: PurpleAir, Inc., PurpleAir, https://www.api.purpleair.com (last access: 23 July 2023).

AQ-SPEC: Field Evaluation Purple Air PM Sensor, http://www.aqmd.gov/docs/default-source/aq-spec/field-evaluations/purpleair---field-evaluation.pdf (last access: 20 February 2024), 2016a.

AQ-SPEC: by: Polidori, A., Papapostolou, V., and Zhang, H.: Laboratory Evaluation of Low-Cost Air Quality Sensors: Laboratory Setup and Testing Protocol, South Coast Air Quality Management District: Diamond Bar, CA, USA, 2016b.

Ardon-Dryer, K., Dryer, Y., Williams, J. N., and Moghimi, N.: Measurements of PM2.5 with PurpleAir under atmospheric conditions, Atmos. Meas. Tech., 13, 5441–5458, https://doi.org/10.5194/amt-13-5441-2020, 2020.

Baechler, M. C., Gilbride, T. L., Cole, P. C., Hefty, M. G., and Ruiz, K.: Guide to Determining Climate Regions by County, Prepared for the U.S. Department of Energy under Contract DE-AC05-76RLO 1830, Pacific Northwest National Laboratory & Oak Ridge National Laboratory, TN, 2015.

Barkjohn, K. K., Gantt, B., and Clements, A. L.: Development and application of a United States-wide correction for PM2.5 data collected with the PurpleAir sensor, Atmos. Meas. Tech., 14, 4617–4637, https://doi.org/10.5194/amt-14-4617-2021, 2021.

Barkjohn, K. K., Holder, A. L., Frederick, S. G., and Clements, A. L.: Correction and Accuracy of PurpleAir PM2.5 Measurements for Extreme Wildfire Smoke, Sensors, 22, 9669, https://doi.org/10.3390/s22249669, 2022.

Bi, J., Wildani, A., Chang, H. H., and Liu, Y.: Incorporating Low-Cost Sensor Measurements into High-Resolution PM2.5 Modeling at a Large Spatial Scale, Environ. Sci. Technol., 54, 2152–2162, https://doi.org/10.1021/acs.est.9b06046, 2020.

Bi, J., Wallace, L. A., Sarnat, J. A., and Liu, Y.: Characterizing outdoor infiltration and indoor contribution of PM2.5 with citizen-based low-cost monitoring data, Environ. Pollut., 276, 116763, https://doi.org/10.1016/J.ENVPOL.2021.116763, 2021.

Boehmke, B. and Greenwell, B.: Hands-On Machine Learning with R, Chapman and Hall/CRC, New York, https://doi.org/10.1201/9780367816377, 2019.

Brook, R. D., Rajagopalan, S., Pope, C. A., Brook, J. R., Bhatnagar, A., Diez-Roux, A. V., Holguin, F., Hong, Y., Luepker, R. V., Mittleman, M. A., Peters, A., Siscovick, D., Smith, S. C., Whitsel, L., and Kaufman, J. D.: Particulate matter air pollution and cardiovascular disease: An update to the scientific statement from the American Heart Association, Circulation, 121, 2331–2378, https://doi.org/10.1161/CIR.0b013e3181dbece1, 2010.

Carlton, A. G., Pye, H. O. T., Baker, K. R., and Hennigan, C. J.: Additional Benefits of Federal Air-Quality Rules: Model Estimates of Controllable Biogenic Secondary Organic Aerosol, Environ. Sci. Technol., 52, 9254–9265, https://doi.org/10.1021/acs.est.8b01869, 2018.

Carrico, C. M., Petters, M. D., Kreidenweis, S. M., Sullivan, A. P., McMeeking, G. R., Levin, E. J. T., Engling, G., Malm, W. C., and Collett Jr., J. L.: Water uptake and chemical composition of fresh aerosols generated in open burning of biomass, Atmos. Chem. Phys., 10, 5165–5178, https://doi.org/10.5194/acp-10-5165-2010, 2010.

Carslaw, D.: worldmet: Import Surface Meteorological Data from NOAA Integrated Surface Database (ISD), R package licensed under the GNU General Public License, Repository: CRAN, https://davidcarslaw.github.io/worldmet/index.html (last access: 11 September 2024), 2023.

Charrad, M., Ghazzali, N., Boiteau, V., and Niknafs, A.: Nbclust: An R package for determining the relevant number of clusters in a data set, J. Stat. Softw., 61, 54–55, https://doi.org/10.18637/jss.v061.i06, 2014.

Chen, L., Zhang, F., Zhang, D., Wang, X., Song, W., Liu, J., Ren, J., Jiang, S., Li, X., and Li, Z.: Measurement report: Hygroscopic growth of ambient fine particles measured at five sites in China, Atmos. Chem. Phys., 22, 6773–6786, https://doi.org/10.5194/acp-22-6773-2022, 2022.

Chen, L. J., Ho, Y. H., Lee, H. C., Wu, H. C., Liu, H. M., Hsieh, H. H., Huang, Y. Te, and Lung, S. C. C.: An Open Framework for Participatory PM2.5 Monitoring in Smart Cities, IEEE Access, 5, 14441–14454, https://doi.org/10.1109/ACCESS.2017.2723919, 2017.

Chen, R., Hu, B., Liu, Y., Xu, J., Yang, G., Xu, D., and Chen, C.: Beyond PM2.5: The role of ultrafine particles on adverse health effects of air pollution, BBA Gen. Subjects, 1860, 2844–2855, https://doi.org/10.1016/j.bbagen.2016.03.019, 2016.

Cohen, A. J., Brauer, M., Burnett, R., Anderson, H. R., Frostad, J., Estep, K., Balakrishnan, K., Brunekreef, B., Dandona, L., Dandona, R., Feigin, V., Freedman, G., Hubbell, B., Jobling, A., Kan, H., Knibbs, L., Liu, Y., Martin, R., Morawska, L., Pope, C. A., Shin, H., Straif, K., Shaddick, G., Thomas, M., van Dingenen, R., van Donkelaar, A., Vos, T., Murray, C. J. L., and Forouzanfar, M. H.: Estimates and 25-year trends of the global burden of disease attributable to ambient air pollution: an analysis of data from the Global Burden of Diseases Study 2015, Lancet, 389, 1907–1918, https://doi.org/10.1016/S0140-6736(17)30505-6, 2017.

deSouza, P., Barkjohn, K., Clements, A., Lee, J., Kahn, R., Crawford, B., and Kinney, P.: An analysis of degradation in low-cost particulate matter sensors, Environ. Sci. Atmos., 3, 521–536, https://doi.org/10.1039/d2ea00142j, 2023.

Duvall, R. M., Clements, A. L., Hagler, G., Kamal, A., Kilaru, V., Goodman, L., Frederick, S., Barkjohn, K. K., VonWald, I., Greene, D., and Dye, T.: Performance Testing Protocols, Metrics, and Target Values for Fine Particulate Matter Air Sensors: Use in Ambient, Outdoor, Fixed Site, Non-Regulatory Supplemental and Informational Monitoring Applications, U.S. EPA Office of Research and Development, Washington, DC, EPA/600/R-20/280, 79 pp., https://cfpub.epa.gov/si/si_public_record_Report.cfm?dirEntryId=350785&Lab=CEMM (last access: 20 February 2024), 2021.

Giordano, M. R., Malings, C., Pandis, S. N., Presto, A. A., McNeill, V. F., Westervelt, D. M., Beekmann, M., and Subramanian, R.: From low-cost sensors to high-quality data: A summary of challenges and best practices for effectively calibrating low-cost particulate matter mass sensors, J. Aerosol Sci., 158, 105833, https://doi.org/10.1016/j.jaerosci.2021.105833, 2021.

Hagan, D. H. and Kroll, J. H.: Assessing the accuracy of low-cost optical particle sensors using a physics-based approach, Atmos. Meas. Tech., 13, 6343–6355, https://doi.org/10.5194/amt-13-6343-2020, 2020.

Hartigan, J. A. and Wong, M. A.: Algorithm AS 136: A K-Means Clustering Algorithm, Appl. Stat., 28, 100–108, https://doi.org/10.2307/2346830, 1979.

He, M., Kuerbanjiang, N., and Dhaniyala, S.: Performance characteristics of the low-cost Plantower PMS optical sensor, Aerosol Sci. Tech., 54, 232–241, https://doi.org/10.1080/02786826.2019.1696015, 2020.

Health Effects Institute: State of Global Air 2020: a special report on global exposure to air pollution and its health impacts. Special Report, Health Effects Institute, Boston, MA, ISSN 2578-6873, 2020.

Healy, R. M., Evans, G. J., Murphy, M., Jurányi, Z., Tritscher, T., Laborde, M., Weingartner, E., Gysel, M., Poulain, L., Kamilli, K. A., Wiedensohler, A., O'Connor, I. P., McGillicuddy, E., Sodeau, J. R., and Wenger, J. C.: Predicting hygroscopic growth using single particle chemical composition estimates, J. Geophys. Res., 119, 9567–9577, https://doi.org/10.1002/2014JD021888, 2014.

Holder, A. L., Mebust, A. K., Maghran, L. A., McGown, M. R., Stewart, K. E., Vallano, D. M., Elleman, R. A., and Baker, K. R.: Field evaluation of low-cost particulate matter sensors for measuring wildfire smoke, Sensors (Switzerland), 20, 4796, https://doi.org/10.3390/s20174796, 2020.

International Energy Conservation Code: Chapter 3, General requirements, International Energy Conservation Code (IECC), https://codes.iccsafe.org/content/IECC2021P2/chapter-3-re-general-requirements (last access: 18 December 2023), 2021.

Jaffe, D. A., Miller, C., Thompson, K., Finley, B., Nelson, M., Ouimette, J., and Andrews, E.: An evaluation of the U.S. EPA's correction equation for PurpleAir sensor data in smoke, dust, and wintertime urban pollution events, Atmos. Meas. Tech., 16, 1311–1322, https://doi.org/10.5194/amt-16-1311-2023, 2023.

Jamriska, M., Morawska, L., and Mengersen, K.: The effect of temperature and humidity on size segregated traffic exhaust particle emissions, Atmos. Environ., 42, 2369–2382, https://doi.org/10.1016/j.atmosenv.2007.12.038, 2008.

Jayaratne, R., Liu, X., Thai, P., Dunbabin, M., and Morawska, L.: The influence of humidity on the performance of a low-cost air particle mass sensor and the effect of atmospheric fog, Atmos. Meas. Tech., 11, 4883–4890, https://doi.org/10.5194/amt-11-4883-2018, 2018.

Jiao, W., Hagler, G., Williams, R., Sharpe, R., Brown, R., Garver, D., Judge, R., Caudill, M., Rickard, J., Davis, M., Weinstock, L., Zimmer-Dauphinee, S., and Buckley, K.: Community Air Sensor Network (CAIRSENSE) project: evaluation of low-cost sensor performance in a suburban environment in the southeastern United States, Atmos. Meas. Tech., 9, 5281–5292, https://doi.org/10.5194/amt-9-5281-2016, 2016.

Kelly, K. E., Whitaker, J., Petty, A., Widmer, C., Dybwad, A., Sleeth, D., Martin, R., and Butterfield, A.: Ambient and laboratory evaluation of a low-cost particulate matter sensor, Environ. Pollut., 221, 491–500, https://doi.org/10.1016/j.envpol.2016.12.039, 2017.

Kim, S., Park, S., and Lee, J.: Evaluation of performance of inexpensive laser based PM2.5 sensor monitors for typical indoor and outdoor hotspots of South Korea, Appl. Sci.-Basel, 9, 1947, https://doi.org/10.3390/app9091947, 2019.

Konrad, C. E., Fuhrmann, C. M., Billiot, A., Keim, B. D., Kruk, M. C., Kunkel, K. E., Needham, H., Shafer, M., and Stevens, L.: Climate of the southeast USA: past, present, and future, in: Climate of the southeast United States: Variability, change, impacts, and vulnerability, edited by: Island Press/Center for Resource Economics, Washington, DC, 8–42, https://doi.org/10.5822/978-1-61091-509-0_2, 2013.

Kramer, A. L., Liu, J., Li, L., Connolly, R., Barbato, M., and Zhu, Y.: Environmental justice analysis of wildfire-related PM2.5 exposure using low-cost sensors in California, Sci. Total Environ., 856, 159218, https://doi.org/10.1016/J.SCITOTENV.2022.159218, 2023.

Landrigan, P. J., Fuller, R., Acosta, N. J. R., Adeyi, O., Arnold, R., Basu, N. (Nil), Baldé, A. B., Bertollini, R., Bose-O'Reilly, S., Boufford, J. I., Breysse, P. N., Chiles, T., Mahidol, C., Coll-Seck, A. M., Cropper, M. L., Fobil, J., Fuster, V., Greenstone, M., Haines, A., Hanrahan, D., Hunter, D., Khare, M., Krupnick, A., Lanphear, B., Lohani, B., Martin, K., Mathiasen, K. V., McTeer, M. A., Murray, C. J. L., Ndahimananjara, J. D., Perera, F., Potočnik, J., Preker, A. S., Ramesh, J., Rockström, J., Salinas, C., Samson, L. D., Sandilya, K., Sly, P. D., Smith, K. R., Steiner, A., Stewart, R. B., Suk, W. A., van Schayck, O. C. P., Yadama, G. N., Yumkella, K., and Zhong, M.: The Lancet Commission on pollution and health, Lancet, 391, 462–512, https://doi.org/10.1016/S0140-6736(17)32345-0, 2018.

Lu, T., Liu, Y., Garcia, A., Wang, M., Li, Y., Bravo-villasenor, G., Campos, K., Xu, J., and Han, B.: Leveraging Citizen Science and Low-Cost Sensors to Characterize Air Pollution Exposure of Disadvantaged Communities in Southern California, Int. J. Env. Res. Pub. He., 19, 8777, https://doi.org/10.3390/ijerph19148777, 2022.

Magi, B. I., Cupini, C., Francis, J., Green, M., and Hauser, C.: Evaluation of PM2.5 measured in an urban setting using a low-cost optical particle counter and a Federal Equivalent Method Beta Attenuation Monitor, Aerosol Sci. Tech., 54, 147–159, https://doi.org/10.1080/02786826.2019.1619915, 2020.

Malings, C., Tanzer, R., Hauryliuk, A., Saha, P. K., Robinson, A. L., Presto, A. A., and Subramanian, R.: Fine particle mass monitoring with low-cost sensors: Corrections and long-term performance evaluation, Aerosol Sci. Tech., 54, 160–174, https://doi.org/10.1080/02786826.2019.1623863, 2020.

MartineMathieu: MartineMathieu/PurpleAir-calibration: PurpleAir Bias Correction v1.0.0 (v1.0.0), Zenodo [data set], https://doi.org/10.5281/zenodo.14109566, 2024.

Maugis, C., Celeux, G., and Martin-Magniette, M. L.: Variable selection for clustering with gaussian mixture models, Biometrics, 65, 701–709, https://doi.org/10.1111/j.1541-0420.2008.01160.x, 2009.

McFarlane, C., Raheja, G., Malings, C., Appoh, E. K. E., Hughes, A. F., and Westervelt, D. M.: Application of Gaussian Mixture Regression for the Correction of Low Cost PM2.5 Monitoring Data in Accra, Ghana, ACS Earth Space Chem., 5, 2268–2279, https://doi.org/10.1021/acsearthspacechem.1c00217, 2021.

Nilson, B., Jackson, P. L., Schiller, C. L., and Parsons, M. T.: Development and evaluation of correction models for a low-cost fine particulate matter monitor, Atmos. Meas. Tech., 15, 3315–3328, https://doi.org/10.5194/amt-15-3315-2022, 2022.

Olstrup, H., Johansson, C., Forsberg, B., Tornevi, A., Ekebom, A., and Meister, K.: A multi-pollutant air quality health index (AQHI) based on short-term respiratory effects in Stockholm, Sweden, Int. J. Env. Res. Pub. He., 16, 105, https://doi.org/10.3390/ijerph16010105, 2019.

Patel, M. Y., Vannucci, P. F., Kim, J., Berelson, W. M., and Cohen, R. C.: Towards a hygroscopic growth calibration for low-cost PM2.5 sensors, Atmos. Meas. Tech., 17, 1051–1060, https://doi.org/10.5194/amt-17-1051-2024, 2024.

Pope, C. A. and Dockery, D. W.: Health effects of fine particulate air pollution: Lines that connect, J. Air Waste Manage., 56, 709–742, https://doi.org/10.1080/10473289.2006.10464485, 2006.

Raftery, A. E. and Dean, N.: Variable selection for model-based clustering, J. Am. Stat. Assoc., 101, 168–178, https://doi.org/10.1198/016214506000000113, 2006.

Raheja, G., Nimo, J., Appoh, E. K. E., Essien, B., Sunu, M., Nyante, J., Amegah, M., Quansah, R., Arku, R. E., Penn, S. L., Giordano, M. R., Zheng, Z., Jack, D., Chillrud, S., Amegah, K., Subramanian, R., Pinder, R., Appah-Sampong, E., Tetteh, E. N., Borketey, M. A., Hughes, A. F., and Westervelt, D. M.: Low-Cost Sensor Performance Intercomparison, Correction Factor Development, and 2+ Years of Ambient PM2.5 Monitoring in Accra, Ghana, Environ. Sci. Technol., 57, 10708–10720, https://doi.org/10.1021/acs.est.2c09264, 2023.

Rueda, E. M., Carter, E., L'Orange, C., Quinn, C., and Volckens, J.: Size-Resolved Field Performance of Low-Cost Sensors for Particulate Matter Air Pollution, Environ. Sci. Tech. Let., 10, 247–253, https://doi.org/10.1021/acs.estlett.3c00030, 2023.

Sayahi, T., Butterfield, A., and Kelly, K. E.: Long-term field evaluation of the Plantower PMS low-cost particulate matter sensors, Environ. Pollut., 245, 932–940, https://doi.org/10.1016/j.envpol.2018.11.065, 2019.

Searle, N., Kaur, K., and Kelly, K.: Technical note: Identifying a performance change in the Plantower PMS 5003 particulate matter sensor, J. Aerosol Sci., 174, 106256, https://doi.org/10.1016/j.jaerosci.2023.106256, 2023.

Shi, J. Q. and Choi, T.: Gaussian process regression analysis for functional data, CRC press, Taylor & Francis Group, Boca Raton, FL, https://doi.org/10.1201/b11038, 2011.

Snyder, E. G., Watkins, T. H., Solomon, P. A., Thoma, E. D., Williams, R. W., Hagler, G. S. W., Shelow, D., Hindin, D. A., Kilaru, V. J., and Preuss, P. W.: The changing paradigm of air pollution monitoring, Environ. Sci. Technol., 47, 11369–11377, https://doi.org/10.1021/es4022602, 2013.

Stavroulas, I., Grivas, G., Michalopoulos, P., Liakakou, E., Bougiatioti, A., Kalkavouras, P., Fameli, K. M., Hatzianastassiou, N., Mihalopoulos, N., and Gerasopoulos, E.: Field evaluation of low-cost PM sensors (Purple Air PA-II) Under variable urban air quality conditions, in Greece, Atmosphere-Basel, 11, 926, https://doi.org/10.3390/atmos11090926, 2020.

Tryner, J., L'Orange, C., Mehaffy, J., Miller-Lionberg, D., Hofstetter, J. C., Wilson, A., and Volckens, J.: Laboratory evaluation of low-cost PurpleAir PM monitors and in-field correction using co-located portable filter samplers, Atmos. Environ., 220, 117067, https://doi.org/10.1016/j.atmosenv.2019.117067, 2020.

U.S. EPA: Understanding Air Pollution in the Southeastern United States, U. S. Environmental Protection Agency, https://www.epa.gov/sciencematters/understanding-air-pollution-southeastern-united-states (last access: 27 June 2024), 3 December 2018.

U.S. EPA: List of designated reference and equivalent methods, U. S. Environmental Protection Agency, Research Triangle Park, NC 27711, 2023.

U. S. Environmental Protection Agency: AirNow API, https://www.airnow.gov (last access: 29 August 2023), 2023.

U. S. Environmental Protection Agency: Air Quality System (AQS), https://www.epa.gov/aqs (last access: 29 August 2023), 2023.

Wallace, L., Bi, J., Ott, W. R., Sarnat, J., and Liu, Y.: Calibration of low-cost PurpleAir outdoor monitors using an improved method of calculating PM2.5, Atmos. Environ., 256, 118432, https://doi.org/10.1016/j.atmosenv.2021.118432, 2021.

Wallace, L., Zhao, T., and Klepeis, N. E.: Calibration of PurpleAir PA-I and PA-II Monitors Using Daily Mean PM2.5 Concentrations Measured in California, Washington, and Oregon from 2017 to 2021, Sensors, 22, 4741, https://doi.org/10.3390/s22134741, 2022.

Yuan, C. and Yang, H.: Research on K-Value Selection Method of K-Means Clustering Algorithm, J (Basel), 2, 226–235, https://doi.org/10.3390/j2020016, 2019.

Zheng, T., Bergin, M. H., Johnson, K. K., Tripathi, S. N., Shirodkar, S., Landis, M. S., Sutaria, R., and Carlson, D. E.: Field evaluation of low-cost particulate matter sensors in high- and low-concentration environments, Atmos. Meas. Tech., 11, 4823–4846, https://doi.org/10.5194/amt-11-4823-2018, 2018.

Zusman, M., Schumacher, C. S., Gassett, A. J., Spalt, E. W., Austin, E., Larson, T. V., Carvlin, G., Seto, E., Kaufman, J. D., and Sheppard, L.: Calibration of low-cost particulate matter sensors: Model development for a multi-city epidemiological study, Environ. Int., 134, 105329, https://doi.org/10.1016/j.envint.2019.105329, 2020.